Astronomers discover an oversized black hole population in the star cluster Palomar 5

"The number of black holes is roughly three times larger than expected from the number of stars in the cluster, and it means that more than 20% of the total cluster mass is made up of black holes. They each have a mass of about 20 times the mass of the Sun, and they formed in supernova explosions at the end of the lives of massive stars, when the cluster was still very young," says Prof Mark Gieles, from the Institute of Cosmos Sciences of the University of Barcelona (ICCUB) and lead author of the paper.

Tidal streams are streams of stars that were ejected from disrupting star clusters or dwarf galaxies. In the last few years, nearly thirty thin streams have been discovered in the Milky Way halo. "We do not know how these streams form, but one idea is that they are disrupted star clusters. However, none of the recently discovered streams have a star cluster associated with them, hence we cannot be sure. So, to understand how these streams formed, we need to study one with a stellar system associated with it. Palomar 5 is the only case, making it a Rosetta Stone for understanding stream formation, and that is why we studied it in detail," explains Gieles.

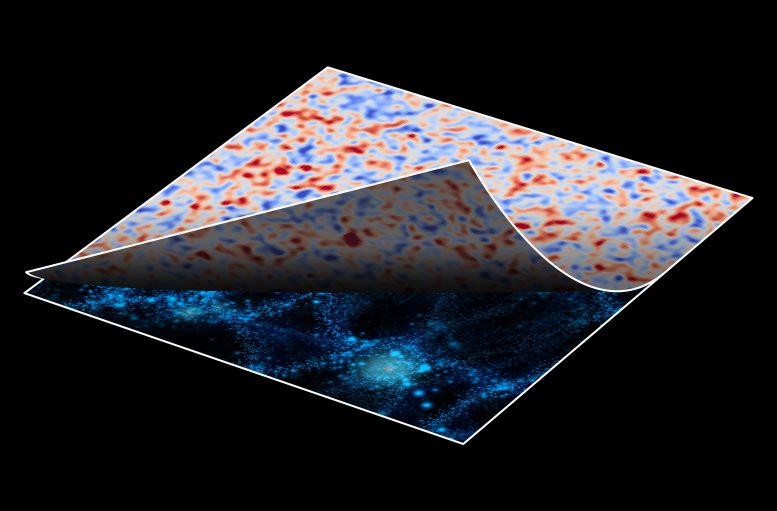

The authors simulated the orbits and the evolution of each star from the formation of the cluster until its final dissolution. They varied the initial properties of the cluster until they found a good match with observations of both the stream and the cluster. The team found that Palomar 5 formed with a lower black hole fraction, but stars escaped more efficiently than black holes, so the black hole fraction gradually increased. The black holes dynamically puffed up the cluster through gravitational slingshot interactions with stars, which led to even more escaping stars and to the formation of the stream. Just before the cluster completely dissolves, roughly a billion years from now, it will consist entirely of black holes. "This work has helped us understand that even though the fluffy Palomar 5 cluster has the brightest and longest tails of any cluster in the Milky Way, it is not unique. Instead, we believe that many similarly puffed up, black hole-dominated clusters have already disintegrated in the Milky Way tides to form the recently discovered thin stellar streams," says co-author Dr. Denis Erkal at the University of Surrey.

Gieles points out that in this paper "we have shown that the presence of a large black hole population may have been common in all the clusters that formed the streams." This is important for our understanding of globular cluster formation, the initial masses of stars and the evolution of massive stars. The work also has important implications for gravitational waves. "It is believed that a large fraction of binary black hole mergers form in star clusters. A big unknown in this scenario is how many black holes there are in clusters, which is hard to constrain observationally because we cannot see black holes. Our method gives us a way to learn how many BHs there are in a star cluster by looking at the stars they eject," says Dr. Fabio Antonini from Cardiff University, a co-author of the paper.

Palomar 5 is a globular cluster discovered in 1950 by Walter Baade. It lies in the constellation Serpens at a distance of about 80,000 light-years, and it is one of the roughly 150 globular clusters that orbit the Milky Way. Like most other globular clusters, it is older than 10 billion years, meaning that it formed in the earliest phases of galaxy formation. It is about 10 times less massive and 5 times more extended than a typical globular cluster, and it is in the final stages of dissolution.
