Anatomy of a Fake News Headline

How a series of algorithms whiffed and ended up warning Oregonians of an antifa invasion

By Jeremy B. Merrill and Aaron Sankin July 7, 2020 08:00

Sam Morris

THE MARKUP

Big Tech Is Watching You. We’re Watching Big Tech.

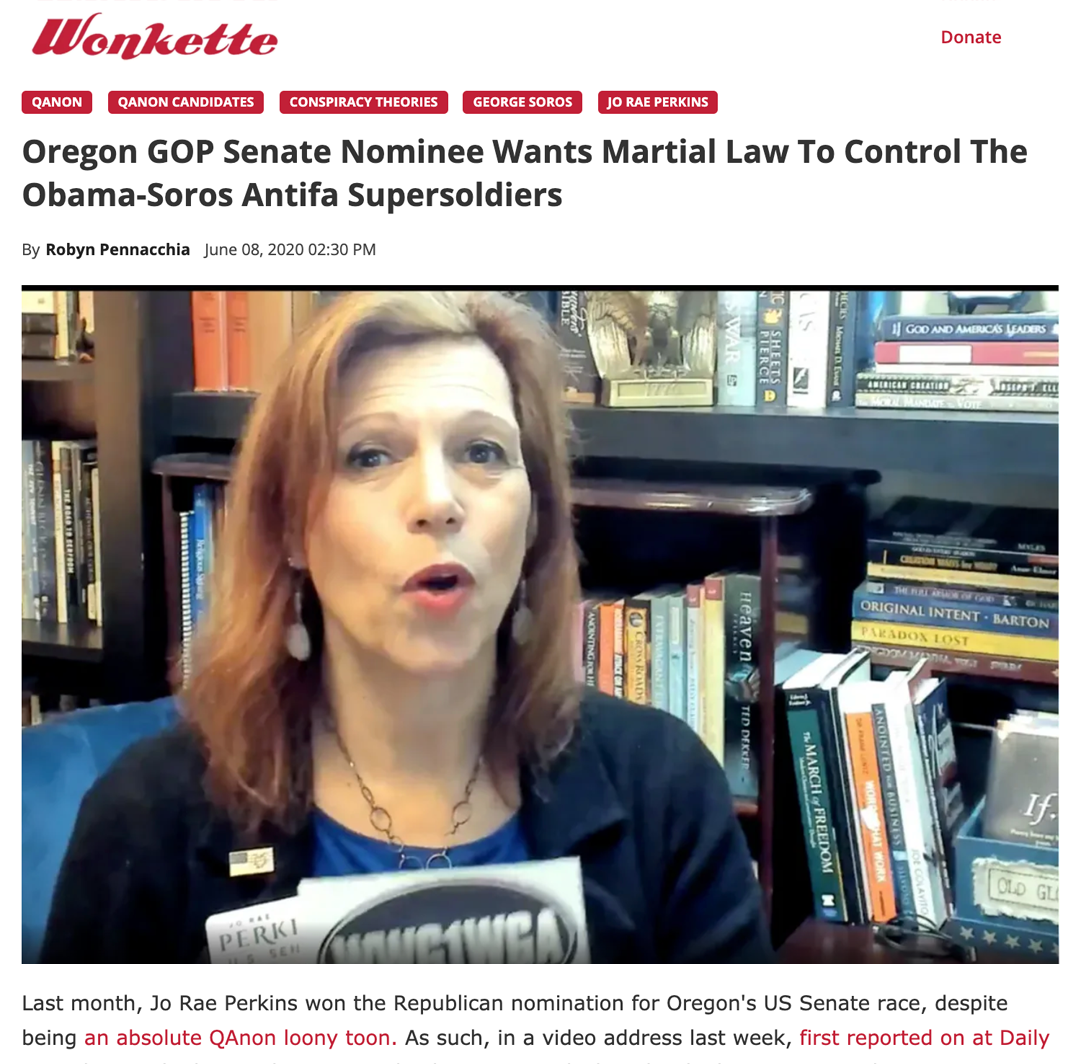

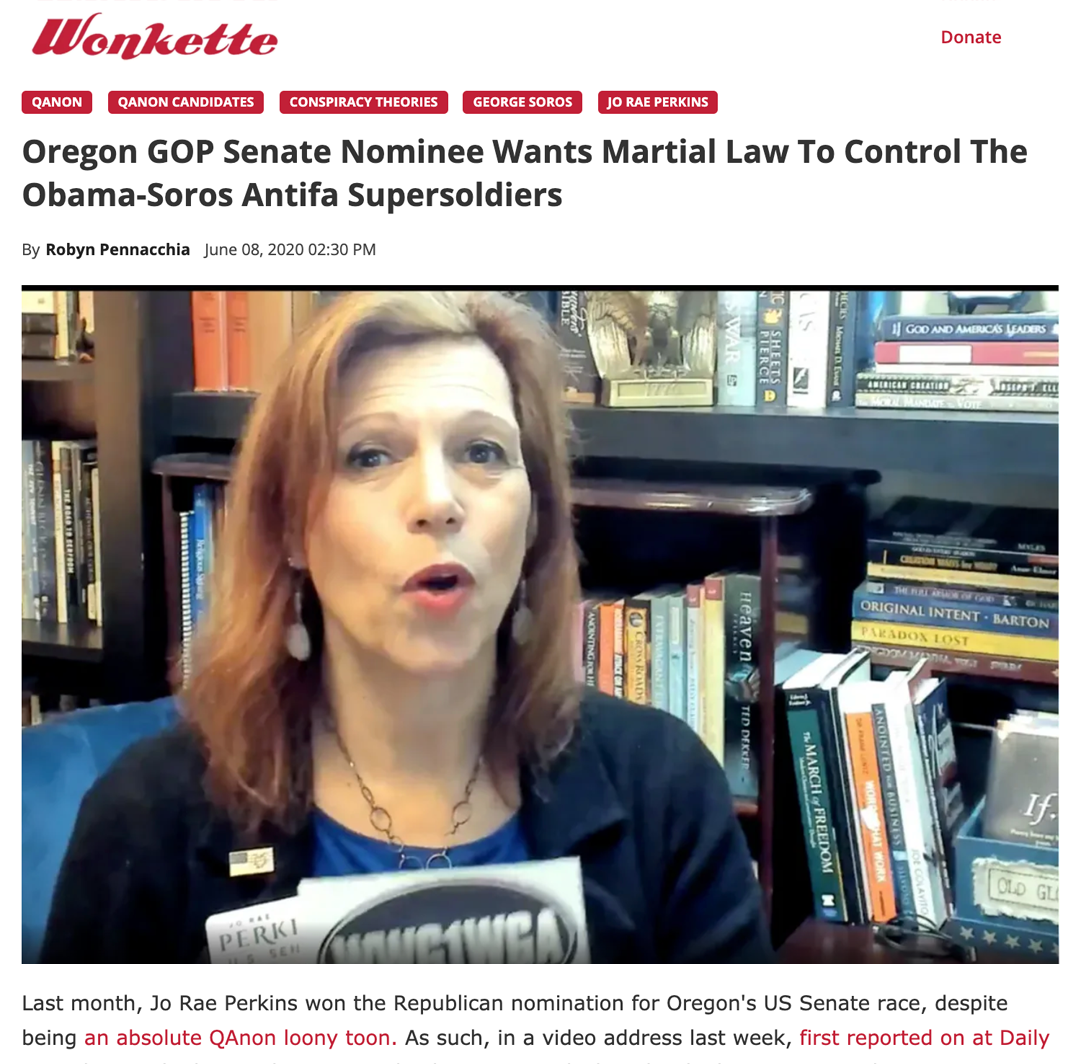

As confrontations between Black Lives Matter protesters and police erupted across the country last month, some Oregonians, mostly older people, saw a Facebook ad pushing a headline about how a Republican politician “Wants Martial Law To Control The Obama-Soros Antifa Supersoldiers.”

Needless to say, there was no army of left-wing “supersoldiers” marching across Oregon, nor were former president Barack Obama and billionaire George Soros known to be funding anything antifa-related. And the politician in question didn’t actually say there were “supersoldiers.” The headline, originally from the often-sarcastic, progressive blog Wonkette, was never meant to be taken as straight news.

Original story published by Wonkette with a tongue-in-cheek headline.

SmartNews ad on Facebook that presents the headline as fact.

The whole thing was a mishap born of the modern news age, in which the headlines you see are chosen not by a hard-bitten front-page editor but by layers of algorithms designed to pick what’s news and who should be shown it. This system can work fine, but in this instance it fed into a maelstrom of misinformation that was already inspiring some westerners to grab their guns and guard their towns against the largely non-existent threat of out-of-town antifa troublemakers.

This was just one headline that fed into a sense of paranoia reinforced by rumors from many sources. But deconstructing exactly how it came about provides a window into how easy it is for a fringe conspiracy theory to accidentally slip into the ecosystem of mainstream online news.

The trouble started when SmartNews picked up Wonkette’s mocking story. SmartNews is a news aggregation app that brings in users by placing nearly a million dollars’ worth of ads on Facebook, according to Facebook’s published data. According to the startup’s mission statement, its “algorithms evaluate millions of articles, social signals and human interactions to deliver the top 0.01% of stories that matter most, right now.”

The company, which says that “news should be impartial, trending and trustworthy,” usually picks ordinary local news headlines for its Facebook ads—maybe from your local TV news station. Users who install the app get headlines about their home area and topics of interest, curated by SmartNews’s algorithms. This time, however, the headline was sourced from Wonkette, in a story mocking Jo Rae Perkins, Oregon’s Republican U.S. Senate nominee, who has sparked controversy for her promotion of conspiracy theories.

In early June, as protests against police violence cropped up in rural towns across the country, Perkins recorded a Facebook Live video calling for “hard martial law” to “squash” the “antifa thugs” supposedly visiting various towns in Oregon. She also linked protesters, baselessly, to common right-wing targets: “Many, many people believe that they are being paid for by George Soros,” she said, and “this is the army that Obama put together a few years ago.”

Perkins never said “supersoldier”; the term is apparently a Twitterverse joke, in this case added to the headline by Wonkette to mock Perkins’s apparent fear of protesters. To someone familiar with Wonkette’s deadpan, sardonic style, the hyperbolic headline on its website wouldn’t raise an eyebrow: regular readers would know Wonkette was mocking Perkins. But it’s 2020, and even insular blog headlines can travel outside their readers’ RSS feeds and wend their way via social media into precincts where Wonkette isn’t broadly known. When SmartNews automatically stripped the headline of its association with Wonkette and presented it neutrally in the ad, it epitomized that phenomenon.

Another SmartNews Facebook ad, this one warning Maryland residents about fake news regarding antifa. Source: Facebook Ad Library

SmartNews’s algorithms picked that headline for ads to appear on the Facebook feeds of people in almost every Oregon county, with a banner like “Charles County news” matching the name of the county where the ad was shown. It’s a strategy that the company uses thousands of times a day.

SmartNews vice president Rich Jaroslovsky said that in this case its algorithms did nothing wrong by choosing the tongue-in-cheek headline to show to existing readers. The problem, he said, was that the headline was shown to the wrong people.

SmartNews, he said, focuses “a huge amount of time, effort and talent” on its algorithms for recommending news stories to users of SmartNews’s app. Those algorithms would have aimed the antifa story at “people who presumably have a demonstrated interest in the kind of stuff Wonkette specializes in.” To those readers and in that context, he said, the story wasn’t problematic.

“The problems occurred when it was pulled out of its context and placed in a different one” for Facebook advertising that isn’t aimed by any criterion other than geography. “Obviously, this shouldn’t have happened, and we’re taking a number of steps to make sure we address the problems you pointed out,” Jaroslovsky said.

Jaroslovsky said Wonkette stories wouldn’t be used in ads in the future.

SmartNews targets its ads at people in particular geographic areas—in this case, 32 of Oregon’s 36 counties.

But Facebook had other ideas: Its algorithms chose to show the “antifa supersoldiers” ad overwhelmingly to people over 55 years old, according to Facebook’s published data about ads that it considers political. Undoubtedly, many of those viewers ignored the ad or weren’t fooled by it, but the demographic Facebook chose is one that a recent New York University study found tends to share misinformation on social media more frequently than younger users do.

This choice by Facebook’s algorithms is powerful: An academic paper showed that Facebook evaluates the content of ads and then sometimes steers them disproportionately to users with a particular gender, race, or political view. (The paper didn’t study age.)

Facebook also doesn’t make it possible to know exactly how many people saw SmartNews’s antifa supersoldiers ad. The company’s transparency portal says the ad was shown between 197 and 75,000 times, across about 75 variations that differed by platform (Android or iPhone) and by county. Facebook declined to provide more specific data.

Facebook doesn’t consider the ads to have violated the company’s rules. Ads are reviewed “primarily” by automated mechanisms, Facebook spokesperson Devon Kearns told The Markup, so it’s unlikely that a human being at Facebook saw the ads before they ran. However, “ads that run with satirical headlines that are taken out of context are eligible” to be fact-checked, Kearns said, and ads found to be false are taken down. (Usually, satire and opinion in ads are exempt from being marked as “misinformation” under Facebook’s fact-checking policy, unless they’re presented out of context.)

Wonkette publisher Rebecca Schoenkopf told The Markup she wasn’t aware SmartNews was promoting her site’s content with Facebook ads but wasn’t necessarily against it. In theory, at least, it could have the effect of drawing more readers to her site.

Ironically, she said, Wonkette has a limited Facebook presence: In recent years, the reach of its posts on the platform has dwindled to almost nothing.

Following the chain of information from Perkins’s Facebook video all the way to the SmartNews ad makes it easy to see how a series of actors took the same original piece of content—a video of Perkins espousing conspiracy theories—and amplified it to suit their own motives. Each of those links created a potential for misinformation, where the context necessary for understanding could be stripped away.

Lindsay Schubiner, a program director at the Portland, Ore.-based Western States Center, which works to counter far-right extremism in the Pacific Northwest, told The Markup that, while social media has had a democratizing effect on information, it’s also been an ideal format for spreading misinformation.

“The SmartNews ad—inadvertently or not—joined in a chorus of false, misleading and racist posts from white nationalists in response to Black Lives Matter protests,” Schubiner wrote in an email. “Many of these posts traded in anti-semitism, which has long been a go-to response for white nationalists looking to explain their political losses.”

In this case the ad, she said, potentially promulgated the common right-wing trope that Soros, who is Jewish, funds mass protests for left-wing causes.

“These bigoted conspiracy theories have helped fuel a surge in far-right activity and organizing,” she continued. “It’s certainly possible that the ads contributed to far-right organizing in Oregon in response to false rumors about anti-fascist gatherings in small towns.”

Those conspiracy theories have had real-world consequences. Firearm-toting residents in nearby Washington State harassed a multiracial family who were camping in a converted school bus, trapping them with felled trees, apparently mistakenly believing them to be antifa. The family was able to escape only with the help of some local high school students armed with chainsaws to clear their way to freedom.

Jeremy B. Merrill

Investigative Data Reporter

Aaron Sankin

Investigative Reporter