by Ingrid Fadelli, Tech Xplore

With the help of deep learning and model-based control, the researchers’ risk-sensitive robot achieves safe and efficient navigation in real-world dynamic environments. Credit: Nishimura et al.

Humans are innately able to adapt their behavior and actions according to the movements of other humans in their surroundings. For instance, human drivers may suddenly stop, slow down, steer or start their car based on the actions of other drivers, pedestrians or cyclists, as they have a sense of which maneuvers are risky in specific scenarios.

However, developing robots and autonomous vehicles that can similarly predict human movements and assess the risk of performing different actions in a given scenario has so far proved highly challenging. This has resulted in a number of accidents, including the tragic death of a pedestrian who was struck by a self-driving Uber vehicle in March 2018.

Researchers at Stanford University and Toyota Research Institute (TRI) have recently developed a framework that could help prevent such accidents in the future, increasing the safety of autonomous vehicles and other robotic systems operating in crowded environments. This framework, presented in a paper pre-published on arXiv, combines two tools: a machine learning algorithm and a technique to achieve risk-sensitive control.

"The main goal of our work is to enable self-driving cars and other robots to operate safely among humans (i.e., human drivers, pedestrians, bicyclists, etc.), by being mindful of what these humans intend to do in the future," Haruki Nishimura and Boris Ivanovic, lead authors of the paper, told TechXplore via email.

Nishimura, Ivanovic and their colleagues developed a machine-learning model and trained it to predict the future actions of humans in a robot's surroundings. Using this model, they then created an algorithm that can estimate the risk of collision associated with each of the robot's potential maneuvers at a given time. This algorithm can automatically select the optimal maneuver for the robot, which should minimize the risk of colliding with humans or cars while still allowing the robot to make progress towards its mission or goal.

"Existing methods for allowing autonomous cars and other robots to navigate among humans generally suffer from two important oversimplifications," the researchers told TechXplore via email. "Firstly, they make simplistic assumptions about what the humans will do in the future; secondly, they do not consider a trade-off between collision risk and progress for the robot. In contrast, our method uses a rich, stochastic model of human motion that is learned from data of real human motion."

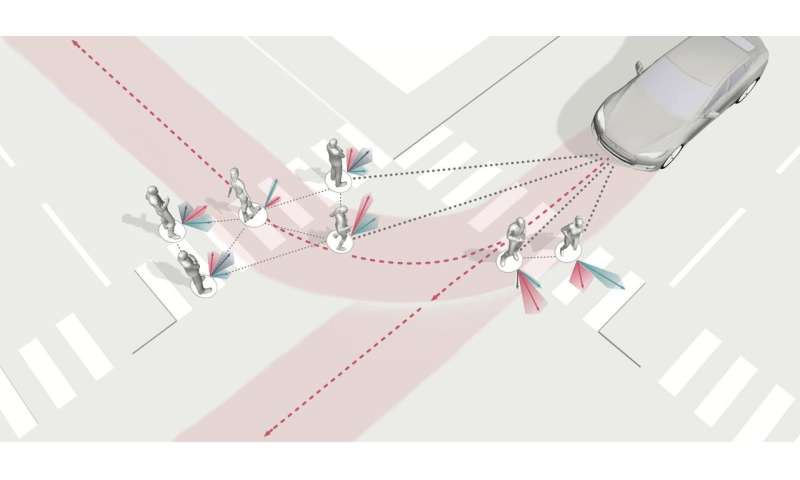

For safe human-robot interactions, robots (e.g., autonomous cars) need to first reason about the possibility of multiple outcomes of an interaction (denoted by the colored shaded arrows), and understand how their actions influence the actions of others (e.g., surrounding pedestrians). Such reasoning then has to be incorporated into the robot’s planning and control modules in order for it to successfully navigate dynamic environments alongside humans. Credit: Nishimura et al.

The stochastic model at the core of the researchers' framework does not offer a single prediction of future human movements, but rather a distribution of predictions. Moreover, the team uses this model quite differently from how previously developed robot navigation techniques integrated stochastic models.

"We consider the full distribution of possible future human motions," Nishimura and Ivanovic explained. "We then choose our robot's next action to achieve both a low risk of collisions (i.e., the robot collides with none or very few of the many predicted motions of the humans), while still driving the robot in the direction in which it intends to move. This is called risk-sensitive optimal control, and it essentially allows us to determine a robot's next action in real time. The computation it requires happens in a fraction of a second and is continuously repeated as the robot moves around in its environment."
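
The idea the researchers describe can be illustrated with a small sketch. The Python code below is not the team's implementation: it is a toy example, under simplifying assumptions, of scoring each candidate robot action against many sampled predictions of a human's future position and picking the action with the best trade-off between progress and collision risk. The entropic risk measure, the sampler `sample_human_positions` (a hypothetical stand-in for a learned predictor), and all parameter values (`theta`, `weight`, `radius`) are illustrative choices, not details taken from the article.

```python
import math
import random


def entropic_risk(costs, theta):
    """Entropic risk: (1/theta) * log(mean(exp(theta * cost))).

    For theta > 0 this is at least the plain average, so rare but
    costly outcomes weigh more heavily. Uses the log-sum-exp trick.
    """
    m = max(costs)
    mean_exp = sum(math.exp(theta * (c - m)) for c in costs) / len(costs)
    return m + math.log(mean_exp) / theta


def sample_human_positions(rng, n_samples):
    """Hypothetical stand-in for a learned, multi-modal predictor.

    Assumption: the human either keeps away from the robot's path
    (70% of samples) or steps into it (30% of samples).
    """
    samples = []
    for _ in range(n_samples):
        center = (2.0, 1.5) if rng.random() < 0.7 else (2.0, 0.0)
        samples.append((center[0] + rng.gauss(0.0, 0.2),
                        center[1] + rng.gauss(0.0, 0.2)))
    return samples


def choose_action(robot, goal, actions, human_samples,
                  theta=2.0, weight=5.0, radius=0.5):
    """Pick the action minimizing progress cost plus weighted collision risk."""
    best_action, best_cost = None, math.inf
    for action in actions:
        nxt = (robot[0] + action[0], robot[1] + action[1])
        progress_cost = math.dist(nxt, goal)  # remaining distance to the goal
        # Cost of 1 for every predicted human position the robot would hit.
        collision_costs = [1.0 if math.dist(nxt, h) < radius else 0.0
                           for h in human_samples]
        total = progress_cost + weight * entropic_risk(collision_costs, theta)
        if total < best_cost:
            best_action, best_cost = action, total
    return best_action, best_cost


if __name__ == "__main__":
    rng = random.Random(0)
    humans = sample_human_positions(rng, 200)
    actions = [(2.0, 0.0), (2.0, -1.0), (0.0, 0.0)]  # straight, swerve, wait
    print(choose_action((0.0, 0.0), (4.0, 0.0), actions, humans))
```

In a real system, a loop like this would be re-run at every control step with fresh predictions, which matches the researchers' description of a computation that is "continuously repeated" as the robot moves.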

To evaluate their framework, Nishimura, Ivanovic and their colleagues carried out both a simulation study and a real-world experiment. In the simulation study, they compared their framework's performance with that of three commonly used collision avoidance algorithms in a task where a robot had to determine the best actions to safely navigate environments containing up to 50 moving humans. In the real-world experiment, on the other hand, they used their framework to guide the actions of a holonomic robot called Ouijabot within an indoor environment that was populated by five moving human subjects.

The results of both of these tests were highly promising, with the researchers' framework calculating optimal trajectories that minimized the risk of the robot colliding with humans in its surroundings. Remarkably, the framework also outperformed all the collision avoidance algorithms it was compared to.

"Our overarching goal is to make autonomous cars and other robots safer for humans," the researchers said. "To ensure the safe operation of robots around humans, we need to teach them to predict human motion from experience and endow them with a sensitivity to risk, so that they avoid risky behaviors that may lead to collisions. This is precisely what our algorithm does."

In the future, this navigation framework could increase the safety of robots and self-driving vehicles, allowing them to predict the actions of humans or vehicles in their surroundings and promptly respond to these actions to prevent collisions. Before it can be implemented on a large scale, however, the framework will need to be trained on large databases containing videos of humans moving in crowded environments similar to the one in which robots will be operating. To simplify this training process, Nishimura, Ivanovic and their colleagues plan to develop a method that allows robots to gather this training data online as they are operating.

"We would also like for robots to be able to identify a model that fits the specific behavior of the humans in its immediate environment," Nishimura and Ivanovic said. "It would be very useful, for example, if the robot could categorize an erratic driver or a drunk driver at any given moment, and avoid moving too close to that driver to mitigate the risk of collision. Human drivers do this naturally, but it is devilishly difficult to codify this in an algorithm that a robot can use."

More information: Risk-sensitive sequential action control with multi-modal human trajectory forecasting for safe crowd-robot interaction. arXiv:2009.05702 [cs.RO]. arxiv.org/abs/2009.05702

© 2020 Science X Network
