Study explores how a robot's inner speech affects a human user's trust

Trust is a key aspect of human-robot interaction, as it could play a crucial role in whether robots are widely adopted in real-world settings. However, trust is a complex construct that can depend on both psychological and environmental factors.

Psychological theories often describe trust either as a stable trait shaped by early life experiences or as an evolving state of mind affected by numerous cognitive, emotional and social factors. Most researchers agree that trust has two defining features: positive attitudes and expectations about a trustee, and a readiness to become vulnerable and accept the risks associated with trusting others.

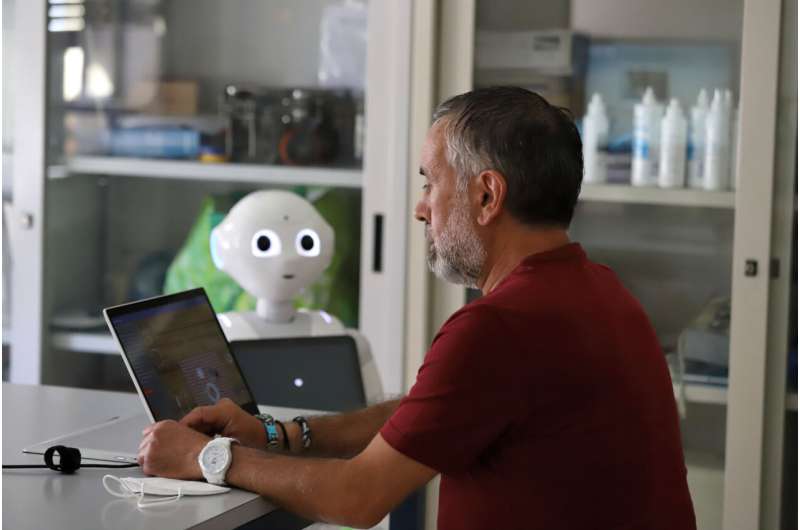

Researchers at the University of Palermo have recently carried out a study investigating how a robot's inner speech affects a user's trust in it. In their paper, pre-published on arXiv, the team presents the findings of an experiment using a robot that can talk to itself out loud, in a way that resembles human inner speech.

"Our recent paper is an outcome of the research we carried out at the RoboticsLab, at the University of Palermo," Arianna Pipitone, one of the researchers who carried out the study, told TechXplore. "The research explores the possibility of providing a robot with inner speech. In one of our previous works, we demonstrated that a robot's performance, in terms of transparency and robustness, improves when the robot talks to itself."

Building on this earlier finding, the researchers set out to investigate whether a robot's ability to talk to itself also affects how users perceive its trustworthiness and anthropomorphism (i.e., the extent to which it appears to exhibit human characteristics).

The study involved 27 participants. Each participant was asked to complete the same questionnaire twice: once before interacting with the robot and once after.

"During the interactive session, the robot talked to itself," Pipitone explained. "The questionnaire we administered to participants is based on both the well-known inner speech scales (such as the Self Talk Scale) and the Godspeed test, which measure the aforementioned cues. By comparing questionnaires' results from the two different phases, we can observe how the robot's cues vary from the participants' perspective after the interaction, so inferring the effects of inner speech on them."
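As a rough illustration of what comparing the two questionnaire phases can involve, the sketch that follows computes each participant's change in score (post minus pre) on one scale and a paired t statistic over those changes. The function name `paired_change`, the 1-5 scale and the scores are all hypothetical, and the paired t-test is simply a standard choice for this kind of before/after design; it is not necessarily the analysis the Palermo team used.

```python
import math
from statistics import mean, stdev


def paired_change(pre, post):
    """Compare one scale's scores before and after the interaction.

    Hypothetical helper: pre[i] and post[i] must belong to the same participant.
    Returns (mean change, paired t statistic), where change = post - pre.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have one score per participant")
    if len(pre) < 2:
        raise ValueError("need at least two participants")
    diffs = [after - before for before, after in zip(pre, post)]
    d_mean = mean(diffs)
    d_sd = stdev(diffs)  # sample standard deviation of the per-participant changes
    if d_sd == 0:
        raise ValueError("all changes are identical; t is undefined")
    t = d_mean / (d_sd / math.sqrt(len(diffs)))
    return d_mean, t


# Hypothetical trust scores (1-5 scale) for four participants; not study data.
pre_scores = [3.0, 2.5, 3.5, 3.0]
post_scores = [3.5, 3.0, 4.0, 3.0]
change, t_stat = paired_change(pre_scores, post_scores)
print(f"mean change = {change:.3f}, t = {t_stat:.2f}")
```

A positive mean change means participants rated the robot higher after the interaction. With real data, the same comparison would be repeated for each scale (for example, trust and anthropomorphism), and the t statistic checked against the appropriate degrees of freedom (number of participants minus one).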

It is not always easy to understand a robot's decision-making process or why it behaves in a particular way. Talking to itself while completing a task could make a robot more transparent, allowing users to follow the processes, considerations and calculations that lead it to specific conclusions.

"Inner speech is a sort of explainable log," Pipitone explained. "Moreover, by inner speech, the robot could evaluate different strategies in collaboration with the human partner, leading to the fulfillment of specific goals. All these improvements make the robot more pleasant for people, and as shown, enhance the robot's trustworthiness and anthropomorphism."

Overall, Pipitone and her colleagues found that participants reported trusting the robot more after they interacted with it. Participants also felt that the robot's ability to talk to itself out loud made it more human-like, or anthropomorphic.

In the future, the robotic inner speech mechanism developed by this team could help make both existing and emerging robots more human-like, potentially encouraging more users to trust these robots and introduce them into their homes or workplaces. Meanwhile, Pipitone and her colleagues plan to conduct further studies to validate their initial results.

"We now want to complete the experimental session by involving a large number of participants, to validate our initial results," Pipitone said. "Moreover, we want to compare the results from the interactive sessions during which the robot does not talk to itself, for refining the effective inner speech contribution on the cues. We will analyze many other features, such as the robot's emotions by inner speech. The human's inner speech plays a fundamental role in self-regulation, learning, focusing, so we would like to investigate these aspects."

More information: Arianna Pipitone et al., Robot's inner speech effects on trust and anthropomorphic cues in human-robot cooperation. arXiv:2109.09388v1 [cs.RO], arxiv.org/abs/2109.09388

Arianna Pipitone et al., What robots want? Hearing the inner voice of a robot. iScience (2021). DOI: 10.1016/j.isci.2021.102371

Journal information: iScience

© 2021 Science X Network
