Toyota gives robots a soft touch and better vision to amplify our intelligence

Thanks to decades of sci-fi movies, it's easy to think of robots as human replacements: Metal arms, hinged legs and electronic eyes will do that. But the future of robots may center on extending and boosting us, not so much replacing us. While that concept isn't entirely new, it came more sharply into focus recently when I visited Toyota Research Institute's headquarters in Los Altos, California. There, building robots that amplify humans is the mission.

The softer side of robots: TRI has developed soft-touch robot "hands" that can not only handle things elegantly but also identify them.

"People here are passionate about making robots truly useful," says Max Bajracharya, VP of robotics at TRI and veteran of Google's robotics unit, Boston Dynamics and NASA's Mars rover team. Today he develops robots in mock-ups of home kitchens and grocery store aisles. "How can we really help people in their day-to-day lives?" TRI isn't charged with getting robots on the market but with figuring out the problems preventing that from happening.

One tangible example is a soft, touch-sensing gripper developed at TRI that allows a robot to handle delicate things the way we do, with a progressive, nuanced sense of pressure and recognition.

These soft grippers are like padded hooves with cameras on the inside that can figure out the perfect amount of force needed to grip something and can also help identify an object, essentially by touch. "Replicating our body is incredibly complex if you think about how many sensors we have" in our skin and the superlative "wetware" processor in our skull that makes sense of them all, according to Bajracharya.
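Why is there a "perfect amount" of grip force at all? One rough way to see it is the textbook Coulomb-friction model for a pinch grasp. Each contact can resist at most the friction coefficient times its normal force. Holding an object of mass m across k contacts therefore needs a normal force of at least m·g / (k·μ) per contact, and squeezing much harder than that only risks crushing the object. The sketch below computes that lower bound under stated assumptions. It is not TRI's method, since their grippers infer contact from cameras inside the pads. The friction coefficient, contact count and safety factor are hypothetical inputs.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class PinchGrasp:
    mass_kg: float               # mass of the held object
    friction_coeff: float        # assumed Coulomb friction coefficient, pad vs. object
    contacts: int = 2            # opposing finger contacts sharing the load
    safety_factor: float = 1.5   # hypothetical margin against slip


def min_grip_force(grasp: PinchGrasp) -> float:
    """Smallest normal force per contact (N) that keeps the object from slipping.

    Under Coulomb friction each contact resists up to mu * N of tangential load,
    so supporting a weight of m * g across k contacts needs N >= m * g / (k * mu).
    The result is then scaled by the safety factor.
    """
    if grasp.friction_coeff <= 0 or grasp.contacts <= 0:
        raise ValueError("friction coefficient and contact count must be positive")
    required = grasp.mass_kg * G / (grasp.contacts * grasp.friction_coeff)
    return required * grasp.safety_factor


if __name__ == "__main__":
    # A hypothetical 60 g object held between two pads with an assumed mu of 0.5.
    print(f"{min_grip_force(PinchGrasp(mass_kg=0.06, friction_coeff=0.5)):.2f} N per pad")
```

With the default safety factor, that 60 g example needs roughly 0.9 N per pad. A gripper that can sense slip, as TRI's can, can in principle settle near this bound instead of guessing high.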


This work in soft touch is about more than just grabbing and holding. AI and smartphone pioneer Jeff Hawkins subscribes to a theory that much of how we understand the world is achieved by comparing "memory frames" of how we've moved through it, either via literal touch or through virtual contact with concepts like liberty and love. That kind of learning about the world seems to have strong echoes in TRI's work.

TRI has also given its robots the ability to understand clear or reflective surfaces, something anyone with a cat knows can be remarkably hard. Transparencies and reflections can confuse a robot into thinking something is or isn't there when the opposite is true. From grocery aisles full of clear containers to homes full of mirrors, there are many places where this breakthrough could make robots more useful.
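To see why glass and mirrors are hard, consider a raw depth image. Many depth cameras return no reading (often encoded as 0) on transparent or mirror-like surfaces. Others report whatever lies behind the glass or is reflected in it. A naive first step is to flag the obviously missing or out-of-range pixels as unreliable before any learned model tries to fill them in. The sketch below does only that flagging. Its valid-range thresholds are hypothetical, and it does not describe how TRI's system works. It also cannot catch the harder case where the sensor confidently reports the wall behind a clear container, which is one reason learned approaches are used.

```python
import numpy as np


def unreliable_depth_mask(depth_m: np.ndarray,
                          min_valid_m: float = 0.1,
                          max_valid_m: float = 5.0) -> np.ndarray:
    """Flag depth pixels (in meters) that are missing or outside a plausible range.

    Missing returns are common on transparent and mirror-like surfaces.
    The range limits here are hypothetical and would depend on the actual sensor.
    """
    depth_m = np.asarray(depth_m, dtype=float)
    missing = ~np.isfinite(depth_m) | (depth_m <= 0.0)
    out_of_range = (depth_m < min_valid_m) | (depth_m > max_valid_m)
    return missing | out_of_range


# Toy 2x3 depth image: a 0 (no return), a NaN, and one implausibly far reading.
depth = np.array([[0.8, 0.0, 0.9],
                  [np.nan, 0.85, 12.0]])
mask = unreliable_depth_mask(depth)
print(f"{mask.mean():.0%} of pixels flagged")
```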

But the bigger goal remains a symphony of people and robots. "What you don't see today is humans and robots really interfacing together, which really limits how much robots can amplify humans' ability," says Bajracharya. That's where TRI turns AI on its head with something they call IA, or Intelligence Amplification. Simply put, it views robots as leveraging humans' superior intelligence and multiplying it with a robot's superior abilities in strength, precision, persistence and repeatability. Toyota has been focused on enhancing human mobility since a major announcement in 2017 by CEO Akio Toyoda at an event in Athens that I helped moderate.

All of this brings up the relatability of robots: I've long felt that they will need to be as relatable as they are capable to achieve maximum adoption, since humans can't help but anthropomorphize them. That doesn't mean being as adorably useless as Kuri, which took CES by storm in 2017 before vanishing 18 months later, but it does mean establishing some kind of relationship. "Some people actually prefer a robot because it's not a person," says Bajracharya, speaking of robots in home or health care settings. "But some people are very concerned about this machine in their environment" due to fear of the unknown or concerns that it will displace human workers.

The history of robotics is still only at its preface. But nuanced skills that amplify humans' savvy about the world while robots take over some of what dilutes our time and effort appear to be a formula for the next chapter.

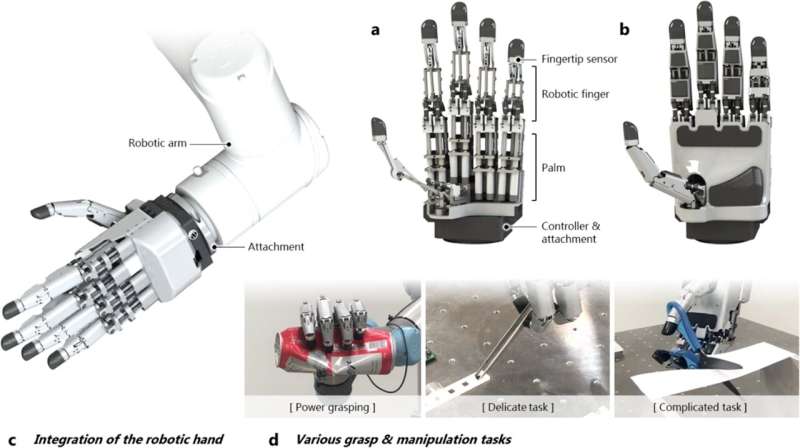

Robot hand moves closer to human abilities

A team of researchers affiliated with multiple institutions in Korea has developed a robot hand that has abilities similar to human hands. In their paper published in the journal Nature Communications, the group describes how they achieved a high level of dexterity while keeping the hand's size and weight low enough to attach to a robot arm.

Creating robot hands with the dexterity, strength and flexibility of human hands is a challenging task for engineers; typically, some attributes are sacrificed to gain others. In this new effort, the researchers developed a robot hand based on a linkage-driven mechanism that allows it to articulate similarly to the human hand. They began their work by conducting a survey of existing robot hands and assessing their strengths and weaknesses. They then drew up a list of features they believed their hand should have, such as fingertip force, a high degree of controllability, low cost and high dexterity.

The researchers call their new hand an integrated, linkage-driven dexterous anthropomorphic (ILDA) robotic hand. Just like its human counterpart, it has four fingers and a thumb, each with three joints, and also like the human hand, it has fingertip sensors. The hand is just 22 centimeters long. Overall, it has 20 joints, which give it 15 degrees of freedom. It is also strong, able to exert a crushing force of 34 newtons, and it weighs just 1.1 kg.

The researchers created several videos that demonstrate the capabilities of the hand, including crushing soda cans, cutting paper using scissors, and gently holding an egg. They also show the robot hand pulling a film off of a microchip, manipulating a tennis ball and lifting a heavy object. Perhaps most impressive is the ability of the hand to use a pair of tweezers to pick up small objects.

The researchers note that the hand is also completely self-contained, which means it can be easily fitted to virtually any robot arm. They also suggest that its abilities make it ideal for applications such as applying tiny chips to circuit boards.

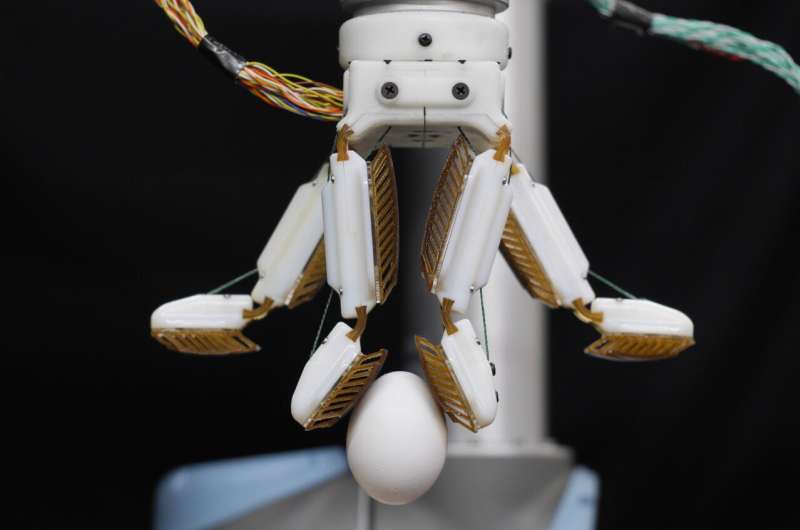

Engineers develop a robotic hand with a gecko-inspired grip

Across a vast array of robotic hands and clamps, there is a common foe: The heirloom tomato. You may have seen a robotic gripper deftly pluck an egg or smoothly palm a basketball, but, unlike human hands, one gripper is unlikely to be able to do both, and a key challenge remains hidden in the middle ground.

"You'll see robotic hands do a power grasp and a precision grasp and then kind of imply that they can do everything in between," said Wilson Ruotolo, a former graduate student in the Biomimetics and Dextrous Manipulation Lab at Stanford University. "What we wanted to address is how to create manipulators that are both dexterous and strong at the same time."

The result is "farmHand," a robotic hand developed by engineers Ruotolo and Dane Brouwer, a graduate student in the Biomimetics and Dextrous Manipulation Lab, at Stanford (aka "the Farm") and detailed in a paper published Dec. 15 in Science Robotics. In their testing, the researchers demonstrated that farmHand is capable of handling a wide variety of items, including raw eggs, bunches of grapes, plates, jugs of liquids, basketballs and even an angle grinder.

FarmHand benefits from two kinds of biological inspiration. While the multi-jointed fingers are reminiscent of a human hand—albeit a four-fingered one—the fingers are topped with gecko-inspired adhesives. This grippy but not sticky material is based on the structure of gecko toes and has been developed over the last decade by the Biomimetics and Dextrous Manipulation Lab, led by Mark Cutkosky, the Fletcher Jones Professor in Stanford's School of Engineering, who is also senior author of this research.

Using the gecko adhesive on a multi-fingered, anthropomorphic gripper for the first time was a challenge that required special attention to the tendons controlling the fingers of farmHand and to the design of the finger pads below the adhesive.

From the farm to space and back again

Like a gecko's toes, the gecko adhesive creates a strong hold via microscopic flaps. When in full contact with a surface, these flaps create a Van der Waals force, a weak intermolecular force that results from subtle differences in the positions of electrons on the outsides of molecules. As a result, the adhesives can grip strongly but require little actual force to do so. Another bonus: They don't feel sticky to the touch or leave a residue behind.
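The "full contact" requirement follows from how quickly Van der Waals attraction fades with distance. For two ideally flat, parallel surfaces, the standard textbook result gives an attractive pressure of A / (6πD³). Here A is the Hamaker constant of the two materials and D is the gap between them, so doubling the gap cuts the pull by a factor of eight. The sketch below evaluates that formula. The Hamaker constant is an assumed order-of-magnitude value. Real adhesives, farmHand's included, achieve far less than this ideal because surfaces never meet perfectly flat.

```python
import math


def vdw_pressure(hamaker_j: float, gap_m: float) -> float:
    """Van der Waals attraction per unit area (Pa) between two flat, parallel
    surfaces separated by gap_m, using the ideal result P = A / (6 * pi * D**3)."""
    if gap_m <= 0:
        raise ValueError("gap must be positive")
    return hamaker_j / (6 * math.pi * gap_m ** 3)


if __name__ == "__main__":
    A = 1e-19  # assumed Hamaker constant in joules (typical order of magnitude)
    for gap_nm in (0.4, 0.8, 4.0):
        print(f"{gap_nm} nm gap -> {vdw_pressure(A, gap_nm * 1e-9):.3g} Pa")
```

The cubic falloff is the point: a flap that sits even a few nanometers off the surface contributes almost nothing. This is why the adhesive's contact geometry matters so much.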

"The first applications of the gecko adhesives had to do with climbing robots, climbing people or grasping very large, very smooth objects in space. But we've always had it in our minds to use them for more down-to-earth applications," said Cutkosky. "The problem is that it turns out that gecko adhesives are actually very fussy."

The fuss is that the gecko adhesives must connect with a surface in a particular way in order to activate the Van der Waals force. This is easy enough to control when they are applied smoothly onto a flat surface, but much more difficult when a grasp relies on multiple gecko adhesive patches contacting an object at various angles, such as with farmHand.

Pinching and buckling

Below the adhesives, farmHand's finger pads help address this challenge. They are made of a collapsible rib structure that buckles with little force. No matter the location or angle of contact, the ribs consistently buckle so as to ensure equal forces on the adhesive pads and prevent any single one from slipping prematurely.

"If you move these ribs, the buckling results in a similar force no matter where you start," said Brouwer. "It's a simple, physical behavior that could be deployed even in spaces outside of robotics, perhaps as shoe tread or all-terrain tires."
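The load-sharing idea can be illustrated with a deliberately idealized model. Treat each finger pad as a linear spring until its ribs buckle, after which the force stays flat at the buckling load. On an irregular object, pads pressed in by different amounts then push back with the same force, whereas plain springs would load the most-compressed pad hardest. This is a toy sketch, not the researchers' model. The stiffness and buckling point are hypothetical, and real post-buckling behavior is only approximately flat.

```python
def buckling_pad_force(displacement_mm: float,
                       stiffness_n_per_mm: float = 2.0,
                       buckle_at_mm: float = 0.5) -> float:
    """Idealized ribbed pad: linear spring until the ribs buckle, then a flat plateau (N)."""
    if displacement_mm <= 0:
        return 0.0
    plateau = stiffness_n_per_mm * buckle_at_mm
    return min(stiffness_n_per_mm * displacement_mm, plateau)


def spring_pad_force(displacement_mm: float,
                     stiffness_n_per_mm: float = 2.0) -> float:
    """Comparison pad that never buckles: force keeps growing with compression (N)."""
    return max(0.0, stiffness_n_per_mm * displacement_mm)


# Three pads touch an irregular object, so each is compressed by a different amount.
displacements_mm = [0.8, 1.5, 3.0]
ribbed = [buckling_pad_force(d) for d in displacements_mm]
springy = [spring_pad_force(d) for d in displacements_mm]
print("ribbed pads:", ribbed)
print("plain springs:", springy)
```

In this model the ribbed pads all carry the same load despite a nearly fourfold difference in compression. That even sharing is what keeps any single adhesive patch from being overloaded and slipping first.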

The hand's tendons are crucial as well because they enable a hyperextended pinch. While many robotic hands and clamps pinch objects in a "C" shape, like picking something up with only your fingertips, farmHand pinches with the ends of its fingers pressed pad to pad. This gives the adhesives more surface area to work with.

Getting the design just right was especially hard because existing computer simulations have difficulty predicting real-world performance with soft objects, another factor in the heirloom tomato problem. But the researchers benefited immensely from being able to 3D print and test many of the hard and soft plastic components in relatively quick cycles. They go so far as to suggest that only five years ago their success might not have been possible, or would at least have come much more slowly.

Further improvements to farmHand could come in the form of feedback features that would help users understand how it is gripping and how it could grip better while the hand is in use. The researchers are also considering commercial applications for their work.

Cutkosky is also a member of Stanford Bio-X and the Wu Tsai Neurosciences Institute.


More information: Wilson Ruotolo et al, From grasping to manipulation with gecko-inspired adhesives on a multifinger gripper, Science Robotics (2021). DOI: 10.1126/scirobotics.abi977

Journal information: Science Robotics

Provided by Stanford University

Mind-controlled robots now one step closer

Tetraplegic patients are prisoners of their own bodies, unable to speak or perform the slightest movement. Researchers have been working for years to develop systems that can help these patients carry out some tasks on their own. "People with a spinal cord injury often experience permanent neurological deficits and severe motor disabilities that prevent them from performing even the simplest tasks, such as grasping an object," says Prof. Aude Billard, the head of EPFL's Learning Algorithms and Systems Laboratory. "Assistance from robots could help these people recover some of their lost dexterity, since the robot can execute tasks in their place."

Prof. Billard carried out a study with Prof. José del R. Millán, who at the time was the head of EPFL's Brain-Machine Interface laboratory but has since moved to the University of Texas. The two research groups have developed a computer program that can control a robot using electrical signals emitted by a patient's brain. No voice control or touch function is needed; patients can move the robot simply with their thoughts. The study has been published in Communications Biology.

Avoiding obstacles

To develop their system, the researchers started with a robotic arm that had been developed several years ago. This arm can move back and forth from right to left, reposition objects in front of it and get around objects in its path. "In our study we programmed a robot to avoid obstacles, but we could have selected any other kind of task, like filling a glass of water or pushing or pulling an object," says Prof. Billard.

The engineers began by improving the robot's mechanism for avoiding obstacles so that it would be more precise. "At first, the robot would choose a path that was too wide for some obstacles, taking it too far away, and not wide enough for others, keeping it too close," says Carolina Gaspar Pinto Ramos Correia, a Ph.D. student at Prof. Billard's lab. "Since the goal of our robot was to help paralyzed patients, we had to find a way for users to be able to communicate with it that didn't require speaking or moving."

An algorithm that can learn from thoughts

This entailed developing an algorithm that could adjust the robot's movements based only on a patient's thoughts. The algorithm was connected to a headcap equipped with electrodes for running electroencephalogram (EEG) scans of a patient's brain activity. To use the system, all the patient needs to do is look at the robot. If the robot makes an incorrect move, the patient's brain will emit an "error message" through a clearly identifiable signal, as if the patient is saying "No, not like that." The robot will then understand that what it's doing is wrong, but at first it won't know exactly why. For instance, did it get too close to, or too far away from, the object?

To help the robot find the right answer, the error message is fed into the algorithm, which uses an inverse reinforcement learning approach to work out what the patient wants and what actions the robot needs to take. This is done through a trial-and-error process whereby the robot tries out different movements to see which one is correct. The process goes pretty quickly: only three to five attempts are usually needed for the robot to figure out the right response and execute the patient's wishes.

"The robot's AI program can learn rapidly, but you have to tell it when it makes a mistake so that it can correct its behavior," says Prof. Millán. "Developing the detection technology for error signals was one of the biggest technical challenges we faced."

Iason Batzianoulis, the study's lead author, adds: "What was particularly difficult in our study was linking a patient's brain activity to the robot's control system, or in other words, 'translating' a patient's brain signals into actions performed by the robot. We did that by using machine learning to link a given brain signal to a specific task. Then we associated the tasks with individual robot controls so that the robot does what the patient has in mind."
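The core loop of trying a movement, hearing "no" from the brain, and trying something else can be sketched in a few lines. This is a deliberately simplified stand-in, not the authors' inverse reinforcement learning method. It keeps a Bayesian belief over a handful of hypothetical obstacle-clearance margins and updates that belief from a binary "error detected" signal. Because EEG decoding is imperfect, the decoder is assumed to report correctly 90% of the time. The margins, decoder accuracy and trial limit are all illustrative assumptions.

```python
from typing import Callable, List, Sequence, Tuple


def update_belief(belief: List[float], tried: int, error_detected: bool,
                  decoder_accuracy: float) -> List[float]:
    """Bayesian update of which option the user prefers, given one error signal."""
    posterior = []
    for hypothesis, prior in enumerate(belief):
        # If `hypothesis` were the preferred option, trying any other option
        # should trigger an error-related signal; the decoder is right with
        # probability decoder_accuracy.
        expects_error = tried != hypothesis
        agrees = expects_error == error_detected
        likelihood = decoder_accuracy if agrees else 1.0 - decoder_accuracy
        posterior.append(prior * likelihood)
    total = sum(posterior)
    return [p / total for p in posterior]


def learn_preference(options: Sequence[float],
                     error_signal: Callable[[float], bool],
                     decoder_accuracy: float = 0.9,
                     max_trials: int = 5) -> Tuple[float, List[float]]:
    """Try the currently most likely option until no error is signaled."""
    belief = [1.0 / len(options)] * len(options)
    attempts: List[float] = []
    for _ in range(max_trials):
        choice = max(range(len(options)), key=lambda i: belief[i])
        error = error_signal(options[choice])
        belief = update_belief(belief, choice, error, decoder_accuracy)
        attempts.append(options[choice])
        if not error:
            break
    best = max(range(len(options)), key=lambda i: belief[i])
    return options[best], attempts


# Hypothetical clearance margins (meters); the simulated user prefers 0.15 m.
margins_m = [0.05, 0.10, 0.15, 0.20]
preferred = 0.15
result, attempts = learn_preference(margins_m, lambda m: m != preferred)
print(result, attempts)
```

With a perfect simulated user, this toy example settles on the preferred margin after three attempts. A noisier decoder, or more options, would generally need more trials. The real system learns a richer model of the patient's intent than a single discrete choice.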

Next step: A mind-controlled wheelchair

The researchers hope to eventually use their algorithm to control wheelchairs. "For now there are still a lot of engineering hurdles to overcome," says Prof. Billard. "And wheelchairs pose an entirely new set of challenges, since both the patient and the robot are in motion." The team also plans to use their algorithm with a robot that can read several different kinds of signals and coordinate data received from the brain with those from visual motor functions.

More information: Iason Batzianoulis et al, Customizing skills for assistive robotic manipulators, an inverse reinforcement learning approach with error-related potentials, Communications Biology (2021). DOI: 10.1038/s42003-021-02891-8

Journal information: Communications Biology

Provided by Ecole Polytechnique Federale de Lausanne
