Rapid AI proliferation is a threat to democracy, experts say

Anna Tong and Sheila Dang

Wed, November 8, 2023

(Reuters) - The acceleration of artificial intelligence may already be disrupting democratic processes like elections and could even threaten human existence, AI experts warned at the Reuters NEXT conference in New York.

The explosion in recent months of generative AI - which can create text, photos and videos in response to open-ended prompts - has spurred both excitement about its potential and fears that it could make some jobs obsolete, upend elections and possibly even overpower humans.

“The biggest immediate risk is the threat to democracy… there are a lot of elections around the world in 2024, and the chance that none of them will be swung by deepfakes and things like that is almost zero,” Gary Marcus, a professor at New York University, said in a panel at the Reuters NEXT conference in New York on Wednesday.

One major concern is that generative AI has turbocharged deepfakes - realistic yet fabricated videos created by AI algorithms trained on copious online footage - which surface on social media, blurring fact and fiction in politics.

While such synthetic media has been around for several years, what used to cost millions could now cost $300, Marcus said.

Companies are increasingly using AI to make decisions, including about pricing, which could lead to discriminatory outcomes, experts warned at the conference.

Marta Tellado, CEO of the nonprofit Consumer Reports, said an investigation found that car owners who live in neighborhoods with a majority Black or brown population, and in close proximity to a neighborhood of mostly white residents, pay 30% higher car insurance premiums.

"There's no transparency to the consumer in any way," she said during a panel interview.

Another emerging threat that lawmakers and tech leaders must guard against is the possibility of AI becoming so powerful that it becomes a threat to humanity, Anthony Aguirre, founder and executive director of the Future of Life Institute, said in an interview at the conference.

“We should not underestimate how powerful these models are now and how rapidly they are going to get more powerful,” he said.

The Future of Life Institute, a nonprofit aimed at reducing catastrophic risks from advanced artificial intelligence, made headlines in March when it released an open letter calling for a six-month pause on the training of AI systems more powerful than OpenAI's GPT-4. It warned that AI labs have been "locked in an out-of-control race" to develop "powerful digital minds that no one – not even their creators – can understand, predict, or reliably control."

Developing ever-more powerful AI also risks eliminating jobs to the point where it may be impossible for humans to simply learn new skills and enter other industries, Aguirre said.

“Once that happens, I fear that it's not going to be so easy to go back to AI being a tool and AI as something that empowers people. And it's going to be more something that replaces people.”

(Reporting by Anna Tong in San Francisco; Editing by Lisa Shumaker)

Rachyl Jones

Wed, November 8, 2023

The warnings about artificial intelligence are everywhere: The technology will put workers out of jobs, spread inaccurate information, and expose corporations that use AI to myriad legal risks.

Despite all the noise, however, business leaders aren’t very concerned about AI, at least according to a new survey of 2,800 managers and executives.

Not only is AI not the top risk that they cited for their companies, it didn’t even make the top 20. AI ranked as the 49th biggest threat for businesses, according to the survey conducted by professional services firm Aon and published on Tuesday.

The No. 1 concern for business leaders is “cyber attack or data breach,” which also held the top spot the last time Aon conducted the survey, in 2021. Other top concerns included any kind of interruption in business operations, an economic slowdown, and the inability to attract and retain talent, according to participants, who spanned internal company roles, industries, and locations around the world.

The responses suggest a discrepancy in how those inside and outside the corporate world view AI risks. If there are 48 more pressing concerns for businesses, has the threat of AI been overhyped? Or are business leaders too laissez-faire about the new technology?

The survey participants might be getting it wrong, Aon said in its report. AI presents “a significant threat to organizations,” and it “should have been ranked higher by participants,” the company wrote.

Karim Lakhani, a Harvard Business School professor who focuses on the role of AI in business, agrees: “People are completely missing the boat on this,” he told Fortune. “It’s a risk in so many ways: risk of not doing enough in the space, or doing it but doing it poorly.”

“It’s shocking they placed it 49th,” Lakhani said. “For me, it’s top five or top three.”

Buzzword fatigue

Unlike other tech integrations, adopting AI can’t be outsourced; it must be handled at the executive level, Lakhani said. “You have to do the work yourself, and I don’t think the CEOs, the managers, and the leaders at many companies are putting in the work to know what the problems and opportunities are.”

The reason might lie in the fatigue business executives have felt from following tech trends in recent years, he said. Buzzwords have crowded the business world: blockchain, web3, cryptocurrency, metaverse, augmented reality, virtual reality, and now AI. Not all trends have lived up to the hype, with the crypto world darkened by the fraudulent activities of Sam Bankman-Fried and demand for viewing the world through a VR headset still uncertain. Having watched the slow uptake of technologies that were promised to be the future, business leaders may view AI as a problem to tackle tomorrow rather than today, even though it demands attention now, Lakhani said.

While survey participants see little threat from AI in the present, they did acknowledge its future implications. Business leaders expect AI to be the 17th biggest risk in the future, the results show, though they expect cyber attacks to remain No. 1.

Many business sectors may not yet have had enough exposure to AI to consider it a serious threat. Six industries that have invested in AI (professional services, financial institutions, insurance, technology, media and communications, and the public sector) all ranked AI in their top 10 risks for the future.

The big potatoes

Cyber attacks have consistently ranked as a major threat for businesses, and it’s clear why. By October 2023, the number of data compromises had already reached an all-time annual high, according to the Identity Theft Resource Center. The organization tracked 2,100 hacks impacting 234 million people in the first three quarters of this year. While there are more hacks, the number of victims is down: in 2018, more than 2.2 billion people were affected by breaches. The true number of yearly attacks and victims is likely much higher than reported, according to Fast Company, which shut down for eight days last year due to a hack.

Cyber breaches can halt revenue generation, damage the reputation of a company, and put individuals in danger. Last month, a hacker released data from genetic testing company 23andMe in a targeted attack against Ashkenazi Jews. The company is now facing a class action lawsuit over its security practices.

Part of what makes AI such a risk for companies is that it exacerbates the threat of cyber attacks, the UK government warned in an October report. AI systems allow perpetrators to carry out hacks faster, at larger scale, and with better results, it said. These new technologies not only improve old attack techniques, like sending a persuasive phishing email, but also create new ones, like cloning the voice of a loved one and asking for money over the phone. But AI will also play an increasing role in protecting companies from cyber attacks, according to the report.

“For the longest time, cyber was ignored by companies,” Lakhani said. “Over the past five years, it’s become a super important issue.” Boards are increasingly focused on appointing leaders with cybersecurity backgrounds, hiring crisis teams that include a ransom negotiator, and educating those at the highest levels of companies.

The irony is that “the same thing is happening with AI,” he told Fortune. “People are ignoring it, and what’s going to happen is that the same issues from the board level to the individual employee are going to come up. These companies aren’t paying enough attention to it.”

AI and ethics: Business leaders know it’s important, but concerns linger

John Kell

Wed, November 8, 2023

Fewer than five minutes into an interview, tech billionaire Tom Siebel begins to raise the alarm about artificial intelligence’s many risks.

“The way war is being reinvented, all of these new technologies are highly dependent on AI,” says Siebel, CEO and founder of enterprise AI software company C3.ai, in response to just the second question from Fortune.

The company provides AI applications to oil and gas companies ranging from Shell to Baker Hughes, as well as the U.S. defense and intelligence communities. But C3.ai won’t do business with nations that aren’t allies of democratic states, including China and Russia, because Siebel says “they will misuse these technologies in ways that we can’t possibly imagine.”

MIT Sloan Management Review and Boston Consulting Group recently assembled a panel of AI academics and practitioners and asked them to respond to a final statement: “As the business community becomes more aware of AI’s risks, companies are making adequate investments in responsible artificial intelligence.” Eleven of the 13 panelists were reluctant to agree.

AI is changing how humans work, socialize, create, and experience the world. But a lot can go wrong. Bias in AI occurs when a system makes decisions that are systematically unfair to certain groups of people; critics fear it can especially harm marginalized groups. Hallucinations occur when a model perceives patterns that are imperceptible to humans and generates inaccurate outputs. And drift occurs when large language models behave in unpredictable ways and require recalibration.

Americans are worried. A Pew Research Center survey this summer found that 52% were more concerned than excited about the increased use of AI. In the workplace, Americans oppose AI use in making final hiring decisions by a 71% to 7% margin. Women tend to view AI more negatively than men.

Yet it isn’t all doom and gloom. Half of organizations say risk factors are a critical consideration when evaluating new uses of AI. A large share of Americans do not believe the use of AI in the workplace will have a major impact on them personally.

“You should never have as a goal automating away a bunch of workers,” says Steve Mills, BCG’s chief AI ethics officer. “It is about how you pair people with AI to make their job better, allow them to do more, and take advantage of human creativity and ingenuity.”

AI works best when there’s human oversight. Many companies say their employees stringently review the AI models they create or use and that processes are put into place to ensure privacy and data security. Tech giants publicly share their responsible AI ethos to ease worries about the quickly evolving tech.

“I’m optimistic that AI will be a collaborative tool that workers will use,” says Todd Mobley, who represents employers in litigation for DLA Piper. “But it should be an ongoing, iterative process with discussions, training, and testing of the tools to ensure they are being used for the appropriate reasons. And that the tool is not creating unintended consequences.”

“We think AI can be very powerful for automating a lot of tasks, even automating decisions, but at some point you want a human in the loop to validate how decisions are being made,” says Rob Thomas, senior vice president of software and chief commercial officer at IBM.

Thomas stresses that there must be transparency about how AI is being built and where the data comes from. Regulation should oversee the use cases for AI but not the technology’s development, he says. And governance is critical to understanding how models are performing.

To that end, before the end of this year, IBM will make watsonx.governance available to help businesses monitor and manage their AI activities and employ software automation to mitigate risk, manage regulatory requirements, and address ethical concerns. “Our intent is to deliver software and capabilities that enables any company to deliver trust in AI,” says Thomas.

In September, German software giant SAP debuted a new generative AI copilot called Joule, which is being embedded in applications ranging from supply chain to finance to procurement. Users of some SAP products can ask a question or propose a problem and receive AI answers drawn from business data across SAP’s portfolio and third-party sources.

Thomas Saueressig, who runs SAP’s product engineering team and ethical AI development efforts, says it is critical to acknowledge that bias does exist in large language models and that SAP puts resources into mitigation efforts to ensure Joule’s prompts avoid bias. Saueressig says it is “absolutely essential” that AI development is human-centered. “We believe it is a duet, not a duel.”

Since the beginning of 2022, every SAP employee has signed the company’s AI ethics policy. “We have a very clear focus on the value of data protection and privacy,” says Saueressig.

Tony Habash thinks AI will drastically change how therapists practice psychology. As chief information officer at the American Psychological Association, Habash sees beneficial uses ranging from AI-powered note-taking, to providing treatment indicators for the therapist to use to improve care. There’s also potential for AI to advance medical research and make healthcare more accessible by lowering costs.

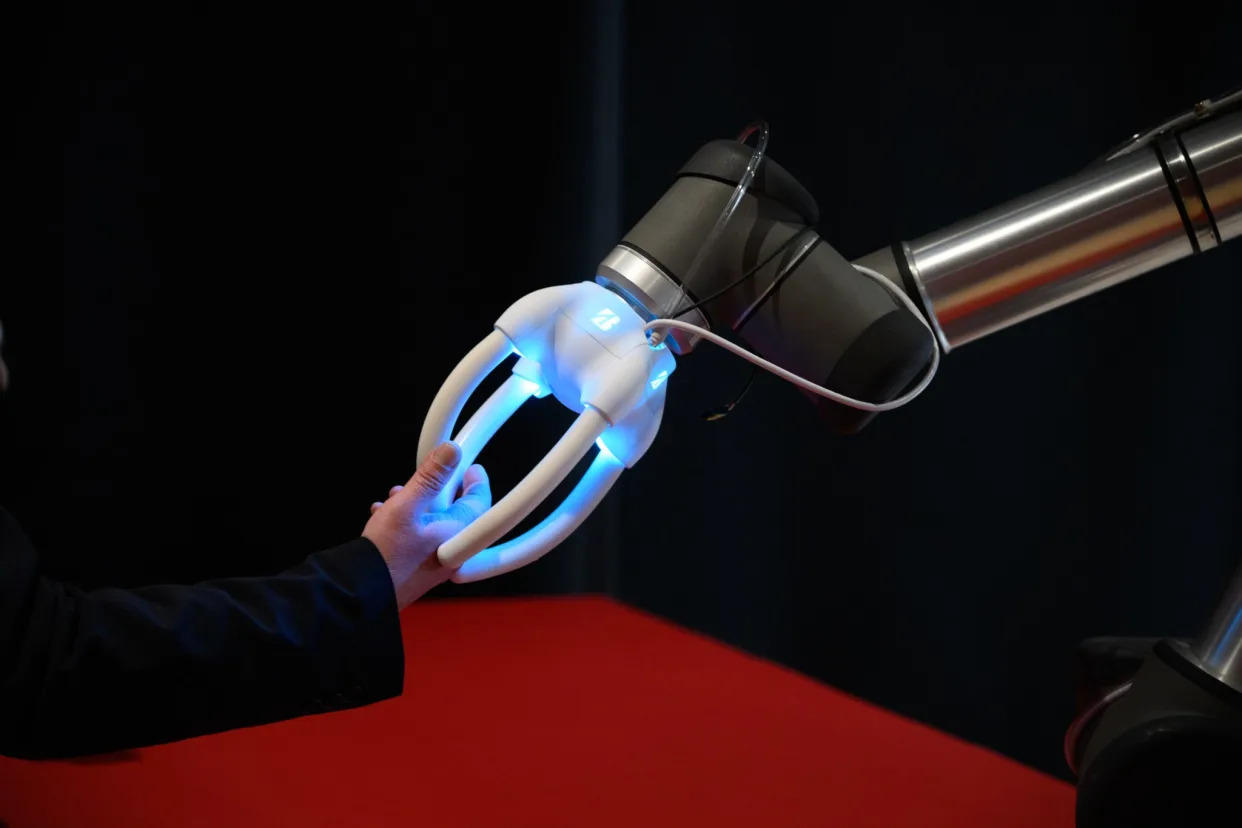

“We think the biggest change ahead of us is the human-machine relationship,” says Habash. Historically, humans had to learn programming languages, like Java, to tell a machine what to do. “And then we wake up and the machine is speaking our language with generative AI.” Habash says this raises ethical questions about how humans can create the confidence, best practices, and guidelines for the machine-human interaction.

“A clinician that is working with an AI system will need to clearly understand how it is working, what it is built for, and how they use AI to improve the quality of health care service and ensure the well-being of the patient,” says Sunil Senan, senior vice president and global head of data, analytics, and AI at Infosys.

There are ways AI can help humans behave more humanely. Take the example of Match Group’s “Are You Sure?” feature. Using AI, Match’s Tinder dating app is able to identify phrases that could be problematic and flag to the sender that the message they are about to send could be offensive. Similarly, “Does This Bother You?” is a prompt message receivers can use to flag harmful language to Match.

These features have reduced harassment on the app, and Match has expanded “Are You Sure?” to 18 languages. “The people who saw this prompt and changed their message were less likely to be reported,” says Rory Kozoll, senior vice president of central platform and technologies for Match Group. “These models are really impressive at their ability to understand nuance and language.”

When Microsoft’s venture capital fund M12 looks to invest, it looks for startups that can deploy technology responsibly. To that end, M12 invested in Inworld, which creates AI-powered virtual characters in role-playing games that aren’t controlled by humans. These virtual conversations could go off the rails and become toxic, but Inworld sets strict guardrails about what can be said, allowing it to work with family-friendly clients like Disney.

“I’m always going to feel better sleeping at night knowing that the companies we’ve invested in have a clear line of sight of the use of data, data that goes into the model, the data the model is trained on, and then the ultimate use of the model will abide by guidelines and guardrails that are legal and commercially sound,” says Michael Stewart, a partner at M12.

Ally Financial, the largest all-digital bank in the U.S., uses AI in underwriting and chatbots. When deploying AI, Ally experiments with internal customers and always has a human involved. Sathish Muthukrishnan, Ally Financial’s chief information, data, and digital officer, says the AI tech used in chatbots must talk exactly like an associate would engage with a customer.

“AI models are learning how to avoid bias, and it is our responsibility to teach that,” says Muthukrishnan.

Some AI startups are emerging that aim to establish more trust in the technology. In September, Armilla AI debuted warranty coverage for AI products with insurers like Swiss Re to give customers third-party verification that the AI they are using is fair and secure. “A model works really well because it is biased,” explains Karthik Ramakrishnan, cofounder and CEO of Armilla AI. Bias, he adds, isn’t a negative word, because AI must be trained on data to think a certain way.

“But what we are worried about is how the model treats different demographics and situations,” says Ramakrishnan.

Credo AI offers AI governance software that automates AI oversight, risk mitigation, and regulatory compliance. The software provides accountability and oversight across a client’s entire tech stack, helping define who reviews systems, whether there is an ethics board and who sits on it, and how systems are audited and approved.

“Enterprise leaders don’t even know where AI is actually used within their own organization,” says Credo AI CEO and founder Navrina Singh. “They have records of models but they don’t have records of applications.”

At C3.ai, Siebel remains worried about AI landing in the wrong hands. Privacy is his biggest concern. Siebel says society should agree that AI shouldn’t misuse private information, propagate social health hazards, interfere with democratic processes, or be used for sensitive military applications without civilian oversight. As for bias, that’s a more difficult nut to crack.

“We have hundreds of years of history in Western civilization,” says Siebel. “There’s nothing but bias. Be it gender, be it national origin, be it race. Tell me how we are going to fix that? It’s unfixable.”

This story was originally featured on Fortune.com