The Conversation

January 30, 2024

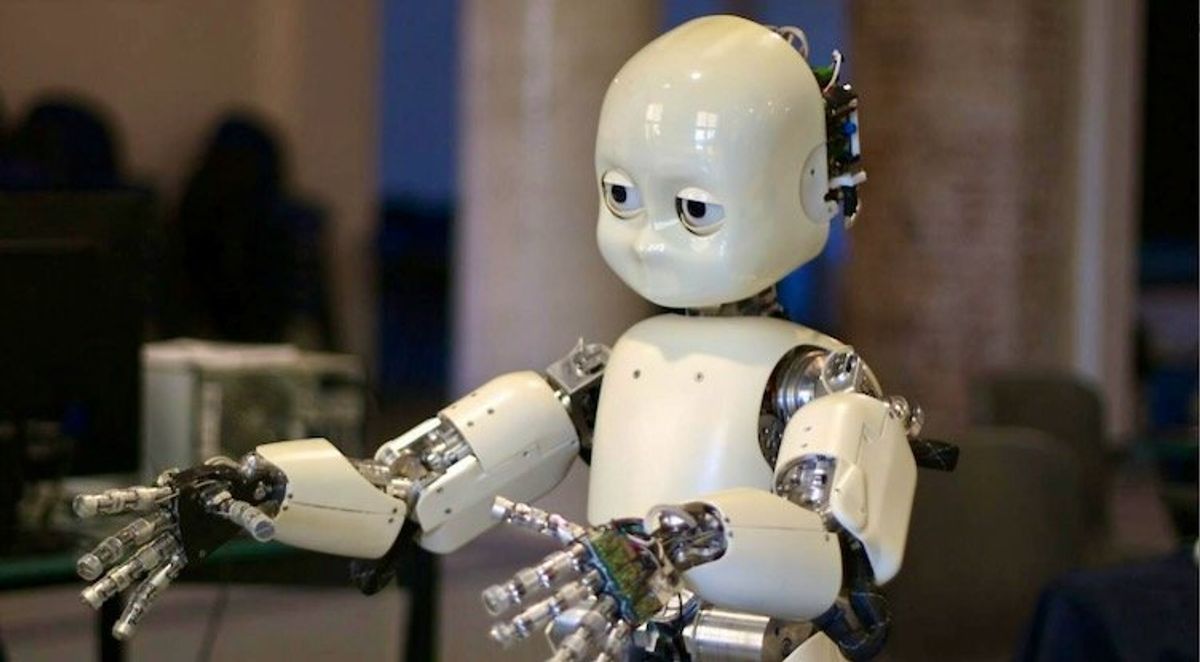

Robot (Jiuguang Wang/Flickr, CC BY-SA)

Problems of racial and gender bias in artificial intelligence algorithms and the data used to train large language models like ChatGPT have drawn the attention of researchers and generated headlines. But these problems also arise in social robots, which have physical bodies modeled on nonthreatening versions of humans or animals and are designed to interact with people.

Socially assistive robotics, a subfield of social robotics, aims to build robots that interact with ever more diverse groups of people. Its practitioners' noble intention is "to create machines that will best help people help themselves," writes one of its pioneers, Maja Matarić. The robots are already being used to help people on the autism spectrum, children with special needs and stroke patients who need physical rehabilitation.

But these robots do not look like people or interact with people in ways that reflect even basic aspects of society’s diversity. As a sociologist who studies human-robot interaction, I believe that this problem is only going to get worse. Rates of diagnoses for autism in children of color are now higher than for white kids in the U.S. Many of these children could end up interacting with white robots.

So, to adapt the famous Twitter hashtag around the Oscars in 2015, why #robotssowhite?

Why robots tend to be white

Given the diversity of people they will be exposed to, why does Kaspar, designed to interact with children with autism, have rubber skin that resembles a white person’s? Why are Nao, Pepper and iCub, robots used in schools and museums, clad with shiny, white plastic? In The Whiteness of AI, technology ethicist Stephen Cave and science communication researcher Kanta Dihal discuss racial bias in AI and robotics and note the preponderance of stock images online of robots with reflective white surfaces.

What is going on here?

One issue is what robots are already out there. Most robots are not developed from scratch but purchased by engineering labs for projects, adapted with custom software, and sometimes integrated with other technologies such as robot hands or skin. Robotics teams are therefore constrained by design choices that the original developers made (Aldebaran for Pepper, Italian Institute of Technology for iCub). These design choices tend to follow the clinical, clean look with shiny white plastic, similar to other technology products like the original iPod.

Kaspar is a robot designed to interact with children with autism.

In a paper I presented at the 2023 American Sociological Association meeting, I call this “the poverty of the engineered imaginary.”

How society imagines robots

In anthropologist Lucy Suchman's classic book on human-machine interaction, which was later updated with chapters on robotics, she discusses a "cultural imaginary" of what robots are supposed to look like. A cultural imaginary is the set of representations shared through texts, images and films that collectively shapes people's attitudes and perceptions. For robots, this cultural imaginary derives largely from science fiction.

This cultural imaginary can be contrasted with the more practical concerns of how computer science and engineering teams view robot bodies, what Neda Atanasoski and Kalindi Vora call the "engineered imaginary." This is a hotly contested area in feminist science studies. Jennifer Rhee's "The Robotic Imaginary" and Atanasoski and Vora's "Surrogate Humanity," for example, are critical of the gendered and racial assumptions that lead people to design service robots, which carry out mundane tasks, as female.

The cultural imaginary that enshrines robots as white, and in fact usually female, stretches back to European antiquity and was amplified by an explosion of novels and films at the height of industrial modernity. From Auguste Villiers de l'Isle-Adam's 1886 novel "The Future Eve," which popularized the word "android," to the introduction of the word "robot" in Karel Čapek's 1920 play "Rossum's Universal Robots," to the sexualized robot Maria in Thea von Harbou's 1925 novel "Metropolis" (the basis of her husband Fritz Lang's famous 1927 film of the same name), fictional robots were quick to be feminized and made servile.

Perhaps the prototype for this cultural imaginary lies in ancient Rome. A poem in Ovid's "Metamorphoses" (8 C.E.) describes a statue "of snow-white ivory" that its creator, Pygmalion, falls in love with; later retellings name her Galatea. Pygmalion prays to Venus (the Greek Aphrodite) that the statue come to life, and his wish is granted. There are numerous literary, poetic and film adaptations of the story, including Méliès' 1898 film, which featured one of cinema's first special effects. Paintings that depict this moment, for example by Raoux (1717), Regnault (1786) and Burne-Jones (1868-70 and 1878), accentuate the whiteness of Galatea's flesh.

The painting Pygmalion and Galatea by Jean-Léon Gérôme depicts an ancient Roman tale of a statue brought to life. Peter Roan/Flickr, CC BY-NC

Interdisciplinary route to diversity and inclusion

What can be done to counter this cultural legacy? After all, according to engineers Tahira Reid and James Gibert, all human-machine interaction should be designed with diversity and inclusion in mind. But outside of Japan's ethnically Japanese-looking robots, robots designed to look nonwhite are rare. And Japan's robots tend to follow the subservient female gender stereotype.

The solution is not simply to encase machines in brown or black plastic. The problem goes deeper. The Bina48 "custom character robot," modeled on the head and shoulders of Bina Aspen, a millionaire's African American wife, is notable, but its speech and interactions are limited. A series of conversations between Bina48 and the African American artist Stephanie Dinkins is the basis of a video installation.

The absurdity of talking about racism with a disembodied animated head becomes apparent in one such conversation – it literally has no personal experience to speak of, yet its AI-powered answers refer to an unnamed person’s experience of racism growing up. These are implanted memories, like the “memories” of the replicant androids in the “Blade Runner” movies.

Social science methods can help produce a more inclusive "engineered imaginary," as I discussed at Edinburgh's Being Human festival in November 2022. For example, working with Guy Hoffman, a roboticist at Cornell, and Caroline Yan Zheng, then a Ph.D. design student at the Royal College of Art, we invited contributions for a publication titled Critical Perspectives on Affective Embodied Interaction.

One of the persistent threads in that collaboration and other work is just how much people's bodies communicate to others through gesture and expression, as well as vocalization, and how this differs between cultures. Given that, making robots' appearance reflect the diversity of the people who benefit from their presence is one thing, but what about diversifying forms of interaction? Along with making robots less universally white and female, social scientists, interaction designers and engineers can work together to build more cross-cultural sensitivity into gestures and touch, for example.

Such work promises to make human-robot interaction less scary and uncanny, especially for people who need assistance from the new breeds of socially assistive robots.

Mark Paterson, Professor of Sociology, University of Pittsburgh

This article is republished from The Conversation under a Creative Commons license. Read the original article.