Forecasting El Niño with entropy—a year in advance

This would beat the six-month limit of current forecasts.

SCOTT K. JOHNSON - 12/28/2019, arstechnica.com

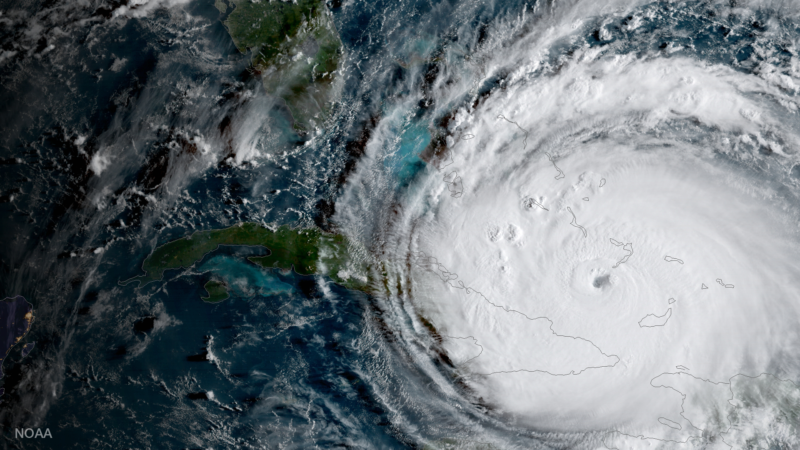

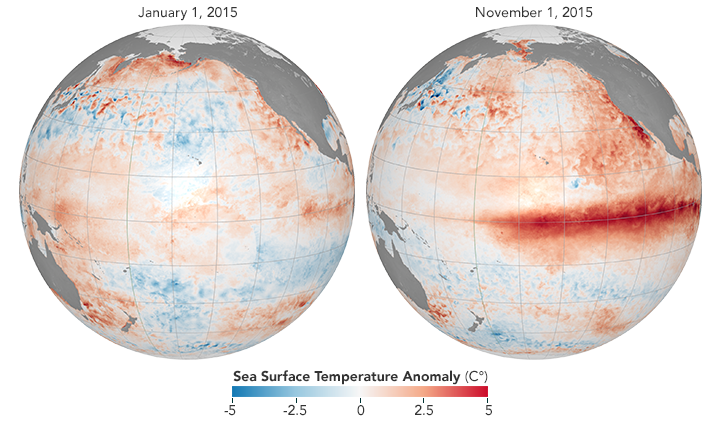

A strong El Niño developed in 2015, visible here from temperature departures from average.

We generally think of weather as something that changes by the day, or the week at the most. But there are also slower patterns that exist in the background, nudging your daily weather in one direction or another. One of the most consequential is the El Niño Southern Oscillation—a pattern of sea surface temperatures along the equatorial Pacific that affects temperature and precipitation averages in many places around the world.

In the El Niño phase of this oscillation, warm water from the western side of the Pacific leaks eastward toward South America, creating a broad belt of warm water at the surface. The opposite phase, known as La Niña, sees strong trade winds blow that warm water back to the west, pulling up cold water from the depths along South America. The Pacific randomly wobbles between these phases from one year to the next, peaking late in the calendar year.

Since this oscillation has such a meaningful impact on weather patterns—from heavy precipitation in California to drought in Australia—forecasting the wobble can provide useful seasonal outlooks. And because it changes fairly slowly, current forecasts are actually quite good out to about six months. It would be nice to extend that out further, but scientists have repeatedly run into what they've termed a “spring predictability barrier.” Until they see how the spring season plays out, the models have a hard time forecasting the rest of the year.

A new study led by Jun Meng, Jingfang Fan, and Josef Ludescher at the Potsdam Institute for Climate Impact Research showcases a creative new method that might hop that barrier.

This method doesn’t involve a better simulation model or some new source of data. Instead, it analyzes sea surface temperature data in a new way, generating a prediction of the strength of El Niño events a full year in advance. That analysis, borrowed from medical science, measures the degree of order or disorder (that is, entropy) in the data. It turns out that years with high disorder tend to precede strong El Niño events that peak a year later.

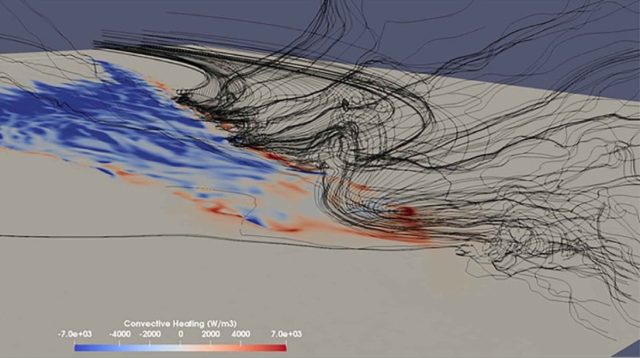

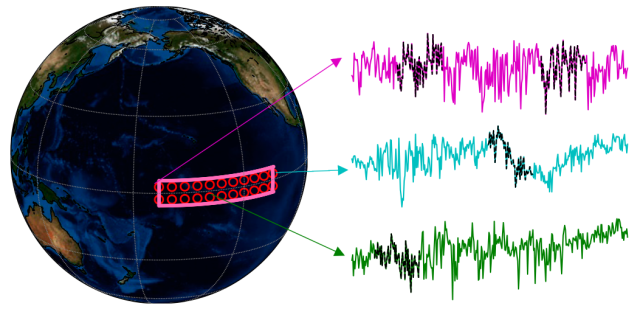

What does it mean for the data to be disorderly? Essentially, the analysis looks for signs that temperatures in different locations across the relevant portion of the Pacific are changing in sync with each other. The researchers broke the area into 22 grid boxes, comparing temperature in each box to the others for consistent patterns.

An example of temperature data from different grid boxes within the region used to measure the El Niño Southern Oscillation.

For a very simple example of how this works, they first tested the method on pairs of grid boxes: some pairs of neighboring boxes and some pairs from different parts of the world. Locations right next to each other tended to behave similarly, while distant locations experienced completely unrelated ups and downs.
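To make the idea concrete, here is a minimal Python sketch of that kind of sanity check, run on synthetic data. It uses cross-sample entropy, an established disorder measure from physiological signal analysis, as a stand-in. The article does not spell out the study's exact formula, so this should not be read as the researchers' method. The series `box_a`, `box_b`, and `far_box`, the template length `m`, and the tolerance `r` are all illustrative assumptions.

```python
import math
import random


def zscore(series):
    """Rescale a series to zero mean and unit standard deviation."""
    mean = sum(series) / len(series)
    std = math.sqrt(sum((x - mean) ** 2 for x in series) / len(series))
    return [(x - mean) / std for x in series]


def count_matches(u, v, length, n_templates, r):
    """Count template pairs (one from u, one from v) that stay within r."""
    count = 0
    for i in range(n_templates):
        for j in range(n_templates):
            if all(abs(u[i + k] - v[j + k]) <= r for k in range(length)):
                count += 1
    return count


def cross_sample_entropy(u, v, m=2, r=0.2):
    """Higher values mean the two series are less in sync (more disorder)."""
    u, v = zscore(u), zscore(v)
    n_templates = len(u) - m  # same template count for lengths m and m + 1
    b = count_matches(u, v, m, n_templates, r)
    a = count_matches(u, v, m + 1, n_templates, r)
    return -math.log(a / b)


# Synthetic stand-ins (assumptions, not real ocean data): two "neighboring"
# boxes share one slow signal, while the "distant" box is unrelated noise.
rng = random.Random(0)
n = 300
shared = [math.sin(2 * math.pi * t / 50) for t in range(n)]
box_a = [s + rng.gauss(0, 0.1) for s in shared]
box_b = [s + rng.gauss(0, 0.1) for s in shared]
far_box = [rng.gauss(0, 1) for _ in range(n)]

neighbor_entropy = cross_sample_entropy(box_a, box_b)
distant_entropy = cross_sample_entropy(box_a, far_box)
print(f"neighboring boxes: {neighbor_entropy:.2f}")
print(f"distant boxes:     {distant_entropy:.2f}")
```

With these made-up series, the neighboring pair should score lower (more orderly) than the distant pair, which is the behavior the test described above was looking for.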

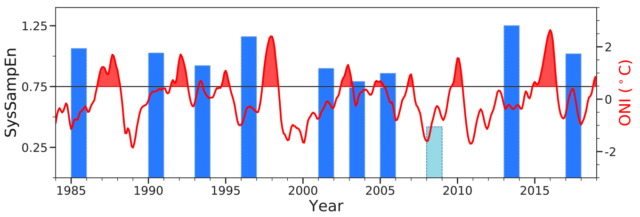

When they set this method loose on past Pacific temperature data going back to 1985, it worked surprisingly well. For the ten El Niño years in the dataset, their method indicated high disorder in the preceding year nine times, missing only one of them. And for the rest of the years in the dataset, it produced only three false positives, where it indicated a coming El Niño that never materialized.
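Those counts translate into two common forecast-verification scores. The short Python sketch below works through the arithmetic using only the numbers quoted above; the variable names are illustrative, and the number of correctly predicted quiet years is left out because the article does not give it.

```python
# Counts quoted in the article
hits = 9          # El Niño years preceded by a high-disorder signal
misses = 1        # El Niño year the method did not flag
false_alarms = 3  # flagged years with no El Niño

# Share of actual El Niño events that were flagged a year ahead
hit_rate = hits / (hits + misses)

# Share of all alarms that turned out to be wrong
false_alarm_ratio = false_alarms / (hits + false_alarms)

print(f"hit rate:          {hit_rate:.2f}")           # 0.90
print(f"false alarm ratio: {false_alarm_ratio:.2f}")  # 0.25
```

In other words, the method caught 90 percent of the events, and one in four of its alarms did not pan out.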

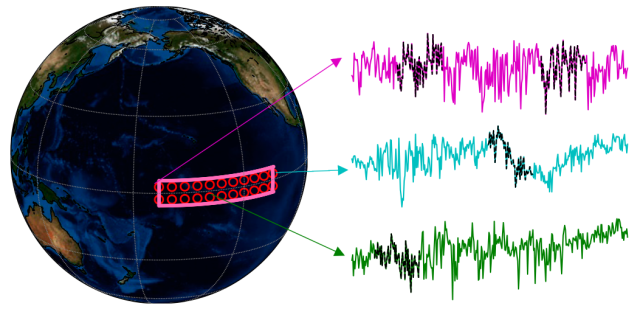

Forecasts of El Niño strength (blue bars) based on data in the year preceding actual El Niños (red).

What’s more, the degree of disorder correlated with the strength of the El Niño, allowing them to forecast the Pacific temperature to within a couple tenths of a degree Celsius. El Niño/La Niña is measured by the average temperature across that region of the Pacific, with anything at least 0.5°C above normal qualifying as an El Niño. Most recently, the researchers calculated a 2018 forecast using the 2017 temperature data. That forecast, calculated about 12 months ahead, comes in at +1.11°C (±0.23). The data show that 2018 actually hit about +0.9°C.
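As a quick check on those figures, the Python sketch below compares the 2018 forecast with the observed value and applies the 0.5°C threshold. The numbers come from the text above; the names and the helper `is_el_nino` are illustrative, and the observed value is only the approximate one quoted here.

```python
EL_NINO_THRESHOLD_C = 0.5  # anomaly that qualifies as El Niño

forecast_c = 1.11     # forecast for 2018, made from 2017 data
uncertainty_c = 0.23  # quoted plus-or-minus range
observed_c = 0.9      # approximate observed 2018 value


def is_el_nino(anomaly_c):
    """Return True if the temperature anomaly meets the El Niño threshold."""
    return anomaly_c >= EL_NINO_THRESHOLD_C


low = forecast_c - uncertainty_c
high = forecast_c + uncertainty_c
within_range = low <= observed_c <= high
error_c = abs(forecast_c - observed_c)

print(f"forecast range: {low:.2f} to {high:.2f} °C")
print(f"observed value inside range: {within_range}")
print(f"forecast error: {error_c:.2f} °C")
print(f"forecast says El Niño: {is_el_nino(forecast_c)}")
print(f"observed El Niño:      {is_el_nino(observed_c)}")
```

The observed value sits near the bottom of the forecast range, and both numbers clear the El Niño threshold.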

Statistics-based forecasts can be problematic, falling for meaningless correlations that have no physical basis and don’t hold up in the future. But in this case, the statistics don’t come from searching for correlations or fitting to existing data. It’s simply a real measurement that seems to pass the test pretty well. And there’s a plausible mechanism behind it, the researchers say.

Orderly temperature patterns could result from turbulent mixing of the ocean that helps temperature diffuse across the area. That is a common pattern during El Niño years, and it tends to see-saw. If temperatures are very orderly one year, they’re likely to become very disorderly the next, and vice versa. That sort of behavior has been noticed before, and this new method may be picking up on it.

If nothing else, efforts like this show the spring predictability barrier probably won’t stand forever. Seasonal weather outlooks might someday be a part of annual outlooks, though the task of forecasting next Tuesday’s weather will remain a separate endeavor.

PNAS, 2019. DOI: 10.1073/pnas.1917007117.