Hubble Spies a Stunning Spiral in Constellation Coma Berenices

Hubble Space Telescope’s Wide Field Camera 3 was used to capture this cosmic portrait that features a stunning view of the spiral galaxy NGC 4571, which lies approximately 60 million light-years from Earth in the constellation Coma Berenices. Credit: ESA/Hubble & NASA, J. Lee and the PHANGS-HST Team

This cosmic portrait, captured with the NASA/ESA Hubble Space Telescope’s Wide Field Camera 3, shows a stunning view of the spiral galaxy NGC 4571, which lies approximately 60 million light-years from Earth in the constellation Coma Berenices. The constellation’s name translates as Berenice’s Hair, after an Egyptian queen who lived more than 2200 years ago.

As majestic as spiral galaxies like NGC 4571 are, they are far from the largest structures known to astronomers. NGC 4571 is part of the Virgo cluster, which contains more than a thousand galaxies. This cluster is in turn part of the larger Virgo supercluster, which also encompasses the Local Group, home to our own galaxy, the Milky Way. Larger still are galaxy filaments, the largest known structures in the Universe.

This image comes from a large observing program designed to produce a treasure trove of combined observations from two great observatories: Hubble and ALMA. ALMA, the Atacama Large Millimeter/submillimeter Array, is a vast telescope consisting of 66 high-precision antennas in the Chilean Andes, which together observe at wavelengths between infrared and radio waves. This allows ALMA to detect the clouds of cool interstellar dust that give rise to new stars. Hubble’s razor-sharp observations at ultraviolet wavelengths, meanwhile, allow astronomers to pinpoint the locations of hot, luminous, newly formed stars. Together, the ALMA and Hubble observations provide a vital repository of data for astronomers studying star formation and lay the groundwork for future science with the NASA/ESA/CSA James Webb Space Telescope.

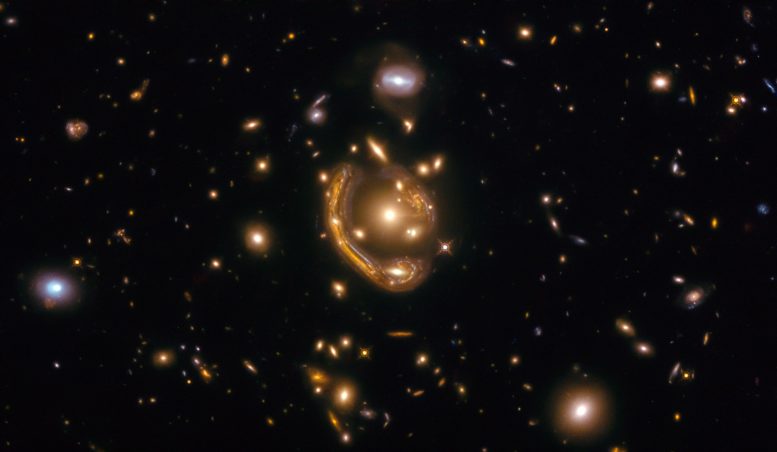

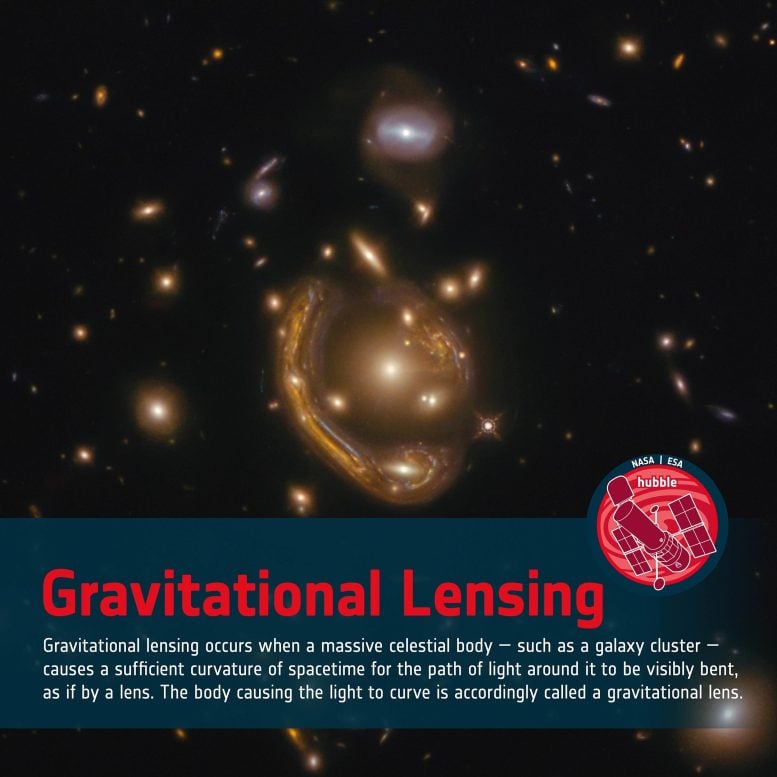

Astronomy & Astrophysics 101: Gravitational Lensing

NASA/ESA Hubble Space Telescope image of GAL-CLUS-022058s, located in the southern hemisphere constellation of Fornax (The Furnace). Credit: ESA/Hubble & NASA, S. Jha, Acknowledgement: L. Shatz

Gravitational lensing occurs when a massive celestial body, such as a galaxy cluster, curves spacetime enough that the path of light passing near it is visibly bent, as if by a lens. The body that bends the light is accordingly called a gravitational lens.

According to Einstein’s general theory of relativity, time and space are fused together into a single continuum known as spacetime. Within this theory, massive objects cause spacetime to curve, and gravity is simply the curvature of spacetime. Because light travels through spacetime, the theory predicts that its path will also be curved by an object’s mass. Gravitational lensing is a dramatic, observable example of Einstein’s theory in action. Extremely massive celestial bodies such as galaxy clusters curve spacetime significantly; in other words, they act as gravitational lenses. When light from a more distant source passes by a gravitational lens, its path is bent, and a distorted image of the distant object, sometimes a ring or halo of light around the lens, can be observed.
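For readers who want a number to go with the idea: in the weak-field limit, general relativity predicts that light passing a compact mass M at a closest distance b is deflected by an angle α = 4GM/(c²b). The short Python sketch below evaluates that textbook formula for starlight grazing the Sun, which gives the famous value of about 1.75 arcseconds. It treats the lens as a point mass; the constant values and the function name are illustrative choices for this example.

```python
import math

# Physical constants in SI units (rounded values, chosen for this sketch)
G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8          # speed of light, m/s
RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0

def deflection_angle(mass_kg: float, impact_parameter_m: float) -> float:
    """Weak-field deflection angle (radians) of light passing a point mass.

    Implements alpha = 4 G M / (c^2 b); valid only when the bend is small.
    """
    return 4.0 * G * mass_kg / (C ** 2 * impact_parameter_m)

# Example: starlight grazing the edge of the Sun
M_SUN = 1.989e30     # kg
R_SUN = 6.957e8      # m
alpha = deflection_angle(M_SUN, R_SUN)
print(f"Deflection at the solar limb: {alpha * RAD_TO_ARCSEC:.2f} arcsec")
```

The angle grows with the lens mass and shrinks with distance from it, which is why only enormous masses such as galaxy clusters bend light enough to produce the arcs and rings Hubble sees.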

Credit: ESA/Hubble (M. Kornmesser & L. L. Christensen)

An important consequence of this lensing distortion is magnification, which lets us observe objects that would otherwise be too distant and too faint to be seen. Hubble uses this magnification effect to study objects beyond the sensitivity of its 2.4-meter-diameter primary mirror, thereby revealing some of the most distant galaxies humanity has ever observed.
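How much brighter can a lensed source appear? For the simplest case, a single point-mass lens, the standard result for the combined magnification of both images is μ = (u² + 2) / (u√(u² + 4)), where u is the source’s angular offset from the lens, measured in units of the lens’s Einstein radius. Real galaxy clusters are not point masses, so this Python sketch only illustrates the trend: the closer the alignment, the larger the boost. The function name is hypothetical.

```python
import math

def point_lens_magnification(u: float) -> float:
    """Total magnification of both images produced by a point-mass lens.

    u: offset of the source from the lens axis, in units of the Einstein
    radius. Must be positive; perfect alignment (u = 0) formally diverges.
    """
    if u <= 0:
        raise ValueError("u must be positive")
    return (u * u + 2.0) / (u * math.sqrt(u * u + 4.0))

# Magnification rises steeply as the alignment improves (u shrinks)
for u in (2.0, 1.0, 0.5, 0.1):
    print(f"u = {u:>4}: magnification = {point_lens_magnification(u):.2f}")
```

Far from alignment the magnification approaches 1 (no boost), while near-perfect alignment can brighten a background galaxy many times over.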

This Space Sparks episode explores the concept of gravitational lensing. The effect is visible only in rare cases, and only the best telescopes, including the NASA/ESA Hubble Space Telescope, can observe its results. The strong gravity of a massive object, such as a cluster of galaxies, warps the surrounding space, and light from distant objects traveling through that warped space is curved away from its straight-line path. This video highlights how Hubble’s sensitivity and high resolution allow it to see details in these faint, distorted images of distant galaxies.

Hubble’s sensitivity and high resolution allow it to see faint and distant gravitational lenses that cannot be detected with ground-based telescopes, whose images are blurred by Earth’s atmosphere. Gravitational lensing can produce multiple images of the original galaxy, each distorted into a characteristic arc or even a ring. Hubble was the first telescope to resolve details within these arc-shaped features, and its sharp vision can directly reveal the shape and internal structure of the lensed background galaxies.

An image of the object known as GAL-CLUS-022058s, released in 2020 as part of the ESA/Hubble Picture of the Week series, revealed the largest ring-shaped lensed image of a galaxy (known as an Einstein ring) ever discovered, and also one of the most complete. The near-exact alignment of the background galaxy with the central elliptical galaxy of the cluster warped and magnified the image of the background galaxy into an almost perfect ring.
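The size of such a ring is set by the lens’s Einstein radius. For a point-mass lens, θ_E = √[(4GM/c²) · D_LS / (D_L · D_S)], where D_L, D_S and D_LS are the angular-diameter distances to the lens, to the source, and from lens to source. The Python sketch below evaluates this formula; the mass and distances in the example are made-up illustrative inputs, not measurements of GAL-CLUS-022058s, and the function and constant names are hypothetical. Note that on cosmological scales D_LS is generally not equal to D_S − D_L, so the three distances are passed in separately.

```python
import math

G = 6.674e-11              # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8                # speed of light, m/s
M_SUN = 1.989e30           # solar mass, kg
GPC = 3.0857e25            # one gigaparsec, in metres
RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0

def einstein_radius_arcsec(mass_kg, d_lens_m, d_source_m, d_lens_source_m):
    """Einstein radius of a point-mass lens, in arcseconds.

    All three distances are angular-diameter distances in metres.
    """
    theta_rad = math.sqrt(
        4.0 * G * mass_kg / C ** 2 * d_lens_source_m / (d_lens_m * d_source_m)
    )
    return theta_rad * RAD_TO_ARCSEC

# Hypothetical cluster-scale lens: illustrative inputs only
theta = einstein_radius_arcsec(1e14 * M_SUN, 1.0 * GPC, 2.0 * GPC, 1.0 * GPC)
print(f"Einstein radius: about {theta:.1f} arcsec")
```

Because θ_E grows only as the square root of the mass, a lens must be enormously massive to produce a ring wide enough to resolve clearly.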


A block with a carved foot found on the edge of Motya’s sacred pool probably was part of a statue of a Phoenician god that originally stood on a pedestal at the pool’s center, researchers say. L. NIGRO/ANTIQUITY 2022
