When walked on, these wooden floors harvest enough energy to turn on a lightbulb

Researchers from Switzerland are tapping into an unexpected energy source right under our feet: wooden flooring. Their nanogenerator, presented September 1 in the journal Matter, enables wood to generate energy from our footfalls. They also improved the wood used in their nanogenerator with a combination of a silicone coating and embedded nanocrystals, resulting in a device that generated 80 times more electricity than natural wood, enough to power LED lightbulbs and small electronics.

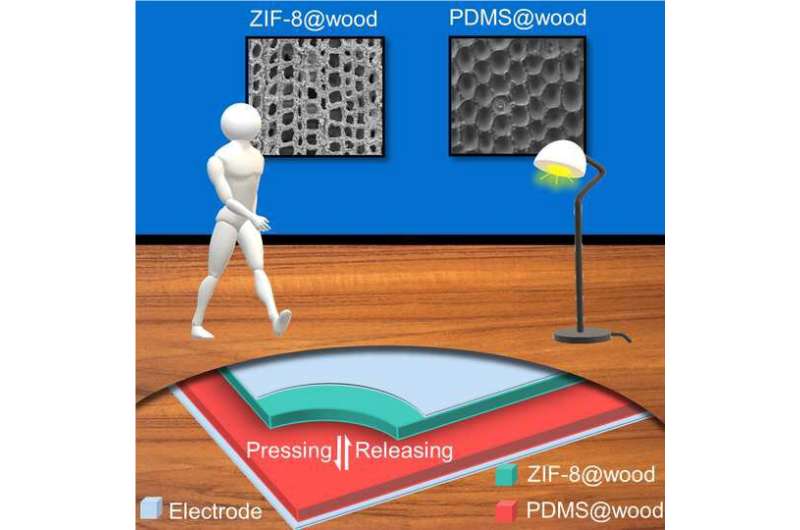

The team began by transforming wood into a nanogenerator by sandwiching two pieces of functionalized wood between electrodes. Like a shirt-clinging sock fresh out of the dryer, the wood pieces become electrically charged through periodic contacts and separations when stepped on, a phenomenon called the triboelectric effect. The electrons can transfer from one object to another, generating electricity. However, there's one problem with making a nanogenerator out of wood.

"Wood is basically triboneutral," says senior author Guido Panzarasa, group leader in the professorship of Wood Materials Science located at Eidgenössische Technische Hochschule (ETH) Zürich and Swiss Federal Laboratories for Materials Science and Technology (Empa) Dübendorf. "It means that wood has no real tendency to acquire or to lose electrons." This limits the material's ability to generate electricity, "so the challenge is making wood that is able to attract and lose electrons," Panzarasa explains.

To boost wood's triboelectric properties, the scientists coated one piece of the wood with polydimethylsiloxane (PDMS), a silicone that gains electrons upon contact, while functionalizing the other piece of wood with in-situ-grown nanocrystals called zeolitic imidazolate framework-8 (ZIF-8). ZIF-8, a hybrid network of metal ions and organic molecules, has a higher tendency to lose electrons. They also tested different types of wood to determine whether certain species or the direction in which wood is cut could influence its triboelectric properties by serving as a better scaffold for the coating.

The researchers found that a triboelectric nanogenerator made with radially cut spruce, a common wood for construction in Europe, performed best. Together, the treatments boosted the triboelectric nanogenerator's performance: it generated 80 times more electricity than natural wood. The device's electricity output was also stable under steady forces for up to 1,500 cycles.

"Our focus was to demonstrate the possibility of modifying wood with relatively environmentally friendly procedures to make it triboelectric," says Panzarasa. "Spruce is cheap and available and has favorable mechanical properties. The functionalization approach is quite simple, and it can be scalable on an industrial level. It's only a matter of engineering."

The researchers found that a wood floor prototype with a surface area slightly smaller than a piece of paper can produce enough energy to drive household LED lamps and small electronic devices such as calculators. They successfully lit up a lightbulb with the prototype when a human adult walked upon it, turning footsteps into electricity.

Besides being efficient, sustainable, and scalable, the newly developed nanogenerator also preserves the features that make the wood useful for interior design, including its mechanical robustness and warm colors. The researchers say that these features might help promote the use of wood nanogenerators as green energy sources in smart buildings. They also say that wood construction could help mitigate climate change by sequestering CO2 from the environment throughout the material's lifespan.

The next step for Panzarasa and his team is to further optimize the nanogenerator with chemical coatings that are more eco-friendly and easier to implement. "Even though we initially focused on basic research, eventually, the research that we do should lead to applications in the real world," says Panzarasa. "The ultimate goal is to understand the potentialities of wood beyond those already known and to enable wood with new properties for future sustainable smart buildings."