Three Myths About Renewable Energy and the Grid, Debunked

Renewable energy skeptics argue that because of their variability, wind and solar cannot be the foundation of a dependable electricity grid. But the expansion of renewables and new methods of energy management and storage can lead to a grid that is reliable and clean.

BY AMORY B. LOVINS AND M. V. RAMANA

Published in Yale Environment 360 (E360), Yale School of the Environment

As wind and solar power have become dramatically cheaper, and their share of electricity generation has grown, skeptics of these technologies have been propagating several myths about renewable energy and the electrical grid. The myths boil down to this: Relying on renewable sources of energy will make the electricity supply undependable.

Last summer, some commentators argued that blackouts in California were due to the “intermittency” of renewable energy sources, when in fact the chief causes were a combination of an extreme heat wave probably induced by climate change, faulty planning, and the lack of flexible generation sources and sufficient electricity storage. During a brutal Texas cold snap last winter, Gov. Greg Abbott wrongly blamed wind and solar power for the state’s massive grid failure, which was vastly larger than California’s. In fact, renewables outperformed the grid operator’s forecast during 90 percent of the blackout, and during the rest of it fell short by at most one-fifteenth as much as gas plants did. Other causes, such as inadequately weatherized power plants and natural gas supplies shut down by frozen equipment, led to most of the state’s electricity shortages.

In Europe, the usual target is Germany, in part because of its Energiewende (energy transformation) policies, which shift the country from fossil fuels and nuclear energy to efficient use and renewables. The newly elected German government plans to accelerate the phaseout of fossil fuels and complete the phaseout of nuclear power, but some critics have warned that Germany is running “up against the limits of renewables.”

In reality, it is entirely possible to sustain a reliable electricity system based on renewable energy sources plus a combination of other means, including improved methods of energy management and storage. A clearer understanding of how to dependably manage electricity supply is vital because climate threats require a rapid shift to renewable sources like solar and wind power. This transition has been sped by plummeting costs (Bloomberg New Energy Finance estimates that solar and wind are the cheapest source for 91 percent of the world’s electricity), but it is being held back by misinformation and myths.

Myth No. 1: A grid that increasingly relies on renewable energy is an unreliable grid.

Going by the cliché, “In God we trust; all others bring data,” it’s worth looking at the statistics on grid reliability in countries with high levels of renewables. The indicator most often used to describe grid reliability is the total duration of power outages experienced by the average customer in a year, a metric known by the tongue-tying name of “System Average Interruption Duration Index” (SAIDI). Based on this metric, Germany, where renewables supply nearly half of the country’s electricity, boasts a grid that is one of the most reliable in Europe and the world. In 2020, SAIDI was just 0.25 hours in Germany. Only Liechtenstein (0.08 hours) and Finland and Switzerland (0.2 hours each) did better in Europe, where 2020 electricity generation was 38 percent renewable (ahead of the world’s 29 percent). Countries like France (0.35 hours) and Sweden (0.61 hours), both far more reliant on nuclear power, did worse, for various reasons.
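SAIDI is simple to compute, and the arithmetic shows why it measures the total time an average customer spends without power rather than the length of a typical outage: it divides by every customer served, including those who never lost power. The Python sketch below uses a hypothetical record format (`Interruption`) and invented outage events chosen to land on Germany’s 2020 figure of 0.25 hours. Real reporting rules, such as which very short or extreme-event outages are excluded, vary by country and are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Interruption:
    """One sustained outage (hypothetical record format)."""
    customers_affected: int
    duration_hours: float


def saidi(interruptions: list[Interruption], customers_served: int) -> float:
    """System Average Interruption Duration Index, in hours per customer per year.

    Total customer-hours of interruption divided by ALL customers served,
    including those who never lost power.
    """
    if customers_served <= 0:
        raise ValueError("customers_served must be positive")
    customer_hours = sum(i.customers_affected * i.duration_hours for i in interruptions)
    return customer_hours / customers_served


# Invented example: a utility serving 1,000,000 customers, three outages in one year.
events = [
    Interruption(customers_affected=50_000, duration_hours=2.0),    # 100,000 customer-hours
    Interruption(customers_affected=10_000, duration_hours=8.0),    # 80,000 customer-hours
    Interruption(customers_affected=200_000, duration_hours=0.35),  # 70,000 customer-hours
]
print(f"SAIDI = {saidi(events, customers_served=1_000_000):.2f} hours")
```

The 250,000 customer-hours spread over a million customers give 0.25 hours, or 15 minutes, even though the individual outages lasted between about 20 minutes and 8 hours.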

The United States, where renewable energy and nuclear power each provide roughly 20 percent of electricity, had five times Germany’s outage rate — 1.28 hours in 2020. Since 2006, Germany’s renewable share of electricity generation has nearly quadrupled, while its power outage rate was nearly halved. Similarly, the Texas grid became more stable as its wind capacity sextupled from 2007 to 2020. Today, Texas generates more wind power — about a fifth of its total electricity — than any other state in the U.S.

Myth No. 2: Countries like Germany must continue to rely on fossil fuels to stabilize the grid and back up variable wind and solar power.

Again, the official data say otherwise. Between 2010 (the year before the Fukushima nuclear accident in Japan) and 2020, Germany’s generation from fossil fuels declined by 130.9 terawatt-hours and nuclear generation by 76.3 terawatt-hours. These declines were offset by increased generation from renewables (149.5 terawatt-hours) and by energy savings, which had cut consumption by 38 terawatt-hours by 2019, even before the pandemic reduced economic activity and demand further in 2020. By 2020, Germany’s greenhouse gas emissions had fallen 42.3 percent below their 1990 levels, beating the target of 40 percent set in 2007. Carbon dioxide emissions from the power sector alone declined from 315 million tons in 2010 to 185 million tons in 2020.

So as the percentage of electricity generated by renewables in Germany steadily grew, its grid reliability improved, and its coal burning and greenhouse gas emissions substantially decreased.

In Japan, following the multiple reactor meltdowns at Fukushima, more than 40 nuclear reactors closed permanently or indefinitely without materially raising fossil-fueled generation or greenhouse gas emissions; electricity savings and renewable energy offset virtually the whole loss, despite policies that suppressed renewables.

Myth No. 3: Because solar and wind energy can be generated only when the sun is shining or the wind is blowing, they cannot be the basis of a grid that has to provide electricity 24/7, year-round.

While variable output is a challenge, it is neither new nor especially hard to manage. No kind of power plant runs 24/7, 365 days a year, and operating a grid has always meant managing constantly varying demand. Even without solar and wind power (which tend to produce most at different times of day and in different seasons, making simultaneous shortfalls less likely), all electricity supply varies.

Seasonal variations in water availability and, increasingly, drought reduce electricity output from hydroelectric dams. Nuclear plants must be shut down for refueling or maintenance, and big fossil and nuclear plants are typically out of action roughly 7 percent to 12 percent of the time, some much more. A coal plant’s fuel supply might be interrupted by the derailment of a train or failure of a bridge. A nuclear plant or fleet might unexpectedly have to be shut down for safety reasons, as was Japan’s biggest plant from 2007 to 2009. In 2019, French nuclear plants were shut down for an average of 96.2 days each due to “planned” or “forced unavailability.” That rose to 115.5 days in 2020, when French nuclear plants generated less than 65 percent of the electricity they theoretically could have produced. Comparing expected with actual performance, one might even say that nuclear power was France’s most intermittent source of electricity in 2020.
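The French outage figures translate into availability shares with a few lines of arithmetic. This Python sketch uses only the numbers quoted above, treats 2020 as a 366-day leap year, and converts the 7 to 12 percent outage range for big thermal plants into days per year. The function name is illustrative. These are time-based shares, so they measure something different from the output-based figure of less than 65 percent: a plant that is online may still run below full power.

```python
def offline_share(days_offline: float, days_in_year: int = 365) -> float:
    """Fraction of the year a plant was not available to generate."""
    return days_offline / days_in_year


# Average French nuclear outage days quoted in the text (2020 was a leap year).
for year, days_off, days_in_year in [(2019, 96.2, 365), (2020, 115.5, 366)]:
    share = offline_share(days_off, days_in_year)
    print(f"{year}: offline {share:.1%}, available {1 - share:.1%}")

# The 7 to 12 percent outage range for big thermal plants, expressed in days per year.
for fraction in (0.07, 0.12):
    print(f"{fraction:.0%} of a year is about {fraction * 365:.0f} days")
```

Offline time works out to about 26.4 percent of 2019 and 31.6 percent of 2020. The typical thermal-plant range corresponds to roughly 26 to 44 days a year.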

Climate- and weather-related factors have caused multiple nuclear plant interruptions, which have become seven times more frequent in the past decade. Even normally steady nuclear output can fail abruptly and lastingly, as in Japan after the Fukushima disaster, or in the northeastern U.S. after the 2003 regional blackout, which triggered abrupt shutdowns that caused nine reactors to produce almost no power for several days and take nearly two weeks to return to full output.

The Bungala Solar Farm in South Australia, where the grid has run almost exclusively on renewables for days on end.

Thus all sources of power will be unavailable at some time or other. Managing a grid has to deal with that reality, just as much as with fluctuating demand. The influx of larger amounts of renewable energy does not change that reality, even if the ways of dealing with variability and uncertainty are changing. Modern grid operators emphasize diversity and flexibility rather than nominally steady but less flexible “baseload” generation sources. Diversified renewable portfolios don’t fail as massively, lastingly, or unpredictably as big thermal power stations.

The purpose of an electric grid is not just to transmit and distribute electricity as demand fluctuates, but also to back up non-functional plants with working plants: that is, to manage the intermittency of traditional fossil and nuclear plants. In the same way, but more easily and often at lower cost, the grid can rapidly back up the predictable variations of wind and solar photovoltaics with other renewables, of other kinds or in other places or both. This has become easier with today’s far more accurate forecasting of weather and wind speeds, which allows better prediction of the output of variable renewables. Local or onsite renewables are even more resilient because they largely or wholly bypass the grid, where nearly all power failures begin. And modern power electronics have reliably run the billion-watt South Australian grid on just sun and wind for days on end, with no coal, no hydro, no nuclear, and at most the 4.4-percent natural-gas generation currently required by the grid regulator.

Most discussions of renewables focus on batteries and other electric storage technologies to mitigate variability. This is not surprising, because batteries are rapidly becoming cheaper and more widely deployed. At the same time, new storage technologies with diverse attributes continue to emerge; the U.S. Department of Energy Global Energy Storage Database lists 30 kinds already deployed or under construction. Meanwhile, many other, less expensive carbon-free ways exist to deal with variable renewables besides giant batteries.


The first and foremost is energy efficiency, which reduces demand, especially during periods of peak use. Buildings that are more efficient need less heating or cooling and change their temperature more slowly, so they can coast longer on their own thermal capacity and thus sustain comfort with less energy, especially during peak-load periods.
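A building “coasting” on its thermal capacity can be illustrated with a simple first-order (RC) model. In this model, indoor temperature relaxes exponentially toward the outdoor temperature with a time constant τ equal to thermal resistance times thermal capacitance. Better insulation raises the resistance and so lengthens τ. The function below and its time constants are hypothetical illustrations, not measurements, and the model ignores solar and internal heat gains.

```python
import math


def coast_hours(tau_hours: float, t_start: float, t_limit: float, t_outdoor: float) -> float:
    """Hours for indoor temperature to drift from t_start to t_limit with HVAC off.

    First-order (RC) model: T(t) = t_outdoor + (t_start - t_outdoor) * exp(-t / tau),
    where tau = thermal resistance * thermal capacitance. Solving T(t) = t_limit gives
    t = tau * ln((t_start - t_outdoor) / (t_limit - t_outdoor)).
    Solar and internal heat gains are ignored.
    """
    if tau_hours <= 0 or t_start == t_outdoor:
        raise ValueError("need tau_hours > 0 and t_start != t_outdoor")
    ratio = (t_limit - t_outdoor) / (t_start - t_outdoor)
    if not 0 < ratio <= 1:
        raise ValueError("t_limit must lie between t_start and t_outdoor")
    return tau_hours * math.log(1 / ratio)


# Hypothetical hot afternoon: 35 °C outside, 22 °C inside, 26 °C comfort limit.
for tau in (10, 30):  # leaky vs. well-insulated building (illustrative time constants)
    print(f"tau = {tau} h: coasts about {coast_hours(tau, 22, 26, 35):.1f} h")
```

Under these assumptions, a building with τ = 10 hours stays within the comfort limit for about 3.7 hours with its air conditioning off, while one with τ = 30 hours lasts about 11 hours. That difference is what lets an efficient building ride through a peak-load period.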

A second option is demand flexibility or demand response, wherein utilities compensate electricity customers who lower their use when asked (often automatically and imperceptibly), helping balance supply and demand. One recent study found that the U.S. has 200 gigawatts of cost-effective load flexibility potential that could be realized by 2030 if effective demand response is actively pursued. Indeed, the biggest lesson from recent shortages in California might be a greater appreciation of the need for demand response. Following the challenges of the past two summers, the California Public Utilities Commission has instituted the Emergency Load Reduction Program to build on earlier demand response efforts.

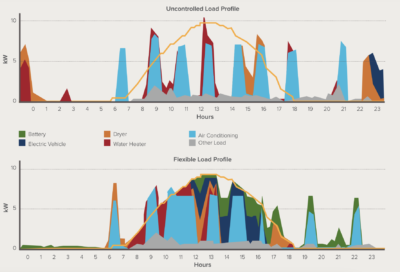

Some evidence suggests an even larger potential: An hourly simulation of the 2050 Texas grid found that eight types of demand response could eliminate the steep ramp of early-evening power demand as solar output wanes and household loads spike. For example, currently available ice-storage technology freezes water using lower-cost electricity and cooler air, usually at night, and then uses the ice to cool buildings during hot days. This reduces electricity demand from air conditioning, and saves money, partly because storage capacity for heating or cooling is far cheaper than storing electricity to deliver them. Likewise, without changing driving patterns, many electric vehicles can be intelligently charged when electricity is more abundant, affordable, and renewable.

The top graph shows daily solar power output (yellow line) and demand from various household uses. The bottom graph shows how to align demand with supply, running devices in the middle of the day when solar output is highest. ROCKY MOUNTAIN INSTITUTE
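The alignment shown in the figure can be sketched as a simple greedy scheduler. It ranks the hours of the day by how much solar output exceeds fixed demand, then places deferrable energy use into the best hours first. This is a hypothetical Python illustration with an invented one-day profile and an illustrative function name. It is not Rocky Mountain Institute’s method, and real demand-response programs also weigh prices, customer preferences, and equipment limits.

```python
def schedule_flexible_load(solar, fixed_load, flexible_kwh, max_kwh_per_hour):
    """Greedy sketch: put deferrable energy use into the largest solar-surplus hours first.

    solar, fixed_load: hourly energy (kWh) for one day, same length.
    flexible_kwh: deferrable use to place (e.g. water heating, EV charging).
    max_kwh_per_hour: most flexible energy that can run in any single hour.
    Returns the hourly schedule of flexible use.
    """
    if len(solar) != len(fixed_load):
        raise ValueError("solar and fixed_load must cover the same hours")
    schedule = [0.0] * len(solar)
    remaining = flexible_kwh
    # Rank hours by solar surplus (solar minus fixed demand), largest first.
    ranked = sorted(range(len(solar)), key=lambda h: solar[h] - fixed_load[h], reverse=True)
    for h in ranked:
        if remaining <= 0:
            break
        schedule[h] = min(max_kwh_per_hour, remaining)
        remaining -= schedule[h]
    if remaining > 1e-9:
        raise ValueError("not enough hours to place all flexible use")
    return schedule


# Invented one-day household profile (hour 0 = midnight).
solar = [0.0] * 6 + [0.2, 0.8, 1.6, 2.4, 3.0, 3.3, 3.3, 3.0, 2.4, 1.6, 0.8, 0.2] + [0.0] * 6
fixed_load = [0.5] * 17 + [1.5, 2.0, 2.0, 1.5] + [0.8, 0.6, 0.5]  # evening peak at hours 17-20

plan = schedule_flexible_load(solar, fixed_load, flexible_kwh=6.0, max_kwh_per_hour=2.0)
print("flexible use runs in hours:", [h for h, kwh in enumerate(plan) if kwh > 0])
```

With 6 kWh of flexible use and at most 2 kWh per hour, the sketch places all of it in hours 10 through 12, the midday peak of the invented solar profile. None of it lands in the evening peak at hours 17 through 20.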

A third option for stabilizing the grid as renewable energy generation increases is diversity, both of geography and of technology: onshore wind, offshore wind, solar panels, solar thermal power, geothermal, hydropower, and burning municipal, industrial, or agricultural wastes. The idea is simple: If one of these sources, at one location, is not generating electricity at a given time, odds are that some others will be.
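The diversity argument is, at bottom, a probability statement. If sources failed independently, the chance that all of them are unproductive in the same hour would be the product of their individual chances, which shrinks quickly as sources are added. Independence is a strong assumption here. Real wind and solar output is partly correlated across nearby sites and similar technologies, so this Python sketch likely overstates the benefit. The 30 percent figure and the function name are hypothetical.

```python
import math


def prob_all_unavailable(p_unavailable: list[float]) -> float:
    """Chance that every source is unproductive in the same hour.

    ASSUMPTION: sources are statistically independent. Real wind and solar output
    is partly correlated, so the true chance is likely higher than this product.
    """
    if any(not 0.0 <= p <= 1.0 for p in p_unavailable):
        raise ValueError("each probability must be between 0 and 1")
    return math.prod(p_unavailable)


# Hypothetical: each source/location is unproductive in a given hour 30% of the time.
for n in (1, 2, 3, 5):
    p_all = prob_all_unavailable([0.3] * n)
    print(f"{n} source(s): all down {p_all:.2%}, at least one producing {1 - p_all:.2%}")
```

Under the independence assumption, the chance that everything is down at once falls from 30 percent for one source to 2.7 percent for three and about 0.24 percent for five.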

Finally, some forms of storage, such as electric vehicle batteries, are already economical. Simulations show that ice-storage air conditioning in buildings, plus smart charging of electric cars (which are parked 96 percent of the time) both from and back to the grid, could enable Texas in 2050 to use 100 percent renewable electricity without needing giant batteries.

To pick a much tougher case, the “dark doldrums” of European winters are often claimed to need many months of battery storage for an all-renewable electrical grid. Yet top German and Belgian grid operators find Europe would need only one to two weeks of renewably derived backup fuel, providing just 6 percent of winter output — not a huge challenge.

The bottom line is simple. Electrical grids can deal with much larger fractions of renewable energy at zero or modest cost, and this has been known for quite a while. Some European countries with little or no hydropower already get about half to three-fourths of their electricity from renewables with grid reliability better than in the U.S. It is time to get past the myths.

Amory B. Lovins is an adjunct professor of civil and environmental engineering at Stanford University, and co-founder and chairman emeritus of Rocky Mountain Institute.
