The Jasons: The Secret History of Science's Postwar Elite, ANN FINKBEINER, Viking, New York, 2006. $27.95 (304 pp.). ISBN 0-670-03489-4

Physics Today 59, 10, 63 (2006); https://doi.org/10.1063/1.238708

Ann Finkbeiner’s The Jasons: The Secret History of Science’s Postwar Elite is an excellent book. It thoughtfully recounts the history of the JASON group and also identifies the conflict of values that physicists still face when they become involved in national security affairs while trying to preserve their independence. The subtitle of the book, however, is misleading: The author relates a great deal of detailed information without breaking any “secrets.” JASON members are generally reluctant to talk about their association and work with the group, and the majority of JASON reports are classified. Finkbeiner recounts some of the critical events without completing the story, but she does so more out of deference to the wishes of the people she interviewed for the book than out of concern for secrecy.

During World War II, US physicists demonstrated that they could get things done if they were well supported but not directed by the federal government. Their accomplishments included developments in nuclear energy and weapons, radar, and rockets. When the war ended, most of the physicists involved in the war effort returned to their academic pursuits. In an unorganized way, several of them continued to consult with government or industry on military matters, and some rotated between academia and industry. After Sputnik 1 was launched in 1957, the government raised science advice to the presidential level and made a commitment to revitalize American science. Physicists themselves, mainly under the leadership of John A. Wheeler, attempted to create a full-time organization for military research, but their efforts inspired little enthusiasm.

Separately, a small group, principally Marvin “Murph” Goldberger, Ken Watson, and Keith Brueckner, proposed to establish the JASON group to pursue national security work compatible with full-time academic duties. The name JASON, inspired by the Greek mythological hero who led the Argonauts in the search for the Golden Fleece, was suggested by Goldberger’s wife. The group was supported by Charles Townes, who at the time headed the Institute for Defense Analyses. In turn, IDA was supported by the Advanced Research Projects Agency (ARPA), which was under the leadership of Herbert York and reported to the newly created Directorate of Defense Research and Engineering (DDR&E) at the Pentagon. The entire lineup of JASON and the research agencies was populated by physicists who had been colleagues on the Manhattan Project. The contractor handling the administrative work, such as travel, security, and financial matters, shifted from IDA to SRI International to the MITRE Corporation.

JASON members have had a love–hate relationship with ARPA and its successor, the Defense Advanced Research Projects Agency (DARPA). Incoming heads of the agency generally resented the independence of JASON, but Finkbeiner reports that after some experience, each director recognized that the value generated by the JASONs exceeded their “nuisance.” The matter came to a climax under the administration of President George W. Bush, when in 2001 the incoming head of DARPA, Anthony Tether, demanded that JASON add to its ranks two nonacademics and one academic prescribed by the administration. Traditionally, members had been selected by the JASON steering committee; thus the JASONs objected to the administration’s plans, and DARPA cancelled their contract. However, the contract was later reinstated by DDR&E through a process not recounted by the author in polite deference to the administration.

During its history JASON made many technical contributions to military research, in addition to serving as a reviewer of frequently dubious initiatives from the military establishment. It made the concept of adaptive optics practical by proposing the use of a laser-generated, artificial guide star. JASON also showed that ceasing nuclear tests of any yield would harm security no more than permitting very small, undetectable nuclear explosions would. The work of JASON has since diversified to include engineering, oceanography, climatology, computer and information sciences, and, more recently, biological issues with security implications.

The most controversial JASON contribution, developed during the Vietnam War, was the electronic barrier, whose origin and final demise are ably described in Finkbeiner’s book. The barrier required US sensors to be airdropped along potential infiltration routes. The sensors were meant to detect infiltrators on those routes so that the US could bomb them, thus discouraging North Vietnamese troops from moving along the Ho Chi Minh Trail. A JASON study had shown that the bombing campaigns in Vietnam were ineffective, and the barrier was intended to decrease the level of violence, but it was never fully deployed. The instruments were instead diverted by the US Air Force to relieve the siege of Khe Sanh and to assist the continued bombing campaign. The role of the JASONs in originating the electronic barrier became public and, notwithstanding their constructive intent, led to extensive protests against JASON members on their home campuses, some of which were quite ugly.

The episode illustrates the tensions inherent in having academic physicists engage in military research. I believe that most JASON members, like most of their fellow academics, favor arms control at heart and strive for a de-emphasis of violence as a means of settling international conflicts. At the same time, they make their skills available to the military establishment as independent scientists, maintaining, with merit, that objective analyses will bring more rationality to the military arena. The military is well aware of the basic outlook of most JASONs but appreciates their talents and objectivity.

To demonstrate JASON’s dilemma, Finkbeiner cites the well-known anecdote about three people, one of whom is a physicist, sentenced to death by guillotine. During the first two attempted executions, the blade gets stuck, and the two are freed. But the physicist takes a look at the guillotine and says, “I think I can tell you what’s wrong with it.” The correspondence to JASON’s activities may not be too remote.

Today, independent scientific advice on national security has largely been eliminated in the top levels of government. Thus the independence of outsiders who operate on the inside, like the JASONs, is a unique asset today in the national security arena. This fact is duly noted and documented in Finkbeiner's very readable book.



By Ben Sullivan


Jan 19 2017, 10:45am

Elite Scientists Have Told the Pentagon That AI Won't Threaten Humanity

JASON advisory group says Elon Musk’s singularity warnings are unfounded, but a focus on AI for the Dept. of Defense is integral.

A new report authored by a group of independent US scientists advising the US Dept. of Defense (DoD) on artificial intelligence (AI) claims that perceived existential threats to humanity posed by the technology, such as drones seen by the public as killer robots, are at best "uninformed".

Still, the scientists acknowledge that AI will be integral to most future DoD systems and platforms, but AI that could act like a human "is at most a small part of AI's relevance to the DoD mission". Instead, a key application area of AI for the DoD is in augmenting human performance.

Perspectives on Research in Artificial Intelligence and Artificial General Intelligence Relevant to DoD, first reported by Steven Aftergood at the Federation of American Scientists, was researched and written by scientists belonging to JASON, the historically secretive organization that counsels the US government on scientific matters.

Outlining the potential use cases of AI for the DoD, the JASON scientists make sure to point out that the growing public suspicion of AI is "not always based on fact", especially when it comes to military technologies. Highlighting SpaceX boss Elon Musk's opinion that AI "is our biggest existential threat" as an example of this, the report argues that these purported threats "do not align with the most rapidly advancing current research directions of AI as a field, but rather spring from dire predictions about one small area of research within AI, Artificial General Intelligence (AGI)".

A USAF Global Hawk UAV rests in its hangar on the flightline of this desert base. Image: Tech. Sgt. Mike Hammond/U.S. Air Force

AGI, as the report describes, is the pursuit of developing machines that are capable of long-term decision making and intent, i.e. thinking and acting like a real human. "On account of this specific goal, AGI has high visibility, disproportionate to its size or present level of success," the researchers say.

Motherboard reached out to the MITRE Corporation, the non-profit organisation through which JASON's reports are run, as well as to Richard Potember, a scientist listed on the report, but neither responded to emails before this article was published. A spokesperson for the Defense Department told Motherboard in an email, "DoD relies on the technical insights provided by the JASONs to complement DoD's internal assessments as we set our strategic direction. All reports and recommendations are read and carefully considered in this context as we make investment decisions for research initiatives and future programs of record."

In an email on Thursday, Aftergood told Motherboard, "JASON reports are purely advisory. They do not set policy or determine DoD choices. On the other hand, they are highly valued, very informative and often influential. The reports are prepared only because DoD asks for them and is prepared to pay for them."

Aftergood said that JASON reports act as a "reality check" for Pentagon officials, helping them decide what's real and what's in the realm of possibility.

In recent years, the idea that artificial intelligence harbors malicious intent has flourished in the media, compounded by much more realistic fears of entire employment sectors being replaced by robots. The issue is not helped by the conflation of robotics and AI by some media outlets and even politicians, as illustrated last week by a debate among members of the European Parliament on whether robots should attain legal status as persons.

Highly publicized recent AI victories against humans, such as Google's AlphaGo win, don't illustrate any breakthrough in general machine cognition, the report argues. Instead, these wins rely on Deep Learning processes on Deep Neural Networks (DNNs), which can be trained to generate an appropriate output in response to an input. Think of a dog that sits on command, rather than a dog that decides to sit on its own.


Andrew Owen Martin, senior technical analyst at the Tungsten Network, a collaborative team consisting of math, AI, and computer science experts, and secretary of The Society for the Study of Artificial Intelligence and Simulation of Behaviour (AISB), agrees with the JASON report, arguing that the public's and other high-profile technologists' fear of existential threats is overblown.

"Any part of human experience that's at all interesting is too poorly defined to be described in either of the two main methods AI researchers have," he tells Motherboard. "The implication here is that the two main approaches to AI are nothing like how humans must live and learn, and hence there's no reason to assume they will ever achieve what human learning can."

Nevertheless, AGI is recognised by the JASON scientists as being somewhat pertinent in the DoD's future, but only if it were to make substantial progress. "That AI and—if it were to advance significantly—AGI are of importance to DoD is so self-evident that it needs little elucidation here," reads the report. "Weapons systems and platforms with varying degrees of autonomy exist today in all domains of modern warfare, including air, sea (surface and underwater), and ground."

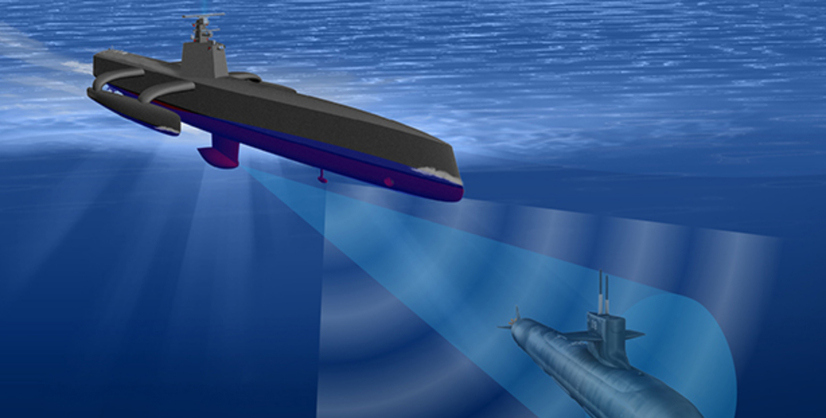

Northrop Grumman's X-47B uncrewed bomber is given as one example, and DARPA's ACTUV submarine hunter as another. Systems like these could no doubt be improved by enhanced artificial intelligence, but the scientists note that while these systems have some degree of autonomy, they are in "no sense a step…towards 'autonomy' in the sense of AGI". Instead, AI is used to augment human operators, for example by flying to pre-determined locations without a human piloting the system.

DARPA's ACTUV uncrewed submarine tracker. Image: DARPA

Yet, while not categorically autonomous, AI-augmented weapon systems are still obvious pain points for opponents of their use in military scenarios. Max Tegmark, cosmologist and co-founder of the Future of Life Institute, a think tank established to support research into safeguarding the future of human life, tells Motherboard that he agrees with JASON's view that existential threats are unlikely in the near term. However, Tegmark believes the more imminent issue of autonomous weapons is "crucial".

"All responsible nations will be better off if an international treaty can prevent an arms race in lethal autonomous weapons, which would ultimately proliferate and empower terrorists and other unscrupulous non-state actors," he says.

So human-like autonomy is still a long way off, if not impossible, and the goalposts keep moving too, according to JASON. "The boundary between existing AI and hoped-for AGI keeps being shifted by AI successes, and will continue to be," say the scientists. Even military applications such as self-driving tanks may be at least a decade off. Discussing the progress of self-driving cars by civilian companies such as Google, the JASON scientists conclude, "going down this path will require at least a decade of challenging work. The work on self-driving cars has consumed substantial resources. After millions of hours of on-road experiments and training, performance is only now becoming acceptable in benign environments. Acceptability here refers to civilian standards of safety and trust; for military use the standards might be somewhat laxer, but the performance requirements would likely be tougher."

This article must end, however, with an important caveat: the entire JASON report is based on unclassified research. "The study looks at AI research at the '6.1' level (that is, unclassified basic research)," say the scientists. "We were not briefed on any DoD developmental efforts or programs of record. All briefings to JASON were unclassified, and were largely from the academic community."

Could America's military be working on top-secret artificial general intelligence programs years ahead of those known in the public sphere? Probably not, according to Martin. "AGI isn't around the corner, it's not even possible. I don't mean that it's 'too difficult' like 'man will never fly' or 'man will never land on the moon', I'm saying it's hopelessly misguided like 'man will never dig a tunnel to the moon'."

Update 01/23/17: This article has been updated to include a statement from the Department of Defense.

ALSO SEE

JASONS PART I

THE MILITARY INDUSTRIAL COMPLEX (MIC) AND THE PERMANENT ARMS ECONOMY TODAY
