By Rich Stanton

PC GAMER

'Tech otakus save the world' is the company motto, after all.

Developer miHoYo has been around since 2012, but 2020's Genshin Impact was its first global success: the game remains enormously popular, and in its first year it made over $2 billion from the mobile version alone. The company has quite a charming motto (Tech otakus save the world) and, with this big ol' bunch of money burning a hole in its metaphorical pocket, has decided to have a go at living up to those words.

miHoYo recently led a funding round alongside NIO Capital, a Chinese investment firm, and a total of $63 million will be invested in a company called Energy Singularity (as per Beijing's PanDaily). The funds will be used for R&D of a "small tokamak experimental device based on high temperature superconducting material, and advanced magnet systems that can be used for the next generation of high-performance fusion devices."

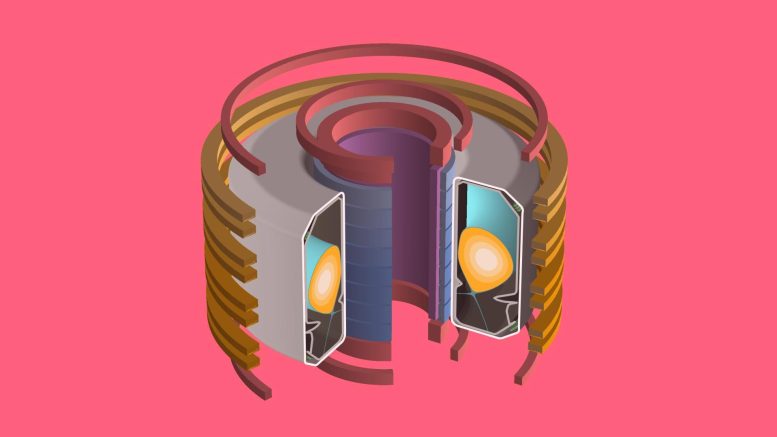

What's a tokamak to you? A tokamak is a reactor design for nuclear fusion in which plasma is confined by magnetic fields in a donut shape: a torus. It is considered, by people who know about these things, to be the most realistic and achievable nuclear fusion design.

The long-and-short of it is that nuclear fusion (where two atomic nuclei combine to create a heavier nucleus, releasing energy) will in theory have huge advantages over nuclear fission (where a nucleus is split). However, despite being theorised about and researched since the 1940s, no working fusion power plant has ever been built. If it can be done, this technology could change everything about global energy supplies and become a major tool in fighting climate change (fusion even produces less waste than fission).

So, perhaps in 100 years they'll be writing textbooks about how thirsty weebs inadvertently saved the planet by buying bunny costumes.

miHoYo's not just into nuclear fusion: last year it funded a lab studying brain-computer interface technologies, and how they could possibly be used to treat depression.

Yes, that name does sound a bit like a boss fight. Genshin Impact, meanwhile, continues to receive regular updates, with version 2.5 arriving just under a month ago and driving fans bonkers with its new characters.

Rich Stanton

Rich is a games journalist with 15 years' experience, beginning his career on Edge magazine before working for a wide range of outlets, including Ars Technica, Eurogamer, GamesRadar+, Gamespot, the Guardian, IGN, the New Statesman, Polygon, and Vice. He was the editor of Kotaku UK, the UK arm of Kotaku, for three years before joining PC Gamer. He is the author of A Brief History of Video Games, a full history of the medium, which the Midwest Book Review described as "[a] must-read for serious minded game historians and curious video game connoisseurs alike."

Swiss Plasma Center and DeepMind Use AI To Control Plasmas for Nuclear Fusion

Scientists at EPFL’s Swiss Plasma Center and DeepMind have jointly developed a new method for controlling plasma configurations for use in nuclear fusion research.

EPFL’s Swiss Plasma Center (SPC) has decades of experience in plasma physics and plasma control methods. DeepMind is a scientific discovery company acquired by Google in 2014 that’s committed to ‘solving intelligence to advance science and humanity’. Together, they have developed a new magnetic control method for plasmas based on deep reinforcement learning, and applied it to a real-world plasma for the first time in the SPC’s tokamak research facility, TCV. Their study has just been published in Nature.

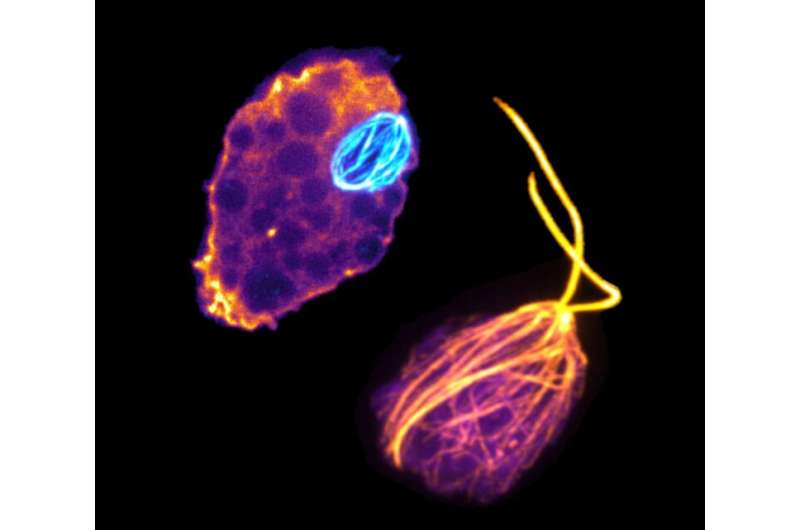

Tokamaks are donut-shaped devices for conducting research on nuclear fusion, and the SPC is one of the few research centers in the world that has one in operation. These devices use a powerful magnetic field to confine plasma at extremely high temperatures – hundreds of millions of degrees Celsius, even hotter than the sun’s core – so that nuclear fusion can occur between hydrogen atoms. The energy released from fusion is being studied for use in generating electricity. What makes the SPC’s tokamak unique is that it allows for a variety of plasma configurations, hence its name: variable-configuration tokamak (TCV). That means scientists can use it to investigate new approaches for confining and controlling plasmas. A plasma’s configuration relates to its shape and position in the device.

The controller trained with deep reinforcement learning steers the plasma through multiple phases of an experiment. On the left is a view inside the tokamak during the experiment. On the right, you can see the reconstructed plasma shape and the target points we wanted to hit. Credit: DeepMind & SPC/EPFL

Controlling a substance as hot as the Sun

Tokamaks form and maintain plasmas through a series of magnetic coils whose settings, especially voltage, must be controlled carefully. Otherwise, the plasma could collide with the vessel walls and deteriorate. To prevent this from happening, researchers at the SPC first test their control-system configurations on a simulator before using them in the TCV tokamak. “Our simulator is based on more than 20 years of research and is updated continuously,” says Federico Felici, an SPC scientist and co-author of the study. “But even so, lengthy calculations are still needed to determine the right value for each variable in the control system. That’s where our joint research project with DeepMind comes in.”

3D model of the TCV vacuum vessel containing the plasma, surrounded by various magnetic coils to keep the plasma in place and to affect its shape. Credit: DeepMind & SPC/EPFL

DeepMind’s experts developed an AI algorithm that can create and maintain specific plasma configurations, and trained it on the SPC’s simulator. This involved first having the algorithm try many different control strategies in simulation, running through a range of settings and analyzing the plasma configuration that resulted from each one, in order to gather experience. Based on that experience, the algorithm was then called on to work the other way: to produce a requested plasma configuration by identifying the right settings. After being trained, the AI-based system was able to create and maintain a wide range of plasma shapes and advanced configurations, including one where two separate plasmas are maintained simultaneously in the vessel. Finally, the research team tested their new system directly on the tokamak to see how it would perform under real-world conditions.
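The "gather experience in simulation, then act on it" loop described above is the core idea of reinforcement learning. The sketch below illustrates that idea with tabular Q-learning on a deliberately tiny, hypothetical stand-in for a plasma simulator: the plasma's position is a single integer offset from its target, and each action nudges it by one step. This is not DeepMind's method, which used deep neural networks trained against the SPC's detailed physics simulator; the environment, reward, and hyperparameters here are all assumptions chosen for illustration.

```python
import random

# Hypothetical toy environment standing in for a plasma simulator:
# the state is an integer offset of the plasma from its target position (0).
MAX_OFFSET = 5
ACTIONS = (-1, 0, 1)  # nudge the plasma one way, leave it, or nudge it the other way
STATES = range(-MAX_OFFSET, MAX_OFFSET + 1)


def step(offset, action):
    """Apply one action in the toy simulator; return (next_offset, reward)."""
    next_offset = max(-MAX_OFFSET, min(MAX_OFFSET, offset + action))
    return next_offset, -abs(next_offset)  # penalize distance from the target


def train(episodes=2000, steps=20, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: try actions in simulation and learn from the results."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(episodes):
        offset = rng.randint(-MAX_OFFSET, MAX_OFFSET)
        for _ in range(steps):
            # Epsilon-greedy: usually exploit current estimates, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(offset, a)])
            next_offset, reward = step(offset, action)
            best_next = max(q[(next_offset, a)] for a in ACTIONS)
            # Move the estimate toward reward + discounted best future value.
            q[(offset, action)] += alpha * (reward + gamma * best_next - q[(offset, action)])
            offset = next_offset
    return q


def greedy_policy(q):
    """The learned control strategy: the highest-valued action in every state."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}


policy = greedy_policy(train())
print(policy)
```

After training, the greedy policy pushes the offset back toward zero from either side and holds it there once it arrives, which is the toy analogue of "produce the requested configuration by identifying the right settings."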

Range of different plasma shapes generated with the reinforcement learning controller. Credit: DeepMind & SPC/EPFL

The SPC’s collaboration with DeepMind dates back to 2018 when Felici first met DeepMind scientists at a hackathon at the company’s London headquarters. There he explained his research group’s tokamak magnetic-control problem. “DeepMind was immediately interested in the prospect of testing their AI technology in a field such as nuclear fusion, and especially on a real-world system like a tokamak,” says Felici. Martin Riedmiller, control team lead at DeepMind and co-author of the study, adds that “our team’s mission is to research a new generation of AI systems – closed-loop controllers – that can learn in complex dynamic environments completely from scratch. Controlling a fusion plasma in the real world offers fantastic, albeit extremely challenging and complex, opportunities.”

A win-win collaboration

After speaking with Felici, DeepMind offered to work with the SPC to develop an AI-based control system for its tokamak. “We agreed to the idea right away, because we saw the huge potential for innovation,” says Ambrogio Fasoli, the director of the SPC and a co-author of the study. “All the DeepMind scientists we worked with were highly enthusiastic and knew a lot about implementing AI in control systems.” For his part, Felici was impressed with the amazing things DeepMind can do in a short time when it focuses its efforts on a given project.

DeepMind also got a lot out of the joint research project, illustrating the benefits to both parties of taking a multidisciplinary approach. Brendan Tracey, a senior research engineer at DeepMind and co-author of the study, says: “The collaboration with the SPC pushes us to improve our reinforcement learning algorithms, and as a result can accelerate research on fusing plasmas.”

This project should pave the way for EPFL to seek out other joint R&D opportunities with outside organizations. “We’re always open to innovative win-win collaborations where we can share ideas and explore new perspectives, thereby speeding the pace of technological development,” says Fasoli.

Reference: “Magnetic control of tokamak plasmas through deep reinforcement learning” by Jonas Degrave, Federico Felici, Jonas Buchli, Michael Neunert, Brendan Tracey, Francesco Carpanese, Timo Ewalds, Roland Hafner, Abbas Abdolmaleki, Diego de las Casas, Craig Donner, Leslie Fritz, Cristian Galperti, Andrea Huber, James Keeling, Maria Tsimpoukelli, Jackie Kay, Antoine Merle, Jean-Marc Moret, Seb Noury, Federico Pesamosca, David Pfau, Olivier Sauter, Cristian Sommariva, Stefano Coda, Basil Duval, Ambrogio Fasoli, Pushmeet Kohli, Koray Kavukcuoglu, Demis Hassabis and Martin Riedmiller, 16 February 2022, Nature.

DOI: 10.1038/s41586-021-04301-9

Lattice confinement fusion eliminates massive magnets and powerful lasers

Bayarbadrakh Baramsai, Theresa Benyo, Lawrence Forsley, Bruce Steinetz

27 FEB 2022

EDMON DE HARO

Physicists first suspected more than a century ago that the fusing of hydrogen into helium powers the sun. It took researchers many years to unravel the secrets by which lighter elements are smashed together into heavier ones inside stars, releasing energy in the process. And scientists and engineers have continued to study the sun’s fusion process in hopes of one day using nuclear fusion to generate heat or electricity. But the prospect of meeting our energy needs this way remains elusive.

The extraction of energy from nuclear fission, by contrast, happened relatively quickly. Fission in uranium was discovered in 1938, in Germany, and it was only four years until the first nuclear “pile” was constructed in Chicago, in 1942.

There are currently about 440 fission reactors operating worldwide, which together can generate about 400 gigawatts of power with zero carbon emissions. Yet these fission plants, for all their value, have considerable downsides. The enriched uranium fuel they use must be kept secure. Devastating accidents, like the one at Fukushima in Japan, can leave areas uninhabitable. Fission waste by-products need to be disposed of safely, and they remain radioactive for thousands of years. Consequently, governments, universities, and companies have long looked to fusion to remedy these ills.

Among those interested parties is NASA. The space agency has significant energy needs for deep-space travel, including probes and crewed missions to the moon and Mars. For more than 60 years, photovoltaic cells, fuel cells, or radioisotope thermoelectric generators (RTGs) have provided power to spacecraft. RTGs, which rely on the heat produced when nonfissile plutonium-238 decays, have demonstrated excellent longevity—both Voyager probes use such generators and remain operational nearly 45 years after their launch, for example. But these generators convert heat to electricity at roughly 7.5 percent efficiency. And modern spacecraft need more power than an RTG of reasonable size can provide.
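To see why that efficiency figure caps what an RTG can deliver, here is a back-of-the-envelope sketch in Python using the roughly 7.5 percent conversion quoted above. The 2-kilowatt heat source and 1-kilowatt electrical target are hypothetical round numbers chosen for illustration, not specifications of any real generator.

```python
# Heat-to-electricity conversion efficiency quoted in the article for RTGs.
RTG_EFFICIENCY = 0.075


def electrical_output_w(thermal_w, efficiency=RTG_EFFICIENCY):
    """Electrical power available from a given amount of decay heat."""
    return thermal_w * efficiency


def thermal_needed_w(electrical_w, efficiency=RTG_EFFICIENCY):
    """Decay heat required to deliver a target electrical power."""
    return electrical_w / efficiency


# Hypothetical round numbers, for illustration only.
print(f"{electrical_output_w(2000):.0f} W electric from 2 kW of heat")
print(f"{thermal_needed_w(1000):.0f} W of heat for 1 kW electric")
```

At this efficiency, every kilowatt of electricity needs more than 13 kilowatts of decay heat, and therefore a correspondingly large quantity of plutonium-238.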

One promising alternative is lattice confinement fusion (LCF), a type of fusion in which the nuclear fuel is bound in a metal lattice. The confinement encourages positively charged nuclei to fuse because the high electron density of the conductive metal reduces the likelihood that two nuclei will repel each other as they get closer together.

We and other scientists and engineers at NASA Glenn Research Center, in Cleveland, are investigating whether this approach could one day provide enough power to operate small robotic probes on the surface of Mars, for example. LCF would eliminate the need for fissile materials such as enriched uranium, which can be costly to obtain and difficult to handle safely. LCF promises to be less expensive, smaller, and safer than other strategies for harnessing nuclear fusion. And as the technology matures, it could also find uses here on Earth, such as for small power plants for individual buildings, which would reduce fossil-fuel dependency and increase grid resiliency.

Physicists have long thought that fusion should be able to provide clean nuclear power. After all, the sun generates power this way. But the sun has a tremendous size advantage. At nearly 1.4 million kilometers in diameter, with a plasma core 150 times as dense as liquid water and heated to 15 million °C, the sun uses heat and gravity to force particles together and keep its fusion furnace stoked.

On Earth, we lack the ability to produce energy this way. A fusion reactor needs to reach a critical combination of fuel-particle density, confinement time, and plasma temperature (called the Lawson criterion, after the physicist John Lawson, who formulated it) to achieve a net-positive energy output. And so far, nobody has done that.
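The criterion is most often quoted today as a "triple product": density n times temperature T times energy confinement time τ must exceed a threshold. The sketch below assumes the commonly cited round figure of about 3 × 10²¹ keV·s/m³ for ignition of deuterium-tritium fuel; that threshold and the example plasma parameters are assumptions for illustration, not figures from this article.

```python
# Commonly quoted approximate triple-product threshold for D-T ignition,
# in keV * s / m^3. An assumed round figure, not taken from the article.
DT_IGNITION_THRESHOLD = 3e21


def triple_product(density_per_m3, temperature_kev, confinement_s):
    """Return n * T * tau_E in keV * s / m^3."""
    return density_per_m3 * temperature_kev * confinement_s


def meets_criterion(density_per_m3, temperature_kev, confinement_s,
                    threshold=DT_IGNITION_THRESHOLD):
    """True if the plasma's triple product reaches the threshold."""
    return triple_product(density_per_m3, temperature_kev, confinement_s) >= threshold


# Hypothetical plasmas with the same density but different temperature and confinement.
print(meets_criterion(1e20, 15, 3.0))  # True (triple product 4.5e21)
print(meets_criterion(1e20, 10, 1.0))  # False (triple product 1.0e21)
```

Because the three quantities multiply, a shortfall in one (say, confinement time) has to be made up by the others, which is why density, temperature, and confinement are all pushed at once.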

Lighting the Fusion Fire

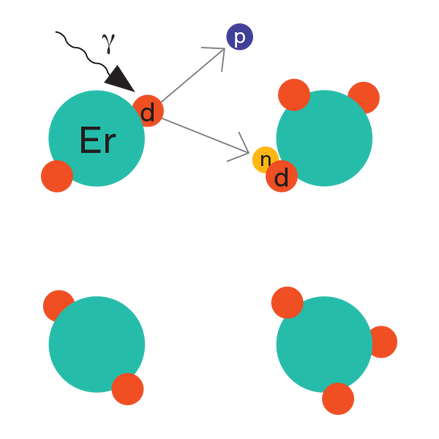

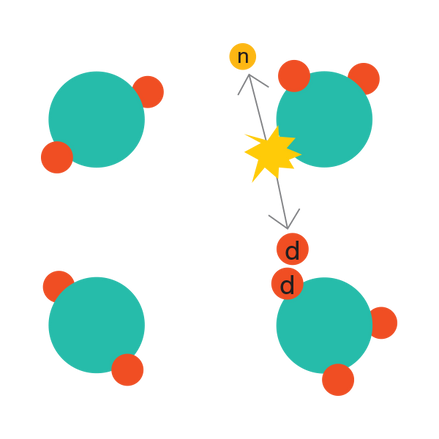

In lattice confinement fusion (LCF), a beam of gamma rays is directed at a sample of erbium [shown here] or titanium saturated with deuterons. Occasionally, gamma rays of sufficient energy will break apart a deuteron in the metal lattice into its constituent proton and neutron.

The neutron collides with another deuteron in the lattice, imparting some of its own momentum to the deuteron. The electron-screened deuteron is now energetic enough to overcome the Coulomb barrier, which would typically repel it from another deuteron.

Deuteron-Deuteron Fusion

When the energetic deuteron fuses with another deuteron in the lattice, it can produce a helium-3 nucleus (helion) and give off useful energy. A leftover neutron could provide the push for another energetic deuteron elsewhere.

Alternatively, the fusing of the two deuterons could result in a hydrogen-3 nucleus (triton) and a leftover proton. This reaction also produces useful energy.
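The "useful energy" in each branch comes from the mass lost in the reaction, E = Δm c². The sketch below computes both energy releases (Q-values) from rounded standard atomic masses; using neutral-atom masses works because the electron counts balance on each side of both reactions.

```python
# Energy equivalent of one atomic mass unit, in MeV.
U_TO_MEV = 931.494

# Neutral-atom masses in atomic mass units (rounded standard values).
# Electron counts balance on both sides of each reaction, so they cancel.
MASS_U = {
    "D": 2.014101778,    # deuterium
    "He3": 3.016029322,  # helium-3
    "T": 3.016049281,    # tritium
    "H1": 1.007825032,   # protium (proton plus electron)
    "n": 1.008664916,    # free neutron
}


def q_value_mev(reactants, products):
    """Energy released: (mass before - mass after) * c^2, in MeV."""
    delta_m = sum(MASS_U[p] for p in reactants) - sum(MASS_U[p] for p in products)
    return delta_m * U_TO_MEV


helion_branch = q_value_mev(["D", "D"], ["He3", "n"])
triton_branch = q_value_mev(["D", "D"], ["T", "H1"])
print(f"D + D -> He-3 + n: {helion_branch:.2f} MeV")  # 3.27 MeV
print(f"D + D -> T + p:    {triton_branch:.2f} MeV")  # 4.03 MeV
```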

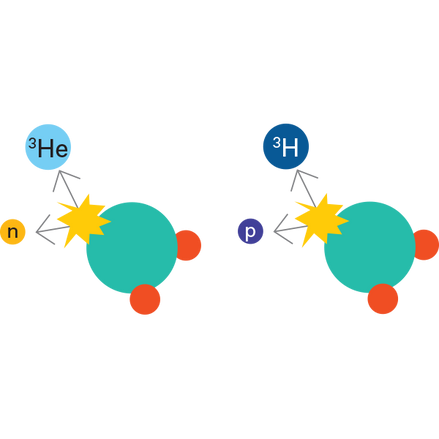

Stripping and OP Reaction

Another possible reaction in lattice confinement fusion would happen if an erbium atom instead rips apart the energetic deuteron and absorbs the proton. The extra proton changes the erbium atom to thulium and releases energy.

If the erbium atom absorbs the neutron, it becomes a new isotope of erbium. This is an Oppenheimer-Phillips (OP) stripping reaction. The proton from the broken-apart deuteron heats the lattice.

Fusion reactors commonly utilize two different hydrogen isotopes: deuterium (one proton and one neutron) and tritium (one proton and two neutrons). These are fused into helium nuclei (two protons and two neutrons)—also called alpha particles—with an unbound neutron left over.

Existing fusion reactors rely on the resulting alpha particles—and the energy released in the process of their creation—to further heat the plasma. The plasma will then drive more nuclear reactions with the end goal of providing a net power gain. But there are limits. Even in the hottest plasmas that reactors can create, alpha particles will mostly skip past additional deuterium nuclei without transferring much energy. For a fusion reactor to be successful, it needs to create as many direct hits between alpha particles and deuterium nuclei as possible.

In the 1950s, scientists created various magnetic-confinement fusion devices, the best known of which were Andrei Sakharov’s tokamak and Lyman Spitzer’s stellarator. Setting aside differences in design particulars, each attempts the near-impossible: Heat a gas enough for it to become a plasma and magnetically squeeze it enough to ignite fusion, all without letting the plasma escape.

Inertial-confinement fusion devices followed in the 1970s. They used lasers and ion beams either to compress the surface of a target in a direct-drive implosion or to energize an interior target container in an indirect-drive implosion. Unlike magnetically confined reactions, which can last for seconds or even minutes (and perhaps one day, indefinitely), inertial-confinement fusion reactions last less than a microsecond before the target disassembles, thus ending the reaction.

Both types of devices can create fusion, but so far they are incapable of generating enough energy to offset what’s needed to initiate and maintain the nuclear reactions. In other words, more energy goes in than comes out. Hybrid approaches, collectively called magneto-inertial fusion, face the same issues.

Who’s Who in the Fusion Zoo

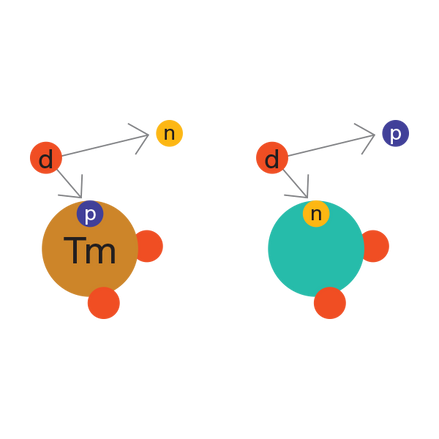

Proton: Positively charged protons (along with neutrons) make up atomic nuclei. One component of lattice confinement fusion (LCF) may occur when a proton is absorbed by an erbium atom in a deuteron stripping reaction.

Neutron: Neutrally charged neutrons (along with protons) make up atomic nuclei. In fusion reactions, they impart energy to other particles such as deuterons. They also can be absorbed in Oppenheimer-Phillips reactions.

Erbium & Titanium: Erbium and titanium are the metals of choice for LCF. Relatively colossal compared with the other particles involved, they hold the deuterons and screen them from one another.

Deuterium: Deuterium is hydrogen with one proton and one neutron in its nucleus (hydrogen with just the proton is protium). Deuterium’s nucleus, called a deuteron, is crucial to LCF.

Deuteron: The nucleus of a deuterium atom. Deuterons are vital to LCF—the actual fusion instances occur when an energetic deuteron smashes into another in the lattice. They can also be broken apart in stripping reactions.

Hydrogen-3 (Tritium): One possible resulting particle from deuteron-deuteron fusion, alongside a leftover proton. Tritium has one proton and two neutrons in its nucleus, which is also called a triton.

Helium-3: One possible resulting particle from deuteron-deuteron fusion, alongside a leftover neutron. Helium-3 has two protons and one neutron in its nucleus, which is also called a helion.

Alpha particle: The core of a normal helium atom (two protons and two neutrons). Alpha particles are a commonplace result of typical fusion reactors, which often smash deuterium and tritium particles together. They can also emerge from LCF reactions.

Gamma ray: Extremely energetic photons that are used to kick off the fusion reactions in a metal lattice by breaking apart deuterons.

Current fusion reactors also require copious amounts of tritium as one part of their fuel mixture. The most reliable source of tritium is a fission reactor, which somewhat defeats the purpose of using fusion.

The fundamental problem of these techniques is that the atomic nuclei in the reactor need to be energetic enough—meaning hot enough—to overcome the Coulomb barrier, the natural tendency for the positively charged nuclei to repel one another. Because of the Coulomb barrier, fusing atomic nuclei have a very small fusion cross section, meaning the probability that two particles will fuse is low. You can increase the cross section by raising the plasma temperature to 100 million °C, but that requires increasingly heroic efforts to confine the plasma. As it stands, after billions of dollars of investment and decades of research, these approaches, which we’ll call “hot fusion,” still have a long way to go.
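A rough calculation shows how far apart these two energy scales are. The sketch converts 100 million °C into a characteristic thermal energy kT and compares it with the electrostatic energy between two deuterons, Z₁Z₂e²/(4πε₀r). The 4-femtometer separation is an assumed, order-of-magnitude value for where the nuclear force takes over, so the barrier figure is only indicative.

```python
BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant, eV/K
COULOMB_MEV_FM = 1.44                 # e^2 / (4 pi epsilon_0), in MeV * fm


def thermal_energy_kev(temp_celsius):
    """Characteristic thermal energy kT at a given temperature, in keV."""
    return BOLTZMANN_EV_PER_K * (temp_celsius + 273.15) / 1000


def coulomb_barrier_kev(z1, z2, separation_fm):
    """Electrostatic potential energy of two nuclei at a given separation, in keV."""
    return z1 * z2 * COULOMB_MEV_FM / separation_fm * 1000


kt = thermal_energy_kev(100e6)            # about 8.6 keV
barrier = coulomb_barrier_kev(1, 1, 4.0)  # about 360 keV at an assumed 4 fm
print(f"kT = {kt:.1f} keV, barrier = {barrier:.0f} keV, ratio = {barrier / kt:.0f}")
```

Even at 100 million °C, the typical thermal energy is tens of times smaller than the barrier, so fusion depends on the fastest particles in the distribution and on quantum tunneling, which is why the cross section stays small.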

The barriers to hot fusion here on Earth are indeed tremendous. As you can imagine, they’d be even more overwhelming on a spacecraft, which can’t carry a tokamak or stellarator onboard. Fission reactors are being considered as an alternative: NASA successfully tested the Kilopower fission reactor at the Nevada National Security Site in 2018 using a uranium-235 core about the size of a paper towel roll. The Kilopower reactor could produce up to 10 kilowatts of electric power. The downside is that it required highly enriched uranium, which would have brought additional launch safety and security concerns. This fuel is also expensive.

But fusion could still work, even if the conventional hot-fusion approaches are nonstarters. LCF technology could be compact enough, light enough, and simple enough to serve for spacecraft.

How does LCF work? Remember that we earlier mentioned deuterium, the isotope of hydrogen with one proton and one neutron in its nucleus. Deuterided metals—erbium and titanium, in our experiments—have been “saturated” with either deuterium or deuterium atoms stripped of their electrons (deuterons). This is possible because the metal naturally exists in a regularly spaced lattice structure, which creates equally regular slots in between the metal atoms for deuterons to nest.

In a tokamak or a stellarator, the hot plasma is limited to a density of 10¹⁴ deuterons per cubic centimeter. Inertial-confinement fusion devices can momentarily reach densities of 10²⁶ deuterons per cubic centimeter. It turns out that metals like erbium can indefinitely hold deuterons at a density of nearly 10²³ per cubic centimeter, far higher than the density that can be attained in a magnetic-confinement device, and only three orders of magnitude below that attained in an inertial-confinement device. Crucially, these metals can hold that many ions at room temperature.
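The comparison can be made explicit by counting the powers of ten between the quoted densities. This short sketch only restates the article's figures; the dictionary labels are illustrative.

```python
import math

# Deuteron densities quoted in the article, per cubic centimeter.
DENSITY_PER_CM3 = {
    "magnetic confinement": 1e14,
    "metal lattice (erbium)": 1e23,
    "inertial confinement (momentary)": 1e26,
}


def orders_of_magnitude(low, high):
    """How many powers of ten separate two densities."""
    return math.log10(high / low)


lattice = DENSITY_PER_CM3["metal lattice (erbium)"]
print(round(orders_of_magnitude(DENSITY_PER_CM3["magnetic confinement"], lattice)))  # 9
print(round(orders_of_magnitude(lattice, DENSITY_PER_CM3["inertial confinement (momentary)"])))  # 3
```

In other words, the lattice holds deuterons about a billion times more densely than a magnetically confined plasma, and it does so continuously rather than for a fleeting instant.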

The deuteron-saturated metal forms a plasma with neutral charge. The metal lattice confines and electron-screens the deuterons, keeping each of them from “seeing” adjacent deuterons (which are all positively charged). This screening increases the chances of more direct hits, which further promotes the fusion reaction. Without the electron screening, two deuterons would be much more likely to repel each other.

Using a metal lattice that has screened a dense, cold plasma of deuterons, we can jump-start the fusion process using what is called a Dynamitron electron-beam accelerator. The electron beam hits a tantalum target and produces gamma rays, which then irradiate thumb-size vials containing titanium deuteride or erbium deuteride.

When a gamma ray of sufficient energy—about 2.2 megaelectron volts (MeV)—strikes one of the deuterons in the metal lattice, the deuteron breaks apart into its constituent proton and neutron. The released neutron may collide with another deuteron, accelerating it much as a pool cue accelerates a ball when striking it. This second, energetic deuteron then goes through one of two processes: screened fusion or a stripping reaction.
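Both numbers in this step can be sketched from basic physics. The roughly 2.2 MeV threshold is the deuteron's binding energy, the mass difference between a free proton plus neutron and a bound deuteron. The pool-cue analogy corresponds to an elastic collision, in which a head-on hit transfers the fraction 4m₁m₂/(m₁ + m₂)² of the projectile's kinetic energy. The sketch assumes a head-on, non-relativistic collision and ignores recoil in the photodisintegration threshold; glancing collisions transfer less.

```python
U_TO_MEV = 931.494  # energy equivalent of one atomic mass unit, MeV

# Bare-particle masses in atomic mass units (rounded standard values).
M_PROTON = 1.007276467
M_NEUTRON = 1.008664916
M_DEUTERON = 2.013553212


def deuteron_binding_energy_mev():
    """Minimum gamma-ray energy needed to split a deuteron (ignoring recoil)."""
    return (M_PROTON + M_NEUTRON - M_DEUTERON) * U_TO_MEV


def max_energy_fraction(m_projectile, m_target):
    """Largest fraction of kinetic energy passed on in an elastic (head-on) collision."""
    return 4 * m_projectile * m_target / (m_projectile + m_target) ** 2


print(f"Deuteron binding energy: {deuteron_binding_energy_mev():.2f} MeV")  # 2.22 MeV
print(f"Neutron-to-deuteron transfer: {max_energy_fraction(M_NEUTRON, M_DEUTERON):.2f}")  # 0.89
```

Because a neutron and a deuteron have similar masses, a single head-on collision can hand the deuteron nearly 90 percent of the neutron's kinetic energy, which is what makes neutrons such effective "cues."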

In screened fusion, which we have observed in our experiments, the energetic deuteron fuses with another deuteron in the lattice. The fusion reaction will result in either a helium-3 nucleus and a leftover neutron or a hydrogen-3 nucleus and a leftover proton. These fusion products may fuse with other deuterons, creating an alpha particle, or with another helium-3 or hydrogen-3 nucleus. Each of these nuclear reactions releases energy, helping to drive more instances of fusion.

In a stripping reaction, an atom like the titanium or erbium in our experiments strips the proton or neutron from the deuteron and captures it. Erbium, titanium, and other heavier atoms preferentially absorb the neutron, because the proton is repelled by the positively charged nucleus; this neutron capture is called an Oppenheimer-Phillips reaction. It is theoretically possible, although we haven’t observed it, that electron screening might allow the proton to be captured, transforming erbium into thulium or titanium into vanadium. Both kinds of stripping reactions would produce useful energy.

To be sure that we were actually producing fusion in our vials of erbium deuteride and titanium deuteride, we used neutron spectroscopy. This technique detects the neutrons that result from fusion reactions. When deuteron-deuteron fusion produces a helium-3 nucleus and a neutron, that neutron has an energy of 2.45 MeV. So when we detected 2.45 MeV neutrons, we knew fusion had occurred. That’s when we published our initial results in Physical Review C.
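The 2.45 MeV figure follows from momentum conservation. If the reacting deuterons carry negligible kinetic energy, the helium-3 nucleus and the neutron fly apart with equal and opposite momenta, so the roughly 3.27 MeV released in this branch is shared in inverse proportion to their masses. This is a non-relativistic sketch under that assumption; in practice the energetic deuteron's motion shifts the neutron energy slightly around this value.

```python
Q_HELION_BRANCH_MEV = 3.269  # energy released by D + D -> He-3 + n
M_NEUTRON = 1.008665         # atomic mass units
M_HELIUM3 = 3.016029         # atomic mass units (electron mass negligible here)


def share_energy(q_mev, m_a, m_b):
    """Split q between two products with equal and opposite momenta.

    Non-relativistic: E = p^2 / 2m, so each product's share is
    inversely proportional to its mass.
    """
    e_a = q_mev * m_b / (m_a + m_b)
    return e_a, q_mev - e_a


neutron_mev, helion_mev = share_energy(Q_HELION_BRANCH_MEV, M_NEUTRON, M_HELIUM3)
print(f"neutron: {neutron_mev:.2f} MeV, helium-3: {helion_mev:.2f} MeV")  # 2.45, 0.82
```

That fixed, predictable energy is what makes a 2.45 MeV neutron a fingerprint for this particular fusion reaction.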

Electron screening makes it seem as though the deuterons are fusing at a temperature of 11 million °C. In reality, the metal lattice remains much cooler than that, although it heats up somewhat from room temperature as the deuterons fuse.

Overall, in LCF, most of the heating occurs in regions just tens of micrometers across. This is far more efficient than in magnetic- or inertial-confinement fusion reactors, which heat the entire fuel load to very high temperatures. LCF isn’t cold fusion: it still requires energetic deuterons and can use neutrons to heat them. However, LCF also removes many of the technological and engineering barriers that have prevented other fusion schemes from succeeding.

Although the neutron recoil technique we’ve been using is the most efficient means to transfer energy to cold deuterons, producing neutrons from a Dynamitron is energy intensive. There are other, lower-energy methods of producing neutrons, including using an isotopic neutron source, like americium-beryllium or californium-252, to initiate the reactions. We also need to make the reaction self-sustaining, which may be possible using neutron reflectors to bounce neutrons back into the lattice; carbon and beryllium are examples of common neutron reflectors. Another option is to couple a fusion neutron source with fission fuel to take advantage of the best of both worlds. Regardless, more development is required to increase the efficiency of these lattice-confined nuclear reactions.

We’ve also triggered nuclear reactions by pumping deuterium gas through the thin wall of palladium-silver alloy tubing, and by electrolytically loading palladium with deuterium. In the latter experiment, we’ve detected fast neutrons. The electrolytic setup now uses the same neutron-spectroscopy detection method we mentioned above to measure the energy of those neutrons. Those energy measurements will tell us what kinds of nuclear reactions produce them.

We’re not alone in these endeavors. Researchers at Lawrence Berkeley National Laboratory, in California, with funding from Google Research, achieved favorable results with a similar electron-screened fusion setup. Researchers at the U.S. Naval Surface Warfare Center, Indian Head Division, in Maryland have likewise gotten promising initial results using an electrochemical approach to LCF. There are also upcoming conferences: the American Nuclear Society’s Nuclear and Emerging Technologies for Space conference in Cleveland in May and the International Conference on Cold Fusion 24, focused on solid-state energy, in Mountain View, Calif., in July.

Any practical application of LCF will require efficient, self-sustaining reactions. Our work represents just the first step toward realizing that goal. If the reaction rates can be significantly boosted, LCF may open an entirely new door for generating clean nuclear energy, both for space missions and for the many people who could use it here on Earth.

Bayarbadrakh Baramsai is a systems engineer at NASA Glenn Research Center contributing to the lattice confinement fusion project.

Theresa Benyo is a physicist and the principal investigator for the lattice confinement fusion project at NASA Glenn Research Center.

Lawrence Forsley is the deputy principal investigator for NASA’s lattice confinement fusion project, based at NASA Glenn Research Center.

Bruce Steinetz is a senior technologist at NASA Glenn Research Center involved in the lattice confinement fusion project.