How much wildfire smoke is infiltrating our homes?

Though overall air quality in the U.S. has improved dramatically in recent decades, smoke from catastrophic wildfires is now creating spells of extremely hazardous air pollution in the Western U.S. And, while many people have learned to reduce their exposure by staying inside, keeping windows closed and running air filtration systems on smoky days, data remains limited on how well these efforts are paying off.

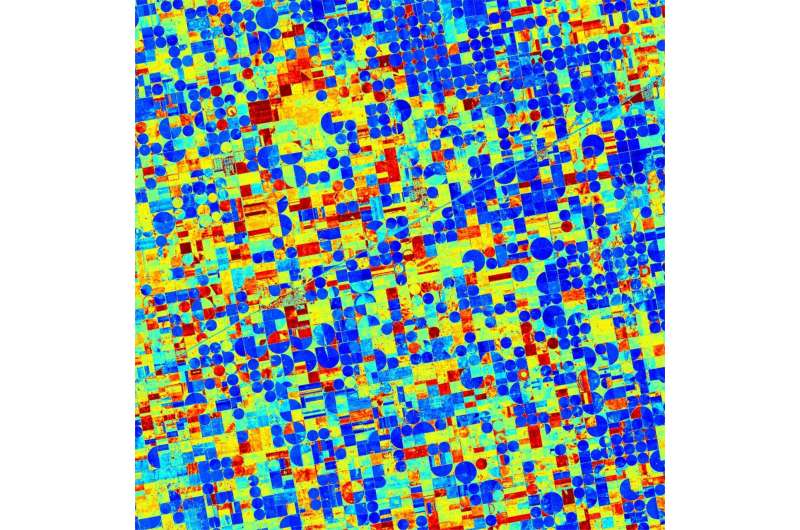

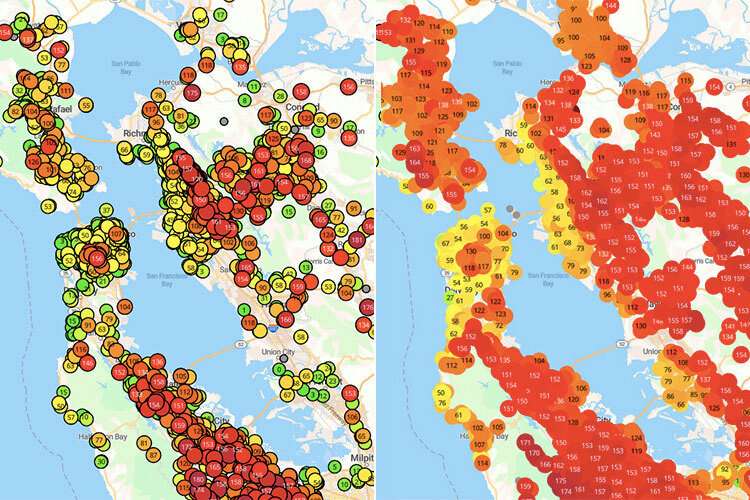

In a new study, scientists from the University of California, Berkeley, used data from 1,400 indoor air sensors and even more outdoor air sensors in the crowdsourced PurpleAir network to find out how well residents of the San Francisco and Los Angeles metropolitan areas were able to protect the air inside their homes on days when the air outside was hazardous.

They found that, by taking steps like closing up their houses and using filtration indoors, people were able to cut the infiltration of PM2.5 particulate matter into their homes by half on wildfire days compared to non-wildfire days.

"While the particulate matter indoors was still three times higher on wildfire days than on non-wildfire days, it was much lower than it would be if people hadn't closed up their buildings and added filtration," said study senior author Allen Goldstein, a professor of environmental engineering and of environmental science, policy and management at UC Berkeley. "This shows that when people have information about the smoke coming their way, they are acting to protect themselves, and they are doing it effectively."
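The two figures above (infiltration cut by half, indoor levels still three times higher) can be reconciled with some rough arithmetic. The sketch below assumes a deliberately simplified model in which indoor PM2.5 equals an infiltration fraction times outdoor PM2.5. That model ignores indoor sources such as cooking and is not the study's actual method, and the function name is hypothetical.

```python
def outdoor_increase_factor(indoor_ratio, infiltration_ratio):
    """Outdoor PM2.5 ratio (wildfire day / normal day) implied by the
    simplified assumption: indoor = infiltration_fraction * outdoor.

    indoor_ratio:       indoor PM2.5 on wildfire days / non-wildfire days
    infiltration_ratio: infiltration on wildfire days / non-wildfire days
    """
    return indoor_ratio / infiltration_ratio


# Figures reported in the article: indoor levels 3x higher,
# infiltration cut to half (0.5x) on wildfire days.
factor = outdoor_increase_factor(indoor_ratio=3.0, infiltration_ratio=0.5)
print(factor)  # 6.0
```

Under this simplified assumption, outdoor levels would have been roughly six times higher on wildfire days, so indoor air would have risen about sixfold rather than threefold had infiltration stayed at its usual level.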

While individuals can take steps to reduce smoke infiltration into their homes, how easily air pollution gets inside can also depend heavily on the nature of the building itself. To study these effects, the researchers also used the real estate website Zillow to estimate the characteristics of buildings in the sensor network, including the structure's relative age, the type of building and the socio-economic status of the neighborhood.

Not surprisingly, they found that newly constructed homes and those that were built with central air conditioning were significantly better at keeping wildfire smoke out.

"One of the things that makes this study exciting is it shows what you can learn with crowdsourced data that the government was never collecting before," said study co-author Joshua Apte, an assistant professor of civil and environmental engineering and of public health at UC Berkeley. "The Environmental Protection Agency (EPA) has a mandate to measure outdoor air quality and not indoor air quality—that's just how our air quality regulations are set up. So, these kinds of crowdsourced data sets allow us to learn about how populations are affected indoors, where they spend most of their time."

Protecting the air of the great indoors

Like many residents of the Western U.S., both Goldstein and Apte regularly use websites like AirNow and PurpleAir to check how wildfire smoke is affecting the air quality where they live. Both have even installed their own PurpleAir sensors to track the concentrations of PM2.5 particulate matter inside their homes.

So, when UC Berkeley graduate student Yutong Liang wrote a term paper using data from the PurpleAir network to study the impact of wildfire smoke on indoor air quality, Goldstein and Apte thought the work was worth expanding to a full research study.

"Our friends and neighbors and colleagues were all looking at this real-time data from PurpleAir to find out when smoke was affecting their area, and using that information to decide how to behave," Goldstein said. "We wanted to use actual data from this network to find out how effective that behavior was at protecting them."

The analysis compared indoor and outdoor sensor data collected during August and September of 2020, when both San Francisco and Los Angeles experienced a number of "fire days," which the researchers defined as days when the average PM2.5 measured by the EPA exceeded 35 µg/m³. This value corresponds to an Air Quality Index (AQI) of approximately 100, which represents the boundary between PM2.5 levels that the EPA considers "moderate" and those that are considered "unhealthy for sensitive groups."
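The "fire day" definition above amounts to a simple threshold test on a day's average PM2.5. The sketch below illustrates that rule only: the readings are hypothetical values invented for the example, the function and variable names are assumptions, and the researchers' actual averaging procedure is not described in this article.

```python
from statistics import mean

# Threshold from the article: average PM2.5 above 35 micrograms per cubic meter.
FIRE_DAY_THRESHOLD_UG_M3 = 35.0


def is_fire_day(readings_ug_m3):
    """Return True when the mean of the day's PM2.5 readings exceeds the threshold."""
    return mean(readings_ug_m3) > FIRE_DAY_THRESHOLD_UG_M3


# Hypothetical outdoor readings (µg/m³), not data from the study.
sample_days = {
    "day_a": [12.0, 15.5, 9.8],    # clean day
    "day_b": [48.0, 61.2, 55.1],   # smoky day
    "day_c": [35.0, 35.0, 35.0],   # exactly at the threshold, so not a fire day
}

fire_days = [day for day, readings in sample_days.items() if is_fire_day(readings)]
print(fire_days)  # ['day_b']
```

Because the definition says "exceeded," a day averaging exactly 35 µg/m³ is not counted here.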

While scientists are still working to puzzle out just what types of chemical compounds are found in this particulate matter from wildfire smoke, a growing body of research now suggests that it may be even worse for human health than other types of PM2.5 air pollution.

"Wildfires create thousands of different organic chemicals as particulate matter and gases that can cause respiratory and cardiovascular problems in people," said Liang, who is lead author of the study. "The Goldstein research group is trying to identify these unique compounds, as well as the ways that they react to transform the composition of wildfire smoke over time."

To protect indoor air during fire season, the research team suggests closing up your home before smoke arrives in your area and investing in an air filtration system. If you can't afford a commercial air filter—or they are all sold out—you can also build your own for less than $50 using a box fan, a MERV-rated furnace filter and some tape.

"There are lots of very informative Twitter threads about how to build a good DIY system, and if you are willing to go a little crazy—spend $90 instead of $50—you can build an even better design," Apte said. "Every air quality researcher I know has played around with these because they are so satisfying and simple and fun, and they work."

Where you put the filters also matters. If you only have one, Apte suggests putting it in your bedroom and leaving the door closed while you sleep, to keep the air in your bedroom as clean as possible.

Finally, the researchers suggest cooking as little as possible during smoky days. Cooking can generate surprising amounts of both particulate matter and gases, neither of which can be easily ventilated out of the house without inviting wildfire smoke in.

"Air filters can help remove particulate matter from cooking, but running a kitchen or bathroom exhaust fan during smoke events can actually pull PM2.5-laden air from outdoors to indoors," Goldstein said.

In the future, the researchers hope to find ways to sample the indoor air quality of a more diverse array of households. Because PurpleAir sensors cost at least $200 apiece, households that contribute data to the network tend to be affluent, and the Zillow estimates show that the average price of homes in the network is about 20% higher than median property values in their areas.

"One thing that we're deeply interested in is understanding what happens to people in indoor environments, because that's where people spend most of their time, and there's still an awful lot we don't know about indoor pollution exposure," Apte said. "I think that these new methods of sensing the indoor environment are going to allow us to grapple a lot more with questions of environmental justice and find out more about who gets to breathe cleaner air indoors."