Fossil expands ancient fish family tree

A second ancient lungfish has been discovered in Africa, adding another piece to the jigsaw of evolving aquatic life forms more than 400 million years ago.

The new fossil lungfish genus Isityumzi mlomomde was found about 10,000km from a previous species described in Morocco, and is of interest because it existed in a high latitude (70 degrees south) or polar environment at the time.

Flinders University researcher Dr. Alice Clement says the "scrappy" fossil remains, including tooth plates and scales, were found in the Famennian Witpoort Formation off the western cape of South Africa.

"This lungfish material is significant for a number of reasons," Dr. Clement says.

"Firstly it represents the only Late Devonian lungfish known from Western Gondwana (when South America and Africa were one continent). During this period, about 372-359 million years ago, South Africa was situated next to the South Pole," she says.

"Secondly, the new taxa from the Waterloo Farm Formation seems to have lived in a thriving ecosystem, indicating this region was not as cold as the polar regions of today."

Dr. Clement says the animal would still have been subject to long periods of winter darkness, a habitat very different from the fresh waters that lungfish live in today. Only six living species of lungfish are known, found only in Africa, South America and Australia.

Isityumzi mlomomde means "a long-mouthed device for crushing" in isiXhosa, one of the official languages of South Africa.

Around 100 kinds of primitive lungfish (Dipnoi) evolved from the early Devonian period more than 410 million years ago. More than 25 originated in Australia (Gondwana), and others are known to have lived in temperate, tropical and subtropical waters of China and Morocco in the Northern Hemisphere.

Lungfish are a group of fish most closely related to all tetrapods—all terrestrial vertebrates including amphibians, reptiles, birds and mammals.

"In this way, a lungfish is more closely related to humans than it is to a goldfish!" says Dr. Clement, who has been involved in naming three other new ancient lungfish.

The paper, "A high latitude Devonian lungfish, from the Famennian of South Africa" (2019) by RW Gess and AM Clement has been published in PeerJ.

Lungfishes are not airheads

It's November, a month to ruminate on all of the things we are thankful for while we ruminate copious amounts of food (at least in the United States). I've been contemplating all of the things that I am thankful for, besides the usual suspects (you know, friends, family, a pretty cool research project, and, of course, the PLOS Paleo Community!).

You know what else I am thankful for? I'm thankful for lungfishes.

Lungfishes are pretty spectacular organisms, and also utterly bizarre. In fact, our knowledge of extant lungfishes, their biology, and their evolutionary relationships to other fishes or tetrapods was confusing at first. The South American lungfish, Lepidosiren paradoxa, got its specific name due to its mosaic of fish and tetrapod characteristics, and was thought to have been a reptile when it was described in 1836. The West African lungfish, Protopterus annectens, was thought to be an amphibian when it was described in 1837. These critters confused a lot of taxonomists for a lot of years, but eventually it was realized that they belonged within Dipnoi, the lungfishes, a group within Sarcopterygii (a group that includes coelacanths and, well, ourselves!). Now, almost all morphological and molecular phylogenetic studies accept that lungfishes are more closely related to tetrapods than coelacanths are.

Lungfishes have a massive evolutionary history, with their peak diversity of around 100 species occurring around 420–359 million years ago during the Devonian Period. Nowadays, their family get-togethers are a little smaller, with just six living species occurring in South America and Africa (Lepidosiren and Protopterus, the Lepidosirenidae), and Australia (Neoceratodus, a single species belonging to Neoceratodontidae). These two groups are thought to have diverged sometime during the Permian (~277 Ma), and when you've been away from your relatives for that long, it can be expected that you'll become quite different. While both have thick bodies with broad tails and distinctive toothplates used for crushing prey, notable external differences include the filamentous "noodle" pectoral and pelvic fins of the Lepidosirenidae compared to the thicker, paddle-like fins of Neoceratodus.

There's a lot we still don't know about the closest-living relatives of all tetrapods. A paper that came out last month in PLOS ONE by Alice M. Clement, Johan Nysjö, Robin Strand, and Per E. Ahlberg set out to study one such aspect of lungfishes: the brain/cranial endocast relationship.

When soft tissue is lacking, as in most fossils, paleontologists use the size of the cranial cavity (the endocast) to estimate the size of the brain, which, compared with the size of the organism itself, can help us infer its relative intelligence or cognition. This can be problematic though, depending on what group you are studying. Clement et al. (2015) note that the brain-endocast relationship of tetrapods (birds, reptiles, mammals, etc.) is more tightly constrained than what is observed in some fishes. For example, some living chondrichthyans, such as the basking shark Cetorhinus, can have a brain that occupies only around 6% of the endocranial cavity. Even stranger still is the living coelacanth Latimeria, whose brain occupies a tiny 1% of the endocranial cavity. On the flipside, Clement et al. (2015) note, ray-finned fishes can have a close match in brain size to endocast size. This variability in brain-to-endocast relationship is unusual, and one that author Clement told me can only be understood by expanding datasets and the taxa for which we know the brain-endocranial relationship, something that she and her colleagues are continuing to work on.
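To make the arithmetic concrete, here is a minimal Python sketch of why the occupancy figure matters so much. The 6% and 1% values are the ones quoted above; the endocast volume of 100 mL and the function name `brain_volume_estimate` are purely hypothetical, chosen for illustration rather than taken from the paper.

```python
def brain_volume_estimate(endocast_volume_ml, occupancy_fraction):
    """Estimate brain volume from an endocast volume and an assumed occupancy.

    occupancy_fraction is the share of the endocranial cavity filled by the
    brain. It can never exceed 1.0, because a brain cannot be larger than
    the cavity that houses it.
    """
    if not 0.0 < occupancy_fraction <= 1.0:
        raise ValueError("occupancy_fraction must be in the interval (0, 1]")
    return endocast_volume_ml * occupancy_fraction


# Occupancy values quoted in the text above.
OCCUPANCY = {
    "basking shark (Cetorhinus)": 0.06,
    "coelacanth (Latimeria)": 0.01,
}

# Hypothetical endocast volume, used only to illustrate the spread.
ENDOCAST_ML = 100.0

estimates = {
    taxon: brain_volume_estimate(ENDOCAST_ML, fraction)
    for taxon, fraction in OCCUPANCY.items()
}

for taxon, volume in estimates.items():
    print(f"{taxon}: about {volume:.1f} mL of brain in a {ENDOCAST_ML:.0f} mL cavity")
```

The same cavity could hold a brain anywhere from a sliver to the full volume, which is why an endocast on its own only gives an upper bound unless the occupancy for that group is known.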

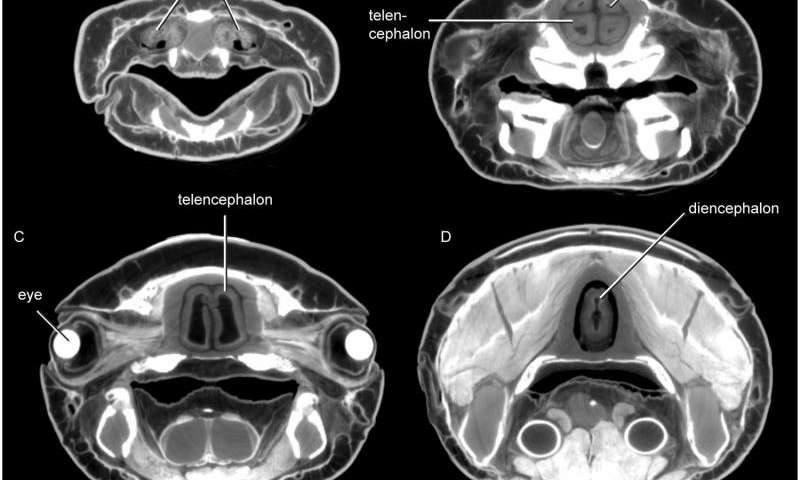

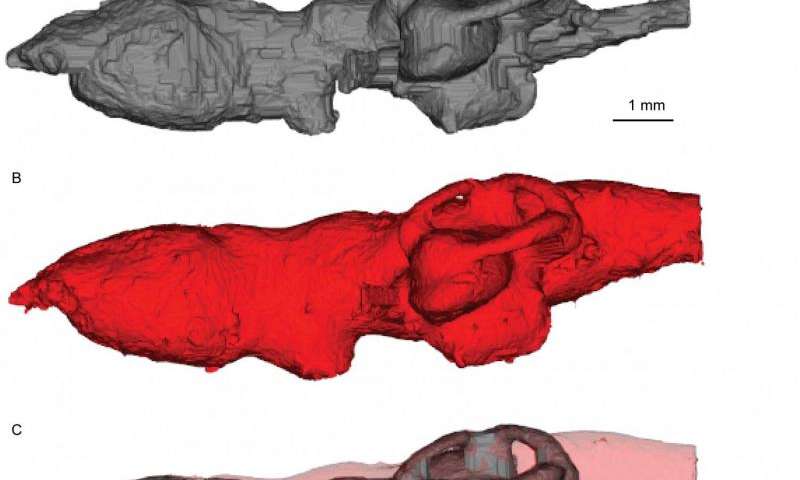

Where do lungfishes fit in this brain-endocast relationship spectrum? Clement et al. (2015) used specimens of the Australian lungfish Neoceratodus forsteri to examine this relationship. Using high-resolution X-ray Computed Tomography (CT) scanning techniques and computer analyses outlined in the paper, Clement and colleagues examined in detail the size, anatomy, and morphology of the brain of Neoceratodus.

They concluded that the brain fits the endocast pretty closely, particularly in the forebrain and labyrinth (inner ear) regions. The paper beautifully diagrams the spatial relationship between brain and endocast.

A PLOS ONE paper from last year by two of the authors here (Clement and Ahlberg, 2014) examined the endocast of a fossil lungfish Rhinodipterus from the Devonian Gogo Formation of Australia, and found similarity between it and the brain of Neoceratodus. Some general inferences about the functional significance of different sections of the brain can be made. Clement and Ahlberg (2014) note that the enlarging of the telencephalic region of lungfishes over time (between Devonian Rhinodipterus and the extant Neoceratodus) is probably related to increased reliance upon this part of the brain.

"The forebrain is associated with olfaction; perhaps as lungfishes moved from open marine environments in the Devonian to murkier, freshwater, swamp-like environments (like we see them in today), their reliance on smell increased," Clement told me. She continues, "Similarly, the midbrain (where the optic lobes are) is greatly reduced in lungfishes, suggesting that they don't rely on sight very much, compared to most actinopterygian fishes."

The work by Clement and colleagues has implications beyond lungfish anatomy. Clement et al. (2015) clearly demonstrate the care that paleontologists, specifically paleoneurologists, should use when studying the cranial endocasts of fossil taxa. Clement notes, "I think we must always use caution when interpreting the endocasts of fossils in terms of gross brain morphology, as we can't know the brain-endocranial relationship in [extinct] taxa. However, the fact remains that no brain region can be larger than the endocranial cavity that housed it, so we are given maximal proportions at least."

Clement further states, "Endocasts themselves are often highly rich in morphological characters (whether related to the brain inside them or not) useful for comparative, and probably also phylogenetic, analyses across taxa. In my opinion, the great advances in scanning technology mean that virtual palaeoneurology is on the cusp of a boom!"