Noor Al-Sibai

Wed, October 9, 2024

Both of the men who won this year's Nobel Prize in Physics are artificial intelligence pioneers — and one of them is considered the technology's "godfather."

As Reuters reports, American physicist John Hopfield and AI expert Geoffrey Hinton were awarded the coveted prize this week. Hinton is considered the "godfather of AI," and his research in 2012 laid the groundwork for today's neural networks. But in 2023, he quit his job at Google to join a chorus of critics sounding alarm bells about the technology.

In an interview with the New York Times last year about leaving his job as a vice president and engineering fellow at the tech giant, Hinton said he'd previously thought of Google as a "proper steward" of the powerful technology. That was until Microsoft partnered with OpenAI to unleash the latter's GPT-4 large language model (LLM), which powers ChatGPT, onto the masses.

Though he didn't believe that AI was anywhere near its zenith at the time, the 76-year-old computer scientist suggested he saw the writing on the wall with the Microsoft-OpenAI deal.

"Most people thought it was way off. And I thought it was way off," Hinton told the newspaper at the time. "I thought it was 30 to 50 years or even longer away."

"Obviously," he continued, "I no longer think that."

Prior to leaving Google and joining the likes of Elon Musk and other luminaries in signing an open letter calling for a pause on AI development, Hinton took to CBS News to warn that the world had reached a "pivotal moment" in terms of the technology.

"I think it's very reasonable for people to be worrying about these issues now," he told CBS at the time, "even though it's not going to happen in the next year or two."

Now a professor emeritus at the University of Toronto, Hinton has made it abundantly clear in the roughly 18 months since his Google departure that he thinks that AI may escape human control at any time — and once it does, all hell may break loose.

"Here we’re dealing with something where we have much less idea of what’s going to happen and what to do about it," the computer scientist said during a conversation with the Nobel committee. "I wish I had a sort of simple recipe that if you do this, everything’s going to be okay. But I don’t."

Considered the leading AI "doomer" for his grim outlook on the technology he helped birth, Hinton said when speaking to the Nobel committee that he was very surprised to learn he'd won the award and had been unaware that he'd even been nominated.

"Hopefully it’ll make me more credible," he said of winning the Nobel, "when I say these things really do understand what they’re saying."


AI is having its Nobel moment. Do scientists need the tech industry to sustain it?

MATT O'BRIEN

Updated Fri, October 11, 2024

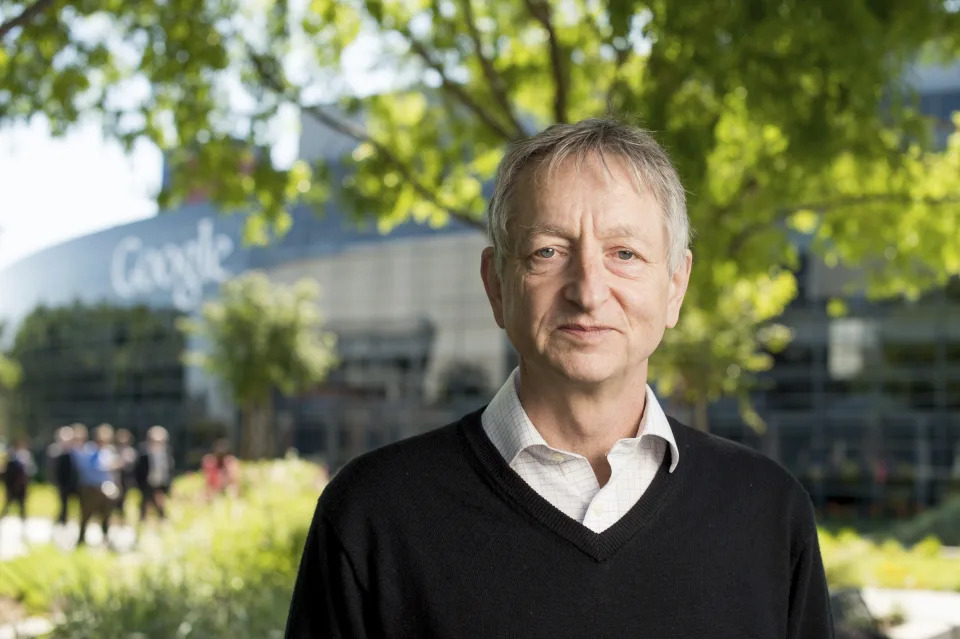

Computer scientist Geoffrey Hinton, who studies neural networks used in artificial intelligence applications, poses at Google's headquarters in Mountain View, Calif. (AP Photo/Noah Berger, File)

Hours after the artificial intelligence pioneer Geoffrey Hinton won a Nobel Prize in physics, he drove a rented car to Google's California headquarters to celebrate.

Hinton doesn't work at Google anymore. Nor did the longtime professor at the University of Toronto do his pioneering research at the tech giant.

But his impromptu party reflected AI's moment as a commercial blockbuster that has also reached the pinnacles of scientific recognition.

That was Tuesday. Then, early Wednesday, two employees of Google's AI division won a Nobel Prize in chemistry for using AI to predict and design novel proteins.

“This is really a testament to the power of computer science and artificial intelligence,” said Jeanette Wing, a professor of computer science at Columbia University.

Asked about the historic back-to-back science awards for AI work in an email Wednesday, Hinton said only: “Neural networks are the future.”

It didn't always seem that way for researchers who decades ago experimented with interconnected computer nodes inspired by neurons in the human brain. Hinton shares this year's physics Nobel with another scientist, John Hopfield, for helping develop those building blocks of machine learning.

Neural network advances came from “basic, curiosity-driven research,” Hinton said at a press conference after his win. “Not out of throwing money at applied problems, but actually letting scientists follow their curiosity to try and understand things.”

Such work started well before Google existed. But a bountiful tech industry has now made it easier for AI scientists to pursue their ideas even as it has challenged them with new ethical questions about the societal impacts of their work.

One reason why the current wave of AI research is so closely tied to the tech industry is that only a handful of corporations have the resources to build the most powerful AI systems.

“These discoveries and this capability could not happen without humongous computational power and humongous amounts of digital data,” Wing said. “There are very few companies — tech companies — that have that kind of computational power. Google is one. Microsoft is another.”

The chemistry Nobel Prize awarded Wednesday went to Demis Hassabis and John Jumper of Google’s London-based DeepMind laboratory along with researcher David Baker at the University of Washington for work that could help discover new medicines.

Hassabis, the CEO and co-founder of DeepMind, which Google acquired in 2014, told the AP in an interview Wednesday his dream was to model his research laboratory on the “incredible storied history” of Bell Labs. Started in 1925, the New Jersey-based industrial lab was the workplace of multiple Nobel-winning scientists over several decades who helped develop modern computing and telecommunications.

“I wanted to recreate a modern day industrial research lab that really did cutting-edge research,” Hassabis said. “But of course, that needs a lot of patience and a lot of support. We’ve had that from Google and it’s been amazing.”

Hinton joined Google late in his career and quit last year so he could talk more freely about his concerns about AI’s dangers, particularly what happens if humans lose control of machines that become smarter than us. But he stops short of criticizing his former employer.

Hinton, 76, said he was staying in a cheap hotel in Palo Alto, California when the Nobel committee woke him up with a phone call early Tuesday morning, leading him to cancel a medical appointment scheduled for later that day.

By the time the sleep-deprived scientist reached the Google campus in nearby Mountain View, he “seemed pretty lively and not very tired at all” as colleagues popped bottles of champagne, said computer scientist Richard Zemel, a former doctoral student of Hinton’s who joined him at the Google party Tuesday.

“Obviously there are these big companies now that are trying to cash in on all the commercial success and that is exciting,” said Zemel, now a Columbia professor.

But Zemel said what’s more important to Hinton and his closest colleagues has been what the Nobel recognition means to the fundamental research they spent decades trying to advance.

Guests included Google executives and another former Hinton student, Ilya Sutskever, a co-founder and former chief scientist and board member at ChatGPT maker OpenAI. Sutskever helped lead a group of board members who briefly ousted OpenAI CEO Sam Altman last year in turmoil that has symbolized the industry's conflicts.

An hour before the party, Hinton used his Nobel bully pulpit to throw shade at OpenAI during opening remarks at a virtual press conference organized by the University of Toronto in which he thanked former mentors and students.

“I’m particularly proud of the fact that one of my students fired Sam Altman,” Hinton said.

Asked to elaborate, Hinton said OpenAI started with a primary objective to develop better-than-human artificial general intelligence “and ensure that it was safe.”

"And over time, it turned out that Sam Altman was much less concerned with safety than with profits. And I think that’s unfortunate,” Hinton said.

In response, OpenAI said in a statement that it is “proud of delivering the most capable and safest AI systems” and that they “safely serve hundreds of millions of people each week.”

Conflicts are likely to persist in a field where building even a relatively modest AI system requires resources “well beyond those of your typical research university,” said Michael Kearns, a professor of computer science at the University of Pennsylvania.

But Kearns, who sits on the committee that picks the winners of computer science's top prize — the Turing Award — said this week marks a “great victory for interdisciplinary research” that was decades in the making.

Hinton is only the second person to win both a Nobel and Turing. The first, Turing-winning political scientist Herbert Simon, started working on what he called “computer simulation of human cognition” in the 1950s and won the Nobel economics prize in 1978 for his study of organizational decision-making.

Wing, who met Simon in her early career, said scientists are still just at the tip of finding ways to apply computing's most powerful capabilities to other fields.

“We’re just at the beginning in terms of scientific discovery using AI,” she said.

——

AP Business Writer Kelvin Chan contributed to this report.

How a subfield of physics led to breakthroughs in AI – and from there to this year’s Nobel Prize

Veera Sundararaghavan, University of Michigan

Wed, October 9, 2024

Neural networks have their roots in statistical mechanics. (BlackJack3D/iStock via Getty Images Plus)

John J. Hopfield and Geoffrey E. Hinton received the Nobel Prize in physics on Oct. 8, 2024, for their research on machine learning algorithms and neural networks that help computers learn. Their work has been fundamental in developing neural network theories that underpin generative artificial intelligence.

A neural network is a computational model consisting of layers of interconnected neurons. Like the neurons in your brain, these neurons process and send along a piece of information. Each neural layer receives a piece of data, processes it and passes the result to the next layer. By the end of the sequence, the network has processed and refined the data into something more useful.
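The layer-by-layer flow described above can be sketched in a few lines of Python. This is only an illustration: the weights, biases, layer sizes and the choice of `tanh` as the neuron's response are all made-up assumptions, not values from any real network.

```python
import math

def dense_layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of its inputs, then applies tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the data through each layer in turn, feeding each result to the next layer."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical two-layer network: 3 inputs -> 2 neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]
output = forward([1.0, 0.5, -1.0], layers)
print(output)
```

In a trained network the weights would be learned from data rather than typed in by hand.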

While it might seem surprising that Hopfield and Hinton received the physics prize for their contributions to neural networks, used in computer science, their work is deeply rooted in the principles of physics, particularly a subfield called statistical mechanics.

As a computational materials scientist, I was excited to see this area of research recognized with the prize. Hopfield and Hinton’s work has allowed my colleagues and me to study a process called generative learning for materials sciences, a method that is behind many popular technologies like ChatGPT.

What is statistical mechanics?

Statistical mechanics is a branch of physics that uses statistical methods to explain the behavior of systems made up of a large number of particles.

Instead of focusing on individual particles, researchers using statistical mechanics look at the collective behavior of many particles. Seeing how they all act together helps researchers understand the system’s large-scale macroscopic properties like temperature, pressure and magnetization.

For example, physicist Ernst Ising developed a statistical mechanics model for magnetism in the 1920s. Ising imagined magnetism as the collective behavior of atomic spins interacting with their neighbors.

In Ising’s model, there are higher and lower energy states for the system, and the material is more likely to exist in the lowest energy state.
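As a rough sketch of this idea, the snippet below computes the energy of a one-dimensional chain of spins. The chain layout, the coupling strength of 1.0 and the two example configurations are assumptions chosen for illustration; real materials are far more complicated.

```python
def ising_energy(spins, coupling=1.0):
    """Energy of a 1D spin chain: each pair of aligned neighbors lowers the energy."""
    return -coupling * sum(s * t for s, t in zip(spins, spins[1:]))

# Spins are +1 (up) or -1 (down). Both configurations are made up.
aligned = [1, 1, 1, 1, 1, 1]        # every neighbor pair agrees (magnetized)
alternating = [1, -1, 1, -1, 1, -1]  # every neighbor pair disagrees

print(ising_energy(aligned))      # lower energy
print(ising_energy(alternating))  # higher energy
```

With a positive coupling, the fully aligned chain has the lower energy, which matches the picture of a magnet settling into an ordered state.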

One key idea in statistical mechanics is the Boltzmann distribution, which quantifies how likely a given state is. This distribution describes the probability of a system being in a particular state – like solid, liquid or gas – based on its energy and temperature.
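A minimal sketch of the Boltzmann distribution follows. It assumes a toy system with three states and made-up energies, and it measures temperature in units where Boltzmann's constant equals 1.

```python
import math

def boltzmann_probabilities(energies, temperature):
    """Probability of each state: exp(-E / T), divided by the sum over all states."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Shifting by the minimum energy avoids overflow and cancels in the ratio.
    e_min = min(energies)
    weights = [math.exp(-(e - e_min) / temperature) for e in energies]
    partition = sum(weights)
    return [w / partition for w in weights]

# Hypothetical three-state system with illustrative energies.
energies = [0.0, 1.0, 2.0]
cold = boltzmann_probabilities(energies, temperature=0.1)
hot = boltzmann_probabilities(energies, temperature=100.0)
print(cold)  # almost all probability sits on the lowest-energy state
print(hot)   # the three states become nearly equally likely
```

At low temperature the system is almost certainly in its lowest-energy state, while at high temperature the higher-energy states become nearly as likely.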

Ising exactly predicted the phase transition of a magnet using the Boltzmann distribution. He figured out the temperature at which the material changed from being magnetic to nonmagnetic.

Phase changes happen at predictable temperatures. Ice melts to water at a specific temperature because the Boltzmann distribution predicts that when it gets warm, the water molecules are more likely to take on a disordered – or liquid – state.

In materials, atoms arrange themselves into specific crystal structures that use the lowest amount of energy. When it’s cold, water molecules freeze into ice crystals with low energy states.

Similarly, in biology, proteins fold into low energy shapes, which allow them to function as specific antibodies – like a lock and key – targeting a virus.

Neural networks and statistical mechanics

Fundamentally, all neural networks work on a similar principle – to minimize energy. Neural networks use this principle to solve computing problems.

For example, imagine an image made up of pixels where you only can see a part of the picture. Some pixels are visible, while the rest are hidden. To determine what the image is, you consider all possible ways the hidden pixels could fit together with the visible pieces. From there, you would choose from among what statistical mechanics would say are the most likely states out of all the possible options.

In statistical mechanics, researchers try to find the most stable physical structure of a material. Neural networks use the same principle to solve complex computing problems. (Veera Sundararaghavan)

Hopfield and Hinton developed a theory for neural networks based on the idea of statistical mechanics. Just as Ising before them modeled the collective interaction of atomic spins, Hopfield and Hinton imagined collective interactions of pixels to solve the photo problem with a neural network. They represented these pixels as neurons.

Just as in statistical physics, the energy of an image refers to how likely a particular configuration of pixels is. A Hopfield network would solve this problem by finding the lowest energy arrangements of hidden pixels.
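The sketch below shows one common textbook way to build such a network; it is an illustration under stated assumptions rather than Hopfield's exact formulation. It assumes pixels take the values +1 or -1, uses a simple Hebbian rule to set the connection strengths from a single made-up 8-pixel pattern, and keeps the visible pixels fixed while updating only the hidden ones.

```python
def train_hebbian(patterns):
    """Set connection strengths so that the stored patterns sit at low energy."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no neuron connects to itself
                    weights[i][j] += pattern[i] * pattern[j] / len(patterns)
    return weights

def energy(weights, state):
    """Hopfield energy: lower values mean a more likely pixel configuration."""
    n = len(state)
    return -0.5 * sum(
        weights[i][j] * state[i] * state[j] for i in range(n) for j in range(n)
    )

def recall(weights, state, hidden, sweeps=5):
    """Update only the hidden pixels; each update never raises the energy."""
    state = list(state)
    for _ in range(sweeps):
        for i in hidden:
            field = sum(weights[i][j] * state[j] for j in range(len(state)))
            state[i] = 1 if field >= 0 else -1
    return state

# Hypothetical stored image and a copy whose pixels 0 and 5 are unknown (guessed wrong).
pattern = [1, 1, 1, 1, -1, -1, -1, -1]
corrupted = [-1, 1, 1, 1, -1, 1, -1, -1]

weights = train_hebbian([pattern])
recovered = recall(weights, corrupted, hidden=[0, 5])
print(recovered == pattern)
print(energy(weights, corrupted), energy(weights, recovered))
```

In this toy case the network fills in the two unknown pixels and ends in a lower-energy state than the one it started from.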

However, unlike in statistical mechanics – where the energy is determined by known atomic interactions – neural networks learn these energies from data.

Hinton helped popularize a technique called backpropagation. It helps the model figure out the interaction energies between these neurons, and it underpins much of modern AI learning.

The Boltzmann machine

Building upon Hopfield’s work, Hinton imagined another neural network, called the Boltzmann machine. It consists of visible neurons, which we can observe, and hidden neurons, which help the network learn complex patterns.

In a Boltzmann machine, you can determine the probability that the picture looks a certain way. To figure out this probability, you can sum up all the possible states the hidden pixels could be in. This gives you the total probability of the visible pixels being in a specific arrangement.
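The summation described above can be written out directly for a very small network. The sketch assumes a restricted Boltzmann machine (connections only between visible and hidden neurons), neurons that are either 0 or 1, a temperature of 1, and made-up weights; the brute-force sums are only practical for a handful of neurons.

```python
import itertools
import math

def energy(v, h, W, a, b):
    """Energy of a joint visible/hidden state; lower energy means more likely."""
    visible_term = sum(a_i * v_i for a_i, v_i in zip(a, v))
    hidden_term = sum(b_j * h_j for b_j, h_j in zip(b, h))
    coupling = sum(
        v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h))
    )
    return -(visible_term + hidden_term + coupling)

def unnormalized_weight(v, W, a, b):
    """Sum the Boltzmann factor exp(-E) over every possible hidden state."""
    return sum(
        math.exp(-energy(v, h, W, a, b))
        for h in itertools.product([0, 1], repeat=len(b))
    )

def visible_probability(v, W, a, b):
    """Total probability of one visible arrangement, normalized over all of them."""
    partition = sum(
        unnormalized_weight(u, W, a, b)
        for u in itertools.product([0, 1], repeat=len(a))
    )
    return unnormalized_weight(v, W, a, b) / partition

# Hypothetical network: 3 visible pixels, 2 hidden neurons, illustrative weights.
W = [[2.0, -1.0], [2.0, -1.0], [-1.0, 2.0]]
a = [0.0, 0.0, 0.0]
b = [0.0, 0.0]

p_110 = visible_probability((1, 1, 0), W, a, b)
p_101 = visible_probability((1, 0, 1), W, a, b)
print(p_110, p_101)
```

With these weights, pixels 0 and 1 tend to switch on together, so the arrangement `(1, 1, 0)` comes out more probable than `(1, 0, 1)`.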

My group has worked on implementing Boltzmann machines in quantum computers for generative learning.

In generative learning, the network learns to generate new data samples that resemble the data the researchers fed the network to train it. For example, it might generate new images of handwritten numbers after being trained on similar images. The network can generate these by sampling from the learned probability distribution.
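One standard way to draw such samples from a Boltzmann machine is Gibbs sampling, sketched below. The sketch assumes a restricted Boltzmann machine with 0/1 neurons and uses made-up weights in place of weights learned from training data, so the samples only illustrate the mechanics.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def gibbs_sample(W, a, b, steps, rng):
    """Alternate between sampling hidden and visible neurons, then return the visible ones."""
    n_visible, n_hidden = len(a), len(b)
    v = [rng.randint(0, 1) for _ in range(n_visible)]  # random starting image
    for _ in range(steps):
        # Each hidden neuron switches on with a probability set by the visible pixels.
        h = [
            1 if rng.random() < sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(n_visible))) else 0
            for j in range(n_hidden)
        ]
        # Each visible pixel switches on with a probability set by the hidden neurons.
        v = [
            1 if rng.random() < sigmoid(a[i] + sum(W[i][j] * h[j] for j in range(n_hidden))) else 0
            for i in range(n_visible)
        ]
    return v

# Hypothetical "learned" parameters: 3 visible pixels, 2 hidden neurons.
W = [[2.0, -1.0], [2.0, -1.0], [-1.0, 2.0]]
a = [0.0, 0.0, 0.0]
b = [0.0, 0.0]

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [gibbs_sample(W, a, b, steps=50, rng=rng) for _ in range(20)]
print(samples[:5])
```

Each returned list is one newly generated pattern of visible pixels; arrangements with lower energy show up more often across many samples.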

Generative learning underpins modern AI – it’s what allows the generation of AI art, videos and text.

Hopfield and Hinton have significantly influenced AI research by leveraging tools from statistical physics. Their work draws parallels between how nature determines the physical states of a material and how neural networks predict the likelihood of solutions to complex computer science problems.

This article is republished from The Conversation, a nonprofit, independent news organization bringing you facts and trustworthy analysis to help you make sense of our complex world. It was written by: Veera Sundararaghavan, University of Michigan


Veera Sundararaghavan receives external funding for research unrelated to the content of this article.
