Climate costs lowest if warming is limited to 2 degrees Celsius

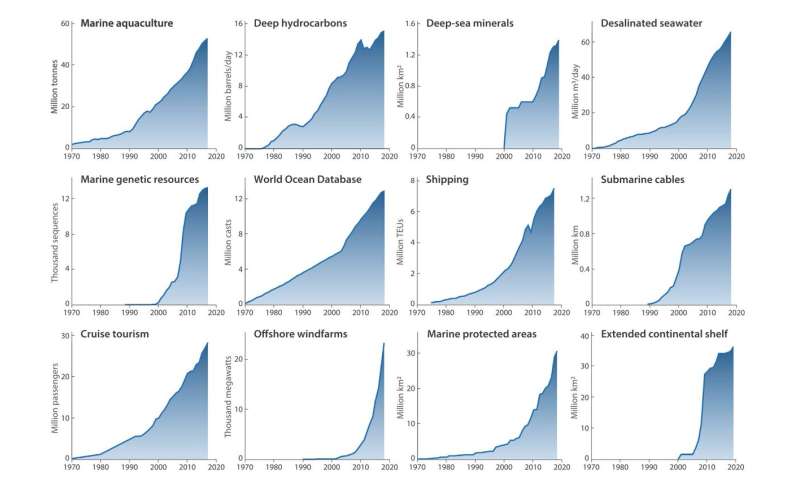

Using computer simulations of a model by U.S. Nobel Laureate William Nordhaus, researchers have weighed climate damage from increasing weather extremes, decreasing labor productivity and other factors against the costs of cutting greenhouse gas emissions by phasing out coal and oil. Interestingly, the most economically cost-efficient level of global warming turns out to be 2 degrees Celsius, the level to which more than 190 nations agreed in the Paris Climate Agreement. So far, however, CO2 reductions promised by nations worldwide are insufficient to reach this goal.

"To secure economic welfare for all people in these times of global warming, we need to balance the costs of climate change damage and those of climate change mitigation. Now, our team has found what we should aim for," says Anders Levermann from the Potsdam Institute for Climate Impact Research (PIK) and Columbia University's LDEO, New York, head of the team conducting the study. "We did a lot of thorough testing with our computers. And we have been amazed to find that limiting the global temperature increase to 2 degrees Celsius, as agreed in the science-based but highly political process leading to the 2015 Paris Agreement, indeed emerges as economically optimal."

Striving for economic growth

Climate policies such as the replacement of coal-fired power plants by wind turbines and solar energy or the introduction of CO2 pricing entail economic costs. The same is true for climate damage. Cutting greenhouse gas emissions clearly reduces the damage, but so far, observed temperature-induced losses in economic production have not really been accounted for in computations of economically optimal policy pathways. The researchers have now done that. They fed up-to-date research on economic damages driven by climate change effects into one of the most renowned computer simulation systems, the Dynamic Integrated Climate-Economy model developed by the Nobel laureate in economics William Nordhaus and used in the past for U.S. policy planning. The computer simulation is trained to strive for economic growth.
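The balancing act described above can be illustrated with a toy calculation. The sketch below is not the Dynamic Integrated Climate-Economy model and does not use any figures from the study: the quadratic cost curves, the coefficients and the function names are all hypothetical, chosen only to show how adding a rising damage curve to a falling mitigation-cost curve produces a cheapest level of warming somewhere in between.

```python
def total_cost(warming, damage_coeff, mitigation_coeff, baseline_warming):
    """Toy total cost at a given warming level (arbitrary units).

    Assumption: damage grows with the square of warming, and mitigation
    cost grows with the square of the warming avoided relative to a
    no-policy baseline. Both shapes are illustrative only.
    """
    damage = damage_coeff * warming ** 2
    mitigation = mitigation_coeff * (baseline_warming - warming) ** 2
    return damage + mitigation


def cheapest_warming(damage_coeff, mitigation_coeff, baseline_warming, steps=4500):
    """Grid-search the warming level between 0 and the baseline with the lowest total cost."""
    candidates = [baseline_warming * i / steps for i in range(steps + 1)]
    return min(
        candidates,
        key=lambda w: total_cost(w, damage_coeff, mitigation_coeff, baseline_warming),
    )


# Hypothetical coefficients; the resulting number carries no real-world meaning.
optimum = cheapest_warming(damage_coeff=2.0, mitigation_coeff=1.0, baseline_warming=4.5)
```

In this toy setup the optimum depends entirely on the made-up coefficients. The point of the study is that, with damage estimates based on observations, the minimum of the real cost curves lands near 2 degrees Celsius.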

"It is remarkable how robustly reasonable the temperature limit of more or less 2 degrees Celsius is, standing out in almost all the cost-curves we've produced," says Sven Willner, also from PIK and an author of the study. The researchers tested a number of uncertainties in their study. For instance, they accounted for the tension between people's preference for consumption today over consumption tomorrow and the notion that future generations should not be left with fewer means for consumption. The result that limiting the temperature increase to 2 degrees Celsius is the most cost-efficient option also held across the full range of possible climate sensitivities, that is, the amount of warming that results from a doubling of CO2 in the atmosphere.
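To make the notion of climate sensitivity concrete, here is a minimal sketch using the common logarithmic approximation, in which each doubling of the CO2 concentration adds the same amount of long-term warming. The function name, the pre-industrial reference of 280 ppm and the example sensitivity of 3 degrees Celsius are assumptions for illustration, not values taken from the study.

```python
import math


def equilibrium_warming(co2_ppm, sensitivity_c, preindustrial_ppm=280.0):
    """Approximate long-term warming in degrees Celsius for a CO2 concentration.

    Assumption: warming scales with the base-2 logarithm of the CO2 ratio,
    so one doubling relative to the reference yields exactly `sensitivity_c`.
    """
    return sensitivity_c * math.log2(co2_ppm / preindustrial_ppm)


# Doubling CO2 from 280 ppm to 560 ppm with an assumed sensitivity of 3 degrees Celsius.
warming_at_doubling = equilibrium_warming(560.0, sensitivity_c=3.0)
```

A higher or lower sensitivity simply scales the warming for the same concentration, which is why the researchers checked that their result holds across the whole plausible range.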

"The world is running out of excuses for doing nothing"

"Since we have already increased the temperature of the planet by more than one degree, 2 degrees Celsius requires fast and fundamental global action," says Levermann. "Our analysis is based on the observed relation between temperature and economic growth, but there could be other effects that we cannot anticipate yet." Changes in the response of societies to climate stress—especially a violent flare-up of smoldering conflicts or the crossing of tipping points for critical elements in the Earth system—could shift the cost-benefit analysis toward even more urgent action.

"The world is running out of excuses to justify sitting back and doing nothing—all those who have been saying that climate stabilization would be nice but is too costly can see now that it is really unmitigated global warming that is too expensive," Levermann concludes. "Business as usual is clearly not a viable economic option anymore. We either decarbonize our economies or we let global warming fire up costs for businesses and societies worldwide."

The study is published in Nature Communications.

More information: Nicole Glanemann, Sven N. Willner, Anders Levermann (2020): Paris Climate Agreement passes the cost-benefit test. Nature Communications. DOI: 10.1038/s41467-019-13961-1