By John Rieder

NOVEMBER 1, 2019

THE FUTURE HAS invaded the present. Or, rather, it has merged into the present, constituting a new “hyper now” that, far from intensifying our material presence in the world, more than ever before abstracts and alienates the contingencies of human labor, time, and agency, through the systematic imperatives of contemporary capitalism. The force driving this invasion is speculative finance, embodied most tellingly in the financial instruments called derivatives. Derivatives are essentially bets on the future prices of things, and the traffic in derivatives, enabled by digital technology and algorithmically driven trading programs that compress and volatilize time (while reducing place to a mere anachronism in the financial market), has now overleaped material reality to such an extent that, as one commentator estimates, it would take the entire resources of at least four Earth-sized planets to actually pay out the fictional capital currently invested in them. The effect of this hypertrophied financial speculation is to evacuate the circuit of capital production that runs from investment of money to production of commodities to realization of profit — schematically represented, in Marx’s rendering, as M-C-M’ — to the seemingly magical birth of profit from money itself (M-M’), where capital seems to increase through mere circulation.

Thus runs the organizing narrative of this hefty special issue of New Centennial Review, co-edited by David Higgins and Hugh C. O’Connell. “Speculative Finance/Speculative Fiction” is made up of 11 substantial critical essays and one piece of “speculative financial fiction” addressing the topic, as announced by the editors, of “the contemporary era’s characteristic shift from production to financialization and the consequences of this shift for the social, political, and aesthetic spheres.” To the economic premise that 21st-century financial speculation marks a decisive change in the overall character of capital accumulation, the editors add a cultural corollary: that the apparent obsolescence of actual commodities in the finance sector’s generation of profits “creates new demands on representation, resulting in a crisis for contemporary narrative.” The special issue’s project is to explore that cultural crisis by “mapping the global systemic effects [speculative finance] creates as well as the ways in which it colonizes the individual at the level of everyday life.”

Following the editors’ introduction, the collection leads off with an essay on “Promissory Futures: Reality and Imagination in Finance and Fiction” by well-established and highly respected scholar Sherryl Vint. In a kind of keynote to the rest of the volume, Vint advances a thesis about the opportunities speculative fiction affords in relation to the fictions promulgated by speculative finance. Vint’s essay more than any other in the volume delves into the particular devices of what might be called the storytelling practices of contemporary financial speculation. These include mark-to-market accounting, which “allows a corporation to count projected or future profit (after purchasing an asset; based on ongoing research) as ‘real’ profit when calculating the present worth of its stock,” and the inclusion of “forward-looking statements,” which are projections of expected income based on the “plans and objectives of management for future operations” in the prospectuses that corporations furnish investors. Such practices, Vint proposes, can be thought of as “fictions, speculations of the kind we find in speculative fiction, extrapolations of possible futures based on elements in the present.” Indeed, she points out, in some industries, such as genomics research, corporate value is based far more heavily on the promise of future information and its possible applications than on the present state of affairs.

But, Vint notes, the extrapolated futures of speculative finance suffer from a certain narrowness of vision. Primary among the limiting assumptions underlying these practices is “the inviolable importance of shareholder value,” such that the duty of maximizing it allows traders to “continue to believe in their narrative” in spite of the widespread human immiseration and environmental degradation that neoliberal restructuring of the global economy has patently exacerbated. Thus the chronic indebtedness of much of the world and the growing inequality of the distribution of wealth are casually accepted as inevitable or brushed aside as outside the realm of corporate responsibility.

In contrast to such speculative finance, speculative fiction offers the possibility of imagining the future in different ways, “making visible what is expelled from this narrow pursuit of profit, working against the normalizing effects of the discourses of speculative finance.” Speculative fiction can envision futures shaped by meaningful social change rather than being limited to the assessment of financial risk and probability within the narrow purview of corporate forecasting. “The speculative finance iconography,” says Vint, “is all setting, images of what a future might look like, and graphs that show possibilities for increased profit. […] In contrast, speculative fiction remains propelled by characters,” so that we are made to think through how “patterns of daily life, from ways of earning a living to kinds of family structures,” might be performed and experienced differently. It is not, she is quick to point out, that all speculative fiction achieves or even attempts a critical vision of the future, but rather that speculative fiction has “a greater potential to be oriented toward the genuinely transformative rather than the predictably profitable.” One possible function of criticism at the present time is to encourage writers to realize that potential.

Each of the other 10 critical essays in “Speculative Finance/Speculative Fiction” focuses on one or a small set of fictional narratives through the lenses of financial and fictional speculation. The major 21st-century speculative fiction under inspection includes the novels Pattern Recognition (2003) by William Gibson, Jerusalem (2016) by Alan Moore, New York 2140 (2017) by Kim Stanley Robinson, and The Dervish House (2010) by Ian McDonald, along with Frank Herbert’s 1965 classic, Dune. Film and TV texts include the Twilight (2008–2012) and The Hunger Games (2012–2015) series, Duncan Jones’s Moon (2009) and Source Code (2011), Andrew Niccol’s In Time (2011), an episode of the anthology series Black Mirror (2011–), and Christopher Nolan’s Interstellar (2014). The basic evaluative options are that a piece of fiction successfully offers critical and potentially transformative insight into late capitalist society or that it falls prey to the logic underpinning speculative finance even as, in many cases, it appears to criticize its practices. Most of the readings discern both of these possibilities in the same texts: in other words, sometimes the fictions are held to successfully resist neoliberalism’s insistence that “there is no alternative” to the current status quo, while at other times they are said to act as its unintentionally symptomatic exemplars. All of the essays are well written, intelligently conceived, and coherently argued, and all of them offer striking insights that will help readers better appreciate the particular texts they examine as well as the cultural moment we are living through.

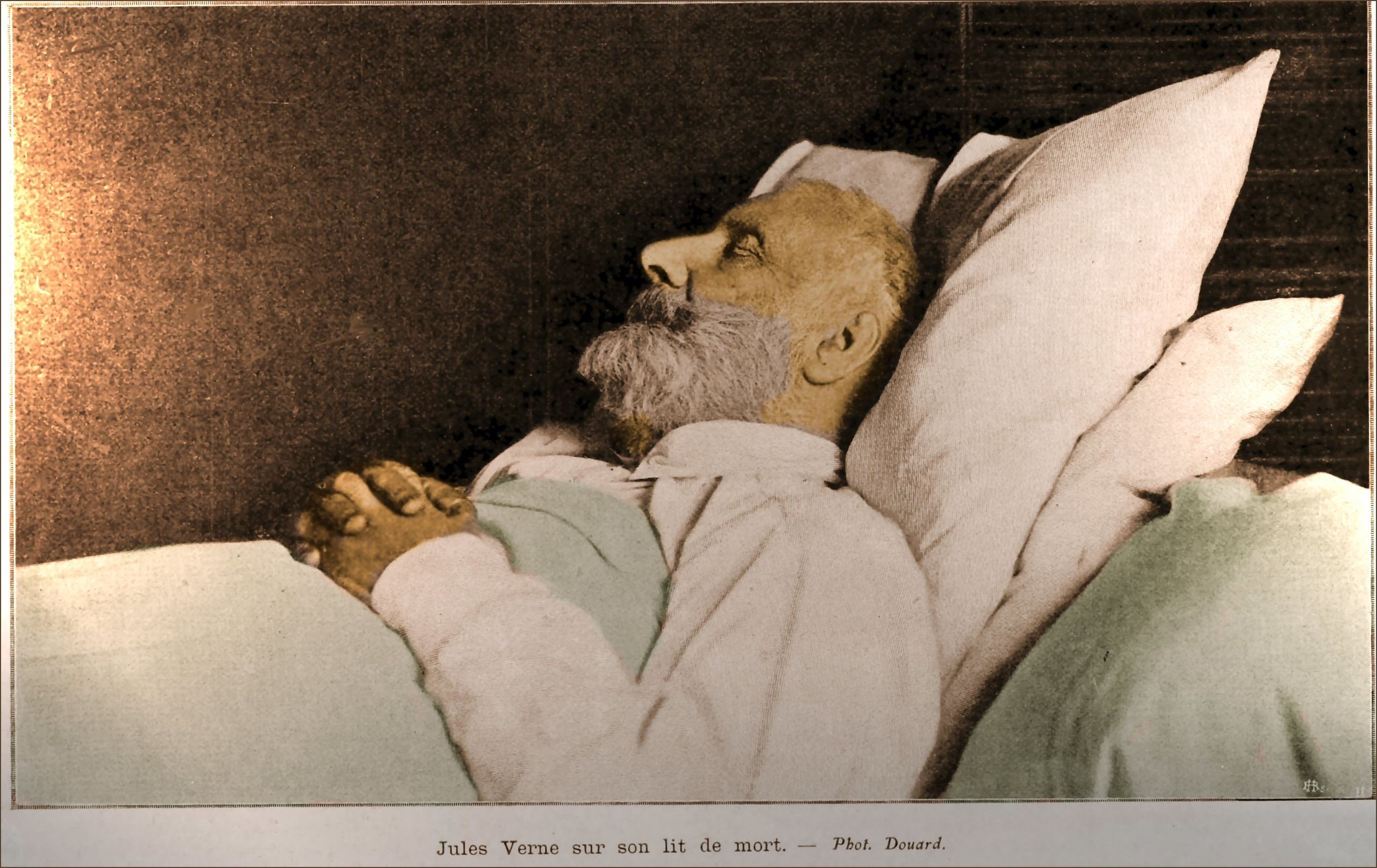

One set of texts left out of the previous summary is the group addressed in Steve Asselin’s essay on three late-19th-century science-fictional short stories about ecological catastrophe, by Robert Barr, George C. Griffith, and John Mills. While he notes the same pattern of critical acumen and ideological limitation in these stories as many of the other readings do, Asselin’s essay adds to the collection a welcome reminder that the boom-and-bust cycles of capitalism, the unsustainable fantasy of eternal economic growth, and the environmental irresponsibility that fantasy entails were indeed objects of anxiety and criticism long before the late capitalist developments discussed elsewhere in the volume.

Asselin recounts a moment delightfully, if grotesquely, resonant with contemporary climate change denial in Robert Barr’s “Within an Ace of the End of the World” (1900). A new industrial technology extracts nitrogen from the air to be used as agricultural fertilizer, to the vast enrichment of its inventor and the monopolistic corporation he founds. But it also produces an excessively oxygen-rich atmosphere that causes everyone breathing it to be seized by an irrational, baseless euphoria that renders them incapable of dealing with or even recognizing the ongoing environmental disaster — which culminates in the over-oxygenated air catching fire and killing almost everyone on earth. Asselin concludes:

Just as the narratives I discussed illustrated a period of prosperity upon the exploitation of a new resource followed by a crash reified in the natural world, so too would I suggest that, from a historical perspective, our exploitation of fossil fuels has been one long bubble, and the onrushing threat of climate change is the consequent crash.

The least homogeneous ingredient in Higgins and O’Connell’s thoughtful collection is a piece that the editors describe as a “speculative theory-fiction interlude.” “The Great Dividuation” by Joel E. Mason (with Michael Hornblow and anique yael vered) is difficult to categorize or even to describe. It is a loosely moored narrative punctuated by verse, some of which turns out to have been sent back by the narrator from where he arrives at the end of the story “into the middle of the text / without explanation.” Indeed, a deficit of explanation is one of the piece’s core strategies, as it immerses the reader in a surreal landscape that can only gradually be comprehended as a kind of post-cyberpunk future structured by virtuality, corporate power, contracts, and credit exchanges. The story climaxes with the narrator and his companion stepping through a portal — a device neither foreshadowed, described, nor explained — and undergoing a transformation that strikes me as a kind of ode to accelerationism, the idea that the only way out of the current impasses of our society is to go through them:

We step through the portal, arm in arm, and I drift away in 10,000 different directions, my vision, my sense, my sight all dividualize. all of a sudden, I distend. I am broken, loosed, freed, bound to movement, all at the same time. i am north, south, east, west. i am bird wing and bottom feeder. bacon shoulder. i am the hedge, the short, the spread, the bet — all in not one, not all in a many-fingered one. we all capsize and spread. [sic]

The fantasy, I take it, is that of attaining a subject position structured by the unmappable space and temporal dissolution of global finance but free of its economic grasp: “[T]he portal was power and we built it so no one and everyone owned it.” I do not think this is necessarily what Vint has in mind when she praises speculative fiction’s ability to help us imagine different “patterns of daily life,” but providing what’s asked for in quite unexpected ways has always been thought a sign of artistic success, hasn’t it?

Nonetheless, the distended, “dividuated” subject imagined in the theory/fiction interlude can be contrasted with a more common-sense insistence on the material embodiment of value in several of the critical essays. The missing component in speculative finance’s transformation of the circuit of capital from M-C-M’ to M-M’ (that is, the addition of value that takes place in the production of commodities) is crucially dependent on the contribution of labor power, a quantity measurable in terms of the socially necessary time spent on a task. This is the true basis of value as such, according to Marx and the political economists whose work he built upon.

Joe Conway explores the film In Time precisely because it imagines a future in which that equation of labor power with value has been literalized by turning time itself into the society’s currency — a currency immediately embodied by determining the lifespan of the individual holding it. Similarly, David P. Pierson analyzes the role of the clones and avatars in the films Moon and Source Code as ways to extend into speculative infinity the expropriation of the workers’ labor power. Hugh C. O’Connell’s reading of McDonald’s Dervish House emphasizes the way the corporate logic depicted there attempts to turn humans into machines while making machines perform the tasks of humans, thus eliding the difference between what Marx called constant and variable capital and evacuating labor power as such from the circuit of capital reproduction. And, in the final essay in the volume, Marcia Klotz analyzes the recent popularity of time-loop narratives, where an intervention from the future solves a problem (in the case of Interstellar, a scientific problem) in the present. According to Klotz,

What gets elided in the process […] is the moment of knowledge production, the actual labor of scientific discovery […] The elision of that moment of production captures what is at stake in the increasing reliance on futures trading — the broad shift in wealth production from M-C-M’ to finance’s foreshortened formula of M-M’.

She concludes that “the contemporary economic emphasis on finance is unsustainable because its growth no longer fosters but ultimately serves to undermine the value-producing economic activity on which it is based.”

As fictional capital accumulates its vast but unrealizable profits, the material world spirals into irredeemable debt — yet another version of the self-destructive dilemma that has produced global warming. “Speculative Finance/Speculative Fiction” does not issue any explicit call to political contestation of the contemporary political-economic order, but it clearly outlines why the survival of our civilization may depend upon it.

¤

John Rieder is Professor Emeritus of English at the University of Hawaiʻi at Mānoa.