Soy scaffolds: Breakthrough in cultivated meat production

Researchers from the Technion-Israel Institute of Technology and Aleph Farms have achieved a breakthrough in the production of cultivated meat grown outside an animal's body. Findings published today in Nature Food show that soy protein, which is readily available and inexpensive, can be used as a scaffold for growing bovine tissue.

The innovative technology, in which cultured meat is grown on scaffolds made of soy protein, has been spearheaded over the past few decades by Professor Shulamit Levenberg, dean of Technion's Faculty of Biomedical Engineering. It was originally intended for medical applications, in particular tissue engineering for transplants in humans.

There are several incentives for developing cultured meat. These include environmental damage caused by the meat-production industry, increased use of antibiotics that accelerates the growth of drug-resistant bacteria, ethical reservations about the suffering of animals during the meat production process, and the industry's detrimental ecological impact due to the intensive use of natural resources.

Aleph Farms is the first company to successfully grow slaughter-free steaks, using original technology developed by Prof. Levenberg and her team. Prof. Levenberg is the company's founding partner and chief scientist, and the current research was supervised by doctoral student Tom Ben-Arye and Dr. Yulia Shandalov.

The article in Nature Food presents an innovative process that, in only three to four weeks, grows cultured meat tissue resembling beef in texture and taste. The process is inspired by nature, meaning that the cells grow in a controlled setting similar to the way they would grow inside a cow's body.

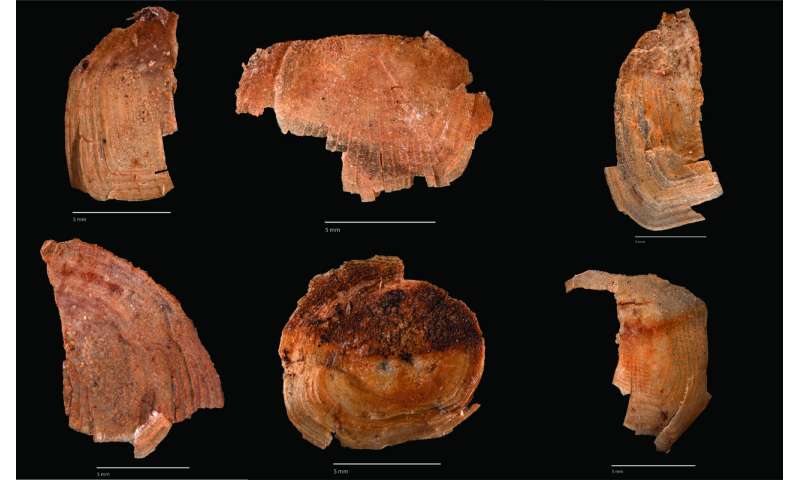

The cells grow on a scaffold that replaces the extracellular matrix (ECM) found in animals. Since this is a food product, the scaffold must be edible, and therefore only edible alternatives were considered. Soy protein was selected as the scaffold on which the cells adhere and proliferate with the help of myogenesis-related growth factors, much as in the tissue engineering technology developed by Prof. Levenberg.

Soy protein, an inexpensive byproduct obtained during the production of soy oil, is readily available and rich in protein. It is a porous material, and its structure promotes cell and tissue growth. Soy protein's tiny holes are suitable for cell adherence, division, and proliferation. It also has larger holes that transmit oxygen and nutrients essential for building muscle tissue. Furthermore, soy protein scaffolds for growing cultured meat can be produced in different sizes and shapes, as required.

The cultured meat in this research underwent testing that confirmed its resemblance to slaughtered steak in texture and taste. According to Prof. Levenberg, "We expect that in the future it will be possible to also use other vegetable proteins to build the scaffolds. However, the current research using soy protein is important in proving the feasibility of producing meat from several types of cells on plant-based platforms, which increases its similarity to conventional bovine meat."

More information: Tom Ben-Arye et al. Textured soy protein scaffolds enable the generation of three-dimensional bovine skeletal muscle tissue for cell-based meat, Nature Food (2020). DOI: 10.1038/s43016-020-0046-5