The climate project that changed how we understand extreme weather

When a handful of scientists tried to publish rapid research into the role of climate change in record rainfall that lashed Britain in 2015, they were told their high-speed approach was "not science".

Fast forward to 2021.

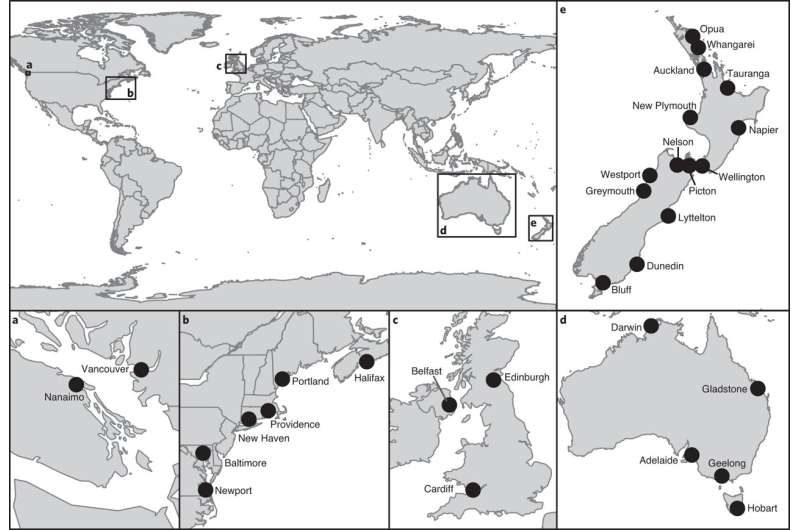

As extreme heat scorched North America, the same scientists from the World Weather Attribution (WWA) group concluded that the record-shattering temperatures would have been "virtually impossible" without human-caused climate change.

This time people paid attention.

The finding made headlines worldwide, and news stories replaced vague references to the impact of global heating on extreme weather with precise details.

And that was exactly the idea of WWA, a network of scientists who wanted to shift understanding of how climate change impacts the real world.

"We wanted to change the conversation, but we never expected that it would be so successful," said climatologist Friederike Otto, who conceived WWA with Dutch scientist Geert Jan van Oldenborgh in 2014.

In September, Otto and van Oldenborgh, who worked for the Royal Netherlands Meteorological Institute (KNMI), were named among Time magazine's 100 most influential people of 2021.

Their work "means that people reading about our accelerating string of disasters increasingly get the most important information of all: it's coming from us", Time said.

Before he died from cancer last week, van Oldenborgh responded with characteristic modesty.

"We never aimed to be influential, just give scientifically defensible answers to questions how climate change influences extreme weather," he tweeted.

Otto said van Oldenborgh, who would have been 60 on Friday, had a "very strong moral compass" to do science for the good of society, particularly for those most vulnerable to climate change.

"I would be really hard pressed to think of anyone of his generation who has done more, and more important, science," said Otto, a lead author in the UN's Intergovernmental Panel on Climate Change.

"But he was so laid back and did not have an inflated ego that I don't think many people recognise this."

Frustration

The WWA's revolutionary approach allows scientists, for the first time, to link an individual weather event to manmade warming within days or weeks of it happening.

The beginnings of extreme weather attribution can be traced back to 2004, when a British study in the journal Nature found that the punishing European heatwave the previous year was made more likely by climate change.

But by the time this type of research had passed peer review and been published, months had often gone by since the event.

So, when confronted with an extreme heatwave or a ferocious storm, scientists and the media were reluctant to blame it specifically on human-caused heating.

It was "very frustrating", said Otto.

In one of their earliest studies, the WWA team looked at record rainfall in Britain from Storm Desmond in 2015 and found climate change aggravated the flood hazard.

But the paper they subsequently submitted to a scientific journal was rejected.

"There were lots of people in the scientific community saying 'This is too fast. This is not science'," she said.

A few years later, they revisited the research and were able to publish it with the same numbers.

Off the scale

To investigate whether climate change plays a role in an event, WWA compares the likelihood of such weather in today's climate, after about 1.2 degrees Celsius of global warming since the mid-1800s, with its likelihood in a simulated climate without that heating.

They also work with local experts to assess exposure and vulnerability, as well as decisions taken on the ground, such as evacuation orders.

The Red Cross was an early partner, as was the US-based science organisation Climate Central, which provided some funding.

WWA has now published peer-reviewed methods and shown that rapid attribution can be an "operational activity", said Robert Vautard, also an IPCC lead author and director of France's Pierre-Simon Laplace Institute.

"You don't publish a paper each time you do a weather forecast," he told AFP.

But when it came to a heatwave in western Canada and the northwestern US in June, temperatures went "off the scale", he added.

The Canadian village of Lytton was almost completely destroyed by fire days after it set the national temperature record of 49.6C.

WWA concluded that in today's climate, it was a once-in-a-thousand-year event.
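For readers curious about the arithmetic behind such statements, here is a minimal Python sketch. It assumes the commonly used probability ratio, which is the chance of an event in today's climate divided by its chance in a simulated climate without human-caused warming. It also treats a return period as the inverse of an annual probability, so a once-in-a-thousand-year event has about a 0.1% chance of occurring in any given year. The function names and the counterfactual probability in the example are hypothetical illustrations, not WWA's code or results.

```python
"""Illustrative attribution arithmetic (hypothetical, not WWA's own code)."""


def annual_probability(return_period_years: float) -> float:
    """Chance of the event in any single year, given its return period."""
    if return_period_years <= 0:
        raise ValueError("return period must be positive")
    return 1.0 / return_period_years


def probability_ratio(p_today: float, p_without_warming: float) -> float:
    """How many times more likely the event is in today's climate.

    An infinite ratio means the event is effectively impossible in the
    simulated climate without human-caused warming.
    """
    if p_without_warming == 0:
        return float("inf")
    return p_today / p_without_warming


if __name__ == "__main__":
    # Today's climate: a once-in-a-thousand-year event.
    p_today = annual_probability(1_000)
    # Hypothetical counterfactual: the same event once in 100,000 years.
    p_without = annual_probability(100_000)

    print(f"Annual chance today: {p_today:.1%}")
    print(f"Probability ratio: {probability_ratio(p_today, p_without):.0f}")
```

A ratio that grows without bound as the counterfactual probability approaches zero is one way to read a finding that an event would have been "virtually impossible" without climate change.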

There are lingering questions, such as whether some new effect made the heatwave so extreme.

"Crossing a tipping point, if you like," said Sarah Kew, who oversaw the research with fellow KNMI scientist Sjoukje Philip.

At the time, van Oldenborgh said the heatwave was something "nobody thought possible".

He continued to work even from his hospital bed, Kew said, because he wanted to pass on his knowledge.

With extreme events accelerating, WWA scientists insist they will continue the work.

"Everyone knows that we have a big gap now," said Philip.

"But everyone is also willing to try to fill this gap together."