UNICORN TECH

Compact Fusion Power Plant Concept Uses State-of-the-Art Physics To Improve Energy Production

The Compact Advanced Tokamak (CAT) is a potentially economical solution for fusion energy production that takes advantage of advances in simulation and technology. Credit: Image courtesy of General Atomics. Tokamak graphic modified from F. Najmabadi et al., The ARIES-AT advanced tokamak, Advanced technology fusion power plant, Fusion Engineering and Design, 80, 3-23 (2006).

Tokamak fusion power plants use magnetic fields to hold a ball of current-carrying gas (called a plasma). This creates a miniature sun that generates energy through nuclear fusion. The Compact Advanced Tokamak (CAT) concept uses state-of-the-art physics models to potentially improve fusion energy production. The models show that by carefully shaping the plasma and the distribution of current within it, fusion plant operators can suppress the turbulent eddies that cause heat loss. Suppressing these eddies would enable operators to achieve higher pressures and fusion power with lower current. This advance could help achieve a state where the plasma sustains itself and drives most of its own current.
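One common way to express "more pressure for less current" is the normalized beta used in tokamak physics. The toroidal beta, β = 2μ₀⟨p⟩/B², compares plasma pressure with magnetic pressure, and the normalized beta, β_N = β[%]·a[m]·B[T]/I_p[MA], scales that ratio by the plasma current. The minimal sketch below computes both; the pressure, field, radius, and current values are hypothetical round numbers chosen for illustration, not parameters from the CAT study.

```python
import math

MU0 = 4e-7 * math.pi  # vacuum permeability, T*m/A


def toroidal_beta(pressure_pa: float, b_tesla: float) -> float:
    """Plasma pressure divided by magnetic pressure B^2 / (2*mu0); dimensionless."""
    return 2.0 * MU0 * pressure_pa / b_tesla**2


def normalized_beta(beta: float, minor_radius_m: float, b_tesla: float, current_ma: float) -> float:
    """Normalized beta: beta in percent, times a [m] and B [T], divided by I_p [MA]."""
    return 100.0 * beta * minor_radius_m * b_tesla / current_ma


# Hypothetical operating point, for illustration only (not CAT design values).
pressure = 5.0e5  # volume-averaged plasma pressure, Pa
field = 7.0       # toroidal magnetic field, T
radius = 1.0      # minor radius, m

beta = toroidal_beta(pressure, field)
for current in (8.0, 6.0):  # plasma current, MA
    beta_n = normalized_beta(beta, radius, field, current)
    print(f"I_p = {current:.1f} MA: beta = {100 * beta:.2f}%, beta_N = {beta_n:.2f}")
```

At fixed pressure and field, the lower-current case has the higher β_N, so sustaining a high β_N is one way of stating the goal of holding high pressure with less current.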

In this approach to tokamak reactors, the improved performance at reduced plasma current reduces stress and heat loads. This alleviates some of the engineering and materials challenges facing fusion plant designers. Higher pressure also strengthens the so-called bootstrap current, an effect in which the motion of particles in the plasma naturally generates the current the plasma requires. This greatly reduces the need for expensive current drive systems that sap a fusion plant’s potential electric power output. It also enables a stationary “always-on” configuration. The resulting plants suffer less stress during operation than those based on typical pulsed approaches to fusion power, enabling smaller, less expensive power plants.

Over the past year, the Department of Energy’s (DOE) Fusion Energy Sciences Advisory Committee and the National Academies of Sciences, Engineering, and Medicine have released roadmaps calling for the aggressive development of fusion energy in the United States. Researchers believe that achieving that goal requires more efficient and economical approaches to creating fusion energy than currently exist. To create the CAT concept, researchers developed novel reactor simulations that draw on the latest physics understanding of plasmas to improve performance. They combined state-of-the-art theory validated at the DIII-D National Fusion Facility with leading-edge computing on the Cori supercomputer at the National Energy Research Scientific Computing Center. These simulations identified a path to a higher-performance, largely self-sustaining configuration that confines energy more efficiently than typical pulsed configurations, allowing a plant to be built at reduced scale and cost.

Reference: “The advanced tokamak path to a compact net electric fusion pilot plant” by R.J. Buttery, J.M. Park, J.T. McClenaghan, D. Weisberg, J. Canik, J. Ferron, A. Garofalo, C.T. Holcomb, J. Leuer, P.B. Snyder and the AToM Project Team, 19 March 2021, Nuclear Fusion.

DOI: 10.1088/1741-4326/abe4af

This work was supported by the Department of Energy Office of Science, Office of Fusion Energy Sciences, and drew on the DIII-D National Fusion Facility, a DOE Office of Science user facility, and the AToM Scientific Discovery through Advanced Computing project.

Integrating hot cores and cool edges in fusion reactors

Future fusion reactors face a conundrum: they must maintain a plasma core that is hotter than the surface of the sun without melting the walls that contain the plasma. Fusion scientists refer to this challenge as "core-edge integration." Researchers working at the DIII-D National Fusion Facility at General Atomics have recently tackled this problem in two ways: the first aims to make the fusion core even hotter, while the second focuses on cooling the material that reaches the wall. Protecting the plasma-facing components could make them last longer, making future fusion power plants more cost-effective.

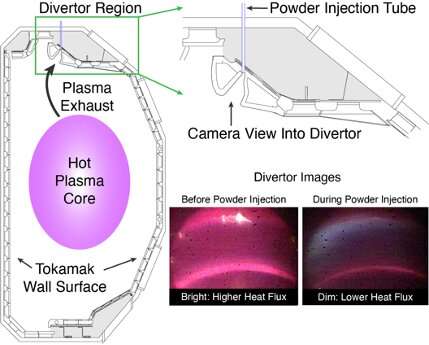

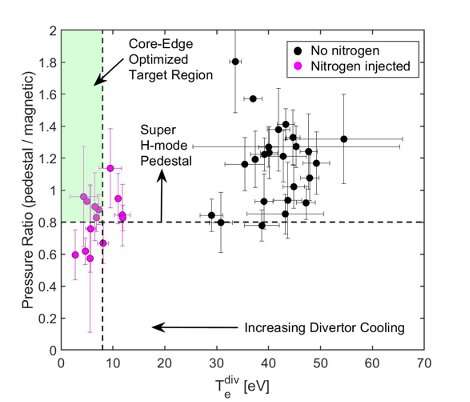

Just like the more familiar internal combustion engine, vessels used in fusion research must exhaust heat and particles during operation. Like a car's exhaust pipe, this exit path is designed to handle high heat and material loads but only within certain limits. One key strategy for reducing the heat coming from the plasma core is to inject impurities—particles heavier than the mostly hydrogen plasma—into the exhaust region. These impurities help remove excess heat in the plasma before it hits the wall, helping the plasma-facing materials last longer. These same impurities, however, can travel back into regions where fusion reactions are occurring, reducing overall performance of the reactor.

Past impurity injection experiments have relied on gaseous impurities, but a research team from the U.S. Department of Energy's Princeton Plasma Physics Laboratory experimented with injecting powders of boron, boron nitride, and lithium (Figure 1). Using powder rather than gas offers several advantages. It allows a larger range of potential impurities, which can also be made purer and less likely to react chemically with the plasma. The powder injection experiments on DIII-D aimed to cool the boundary of the plasma while maintaining the heat in its core. Measurements showed only a marginal decrease in fusion performance.

The experiments developed a balanced approach that achieved significant edge cooling with only modest effects on core performance. Incorporating powder injection or the use of the Super H-mode into future reactor designs may allow them to maintain high levels of fusion performance while increasing the lifetime of divertor surfaces that exhaust waste heat. Both sets of experimental results, coupled with theoretical simulations, suggest that these approaches would be compatible with larger devices like ITER, the international tokamak under construction in France, and would facilitate core-edge integration in future fusion power plants.


More information: GI02.00001. Mitigation of plasma-materials interactions with low-Z powders in DIII-D H-mode discharges

Provided by American Physical Society

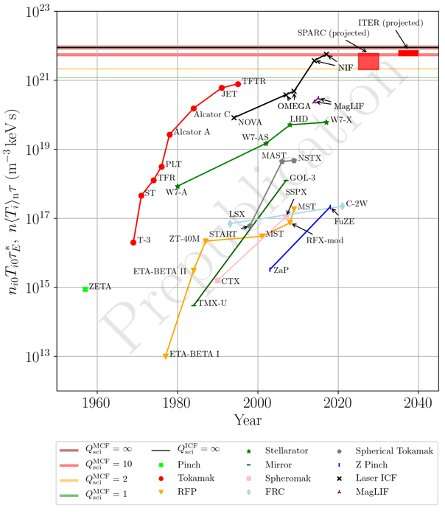

Unveiling the steady progress toward fusion energy gain

The march towards fusion energy gain, required for commercial fusion energy, is not always visible. Progress occurs in fits and starts through experiments in national laboratories, universities, and more recently at private companies. Sam Wurzel, a Technology-to-Market Advisor at the Advanced Research Projects Agency-Energy (ARPA-E), details and highlights this progress over the last 60 years by extracting and cataloging performance data from more than 70 fusion experiments. The work illustrates the history and development of different approaches, including magnetic-fusion devices such as tokamaks, stellarators and other "alternate concepts," laser-driven devices such as inertial confinement fusion (ICF), and hybrid approaches including liner-imploded and z-pinch concepts.

A minimum condition for developing fusion research into a viable energy source for society is the achievement of large energy gain—that is, much more energy released due to fusion reactions than the energy put into the system in the first place. In 1955, a British engineer named J.D. Lawson identified the requirements for achieving high levels of energy gain: high temperatures, and a high product of density and energy confinement (or burn) time. Multiplying all three parameters into a single value called the "fusion triple product" gives a metric that allows for the comparison of different fusion concepts along the axis of energy gain. By extracting data from dozens of fusion journal articles and reports over the last six decades, Wurzel shows that progress was rapid from the 1960s to the 1990s.
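The Lawson-style comparison above reduces to multiplying three numbers. The minimal sketch below computes a simplified triple product, n·T·τ_E, and expresses it as a fraction of an approximate deuterium-tritium ignition threshold of about 3×10^21 keV·s/m³, a commonly quoted order-of-magnitude figure near the optimum temperature of roughly 10 to 20 keV. The threshold constant and both parameter sets are assumptions for illustration; this is not the procedure Wurzel and Hsu used to extract values from the literature.

```python
# Approximate D-T ignition threshold for n*T*tau_E near its optimum temperature.
# Assumed round figure for illustration, in keV * s / m^3.
IGNITION_TRIPLE_PRODUCT = 3e21


def triple_product(density_m3: float, temperature_kev: float, confinement_s: float) -> float:
    """Fusion triple product n * T * tau_E in keV * s / m^3."""
    return density_m3 * temperature_kev * confinement_s


# Hypothetical parameter sets: (density in m^-3, temperature in keV, confinement time in s).
devices = {
    "hypothetical early device": (1e19, 1.0, 0.01),
    "hypothetical modern tokamak": (1e20, 10.0, 1.0),
}

for name, (n, t_kev, tau) in devices.items():
    value = triple_product(n, t_kev, tau)
    print(f"{name}: n*T*tau_E = {value:.1e} keV*s/m^3 "
          f"({value / IGNITION_TRIPLE_PRODUCT:.1%} of the assumed ignition threshold)")
```

Because all three factors enter multiplicatively, a concept can close the gap by raising density, temperature, or confinement time, which is what makes the single number useful for comparing very different approaches.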

After the 1990s, however, the fusion triple product grew less steadily, although it has jumped significantly in recent years in laser-driven ICF at the National Ignition Facility (NIF), which has achieved the largest fusion triple product values to date. Newer, lower-cost concepts pursued by private fusion companies, such as the sheared-flow-stabilized Z-pinch, the field-reversed configuration (FRC), and other novel configurations, are showing progress and promise, surpassing the performance of early tokamaks.

The key figures from Wurzel's invited tutorial talk, based on the manuscript by Wurzel and Hsu, provide a comprehensive framework, inclusive of all thermonuclear fusion concepts, for tracking and understanding the physics progress of fusion toward energy breakeven and gain (Figure 1).

More information: PT02.00001. Progress Toward Fusion Energy Breakeven and Gain as Measured Against the Lawson Criterion

Provided by American Physical Society

Researchers at the brink of fusion ignition at National Ignition Facility

After decades of inertial confinement fusion research, a record yield of more than 1.3 megajoules (MJ) from fusion reactions was achieved in the laboratory for the first time during an experiment at Lawrence Livermore National Laboratory's (LLNL) National Ignition Facility (NIF) on Aug. 8, 2021. These results mark an 8-fold improvement over experiments conducted in spring 2021 and a 25-fold increase over NIF's 2018 record yield (Figure 1).

NIF precisely guides, amplifies, reflects, and focuses 192 powerful laser beams into a target about the size of a pencil eraser in a few billionths of a second. NIF generates temperatures in the target of more than 180 million °F and pressures of more than 100 billion Earth atmospheres. Those extreme conditions cause hydrogen atoms in the target to fuse and release energy in a controlled thermonuclear reaction.
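Plasma physicists usually quote temperatures in kiloelectronvolts rather than degrees Fahrenheit. A small conversion, using the standard relation 1 eV ≈ 11,604.5 K, shows that the 180 million °F quoted above corresponds to roughly 100 million kelvin, or about 8.6 keV. The function names are illustrative only.

```python
K_PER_EV = 11604.518  # kelvin per electronvolt (1 eV divided by Boltzmann's constant)


def fahrenheit_to_kelvin(deg_f: float) -> float:
    """Convert degrees Fahrenheit to kelvin."""
    return (deg_f - 32.0) * 5.0 / 9.0 + 273.15


def kelvin_to_kev(kelvin: float) -> float:
    """Express a temperature in kelvin as a thermal energy k_B*T in keV."""
    return kelvin / K_PER_EV / 1000.0


t_kelvin = fahrenheit_to_kelvin(180e6)  # lower-bound NIF figure quoted in the text
print(f"{t_kelvin:.3e} K = {kelvin_to_kev(t_kelvin):.1f} keV")
```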

LLNL physicist Debbie Callahan will discuss this achievement during a plenary session at the 63rd Annual Meeting of the APS Division of Plasma Physics. While there has been significant media coverage of this achievement, this talk will represent the first opportunity to address these results and the path forward in a scientific conference setting.

Achieving these large yields has been a long-standing goal for inertial confinement fusion research and puts researchers at the threshold of fusion ignition, an important goal of NIF, the world's largest and most energetic laser.

The fusion research community uses many technical definitions for ignition, but the National Academy of Sciences adopted the definition of "gain greater than unity" in a 1997 review of NIF, meaning fusion yield greater than laser energy delivered. This experiment produced a fusion yield of roughly two-thirds of the laser energy that was delivered, tantalizingly close to that goal.
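Under the "gain greater than unity" definition, target gain is simply fusion yield divided by delivered laser energy. The sketch below uses only the article's rounded figures (a yield of about 1.3 MJ and a gain of roughly two-thirds) to back out the implied laser energy; the result is an estimate from rounded numbers, not a reported measurement, and the helper names are hypothetical.

```python
def target_gain(fusion_yield_mj: float, laser_energy_mj: float) -> float:
    """Gain as used in the 1997 review: fusion yield over delivered laser energy."""
    return fusion_yield_mj / laser_energy_mj


def is_ignition(fusion_yield_mj: float, laser_energy_mj: float) -> bool:
    """Ignition under the "gain greater than unity" definition."""
    return target_gain(fusion_yield_mj, laser_energy_mj) > 1.0


yield_mj = 1.3           # rounded yield from the Aug. 8, 2021 experiment
approx_gain = 2.0 / 3.0  # "roughly two-thirds", also rounded

# Laser energy implied by the two rounded figures (an estimate, not a measured value).
implied_laser_mj = yield_mj / approx_gain
shortfall_mj = implied_laser_mj - yield_mj  # extra yield needed to reach gain = 1

print(f"implied laser energy ~ {implied_laser_mj:.2f} MJ")
print(f"additional yield needed for gain > 1 ~ {shortfall_mj:.2f} MJ")
print("ignition (gain > 1):", is_ignition(yield_mj, implied_laser_mj))
```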

The experiment built on advances the NIF team developed over the last several years, including new diagnostics; target fabrication improvements in the capsule shell, fill tube and hohlraum (a gold cylinder that holds the target capsule); improved laser precision; and design changes to increase the energy coupled to the implosion and the compression of the implosion.

These advances open access to a new experimental regime, with new avenues for research and the opportunity to benchmark modeling used to understand the proximity to ignition.

More information: Abstract: AR01.00001. Achieving a Burning Plasma on the National Ignition Facility (NIF) Laser

Provided by American Physical Society

New Insights Into Heat Pathways Advance Understanding of Fusion Plasma

Physicist Suying Jin with computer-generated images showing the properties of heat pulse propagation in plasma. Credit: Headshot courtesy of Suying Jin / Collage courtesy of Kiran Sudarsanan

A high-tech fusion facility is like a thermos: both keep their contents as hot as possible. Fusion facilities confine electrically charged gas known as plasma at temperatures 10 times hotter than the core of the sun, and keeping it hot is crucial to stoking the fusion reactions that scientists seek to harness to create a clean, plentiful source of energy for producing electricity.

Now, researchers at the U.S. Department of Energy’s (DOE) Princeton Plasma Physics Laboratory (PPPL) have made simple changes to equations that model the movement of heat in plasma. The changes yield insights that could help engineers avoid conditions that lead to heat loss in future fusion facilities.

Fusion, the power that drives the sun and stars, combines light elements in the form of plasma (the hot, charged state of matter composed of free electrons and atomic nuclei) and generates massive amounts of energy. Scientists are seeking to replicate fusion on Earth for a virtually inexhaustible supply of power to generate electricity.

“The whole magnetic confinement fusion approach basically boils down to holding a plasma together with magnetic fields and then getting it as hot as possible by keeping heat confined,” said Suying Jin, a graduate student in the Princeton Program for Plasma Physics and lead author of a paper reporting the results in Physical Review E. “To accomplish this goal, we have to fundamentally understand how heat moves through the system.”

Scientists had been using an analysis technique that assumed that the heat flowing among electrons was substantially unaffected by the heat flowing among the much heavier ions, Jin said. But she and colleagues found that the two pathways for heat actually interact in ways that can profoundly affect how measurements are interpreted. By allowing for that interaction, scientists can measure the temperatures of electrons and ions more accurately. They also can infer information about one pathway from information about the other.
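The idea that two heat channels interact can be illustrated with a toy model: electron and ion temperature perturbations that each diffuse at their own rate and exchange heat through a linear coupling term, ∂T_e/∂t = χ_e ∂²T_e/∂x² − ν(T_e − T_i) and ∂T_i/∂t = χ_i ∂²T_i/∂x² + ν(T_e − T_i). The sketch below integrates these equations with an explicit finite-difference scheme, assuming equal heat capacities for both species, a one-dimensional slab, and zero perturbation at the edges. It is not the model of Jin, Reiman and Fisch; the diffusivities and the coupling rate ν are hypothetical values chosen only to show that adding coupling changes the electron pulse an experiment would observe.

```python
def simulate(nu, chi_e=1.0, chi_i=0.1, n=101, steps=500):
    """Evolve electron/ion temperature perturbations on a slab of unit width.

    nu is a hypothetical electron-ion exchange rate; chi_e and chi_i are
    hypothetical diffusivities. Returns (T_e profile, T_i profile, grid spacing).
    """
    dx = 1.0 / (n - 1)
    dt = 0.4 * dx * dx / max(chi_e, chi_i)  # keeps chi*dt/dx^2 <= 0.4 for stability
    te = [0.0] * n
    ti = [0.0] * n
    te[n // 2] = 1.0 / dx  # unit heat pulse deposited in the electrons at the centre
    for _ in range(steps):
        new_te = te[:]
        new_ti = ti[:]
        for j in range(1, n - 1):  # end points stay at zero perturbation
            lap_e = (te[j - 1] - 2.0 * te[j] + te[j + 1]) / (dx * dx)
            lap_i = (ti[j - 1] - 2.0 * ti[j] + ti[j + 1]) / (dx * dx)
            exchange = nu * (te[j] - ti[j])  # heat flowing from electrons to ions
            new_te[j] = te[j] + dt * (chi_e * lap_e - exchange)
            new_ti[j] = ti[j] + dt * (chi_i * lap_i + exchange)
        te, ti = new_te, new_ti
    return te, ti, dx


for nu in (0.0, 5.0, 50.0):
    te, ti, dx = simulate(nu)
    print(f"nu = {nu:4.1f}: electron heat = {sum(te) * dx:.3f}, "
          f"ion heat = {sum(ti) * dx:.3f}, peak T_e = {max(te):.2f}")
```

With ν = 0 the ion channel never heats and the electron pulse evolves on its own; any nonzero ν moves heat to the ions and lowers the electron peak, so analyzing the electron pulse while ignoring ν would attribute that loss to electron transport alone.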

“What’s exciting about this is that it doesn’t require different equipment,” Jin said. “You can do the same experiments and then use this new model to extract much more information from the same data.”

Jin became interested in heat flow during earlier research into magnetic islands, plasma blobs formed from swirling magnetic fields. Modeling these blobs depends on accurate measurements of heat flow. “Then we noticed gaps in how other people had measured heat flow in the past,” Jin said. “They had calculated the movement of heat assuming that it moved only through one channel. They didn’t account for interactions between these two channels that affect how the heat moves through the plasma system. That omission led both to incorrect interpretations of the data for one species and missed opportunities to get further insights into the heat flow through both species.”

Jin’s new model provides fresh insights that weren’t available before. “It’s generally easier to measure electron heat transport than it is to measure ion heat transport,” said PPPL physicist Allan Reiman, a paper co-author. “These findings can give us an important piece of the puzzle in an easier way than expected.”

“It is remarkable that even minimal coupling between electrons and ions can profoundly change how heat propagates in plasma,” said Nat Fisch, Professor of Astrophysical Sciences at Princeton University and a co-author of the paper. “This sensitivity can now be exploited to inform our measurements.”

The new model will be used in future research. “We are looking at proposing another experiment in the near future, and this model will give us some extra knobs to turn to understand the results,” Reiman said. “With Jin’s model, our inferences will be more accurate. We now know how to extract the additional information we need.”

Reference: “Coupled heat pulse propagation in two-fluid plasmas” by S. Jin, A. H. Reiman and N. J. Fisch, 4 May 2021, Physical Review E.

DOI: 10.1103/PhysRevE.103.053201
