by: Maya Posch

January 23, 2020

It’s hardly a secret that nuclear fusion has had a rough time when it comes to its image in the media: the miracle power source that is always ‘just ten years away’. Even if no self-respecting physicist would ever make such a statement, the arrival of commercial nuclear fusion power cannot come quickly enough for many. With the promise of virtually endless, clean energy with no waste, it does truly sound like something from a science-fiction story.

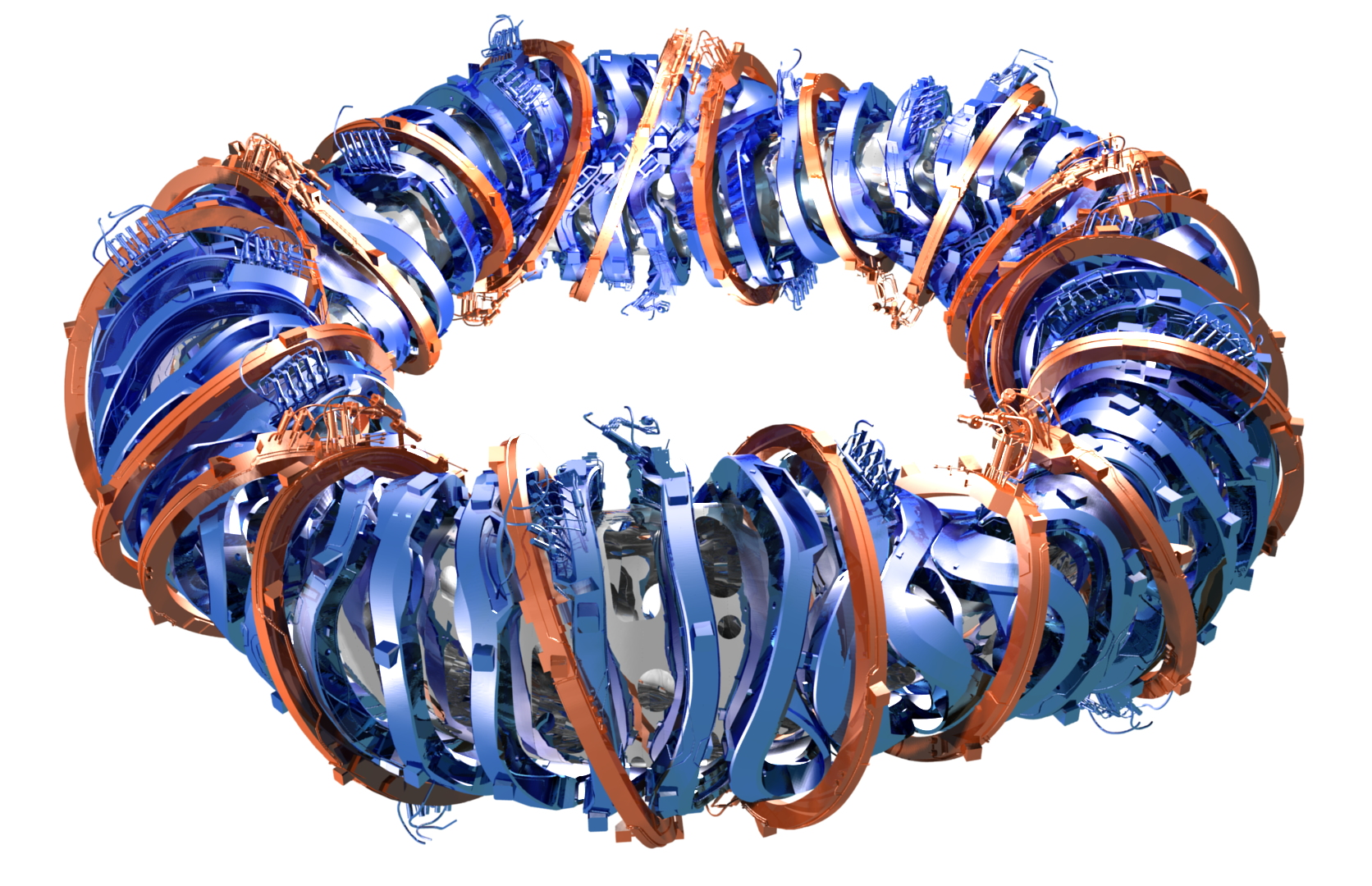

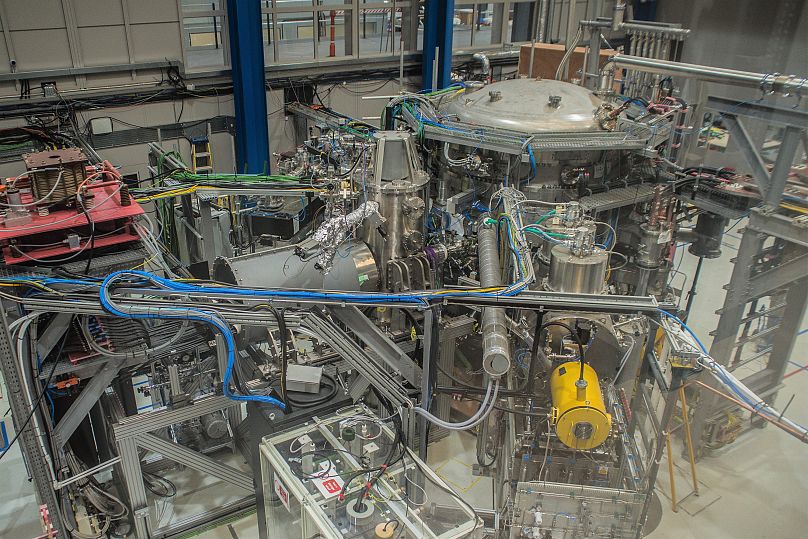

Meanwhile, in the world of non-fiction, generations of scientists have dedicated their careers to understanding better how plasma in a reactor behaves, how to contain it and what types of fuels would work best for a fusion reactor, especially one that has to run continuously, with a net positive energy output. In this regard, 2020 is an exciting year, with the German Wendelstein 7-X stellarator reaching its final configuration, and the Chinese HL-2M tokamak about to fire up.

Join me after the break as I look into what a century of progress in fusion research has brought us and where it will take us next.

PREVIOUSLY IN PURSUIT OF NUCLEAR FUSION

The discovery that the total mass of four hydrogen atoms is greater than that of a single helium (4He) atom was made in 1920 by British physicist Francis William Aston. This observation led to the conclusion that net energy can be released when one fuses hydrogen nuclei together, for example in the common deuterium-tritium (D-T) reaction:

2H + 3H → 4He (3.5 MeV) + n (14.1 MeV)

Deuterium (2H, or D) is a stable isotope of hydrogen that occurs naturally, making up roughly 0.02% of the hydrogen in Earth’s oceans. Tritium (3H, or T) is an unstable, radioactive isotope of hydrogen (a beta emitter) with a half-life of 12.32 years. Tritium is formed naturally in small amounts by the interaction of cosmic rays with the atmosphere, but it can also be bred from lithium in a breeder blanket in a fusion reactor, or recovered from fission reactors that use heavy water (deuterium oxide), like Canada’s CANDU reactors.
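As a rough check on the numbers above, the energy released by a single D-T reaction can be estimated from the mass difference between reactants and products, and the tritium half-life can be turned into a decay fraction. The sketch below is illustrative only: the atomic masses and the MeV-per-u conversion factor are rounded reference values that do not appear in the article, and the function names are made up for this example.

```python
# Atomic masses in unified atomic mass units (u).
# Assumption: rounded reference values, not taken from the article.
MASS_U = {
    "D": 2.014101778,    # deuterium
    "T": 3.016049281,    # tritium
    "He4": 4.002603254,  # helium-4
    "n": 1.008664916,    # free neutron
}
MEV_PER_U = 931.494  # energy equivalent of 1 u in MeV (rounded)

TRITIUM_HALF_LIFE_YEARS = 12.32  # half-life quoted in the article


def reaction_energy_mev(reactants, products):
    """Energy released (MeV), from the mass lost between reactants and products."""
    mass_in = sum(MASS_U[name] for name in reactants)
    mass_out = sum(MASS_U[name] for name in products)
    return (mass_in - mass_out) * MEV_PER_U


def tritium_fraction_remaining(years):
    """Fraction of an initial tritium inventory left after the given number of years."""
    return 0.5 ** (years / TRITIUM_HALF_LIFE_YEARS)


dt_energy = reaction_energy_mev(["D", "T"], ["He4", "n"])
print(f"D-T fusion releases about {dt_energy:.1f} MeV per reaction")
print(f"Tritium left after 5 years: {tritium_fraction_remaining(5):.0%}")
```

The short half-life is the reason tritium has to be bred continuously rather than stockpiled: an inventory left on the shelf loses half of its tritium every 12.32 years.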

The first neutrons from nuclear fusion were detected in 1933 by members of Ernest Rutherford’s staff at the University of Cambridge. This involved the acceleration of protons towards a target with energies of up to 600 keV. Research on the topic during the 1930s would lay the foundation for the development of the first concepts for fusion reactors, initially involving the Z-pinch concept, which uses the Lorentz force to contain the plasma.

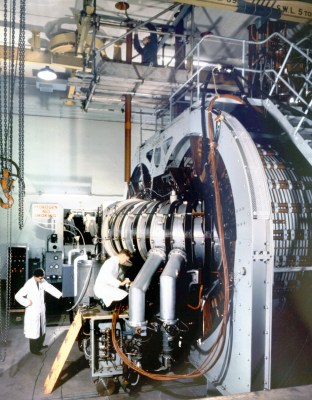

Teams around the world worked in absolute secrecy, with all fusion-related research being classified. The British created the ZETA stabilized pinch fusion reactor, with the hope that this would prove to be a viable blueprint for commercial fusion reactors. Unfortunately, ZETA proved that the Z-pinch design would always suffer from instabilities, and by 1961 the Z-pinch concept was abandoned.

Meanwhile, the Russians had developed the tokamak reactor concept during the 1950s, partially based on the Z-pinch design. The tokamak design proved to be able to suppress the instabilities that had plagued Z-pinch reactors, as well as early stellarator designs. These days, most fusion reactors in operation are of the tokamak design, though the stellarator has seen a resurgence recently, especially in the form of the Wendelstein 7-X project.

As mentioned in the article introduction, Wendelstein 7-X has reached an important milestone. Since we first wrote about this project back in 2015, the project has worked through all of its targets except the final one: cooled divertor operation. The reactor is currently being upgraded with these divertors, which should theoretically allow for steady-state operation by removing impurities from the plasma while the reactor is running.

Installing the new divertors and cryopumps will continue through much of 2020, involving the running of a 55-meter-long transfer line to the cryoplant, along with the installation of new storage tanks for helium gas and liquid nitrogen. With some luck we’ll see the first tests of the new system this year, but most likely the first continuous operations of W7-X will take place in 2021.

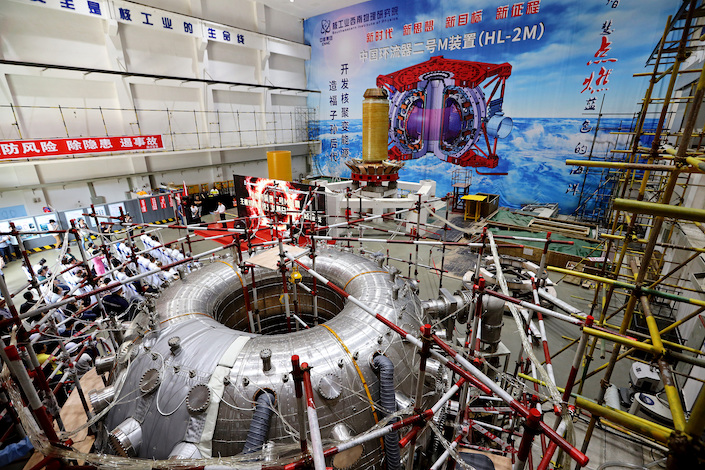

Over in China, the final touches have been put on its HL-2M tokamak, which is the latest in a range of tokamak designs since the 1960s. The HL-2M is the new configuration of the HL-2A tokamak, one of three tokamaks currently in use in China (EAST and J-TEXT being the other two). HL-2M has seen big changes to its coil configuration that allow for the creation of many types of plasma, along with the testing of various types of divertor configurations. This year HL-2M will see its first plasma.

Depending on the performance of HL-2M, it will allow for the CFETR (China Fusion Engineering Test Reactor) project to start its construction phase in the 2020s. In its first phase, the CFETR tokamak would demonstrate steady-state operation and tritium breeding. In its second phase CFETR would be updated to allow for a power output of 1 GW (compared to ITER’s 500 MW) and a fusion gain (Q) higher than 12.
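To put the fusion gain figure in perspective, here is a minimal sketch assuming the usual definition Q = fusion power / external heating power delivered to the plasma. The 1 GW, 500 MW and Q = 12 figures come from the text above. The Q = 10 value used for ITER is its commonly quoted design target and is an assumption here, as is the function name.

```python
def heating_power_mw(fusion_power_mw, q):
    """External heating power (MW) implied by Q = P_fusion / P_heating."""
    if q <= 0:
        raise ValueError("Q must be positive")
    return fusion_power_mw / q


# CFETR phase two: 1 GW at Q > 12 (from the text); Q = 12 is the boundary case,
# so this is an upper bound on the heating power.
cfetr_heating = heating_power_mw(1000.0, 12.0)

# ITER: 500 MW is from the text; Q = 10 is an assumed design target.
iter_heating = heating_power_mw(500.0, 10.0)

print(f"CFETR needs at most about {cfetr_heating:.0f} MW of plasma heating")
print(f"ITER needs about {iter_heating:.0f} MW of plasma heating")
```

Note that Q only compares fusion power to the heating injected into the plasma. It says nothing about the electricity needed to run magnets, cryogenics and the rest of the plant, so a commercial reactor needs a Q well above 1 to deliver net power to the grid.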

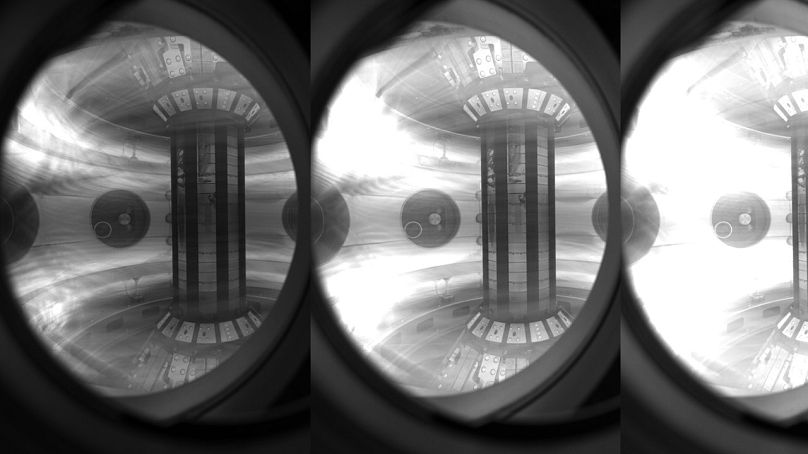

FUSION IS HARD

The main reason why nuclear fusion is taking so long compared to nuclear fission is that fusion requires very specific conditions to even occur, let alone persist. This means high temperatures, high pressures, high currents, or a combination thereof. Even then plasma containment is notoriously complicated, as the plasma isn’t a nice, calm gas that’s just kinda floating there. Rather it’s a seething mass of charged particles at high pressure and many millions of degrees Celsius that’ll breach the magnetic confinement any chance it gets. Although significant progress has been made here, the coming years will tell whether we’ll avoid a Z-pinch-like dead end with the tokamak and stellarator designs.

Many aspects of running a nuclear fusion reactor remain something of a mystery, much as was the case with the first fission reactors, where early generation II designs saw countless changes to the materials used, along with fundamental changes to the overall design to improve performance and safety. Although fusion reactors are less challenging in this regard, they do have to deal with the neutron bombardment of materials in the core, which can weaken them. This also leads to the only waste that is produced by fusion reactors: the reactor core itself.

A combination of activation through this exposure to neutrons and contamination with tritium will render core materials radioactive. This means that at the end of the reactor’s lifespan, steel and other building materials have to be handled as low- to intermediate-level radioactive waste. Fortunately, studies have shown that intermediate storage of up to 100 years is sufficient to render these materials safe.

Regardless, neutron exposure is one aspect which can likely be dealt with more directly in future reactor designs, through material selection or neutron capture mechanisms. As China’s program evolves from HL-2M into CFETR, and ITER into DEMO, the hope is that we can catch any issues and make improvements before fusion hits prime time.

LOW ENERGY NUCLEAR REACTIONS

Tangentially related to fusion, LENR is what used to be called ‘Cold Fusion’. Despite decades of ridicule, a number of scientists have persisted in examining the phenomenon that lit up the world with promises of fusion power at room temperature. Dismissing the original theory of hydrogen atoms fusing, the current theory is that protons and electrons can be merged to form neutrons. For a detailed overview, see for example this video presentation by Prof. Peter Hagelstein, an MIT associate professor.

According to the Widom-Larsen theory, the reason why the original 1989 experiment was so hard to recreate is that it relies on hydrogen atoms settling on active sites on the palladium (or equivalent) layer. This means that one needs to create a suitable surface at the nanoscale, something which was not realistic in the 1980s.

Even if LENR never turns into anything more than a curiosity, it does give us a glimpse into another way that atoms seem to behave. In the best case it might help us improve fusion reactors in ways that we cannot fathom today, by lowering pressure and temperature requirements.

ENERGY DOMINANCE IS THE GAME

A commercial fusion reactor would essentially be the pinnacle of energy generation, barring the development of antimatter reactors or the like. As an energy source, its fuel is practically unlimited: the deuterium-tritium process has enough fuel on Earth for millions of years, and the deuterium-deuterium process offers billions of years of energy production using just the deuterium available on Earth. The first nation to master this capability stands to gain a lot.

Fusion reactors are inherently safe, because the conditions required for the fusion process are so hard to maintain that the reaction simply stops when they are lost. During operation, no waste is produced. Instead, very useful helium (4He) is created, which has countless applications in everything from industry to running MRI scanners to filling up party balloons. Nuclear fusion will very likely co-exist with nuclear fission (possibly using FNRs) in a complementary fashion, with fusion being ideally suited to handle electricity and heat generation for all population centers on Earth.

Exactly when we’ll see the first commercially viable fusion reactor appear is hard to tell at this point. With ITER not projected to begin full deuterium-tritium operation until 2035, we might see China with its CFETR and successor reactors reach the point of commercial viability by the 2030s or 2040s. Yet as the UK learned with ZETA, in plasma physics nothing is certain.

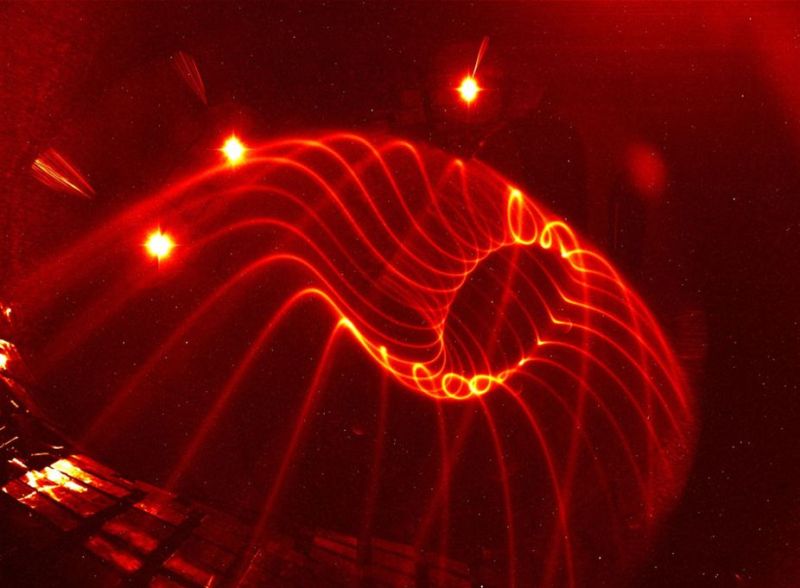

[Main image: Experimental visualization of the field line on a magnetic surface in Wendelstein 7-X. CC-BY 4.0, Wendelstein 7-X team]