Toward fusion energy, team models plasma turbulence on the nation's fastest supercomputer

A team modeled plasma turbulence on the nation's fastest supercomputer to better understand plasma behavior

The same process that fuels stars could one day be used to generate massive amounts of power here on Earth. Nuclear fusion—in which atomic nuclei fuse to form heavier nuclei and release energy in the process—promises to be a long-term, sustainable, and safe form of energy. But scientists are still trying to fine-tune the process of creating net fusion power.

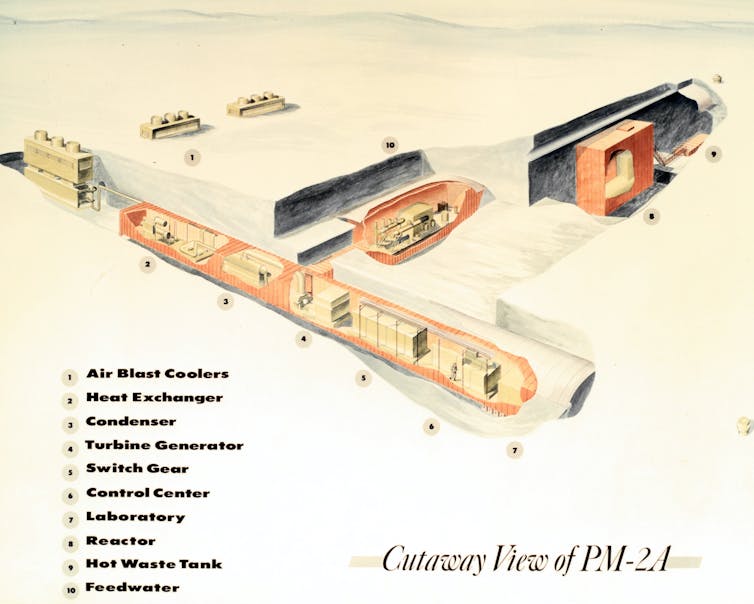

A team led by computational physicist Emily Belli of General Atomics has used the 200-petaflop Summit supercomputer at the Oak Ridge Leadership Computing Facility (OLCF), a US Department of Energy (DOE) Office of Science user facility at Oak Ridge National Laboratory (ORNL), to simulate energy loss in fusion plasmas. The team used Summit to model plasma turbulence, the unsteady movement of plasma, in a nuclear fusion device called a tokamak. The team's simulations will help inform the design of next-generation tokamaks with optimum confinement properties, such as ITER, the world's largest tokamak, which is being built in the south of France.

"Turbulence is the main mechanism by which particle losses happen in the plasma," Belli said. "If you want to generate a plasma with really good confinement properties and with good fusion power, you have to minimize the turbulence. Turbulence is what moves the particles and energy out of the hot core where the fusion happens."

The simulation results, which were published in Physics of Plasmas earlier this year, provided estimates for the particle and heat losses to be expected in future tokamaks and reactors. The results will help scientists and engineers understand how to achieve the best operating scenarios in real-life tokamaks.

A balancing act

In the fusion that occurs in stars like our sun, hydrogen ions (i.e., positively charged protons) fuse to form helium ions. However, in experiments on Earth, scientists must use heavier hydrogen isotopes to create fusion. Each hydrogen isotope has one positively charged proton, but different isotopes carry different numbers of neutrons. Neutrons carry no charge, but they do add mass to the atom.

Traditionally, physicists have used pure deuterium—a hydrogen isotope with one neutron—to generate fusion. Deuterium is readily available and easier to handle than tritium, a hydrogen isotope with two neutrons. However, physicists have known for decades that using a mixture of 50 percent deuterium and 50 percent tritium yields the highest fusion output at the lowest temperature.

"Even though they've known this mixture gives the greatest amount of fusion output, almost all experiments for the last few decades have only used pure deuterium," Belli said. "Experiments using this mixture have only been done a few times over the past few decades. The last time it was done was more than 20 years ago."

To ensure the plasma is confined in a reactor and that energy is not lost, both the deuterium and tritium in the mixture must have equal particle fluxes, an indicator of density. Scientists aim to maintain a 50-50 density throughout the tokamak core.
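Why a 50-50 mix is the target can be seen from a simple scaling argument. The sketch below is illustrative only and is not taken from the team's CGYRO simulations. It assumes a fixed total fuel density and temperature and counts only deuterium-tritium reactions, whose rate per unit volume is proportional to the product of the two densities. The function name `relative_dt_rate` is hypothetical.

```python
# Illustrative scaling only: relative D-T fusion rate versus fuel mix,
# assuming fixed total fuel density and temperature (not a CGYRO result).


def relative_dt_rate(deuterium_fraction: float) -> float:
    """Return the D-T reaction rate relative to that of a 50-50 mix.

    The D-T rate per unit volume scales as n_D * n_T. With total fuel
    density n, n_D = f * n and n_T = (1 - f) * n, so the rate scales as
    f * (1 - f), which peaks at 0.25 when f = 0.5.
    """
    if not 0.0 <= deuterium_fraction <= 1.0:
        raise ValueError("deuterium_fraction must be between 0 and 1")
    f = deuterium_fraction
    return f * (1.0 - f) / 0.25


if __name__ == "__main__":
    for percent in range(0, 101, 10):
        f = percent / 100
        print(f"D fraction {f:.1f}: relative D-T rate {relative_dt_rate(f):.2f}")
```

Under these assumptions the rate falls off symmetrically on either side of an even mix, which is why a drift in the deuterium-to-tritium ratio in the core costs fusion power.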

"You want the deuterium and the tritium to stay in the hot core to maximize the fusion power," Belli said.

Supercomputing powers fusion simulations

To study the phenomenon, the team competed for and won computing allocations on Summit through two allocation programs at the OLCF. These were the Advanced Scientific Computing Research Leadership Computing Challenge, or ALCC, and the Innovative and Novel Computational Impact on Theory and Experiment, or INCITE, programs.

The researchers modeled plasma turbulence on Summit using the CGYRO code codeveloped by Jeff Candy, director of theory and computational sciences at General Atomics and co-principal investigator on the project. CGYRO was developed in 2015 from the GYRO legacy computational plasma physics code. The developers designed CGYRO to be compatible with the OLCF's Summit system, which debuted in 2018.

"We realized in 2015 that we wanted to upgrade our models to handle these self-sustaining plasma regimes better and to handle the multiple scales that arise when you have different types of ions and electrons, like in these deuterium-tritium plasmas," Belli said. "It became clear that if we wanted to update our models and have them be highly optimized for next-generation architectures, then we should start from the ground up and completely rewrite the code. So that's what we did."

With Summit, the team could include both isotopes—deuterium and tritium—in their simulations.

"Up until now, almost all simulations have only included one of these isotopes—either deuterium or tritium," Belli said. "The power of Summit enabled us to include both as two separate species, model the full dimensions of the problem, and resolve it at different time and spatial scales."

Results for the real world

Experiments using deuterium-tritium fuel mixtures are now being carried out for the first time since 1997 at the Joint European Torus (JET), a fusion research facility at the Culham Centre for Fusion Energy in Oxfordshire, UK. The experiments at the JET facility will help scientists and engineers develop fuel control practices for maintaining a 50-50 ratio of deuterium to tritium. Belli said it will likely be the last time deuterium-tritium experiments are run until ITER, the world's largest tokamak, is built.

"The experimental team is getting results as we speak, and in the next few months, the data will be analyzed," Belli said.

The results will give scientists a better idea of the behavior of deuterium-tritium fuel for a practical fusion reactor.

"This fuel gives you the highest fusion output at the lowest temperature, so you don't have to heat it quite as hot to get an enormous amount of fusion power out of it," Belli said.

"Because it's been so long since these kinds of experiments have been done, our simulations are important to predict the behavior of this fuel mixture to plan for ITER. Summit is giving us the power to do just that."

More information: E. A. Belli et al., Asymmetry between deuterium and tritium turbulent particle flows, Physics of Plasmas (2021). DOI: 10.1063/5.0048620

Journal information: Physics of Plasmas

Provided by Oak Ridge National Laboratory

Unraveling a puzzle to speed the development of fusion energy

Researchers at the U.S. Department of Energy's (DOE) Princeton Plasma Physics Laboratory (PPPL) have developed an effective computational method to simulate the crazy-quilt movement of free electrons during experimental efforts to harness on Earth the fusion power that drives the sun and stars. The method cracks a complex equation, which can enable improved control of the random and fast-moving electrons in the fuel for fusion energy.

Fusion produces enormous energy by combining light elements in the form of plasma, the hot, charged gas composed of free electrons and atomic nuclei, or ions, that makes up 99 percent of the visible universe. Scientists around the world are seeking to reproduce the fusion process to provide a safe, clean and abundant source of power to generate electricity.

Solving the equation

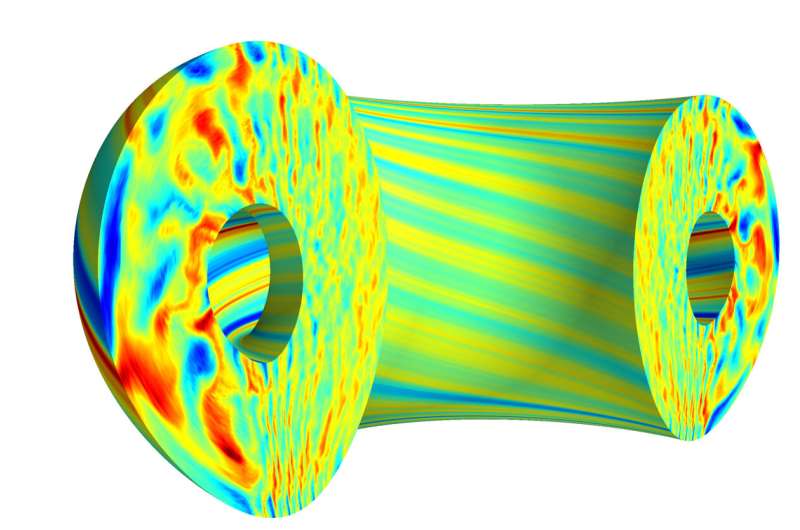

A key hurdle for researchers developing fusion on doughnut-shaped devices called tokamaks, which confine the plasma in magnetic fields, has been solving the equation that describes the motion of free-wheeling electrons as they collide and bounce around. Standard methods for simulating this motion, technically called pitch-angle scattering, have proven unsuccessful due to the complexity of the equation.

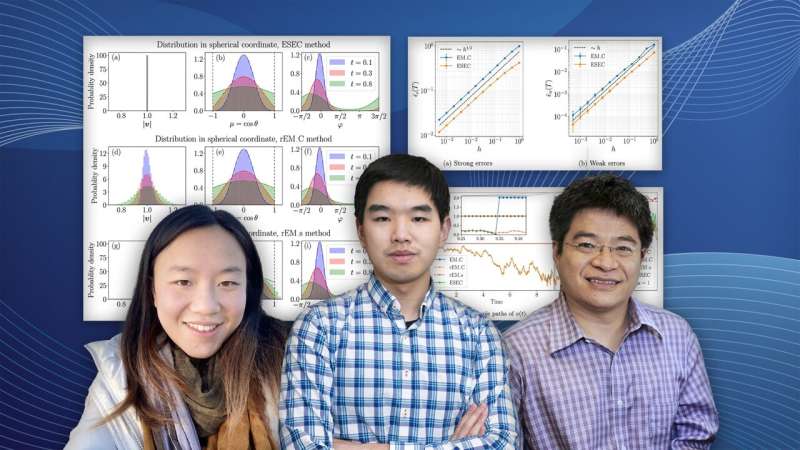

A successful set of computational rules, or algorithm, would solve the equation while conserving the energy of the speeding particles. "Solving the stochastic differential equation gives the probability of every path the scattered electrons can take," said Yichen Fu, a graduate student in the Princeton Program in Plasma Physics at PPPL and lead author of a paper in the Journal of Computational Physics that proposes a solution. Such equations yield a pattern that can be analyzed statistically but not determined precisely.

The accurate solution describes the trajectories of the electrons being scattered. "However, the trajectories are probabilistic and we don't know exactly where the electrons would go because there are many possible paths," Fu said. "But by solving the trajectories we can know the probability of electrons choosing every path, and knowing that enables more accurate simulations that can lead to better control of the plasma."

A major benefit of this knowledge is improved guidance for fusion researchers who pump electric current into tokamak plasmas to create the magnetic field that confines the superhot gas. Another benefit is a better understanding of pitch-angle scattering of the energetic runaway electrons that pose a danger to fusion devices.
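The idea of a probabilistic, energy-conserving update for pitch-angle scattering can be illustrated with a generic Monte Carlo sketch. This is not the algorithm from the paper by Fu and colleagues. It is a common textbook-style approach in which each time step rotates the velocity vector by a small random angle, so the particle's speed, and therefore its kinetic energy, stays the same while its direction wanders. The sketch assumes a constant scattering rate `nu`, ignores the magnetic field and all other forces, and is only a first-order approximation in the time step. The names `scatter_step`, `nu` and `dt` are hypothetical.

```python
import math
import random


def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def norm(a):
    """Euclidean length of a 3-vector."""
    return math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)


def scatter_step(v, nu, dt, rng):
    """Rotate velocity v by a small random angle; the speed |v| is preserved.

    Assumption: two independent Gaussian kicks of variance nu * dt, one along
    each direction perpendicular to v, set the rotation angle and its plane.
    """
    speed = norm(v)
    if speed == 0.0:
        return v
    # Pick a helper axis that is not parallel to v, then build a unit
    # basis (e1, e2) spanning the plane perpendicular to v.
    helper = (1.0, 0.0, 0.0) if abs(v[0]) < 0.9 * speed else (0.0, 1.0, 0.0)
    e1 = cross(v, helper)
    n1 = norm(e1)
    e1 = (e1[0] / n1, e1[1] / n1, e1[2] / n1)
    e2 = cross(v, e1)
    e2 = (e2[0] / speed, e2[1] / speed, e2[2] / speed)
    # Random kick in the perpendicular plane.
    sigma = math.sqrt(nu * dt)
    a = rng.gauss(0.0, sigma)
    b = rng.gauss(0.0, sigma)
    theta = math.hypot(a, b)
    if theta == 0.0:
        return v
    # Unit vector k, perpendicular to v, giving the direction of the kick.
    k = tuple((a * e1[i] + b * e2[i]) / theta for i in range(3))
    # Rotate v toward k by angle theta. Because k is perpendicular to v,
    # the new vector has the same length as v.
    c, s = math.cos(theta), math.sin(theta)
    return tuple(c * v[i] + s * speed * k[i] for i in range(3))


if __name__ == "__main__":
    rng = random.Random(0)
    v = (0.0, 0.0, 1.0)  # start aligned with z, taken here as the field direction
    for _ in range(1000):
        v = scatter_step(v, nu=1.0, dt=1e-3, rng=rng)
    print("speed after scattering:", norm(v))
    print("pitch (cosine of angle to z):", v[2] / norm(v))
```

Running many such particles with different random seeds gives a distribution of pitch angles rather than a single trajectory, which is the sense in which the solution is probabilistic. Using a rotation rather than adding a random velocity increment is the design choice that keeps the energy from drifting.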

Rigorous proof

The finding provides a rigorous mathematical proof of the first working algorithm for solving the complex equation. "This gives experimentalists a better theoretical description of what's going on to help them design their experiments," said Hong Qin, a principal research physicist, advisor to Fu and a coauthor of the paper. "Previously, there was no working algorithm for this equation, and physicists got around this difficulty by changing the equation."

The reported study represents the research activity in algorithms and applied math of the recently launched Computational Sciences Department (CSD) at PPPL and expands on an earlier paper coauthored by Fu, Qin and graduate student Laura Xin Zhang, a coauthor of this paper. While that work created a novel energy-conserving algorithm for tracking fast particles, the method did not incorporate magnetic fields and the mathematical accuracy was not rigorously proven.

The CSD, founded this year as part of the Lab's expansion into a multi-purpose research center, supports the critical fusion energy sciences mission of PPPL and serves as the home for computationally intensive discoveries. "This technical advance displays the role of the CSD," Qin said. "One of its goals is to develop algorithms that lead to improved fusion simulations."