Computers that power self-driving cars could be a huge driver of global carbon emissions

In the future, the energy needed to run the powerful computers on board a global fleet of autonomous vehicles could generate as many greenhouse gas emissions as all the data centers in the world today.

That is one key finding of a new study from MIT researchers that explored the potential energy consumption and related carbon emissions if autonomous vehicles are widely adopted.

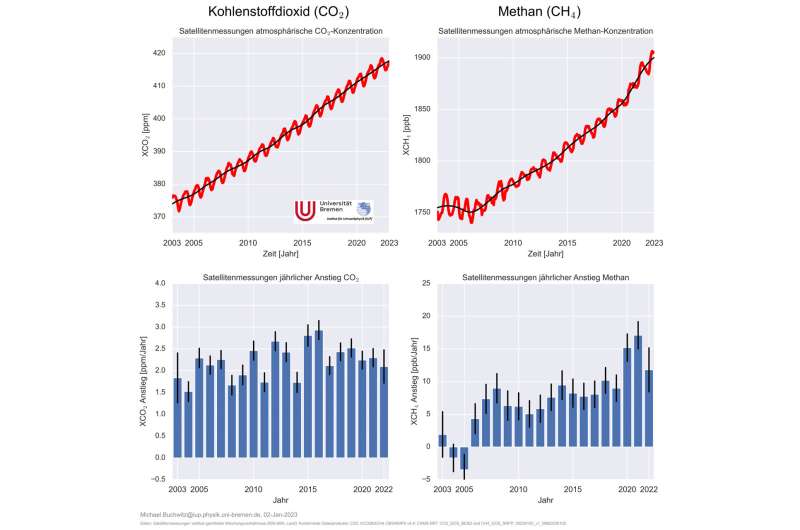

The data centers that house the physical computing infrastructure used for running applications are widely known for their large carbon footprint: They currently account for about 0.3 percent of global greenhouse gas emissions, or about as much carbon as the country of Argentina produces annually, according to the International Energy Agency. Realizing that less attention has been paid to the potential footprint of autonomous vehicles, the MIT researchers built a statistical model to study the problem. They determined that 1 billion autonomous vehicles, each driving for one hour per day with a computer consuming 840 watts, would consume enough energy to generate about the same amount of emissions as data centers currently do.

The researchers also found that in over 90 percent of modeled scenarios, to keep autonomous vehicle emissions from zooming past current data center emissions, each vehicle must use less than 1.2 kilowatts of power for computing, which would require more efficient hardware. In one scenario—where 95 percent of the global fleet of vehicles is autonomous in 2050, computational workloads double every three years, and the world continues to decarbonize at the current rate—they found that hardware efficiency would need to double faster than every 1.1 years to keep emissions under those levels.

"If we just keep the business-as-usual trends in decarbonization and the current rate of hardware efficiency improvements, it doesn't seem like it is going to be enough to constrain the emissions from computing onboard autonomous vehicles. This has the potential to become an enormous problem. But if we get ahead of it, we could design more efficient autonomous vehicles that have a smaller carbon footprint from the start," says first author Soumya Sudhakar, a graduate student in aeronautics and astronautics.

Sudhakar wrote the paper with her co-advisors Vivienne Sze, associate professor in the Department of Electrical Engineering and Computer Science (EECS) and a member of the Research Laboratory of Electronics (RLE); and Sertac Karaman, associate professor of aeronautics and astronautics and director of the Laboratory for Information and Decision Systems (LIDS). The research appears in the January-February issue of IEEE Micro.

Modeling emissions

The researchers built a framework to explore the operational emissions from computers on board a global fleet of electric vehicles that are fully autonomous, meaning they don't require a back-up human driver.

The model is a function of the number of vehicles in the global fleet, the power of each computer on each vehicle, the hours driven by each vehicle, and the carbon intensity of the electricity powering each computer.
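The four-factor relationship described above can be sketched as a simple product. This is a minimal illustration, not the researchers' probabilistic model. The function names, the units, and the 365-day year are assumptions made here. The fleet size, computer power, and driving time in the usage lines come from the 1-billion-vehicle scenario reported earlier in this article.

```python
def annual_computing_energy_kwh(num_vehicles, computer_power_kw, hours_per_day,
                                days_per_year=365):
    """Energy used by onboard computers across the fleet, in kWh per year."""
    return num_vehicles * computer_power_kw * hours_per_day * days_per_year


def annual_computing_emissions_kg(num_vehicles, computer_power_kw, hours_per_day,
                                  carbon_intensity_kg_per_kwh, days_per_year=365):
    """Operational emissions in kg CO2-equivalent per year.

    carbon_intensity_kg_per_kwh is left as an input; the article does not
    state the value the researchers used.
    """
    energy_kwh = annual_computing_energy_kwh(
        num_vehicles, computer_power_kw, hours_per_day, days_per_year
    )
    return energy_kwh * carbon_intensity_kg_per_kwh


# Scenario from the article: 1 billion vehicles, 840 W each, one hour per day.
fleet_energy_kwh = annual_computing_energy_kwh(1e9, 0.84, 1.0)
print(f"{fleet_energy_kwh / 1e9:.1f} TWh per year")
```

Under these assumptions the fleet's computers would draw roughly 307 terawatt-hours per year. Turning that into emissions requires a carbon-intensity figure, which is why it is left as a parameter.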

"On its own, that looks like a deceptively simple equation. But each of those variables contains a lot of uncertainty because we are considering an emerging application that is not here yet," Sudhakar says.

For instance, some research suggests that the amount of time spent in autonomous vehicles might increase because people can multitask while riding, and the young and the elderly could travel by car more. But other research suggests that time spent on the road might decrease because algorithms could find optimal routes that get people to their destinations faster.

In addition to considering these uncertainties, the researchers also needed to model advanced computing hardware and software that doesn't exist yet.

To accomplish that, they modeled the workload of a popular algorithm for autonomous vehicles, known as a multitask deep neural network because it can perform many tasks at once. They explored how much energy this deep neural network would consume if it were simultaneously processing many high-resolution inputs from many cameras with high frame rates.

When they used the probabilistic model to explore different scenarios, Sudhakar was surprised by how quickly the algorithms' workload added up.

For example, if an autonomous vehicle has 10 deep neural networks processing images from 10 cameras, and that vehicle drives for one hour a day, it will make 21.6 million inferences each day. One billion such vehicles would make 21.6 quadrillion inferences each day. To put that into perspective, all of Facebook's data centers worldwide make a few trillion inferences each day (1 quadrillion is 1,000 trillion).
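The arithmetic behind those figures can be reproduced in a few lines. The article does not state a frame rate, so the 60 frames per second used below is an assumption: it is the value that recovers the 21.6 million figure if every network processes every frame from every camera. The function and constant names are likewise illustrative.

```python
def daily_inferences(num_networks, num_cameras, frames_per_second, hours_per_day):
    """Inferences per vehicle per day, assuming each network runs once
    on every frame from every camera."""
    seconds_driven = hours_per_day * 3600
    return num_networks * num_cameras * frames_per_second * seconds_driven


ASSUMED_FPS = 60  # assumption; not stated in the article

per_vehicle = daily_inferences(10, 10, ASSUMED_FPS, 1)
fleet = per_vehicle * 1_000_000_000  # one billion vehicles

print(f"{per_vehicle:,} inferences per vehicle per day")
print(f"{fleet:,} inferences per day across the fleet")
```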

"After seeing the results, this makes a lot of sense, but it is not something that is on a lot of people's radar. These vehicles could actually be using a ton of computer power. They have a 360-degree view of the world, so while we have two eyes, they may have 20 eyes, looking all over the place and trying to understand all the things that are happening at the same time," Karaman says.

Autonomous vehicles would be used for moving goods, as well as people, so there could be a massive amount of computing power distributed along global supply chains, he says. And the researchers' model considers only computing; it doesn't take into account the energy consumed by vehicle sensors or the emissions generated during manufacturing.

Keeping emissions in check

To keep emissions from spiraling out of control, the researchers found that each autonomous vehicle needs to consume less than 1.2 kilowatts of power for computing. For that to be possible, computing hardware must become more efficient significantly faster than it does today: in the scenario where 95 percent of the global fleet is autonomous in 2050, efficiency would need to double faster than every 1.1 years.
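The race between growing workloads and improving hardware can be illustrated with a toy calculation. The scenario reported earlier pairs workloads that double every three years with hardware efficiency that doubles every 1.1 years. The sketch below tracks only per-vehicle computing power relative to a starting point. It ignores fleet growth and grid decarbonization, which the researchers' model does include, and the function name is hypothetical.

```python
def relative_computing_power(years, workload_doubling_years, efficiency_doubling_years):
    """Per-vehicle computing power after `years`, relative to year 0.

    Power scales up with workload and down with hardware efficiency,
    both modeled here as simple exponential doublings.
    """
    workload_growth = 2 ** (years / workload_doubling_years)
    efficiency_gain = 2 ** (years / efficiency_doubling_years)
    return workload_growth / efficiency_gain


# Workload doubling every 3 years against efficiency doubling every 1.1 years.
for years in (0, 5, 10):
    ratio = relative_computing_power(years, 3.0, 1.1)
    print(f"after {years:2d} years: {ratio:.3f}x the starting power")
```

A ratio below 1 means efficiency gains outpace workload growth. If efficiency doubled more slowly than the workload, the ratio would rise above 1 and per-vehicle power would climb.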

One way to boost that efficiency could be to use more specialized hardware, which is designed to run specific driving algorithms. Because researchers know the navigation and perception tasks required for autonomous driving, it could be easier to design specialized hardware for those tasks, Sudhakar says. But vehicles tend to have 10- or 20-year lifespans, so one challenge in developing specialized hardware would be to "future-proof" it so it can run new algorithms.

In the future, researchers could also make the algorithms more efficient, so they would need less computing power. However, this is also challenging because trading off some accuracy for more efficiency could hamper vehicle safety.

Now that they have demonstrated this framework, the researchers want to continue exploring hardware efficiency and algorithm improvements. In addition, they say their model can be enhanced by characterizing embodied carbon from autonomous vehicles—the carbon emissions generated when a car is manufactured—and emissions from a vehicle's sensors.

While there are still many scenarios to explore, the researchers hope that this work sheds light on a potential problem people may not have considered.

"We are hoping that people will think of emissions and carbon efficiency as important metrics to consider in their designs. The energy consumption of an autonomous vehicle is really critical, not just for extending the battery life, but also for sustainability," says Sze.

More information: Soumya Sudhakar et al, Data Centers on Wheels: Emissions From Computing Onboard Autonomous Vehicles, IEEE Micro (2022). DOI: 10.1109/MM.2022.3219803