Story by Colette Luke and Steve Gorman • Yesterday

U.S. Poet Laureate Ada Limón sits for interview with Reuters in Washington

© Thomson Reuters

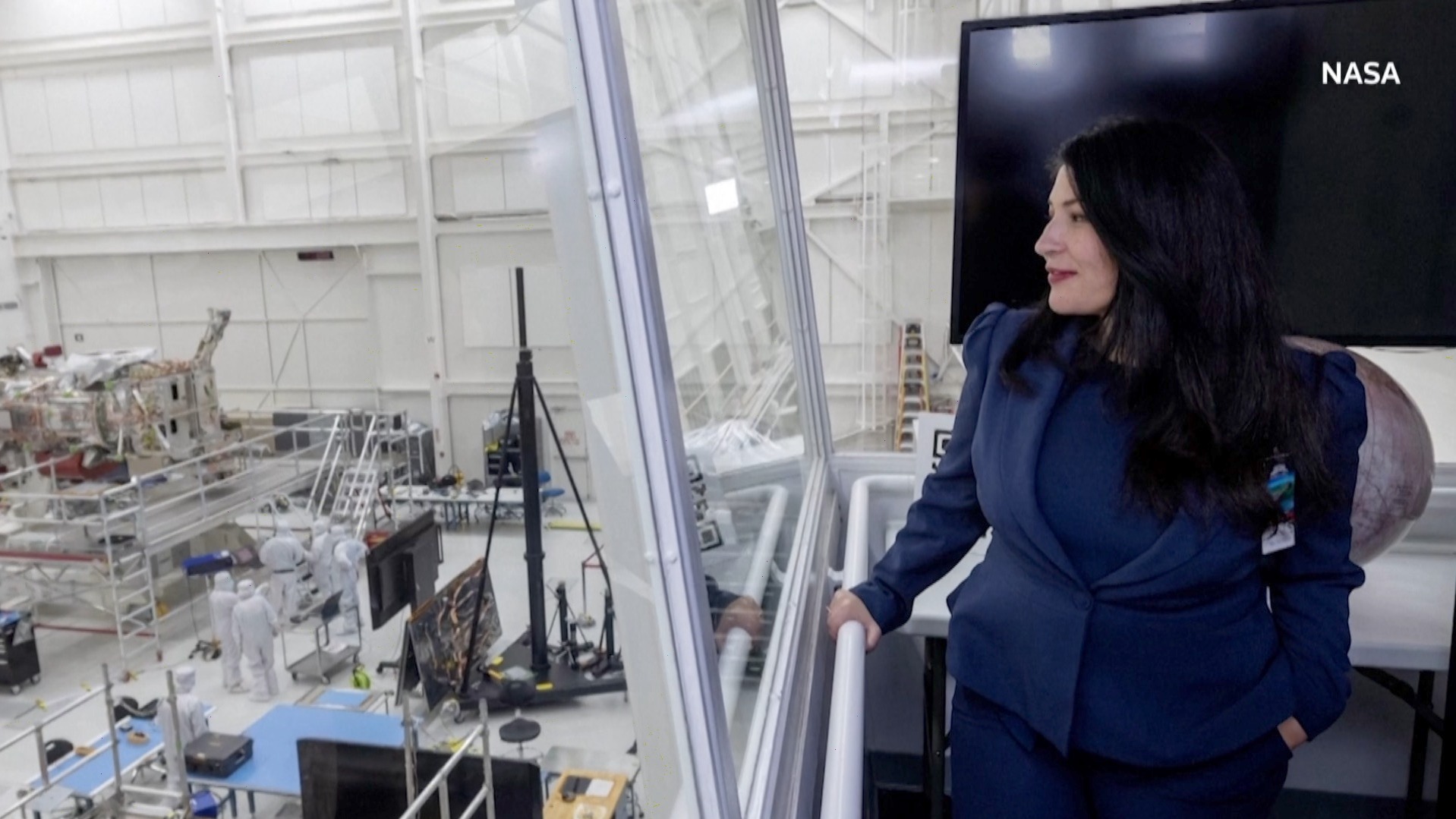

WASHINGTON (Reuters) - When U.S. Poet Laureate Ada Limon was asked to write a poem for inscription on a NASA spacecraft headed to Jupiter's icy moon Europa, she felt a rush of excitement at the honor, followed by bewilderment at the seeming enormity of the task.

"Where do you start a poem like that?" she recalled thinking just after receiving the invitation in a call at the Library of Congress, where the 47-year-old poet is serving a two-year second term as the nation's top bard.

On Thursday night, exactly one year later in a ceremony at the library, across the street from the U.S. Capitol, Limon's 21-line creation, "In Praise of Mystery: a Poem for Europa," was unveiled and read aloud to a public audience for the first time, receiving a standing ovation.

The entire poem, a free-verse ode consisting of seven three-line stanzas, or tercets, will be engraved in Limon's handwriting on the exterior of the Europa Clipper, due for launch from NASA's Kennedy Space Center in Florida in October 2024.

U.S. Poet Laureate Ada Limón interview with Reuters in Washington

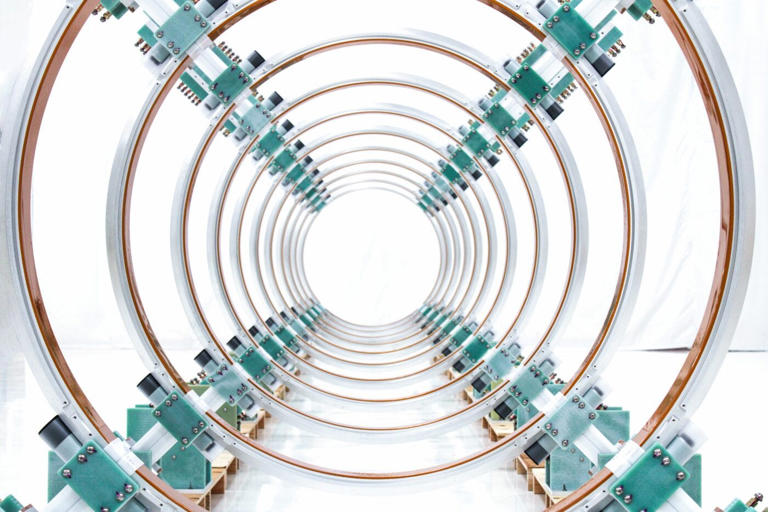

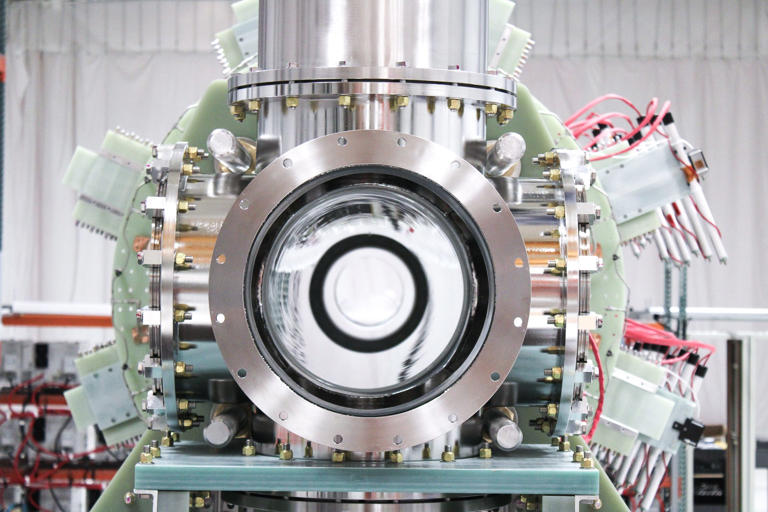

Now being assembled at NASA's Jet Propulsion Laboratory near Los Angeles, the spacecraft - larger than any other flown by NASA on an interplanetary mission - should reach Jovian orbit in 2030 after a 1.6 billion-mile (2.6 billion km) journey.

In praise of mystery. A poem for Europa.

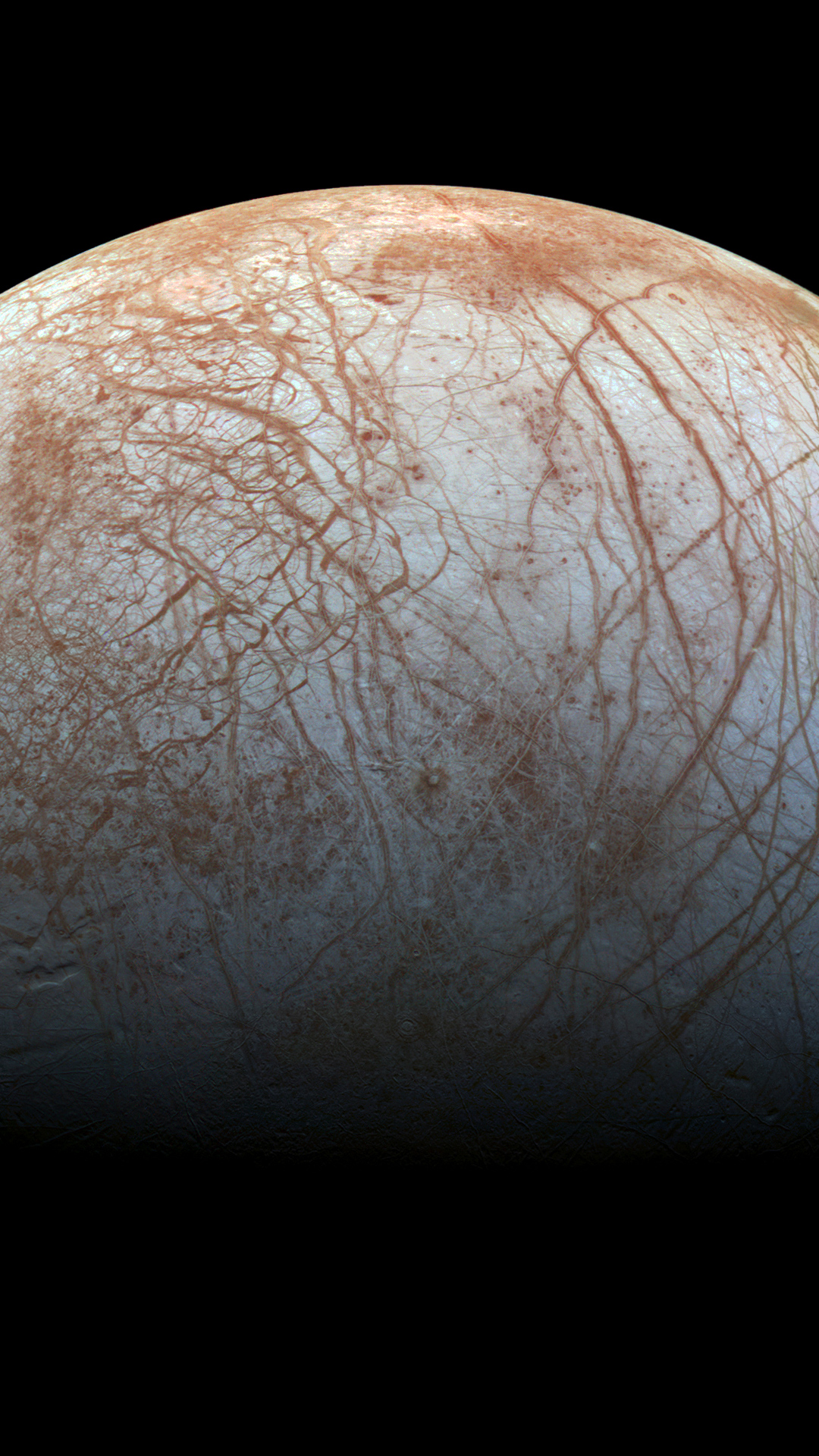

The solar-powered Clipper will have an array of instruments designed to study the vast ocean of water that scientists strongly believe lies beneath Europa's icy crust, potentially harboring conditions suitable for life.

During its mission, the spacecraft is expected to make nearly 50 fly-bys of Europa rather than continuously orbit the moon, because a sustained orbit would keep it too close for too long to Jupiter's harsh radiation belts.

UNITING TWO WATER WORLDS

Limon's "Poem for Europa" is less a meditation on science - though its first line seems to allude to a rocket launch - than it is an ode to nature and the awe it can inspire in humankind.

Except for its title, it does not mention Europa explicitly but refers to its place among Jupiter's natural satellites, and to the commonality of water that it shares with Earth: "O second moon, we too are made of water, of vast and beckoning seas."

It concludes: "We, too, are made of wonders, of great / and ordinary loves, of small invisible worlds / of a need to call out through the dark."

"I wanted to point back to the Earth, and I think the biggest part of the poem is that it unites those two things," she told Reuters in an interview in the Library of Congress poetry room hours before the piece was unveiled. "It unites both space and this incredible planet that we live on."

Limon, who won the National Book Critics Circle Award for her poetry collection "The Carrying," recounted great difficulty when she first tried composing the Europa poem at a writers retreat in Hawaii.

Her breakthrough came on a suggestion from her husband, who Limon said encouraged her to "stop writing a NASA poem" and to create "a poem that you would write" instead. "That changed everything," she remembered.

The only firm parameters NASA gave her were to relate something about the mission, to make it understandable to readers as young as 9, and to write no more than 200 words.

At the Library of Congress on Thursday night, Limon said she considers the Europa commission "the greatest honor and privilege of my life."

Reflecting earlier on what the assignment meant, Limon said she wonders at "all of the human eyes and human ears and human hearts that will receive this poem and ... it's the audience that really overwhelms me."

A writer of Mexican ancestry, Limon became the first Latina U.S. poet laureate and the 24th individual to hold the title when she was first appointed in September 2022.

(Reporting by Steve Gorman in Los Angeles and Colette Luke in Washington; Additional reporting by Kimberley Vinnell in Washington. Editing by Gerry Doyle)