
Astronomers explore the chromosphere of peculiar white dwarfs

Using the 3.6-m New Technology Telescope (NTT) at the La Silla Observatory in Chile, astronomers have observed three peculiar white dwarfs of the DAHe subtype and found dipolar chromospheres in two of them. The findings were reported in a paper published July 5 on the preprint server arXiv.

White dwarfs (WDs) are stellar cores left behind after a star has exhausted its nuclear fuel. Because of their strong surface gravity, heavier elements sink quickly out of their atmospheres, so WDs are expected to have atmospheres of almost pure hydrogen or pure helium. However, a small fraction of WDs show traces of heavier elements.

DAHe (D: degenerate, A: Balmer lines strongest, H: magnetic line splitting, e: emission) is a relatively new and small class of magnetic white dwarfs that show Zeeman-split Balmer emission lines. To date, only a few dozen DAHe WDs are known. The first of them was GD 356, an isolated white dwarf discovered nearly 40 years ago.
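The "H" in DAHe refers to Zeeman splitting, and its size can be estimated with the classical normal-Zeeman formula Δλ = eλ²B/(4π mₑ c) in SI units. The sketch below assumes the linear (normal) Zeeman effect only; at the multi-megagauss fields of magnetic white dwarfs the quadratic Zeeman effect also shifts the lines, so treat the result as a rough guide. The helper names are hypothetical, and the 5 MG field is an illustrative value, not one reported for any star in this study.

```python
import math

# CODATA constants, SI units
E_CHARGE = 1.602176634e-19     # elementary charge [C]
M_ELECTRON = 9.1093837015e-31  # electron mass [kg]
C_LIGHT = 2.99792458e8         # speed of light [m/s]


def zeeman_shift_angstrom(wavelength_angstrom: float, field_megagauss: float) -> float:
    """Linear (normal) Zeeman shift of the sigma components, in angstroms.

    delta_lambda = e * lambda**2 * B / (4 * pi * m_e * c); only valid while
    the quadratic Zeeman effect is negligible.
    """
    wavelength_m = wavelength_angstrom * 1e-10
    field_tesla = field_megagauss * 100.0  # 1 MG = 1e6 G = 100 T
    shift_m = (E_CHARGE * wavelength_m**2 * field_tesla
               / (4 * math.pi * M_ELECTRON * C_LIGHT))
    return shift_m * 1e10


def zeeman_triplet(wavelength_angstrom: float, field_megagauss: float) -> tuple[float, float, float]:
    """Return the (sigma-, pi, sigma+) wavelengths of a normal Zeeman triplet."""
    shift = zeeman_shift_angstrom(wavelength_angstrom, field_megagauss)
    return (wavelength_angstrom - shift, wavelength_angstrom, wavelength_angstrom + shift)


H_ALPHA = 6562.8  # H-alpha rest wavelength in air [angstrom]
print(f"H-alpha shift per MG: {zeeman_shift_angstrom(H_ALPHA, 1.0):.1f} A")
print("Triplet at a hypothetical 5 MG:", [round(w, 1) for w in zeeman_triplet(H_ALPHA, 5.0)])
```

This gives roughly 20 Å of splitting per megagauss for Hα, which is why megagauss fields split the Balmer emission lines into components that are easy to resolve.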

A team of astronomers led by Jay Farihi of University College London, U.K., decided to investigate three objects of this rare class in order to better understand the nature of the entire population. For this purpose, they employed ULTRACAM, a frame-transfer CCD imaging camera mounted on the NTT. The study was complemented by data from NASA's Transiting Exoplanet Survey Satellite (TESS).

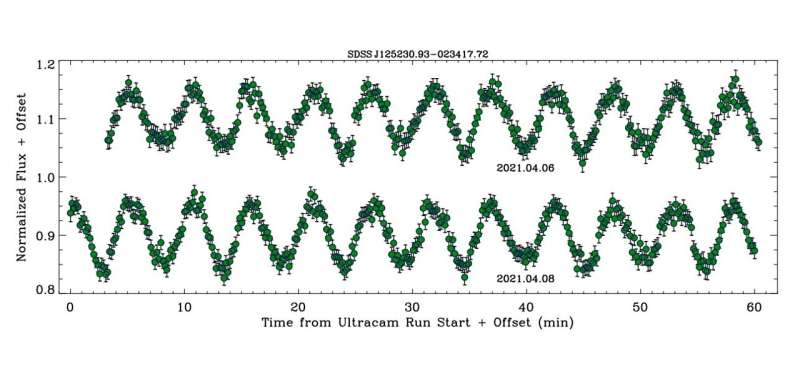

"This study focuses on light curves and the resulting periodicities of three DAHe white dwarfs, using both ground- and space-based photometric monitoring," the researchers wrote.

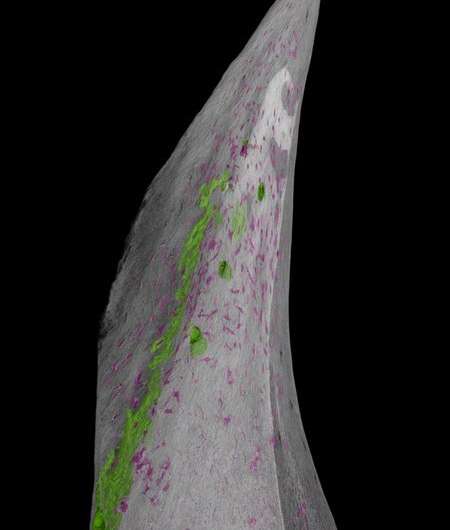

The three observed DAHe WDs were SDSS J125230.93−023417.7 (SDSS J1252 for short), LP 705-64, and WD J143019.29−562358.3 (WD J1430). The folded ULTRACAM light curves of SDSS J1252 and LP 705-64 exhibit alternating minima, indicative of two distinct star spots 180 degrees out of phase during rotation. For WD J1430, the light curves reveal a single maximum and minimum.
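A folded light curve is built by mapping every timestamp to a rotational phase, ((t − t₀)/P) mod 1, and averaging the flux in phase bins, so that many rotations stack into one cycle. The toy model below is a minimal sketch, not the authors' pipeline: the spot depths, noise level, period, and helper names are all hypothetical. It shows how two dark spots of unequal depth on opposite sides of a star produce two minima half a cycle apart with different depths.

```python
import math
import random


def spotted_flux(phase: float, depth_a: float = 0.04, depth_b: float = 0.025) -> float:
    """Toy model: two dark spots of unequal depth on opposite sides of the star.

    Each spot dims the star in proportion to its visibility,
    max(0, cos(2*pi*(phase - spot_phase))), so the minima fall at phases 0.25 and 0.75.
    """
    vis_a = max(0.0, math.cos(2 * math.pi * (phase - 0.25)))
    vis_b = max(0.0, math.cos(2 * math.pi * (phase - 0.75)))
    return 1.0 - depth_a * vis_a - depth_b * vis_b


def fold(times, fluxes, period, t0=0.0, n_bins=20):
    """Phase-fold a light curve on `period` and return the mean flux in each phase bin."""
    sums = [0.0] * n_bins
    counts = [0] * n_bins
    for t, f in zip(times, fluxes):
        phase = ((t - t0) / period) % 1.0
        k = min(int(phase * n_bins), n_bins - 1)
        sums[k] += f
        counts[k] += 1
    return [s / c if c else float("nan") for s, c in zip(sums, counts)]


# Simulated, unevenly sampled observations spanning ~50 rotations (arbitrary time units).
random.seed(1)
PERIOD = 1.0
times = sorted(random.uniform(0.0, 50.0) for _ in range(5000))
fluxes = [spotted_flux((t / PERIOD) % 1.0) + random.gauss(0.0, 0.003) for t in times]

binned = fold(times, fluxes, PERIOD)
n = len(binned)
primary = min(range(n), key=lambda k: binned[k])
secondary = min(range(n // 2, n), key=lambda k: binned[k])  # search the opposite half-cycle
print(f"primary minimum near phase {(primary + 0.5) / n:.2f}, flux {binned[primary]:.4f}")
print(f"secondary minimum near phase {(secondary + 0.5) / n:.2f}, flux {binned[secondary]:.4f}")
```

Folding the same data on half the true period would stack the two unequal dips on top of each other, so alternating minimum depths are a useful sign that one rotation contains two spots rather than one.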

The astronomers found that the amplitudes of the multi-band photometric variability of all three DAHe white dwarfs are several times larger than that of GD 356. They noted that all known DAHe stars have light-curve amplitudes that increase toward the blue in correlated ratios, which points to cool spots that produce higher contrast at shorter wavelengths.
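Why a cool spot dims a star more in the blue can be sketched with blackbody (Planck) spectra: the Wien side of the Planck curve is more sensitive to temperature, so the spot-to-photosphere brightness ratio drops at shorter wavelengths. This is a simplified illustration under stated assumptions, not the paper's model: it uses pure blackbodies instead of white dwarf model atmospheres, ignores limb darkening and projection, and the temperatures, filling factor, and SDSS-like band wavelengths are hypothetical.

```python
import math

H = 6.62607015e-34   # Planck constant [J s]
C = 2.99792458e8     # speed of light [m/s]
K_B = 1.380649e-23   # Boltzmann constant [J/K]


def planck(wavelength_m: float, temperature_k: float) -> float:
    """Blackbody spectral radiance B_lambda(T) [W m^-3 sr^-1]."""
    x = H * C / (wavelength_m * K_B * temperature_k)
    return 2 * H * C**2 / wavelength_m**5 / math.expm1(x)


def spot_amplitude(wavelength_m: float, t_phot: float, t_spot: float, filling_factor: float) -> float:
    """Fractional flux drop when a cool spot covering `filling_factor` of the
    visible disk rotates into view (no limb darkening or projection effects)."""
    contrast = 1.0 - planck(wavelength_m, t_spot) / planck(wavelength_m, t_phot)
    return filling_factor * contrast


# Hypothetical values, chosen only for illustration.
T_PHOT, T_SPOT, FILL = 8000.0, 7000.0, 0.05
BANDS_NM = {"u": 355, "g": 470, "r": 620, "i": 750}  # approximate effective wavelengths

amplitudes = {band: spot_amplitude(nm * 1e-9, T_PHOT, T_SPOT, FILL)
              for band, nm in BANDS_NM.items()}
for band, amp in amplitudes.items():
    print(f"{band}: {100 * amp:.2f}% dip")
```

The amplitudes rise steadily from i to u, and their ratios are fixed by the two temperatures, which is the kind of correlated, blue-increasing pattern the authors describe.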

According to the authors of the paper, their findings suggest that some magnetic WDs create intrinsic chromospheres as they cool, and that no external source is responsible for the observed temperature inversion.

"Given the lack of additional periodic signals and the compelling evidence of DAHe white dwarf clustering in the HR diagram (Walters et al, 2021; Reding et al, 2023; Manser et al, 2023), an intrinsic mechanism is the most likely source for the spotted regions and chromospheric activity," the researchers concluded.

More information: J. Farihi et al, Discovery of Dipolar Chromospheres in Two White Dwarfs, arXiv (2023). DOI: 10.48550/arxiv.2307.02543

Journal information: arXiv

© 2023 Science X Network


XRISM mission to study ‘rainbow’ of X-rays

Image: XRISM, shown in this artist’s concept, is an X-ray mission that will study some of the most energetic objects in the universe. Credit: NASA’s Goddard Space Flight Center Conceptual Image Lab

A new satellite called XRISM (X-ray Imaging and Spectroscopy Mission, pronounced “crism”) aims to pry apart high-energy light into the equivalent of an X-ray rainbow. The mission, led by JAXA (Japan Aerospace Exploration Agency), will do this using an instrument called Resolve. XRISM is scheduled to launch from Japan’s Tanegashima Space Center on Aug. 25, 2023 (Aug. 26 in Japan).

“Resolve will give us a new look into some of the universe’s most energetic objects, including black holes, clusters of galaxies, and the aftermath of stellar explosions,” said Richard Kelley, NASA’s XRISM principal investigator at NASA’s Goddard Space Flight Center in Greenbelt, Maryland. “We’ll learn more about how they behave and what they’re made of using the data the mission collects after launch.”

Resolve is an X-ray microcalorimeter spectrometer developed in collaboration between NASA and JAXA. It measures the tiny temperature changes created when an X-ray hits its 6-by-6-pixel detector. To measure that minuscule increase and determine the X-ray’s energy, the detector needs to cool down to around minus 460 Fahrenheit (minus 270 Celsius), just a fraction of a degree above absolute zero. The instrument reaches its operating temperature after a multistage mechanical cooling process inside a refrigerator-sized container of liquid helium.

By collecting thousands or even millions of X-rays from a cosmic source, Resolve can measure high-resolution spectra of the object. Spectra are measurements of light’s intensity over a range of energies. Prisms spread visible light into its different energies, which we know better as the colors of the rainbow.
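The microcalorimeter principle described above comes down to two steps: each absorbed X-ray raises the absorber temperature by ΔT = E/C, where C is the heat capacity, and the individually measured energies are then histogrammed into a spectrum. The sketch below illustrates both steps under loudly stated assumptions: the 0.1 pJ/K heat capacity, the 2 eV measurement error, the emission line at 6,700 eV, and the helper names are all hypothetical and are not Resolve specifications. Only the 400 to 12,000 eV range is taken from the mission description.

```python
import random

EV_TO_J = 1.602176634e-19  # joules per electron volt


def temperature_rise(energy_ev: float, heat_capacity_j_per_k: float) -> float:
    """Temperature jump of an absorber after one X-ray deposits its energy: dT = E / C."""
    return energy_ev * EV_TO_J / heat_capacity_j_per_k


def build_spectrum(energies_ev, e_min=400.0, e_max=12000.0, bin_width=5.0):
    """Histogram individually measured photon energies into a counts-per-bin spectrum."""
    n_bins = int((e_max - e_min) / bin_width)
    counts = [0] * n_bins
    for e in energies_ev:
        if e_min <= e < e_max:
            k = min(int((e - e_min) / bin_width), n_bins - 1)
            counts[k] += 1
    return counts


# Hypothetical pixel heat capacity of 0.1 pJ/K and a 6,000 eV photon.
print(f"dT = {temperature_rise(6000.0, 1e-13) * 1e3:.1f} mK")

# Hypothetical source: flat continuum plus one narrow line at 6,700 eV,
# with each photon energy measured to within a hypothetical 2 eV Gaussian error.
random.seed(0)
photons = [random.uniform(400.0, 12000.0) for _ in range(20000)]
photons += [random.gauss(6700.0, 2.0) for _ in range(2000)]
spectrum = build_spectrum(photons)
peak = max(range(len(spectrum)), key=spectrum.__getitem__)
print(f"brightest bin starts at {400.0 + 5.0 * peak:.0f} eV")
```

Because ΔT scales as 1/C, a smaller heat capacity gives a larger, easier-to-measure temperature jump per photon; keeping the detector extremely cold helps with this, as well as with reducing thermal noise.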
Scientists used prisms in early spectrometers to look for spectral lines, which occur when atoms or molecules absorb or emit energy. Now astronomers use spectrometers, tuned to all kinds of light, to learn about cosmic objects’ physical states, motions, and compositions. Resolve will do spectroscopy for X-rays with energies ranging from 400 to 12,000 electron volts by measuring the energies of individual X-rays to form a spectrum. (For comparison, visible light energies range from about 2 to 3 electron volts.)

“The spectra XRISM collects will be the most detailed we’ve ever seen for some of the phenomena we’ll observe,” said Brian Williams, NASA’s XRISM project scientist at Goddard. “The mission will provide us with insights into some of the most difficult places to study, like the internal structures of neutron stars and near-light-speed particle jets powered by black holes in active galaxies.”

The mission’s other instrument, developed by JAXA, is called Xtend. It will give XRISM one of the largest fields of view of any X-ray imaging satellite flown to date, observing an area about 60% larger than the average apparent size of the full Moon. Resolve and Xtend rely on two identical X-ray Mirror Assemblies developed at Goddard.

XRISM is a collaborative mission between JAXA and NASA, with participation by ESA (European Space Agency). NASA’s contribution includes science participation from the Canadian Space Agency.

SwRI team identifies giant swirling waves at the edge of Jupiter’s magnetosphere

Waves produced by Kelvin-Helmholtz instabilities transfer energy in the solar system

Peer-reviewed publication

Image: An SwRI-led team identified intermittent evidence of Kelvin-Helmholtz instabilities, giant swirling waves, at the boundary between Jupiter’s magnetosphere and the solar wind that fills interplanetary space, modeled here by University Corporation for Atmospheric Research scientists in a 2017 GRL paper. Credit: UCAR/Zhang, et al.
SAN ANTONIO, July 17, 2023: A team led by Southwest Research Institute (SwRI) and The University of Texas at San Antonio (UTSA) has found that NASA’s Juno spacecraft orbiting Jupiter frequently encounters giant swirling waves at the boundary between the solar wind and Jupiter’s magnetosphere. The waves are an important mechanism for transferring energy and mass from the solar wind, a stream of charged particles emitted by the Sun, to planetary space environments.

Journal: Geophysical Research Letters | Method of research: Observational study

Ring-sheared drop experiment on ISS expanded

New NSF grant supports ongoing research into proteins

Grant and award announcement

Image: RPI’s ring-sheared drop experiment on the International Space Station. Credit: Rensselaer Polytechnic Institute

Rensselaer Polytechnic Institute (RPI) researchers Amir Hirsa, professor of mechanical, aerospace, and nuclear engineering, and Patrick Underhill, professor of chemical and biological engineering, have received a new three-year grant from the National Science Foundation (NSF) for $452,847 to study the physics of protein solutions using the ring-sheared drop module aboard the International Space Station. The grant starts on August 1, almost 10 years after the start of the ongoing NASA grant that initiated this technology.

The ring-sheared drop concept requires a microgravity environment, like the one found in orbit, where surface tension alone can hold a volume of liquid together. The team was looking for a way to study fluid dynamics without interference from the solid walls of a container, which would typically be necessary to hold a fluid being studied on Earth.

Proteins are large, flexible macromolecules that perform a vast range of functions within living organisms, from copying genetic material to providing structural integrity to cells and organisms.
Because of their size, flexibility, and biochemistry, proteins can undergo structural changes that dictate their function or sometimes cause disease. The ability to understand and predict how the conditions that proteins experience affect their structure and conformation, and in turn their functioning in solution, is a holy grail in science, according to Hirsa. This work will be used to develop predictive models for both fundamental science and industry, including first-principles models and the manufacturing of pharmaceuticals.

The purpose of the new grant is to gain a deeper scientific understanding of protein association, aggregation, and gelation in systems with high protein concentration in the presence of free surfaces.

About Rensselaer Polytechnic Institute: Founded in 1824, Rensselaer Polytechnic Institute is America’s first technological research university. Rensselaer encompasses five schools, over 30 research centers, more than 140 academic programs including 25 new programs, and a dynamic community made up of over 6,800 students and 110,000 living alumni. Rensselaer faculty and alumni include upwards of 155 National Academy members, six members of the National Inventors Hall of Fame, six National Medal of Technology winners, five National Medal of Science winners, and a Nobel Prize winner in Physics. With nearly 200 years of experience advancing scientific and technological knowledge, Rensselaer remains focused on addressing global challenges with a spirit of ingenuity and collaboration. To learn more, please visit www.rpi.edu.
Contact (general inquiries): newsmedia@rpi.edu

Astronomers discover striking evidence of ‘unusual’ stellar evolution

Magnetic activity plays key role in exoplanet habitability

Peer-reviewed publication

COLUMBUS, Ohio: Astronomers have found evidence that some stars boast unexpectedly strong surface magnetic fields, a discovery that challenges current models of how they evolve.

In stars like our sun, surface magnetism is linked to stellar spin, a process similar to the inner workings of a hand-cranked flashlight. Strong magnetic fields are seen at the hearts of sunspot regions and cause a variety of space weather phenomena.

Until now, low-mass stars (stars of lower mass than our sun, which can rotate either very rapidly or relatively slowly) were thought to exhibit very low levels of magnetic activity, an assumption that has made them seem ideal hosts for potentially habitable planets.

In a new study, published today in The Astrophysical Journal Letters, researchers from The Ohio State University argue that a new internal mechanism called core-envelope decoupling, in which the surface and core of the star start out spinning at the same rate and then drift apart, might be responsible for enhancing magnetic fields on cool stars. This process could intensify the stars’ radiation for billions of years and affect the habitability of their nearby exoplanets.

The research was made possible by a technique that Lyra Cao, lead author of the study and a graduate student in astronomy at Ohio State, and co-author Marc Pinsonneault, a professor of astronomy at Ohio State, developed earlier this year to make and characterize starspot and magnetic field measurements.

Although low-mass stars are the most common stars in the Milky Way and often host exoplanets, scientists know comparatively little about them, said Cao.
For decades, it was assumed that the physical processes of lower-mass stars followed those of solar-type stars. Because stars gradually lose angular momentum as they spin down, astronomers can use stellar spin as a tool to understand a star’s physical processes and how it interacts with its companions and surroundings. However, there are times when the stellar rotation clock appears to stop in place, Cao said.

Using public data from the Sloan Digital Sky Survey to study a sample of 136 stars in M44, a star cluster also known as Praesepe or the Beehive Cluster, the team found that the magnetic fields of the low-mass stars in the region appeared much stronger than current models could explain.

While previous research revealed that the Beehive Cluster is home to many stars that defy current theories of rotational evolution, one of the team’s most exciting discoveries was that these stars’ magnetic fields may be just as unusual: far stronger than predicted by current models.

“To see a link between the magnetic enhancement and rotational anomalies was incredibly exciting,” said Cao. “It indicates that there might be some interesting physics at play here.”

The team also hypothesized that the process of syncing up a star’s core and envelope might induce the magnetism found in these stars, giving it a starkly different origin from the kind seen on the sun.

“We’re finding evidence that there’s a different kind of dynamo mechanism driving the magnetism of these stars,” said Cao. “This work shows that stellar physics can have surprising implications for other fields.”

According to the study, these findings have important implications for our understanding of astrophysics, particularly for the hunt for life on other planets.

“Stars experiencing this enhanced magnetism are likely going to be battering their planets with high-energy radiation,” Cao said.
“This effect is predicted to last for billions of years on some stars, so it’s important to understand what it might do to our ideas of habitability.”

But these findings shouldn’t put a damper on the search for life beyond Earth. With further research, the team’s discovery could provide more insight into where to look for planetary systems capable of hosting life. Closer to home, Cao believes her team’s discoveries might lead to better simulations and theoretical models of stellar evolution.

“The next thing to do is verify that enhanced magnetism happens on a much larger scale,” said Cao. “If we can understand what’s going on in the interiors of these stars as they experience shear-enhanced magnetism, it’s going to lead the science in a new direction.”

The study was supported by The Alfred P. Sloan Foundation, the U.S. Department of Energy Office of Science and the National Science Foundation. Jennifer van Saders from the University of Hawaii was also a co-author.

Contact: Lyra Cao, Cao.861@osu.edu | Written by: Tatyana Woodall, Woodall.52@osu.edu

Journal: The Astrophysical Journal Letters | Method of research: Observational study | Article title: Core-envelope Decoupling Drives Radial Shear Dynamos in Cool Stars | Article publication date: 17-Jul-2023