Mapping methane emissions from rivers around the globe reveals surprising sources

Freshwater ecosystems account for half of global emissions of methane, a potent greenhouse gas that contributes to global warming. Rivers and streams, especially, are thought to emit a substantial amount of that methane, but the rates and patterns of these emissions at global scales remain largely undocumented.

An international team of researchers, including University of Wisconsin–Madison freshwater ecologists, has changed that with a new description of the global rates, patterns and drivers of methane emissions from running waters. Their findings, published today in the journal Nature, will improve methane estimates and models of climate change, and point to land-management changes and restoration opportunities that can reduce the amount of methane escaping into the atmosphere.

The new study confirms that rivers and streams do, indeed, produce a lot of methane and play a major role in climate change dynamics. But the study also reveals some surprising results about how—and where—that methane is produced.

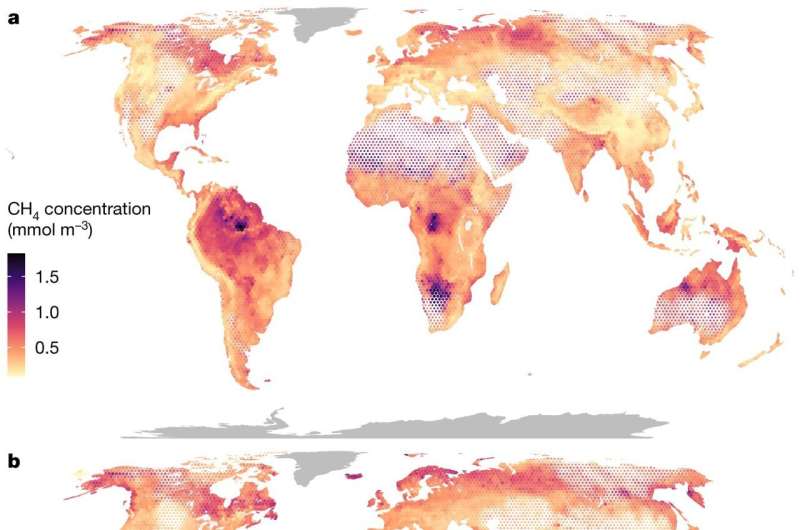

"We expected to find the highest methane emissions at the tropics, because the biological production of methane is highly sensitive to temperature," says Emily Stanley, a professor at UW–Madison's Center for Limnology and co-author of the Nature report. Instead, she says, their team found that methane emissions in the tropics were comparable to those in the much colder streams and rivers of boreal forests—pine-dominant forests that stretch around the Northern Hemisphere—and Arctic tundra habitats.

Temperature, it turns out, isn't the primary variable driving aquatic methane emissions. Instead, the study found, "the amount of methane coming out of streams and rivers regardless of their latitude or temperature was primarily controlled by the surrounding habitat connected to them," Stanley says.

Rivers and streams in boreal forests and polar regions at high latitudes are often tied to peatlands and wetlands, while the dense forests of the Amazon and Congo river basins also supply the waters running through them with soils rich in organic matter. Both systems produce substantial amounts of methane because they often result in low-oxygen conditions preferred by microbes that produce methane while breaking down all that organic matter.

However, not all high-methane rivers and streams come by these emissions naturally. In some parts of the world, freshwater methane emissions are primarily controlled by human activity in both urban and rural communities.

"Humans are actively modifying river networks worldwide and, in general, these changes seem to favor methane emissions," says Gerard Rocher, lead author of the report and a postdoctoral researcher with both the Swedish University of Agricultural Sciences and the Blanes Centre of Advanced Studies in Spain.

Habitats that have been highly modified by humans—like ditched streams draining agricultural fields, rivers below wastewater treatment plants, or concrete stormwater canals—also often result in the organic-matter-rich, oxygen-poor conditions that promote high methane production.

The significance of human involvement can be considered good news, according to Rocher.

"One implication of this finding is that freshwater conservation and restoration efforts could lead to a reduction in methane emissions," he says.

Slowing the flow of pollutants like fertilizer, human and animal waste or excessive topsoil into rivers and streams would help limit the ingredients that lead to high methane production in freshwater systems.

"From a climate change perspective, we need to worry more about systems where humans are creating circumstances that produce methane than the natural cycles of methane production," Stanley says.

The study also demonstrates the importance of teams of scientists working to compile and examine gigantic datasets in understanding the scope of climate change. The results required a years-long collaboration among the Swedish University of Agricultural Sciences, Umeå University, UW–Madison and other institutions around the world. The researchers collected methane measurements on rivers and streams across several countries and employed state-of-the-art computer modeling and machine learning to "massively expand" a dataset Stanley first began to compile with her graduate students back in 2015.

Now, Stanley says, "we have a lot more confidence in methane estimates." The researchers hope their results lead to better understanding of the magnitude and spatial patterns of all sources of methane into Earth's atmosphere, and that the new data improves large-scale models used to understand global climate and predict its future.

More information: Gerard Rocher-Ros et al, Global methane emissions from rivers and streams, Nature (2023). DOI: 10.1038/s41586-023-06344-6

Journal information: Nature

Provided by University of Wisconsin-Madison