How Airbnb is fuelling gentrification in Toronto

The average asking price for a rental unit in Canada reached $2,042 in June, marking a 7.5 percent increase from 2022. Metropolitan districts are particularly affected by rising rental costs, with some local families forced to relocate due to a lack of affordable housing.

While several factors may contribute to rising rents, some have pointed to Airbnb as one of the reasons for the rental crisis. Airbnb says it is not the cause of the housing affordability crisis.

Despite the significant public interest in how short-term rentals like Airbnb might make housing less affordable, empirical evidence of exactly how, and to what extent, this is happening is sparse.

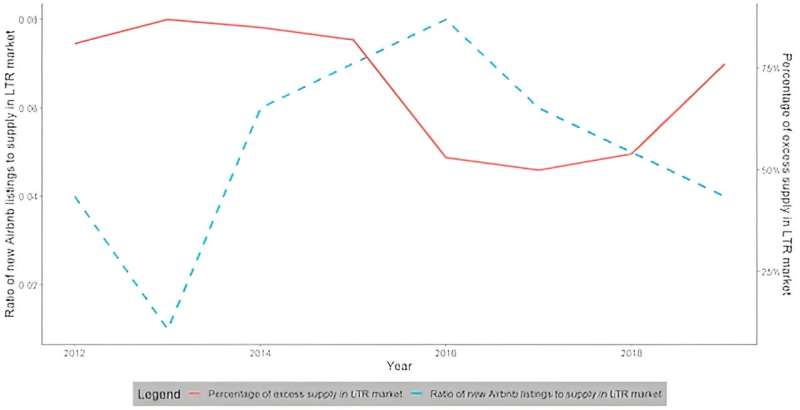

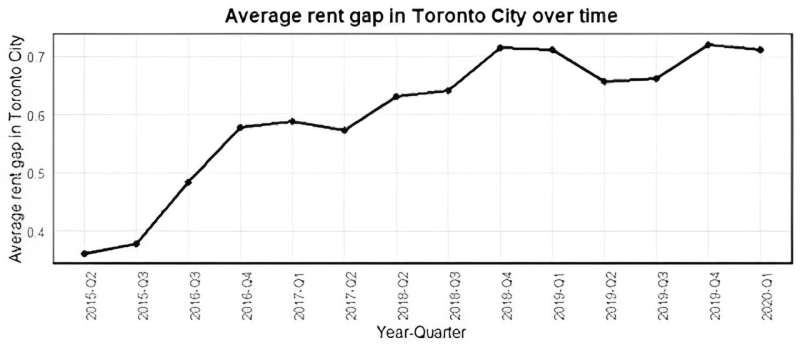

Our preliminary study of Toronto's rental market, which will be submitted later this summer to the Social Science Research Network (an open-access repository of academic research papers), used data from the Toronto Regional Real Estate Board and Airbnb listings from 2015 to 2020. It suggested there were two ways Airbnb was affecting the rental market during this period: by reducing the number of available rentals and by contributing to the gentrification of neighborhoods.

How Airbnb may lead to gentrification

Short-term rentals, like those offered by Airbnb, bring in outsiders, often with little regard for local community norms, leading to conflicts and complaints.

While dealing with these temporary disturbances is usually possible with traditional policing and communication, such short-term rentals can have lasting impacts on neighborhoods.

When homeowners convert their properties into Airbnb rentals, it may reduce the long-term rental supply in their neighborhoods. This could increase rental prices, stretching the budget of lower-income families.

The lucrative short-term market may also attract new housing investments targeted at Airbnb rentals. This could further squeeze local families, who may find themselves in bidding wars. Eventually, the economic pressure could force these families out of their neighborhoods, leaving only the wealthier population in place.

Property values could increase as vacated homes are filled by wealthier families moving in from outside, who can afford the high prices. Over time, the neighborhood could come to consist mostly of wealthier residents, a process called gentrification.

Is Airbnb driving up prices in Toronto?

With 6.6 million active listings spanning over 220 countries and 100,000 cities, Airbnb offers three types of accommodations: entire homes or apartments, private rooms and shared rooms.

Our analysis focused on the entire homes or apartments category. During the study period, owners of these accommodations were able to choose between the long-term and short-term rental markets, whereas those who rented out only a portion of their residence were less likely to be part of the long-term market.

We found that Airbnb rentals can squeeze out long-term rentals in neighborhoods. As the number of Airbnb rentals in a neighborhood increased, the availability of long-term rentals decreased and vice versa.

On average, we estimate that a one percent increase in Airbnb listings per square kilometer in a district is associated with a 0.09 percent increase in long-term rental rates. A similar study conducted in the United States estimated an average increase of 0.018 percent. While the numbers may not be directly comparable, since one is for a metropolitan area and the other is for the whole country, they are indicative of the potential impact.
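
To make the size of this estimate concrete, the short sketch below applies the 0.09 figure to a made-up district. The starting rent, the listing growth rate and the function names are hypothetical and chosen only for illustration, and scaling the estimate linearly to larger changes is a simplifying assumption rather than the study's own model.

```python
# Figure reported in the article: a 1% rise in Airbnb listings per square
# kilometer is associated with a 0.09% rise in long-term rental rates.
LISTING_ELASTICITY = 0.09


def associated_rent_change_pct(listing_change_pct: float) -> float:
    """Percent change in rent associated with a percent change in listings.

    Assumes the reported association scales linearly, which is a
    simplification and not something the article states.
    """
    return LISTING_ELASTICITY * listing_change_pct


def associated_rent_change_dollars(base_rent: float, listing_change_pct: float) -> float:
    """Dollar change in monthly rent for a given starting rent."""
    return base_rent * associated_rent_change_pct(listing_change_pct) / 100.0


# Hypothetical inputs, for illustration only.
example_rent = 2000.0           # assumed monthly rent in dollars
example_listing_growth = 10.0   # assumed 10% growth in listings per km^2

pct = associated_rent_change_pct(example_listing_growth)
dollars = associated_rent_change_dollars(example_rent, example_listing_growth)
print(f"Associated rent change: {pct:.2f}% (about ${dollars:.0f} per month)")
```

Under these assumptions, even a sizeable jump in listings corresponds to a rent change of under one percent, which is why the estimate is best read as an average association rather than a forecast for any particular district.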

We found evidence that Airbnb may be leading to higher potential rent income for property owners. This difference in income between the potential short-term rentals and traditional long-term rentals, known as the rent gap, draws investors to properties that can be used for short-term rentals.

The reduced availability of long-term rentals can lead to bidding wars for housing, which can lead to even higher rents. As telltale evidence, we found that a 10 percent increase in this rent gap is associated with a 3.1 percent surge in long-term rental prices. This is equivalent to an $80 monthly rent hike for the average one-bedroom property in Toronto.
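
The arithmetic behind these two figures can be checked with a short sketch. It backs out the average one-bedroom rent implied by pairing a 3.1 percent increase with $80; that implied rent is derived here and is not a number reported in the study. The function names are hypothetical, and the linear scaling to other rent-gap changes is an assumption.

```python
# Figures reported in the article: a 10% increase in the rent gap is
# associated with a 3.1% increase in long-term rents, or about $80 a month.
RENT_GAP_CHANGE_PCT = 10.0
RENT_CHANGE_PCT = 3.1
RENT_CHANGE_DOLLARS = 80.0


def implied_base_rent(dollar_increase: float, pct_increase: float) -> float:
    """Base rent at which pct_increase percent equals dollar_increase."""
    return dollar_increase / (pct_increase / 100.0)


def rent_increase_dollars(base_rent: float, rent_gap_change_pct: float) -> float:
    """Dollar increase associated with a rent-gap change.

    Assumes the association scales linearly, which the article does not state.
    """
    rent_change_pct = RENT_CHANGE_PCT * rent_gap_change_pct / RENT_GAP_CHANGE_PCT
    return base_rent * rent_change_pct / 100.0


base = implied_base_rent(RENT_CHANGE_DOLLARS, RENT_CHANGE_PCT)
print(f"Implied average one-bedroom rent: ${base:,.0f} per month")
print(f"Increase for a 10% rent-gap rise: ${rent_increase_dollars(base, 10.0):.0f}")
```

The implied base rent comes out at roughly $2,580 a month. Because the $80 figure is presumably rounded, this should be treated as an approximation.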

These results offer tentative evidence of the potential impact of Airbnb on long-term rental rates during the time period of the study.

Mixed social impact

Despite evidence that Airbnb may be associated with rising rents, its broader social impact remains controversial.

For homeowners, Airbnb offers a new income source. Travelers can boost local employment opportunities as retailers, restaurants and other businesses cater to their needs. A flow of young people can energize neighborhoods with their joie de vivre and creativity.

Yet affordable housing is a basic need for our society. With almost 40,000 total listings in Toronto, Vancouver and Montréal, Airbnb is a big player in the economy, but is only one part of the larger picture affecting the availability of affordable housing.

Attempts to mitigate Airbnb's effect on housing affordability have had challenges. Toronto's short-term rental bylaw, which was upheld in 2019, limits Airbnb stays in principal residences to a maximum of 180 days per year. The city subsequently began enforcing the licensing and registration of short-term rentals in 2021.

Narrowly focused policy interventions may not only be ineffective, but may also have unexpected negative impacts. There is evidence, for example, that restricting Airbnb rentals reduces the development of new housing units, leading to less housing availability. These factors illustrate how Airbnb is part of a bigger picture, and addressing this complex issue will require more studies and creative policy measures.

This is an updated version of a story originally published on Aug. 13, 2023. The updated version makes clear the context of the research cited in the article is for the period 2015-20 only and does not analyze the rental market since then.

This article is republished from The Conversation under a Creative Commons license. Read the original article.