OpenAI staff are putting their visas at risk to get Sam Altman back as CEO

Tom Carter

Tue, November 21, 2023

OpenAI CEO Sam Altman was fired by the company's board on Friday. Justin Sullivan/Getty Images

More than 700 OpenAI employees have signed a letter threatening to quit if Sam Altman isn't reinstated as CEO.

Some of them say they are on work-dependent visas, which they could lose if they are forced to quit.

It's a sign of how much loyalty Altman has inspired among staff.

OpenAI's employees are calling on the company's board to bring back Sam Altman — and some are even willing to put their visas at risk to get him back as CEO.

A number of OpenAI employees on work-related visas say they have signed a letter threatening to quit if Sam Altman is not brought back as CEO, meaning they could lose the right to remain in the US should they resign.

As of Monday evening, over 700 of OpenAI's 770 employees had signed the letter threatening to quit and join Altman at Microsoft if the AI startup's board does not reinstate him as CEO and resign.

That includes senior figures such as CTO Mira Murati and chief scientist Ilya Sutskever — who had a change of heart after initially backing the board coup against Altman.

"I am on an H-1B, in the process of getting my green card and relocating my family to the US," said OpenAI technical staffer Reiichiro Nakano in a post on X.

"Me and many other colleagues in a similar situation have signed this letter. I do not know what will happen next, but I am confident we will be taken care of. The board should resign," he said.

Boris Power, OpenAI's head of applied research, also posted on X that he risked losing his visa should he quit the company.

"I'm on a research visa too that I will lose if I resign. These are details — onwards with the mission!" he said.

OpenAI's employees have publicly backed Altman to the hilt since he was unexpectedly fired by the company's board on Friday, posting coordinated messages on social media and reportedly refusing to attend an all-hands hosted by new boss Emmett Shear.

A number of senior OpenAI employees are already expected to follow Altman and ex-OpenAI president Greg Brockman to Microsoft.

Altman has also hinted that workers who choose to resign from OpenAI will be welcomed into the new AI team he is heading up at Microsoft, posting on X that "we are all going to work together some way or other, and I'm so excited."

In the letter to the board calling for its resignation, OpenAI's employees said that Microsoft "assured us there are positions for all OpenAI employees" at the company — although sources told Business Insider that these assurances were strictly verbal and not set in stone.

But Microsoft boss Satya Nadella said in a conversation with tech journalist Kara Swisher on an episode of her podcast that aired on Monday that it would "definitely have a place for all AI talent."

OpenAI did not immediately respond to a request for comment from Business Insider, made outside normal working hours.

OpenAI Engineers Earning $800,000 a Year Turn Rare Skillset Into Leverage

Jo Constantz

Wed, November 22, 2023

(Bloomberg) -- OpenAI reinstated Chief Executive Officer Sam Altman after hundreds of workers threatened to quit over the ChatGPT creator's ouster, highlighting just how much leverage the tech industry's most valued workers hold right now.

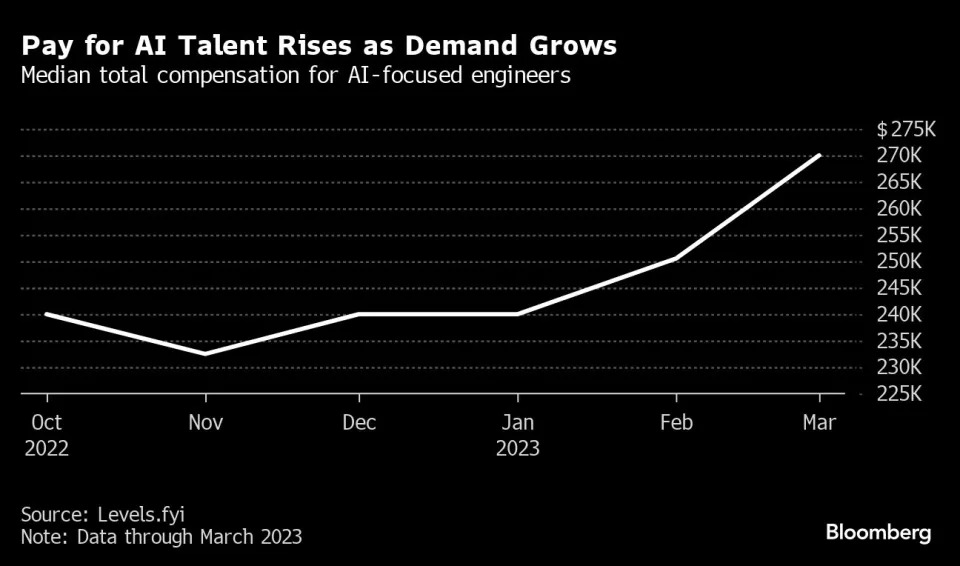

Artificial intelligence engineers earn anywhere from 8% to 12.5% more than their non-AI counterparts, according to an analysis by compensation data platform Levels.fyi published in May.

The most common salary range for an engineering job listed on OpenAI’s website is $200,000 to $370,000, though a handful of more specialized roles advertise ranges from $300,000 to $450,000, said Roger Lee, co-founder of compensation benchmarking firm Comprehensive.io. Salary ranges don’t include bonuses or stock awards, which can push a $300,000 base salary closer to $800,000 in total compensation, according to Levels.fyi.

In an industry where talent is the scarcest resource, the kind of exodus threatened at OpenAI would have been catastrophic. “For emerging technologies like AI, you only have a very small, small group of people who are experienced. They are the product, they are the company,” said Julia Pollak, chief economist at job site ZipRecruiter.

That put OpenAI employees in an unusually powerful position to exert direct pressure on the company’s board.

“The supply constraint is a very real, binding one, especially in the short- to medium-term,” she said. “You can’t easily train these people, you can’t easily recruit them from elsewhere. Retaining the ones you’ve got is the most important strategy.”

As for recruiting from universities, there’s a big difference between understanding AI models on a theoretical level and having the skills and experience to actually apply them. OpenAI’s highly specialized software systems also make its current developers even more valuable.

“It takes a long time to learn an actual company’s code and tech stack. An AI engineer inside the company is worth three AI engineers from outside the company, given that dynamic,” Pollak said.

OpenAI staff already had job offers waiting. Before Altman was reinstated, Microsoft Corp. said they would be welcome to join its new AI research lab. Microsoft has a roughly 49% stake in OpenAI.

Exclusive-OpenAI researchers warned board of AI breakthrough ahead of CEO ouster, sources say

Wed, November 22, 2023

By Anna Tong, Jeffrey Dastin and Krystal Hu

(Reuters) - Ahead of OpenAI CEO Sam Altman’s four days in exile, several staff researchers wrote a letter to the board of directors warning of a powerful artificial intelligence discovery that they said could threaten humanity, two people familiar with the matter told Reuters.

The previously unreported letter and AI algorithm were key developments before the board's ouster of Altman, the poster child of generative AI, the two sources said. Prior to his triumphant return late Tuesday, more than 700 employees had threatened to quit and join backer Microsoft in solidarity with their fired leader.

The sources cited the letter as one factor among a longer list of grievances by the board leading to Altman's firing, among which were concerns over commercializing advances before understanding the consequences. Reuters was unable to review a copy of the letter. The staff who wrote the letter did not respond to requests for comment.

After being contacted by Reuters, OpenAI, which declined to comment, acknowledged in an internal message to staffers a project called Q* and a letter to the board before the weekend's events, one of the people said. An OpenAI spokesperson said that the message, sent by long-time executive Mira Murati, alerted staff to certain media stories without commenting on their accuracy.

Some at OpenAI believe Q* (pronounced Q-Star) could be a breakthrough in the startup's search for what's known as artificial general intelligence (AGI), one of the people told Reuters. OpenAI defines AGI as autonomous systems that surpass humans in most economically valuable tasks.

Given vast computing resources, the new model was able to solve certain mathematical problems, the person said on condition of anonymity because the individual was not authorized to speak on behalf of the company. Though only performing math on the level of grade-school students, acing such tests made researchers very optimistic about Q*’s future success, the source said.

Reuters could not independently verify the capabilities of Q* claimed by the researchers.

'VEIL OF IGNORANCE'

Researchers consider math to be a frontier of generative AI development. Currently, generative AI is good at writing and language translation by statistically predicting the next word, and answers to the same question can vary widely. But conquering the ability to do math — where there is only one right answer — implies AI would have greater reasoning capabilities resembling human intelligence. This could be applied to novel scientific research, for instance, AI researchers believe.

Unlike a calculator that can solve a limited number of operations, AGI can generalize, learn and comprehend.

In their letter to the board, researchers flagged AI’s prowess and potential danger, the sources said without specifying the exact safety concerns noted in the letter. There has long been discussion among computer scientists about the danger posed by highly intelligent machines, for instance if they might decide that the destruction of humanity was in their interest.

Researchers have also flagged work by an "AI scientist" team, the existence of which multiple sources confirmed. The group, formed by combining earlier "Code Gen" and "Math Gen" teams, was exploring how to optimize existing AI models to improve their reasoning and eventually perform scientific work, one of the people said.

Altman led efforts to make ChatGPT one of the fastest growing software applications in history and drew from Microsoft the investment and computing resources necessary to get closer to AGI.

In addition to announcing a slew of new tools in a demonstration this month, Altman last week teased at a summit of world leaders in San Francisco that he believed major advances were in sight.

"Four times now in the history of OpenAI, the most recent time was just in the last couple weeks, I've gotten to be in the room, when we sort of push the veil of ignorance back and the frontier of discovery forward, and getting to do that is the professional honor of a lifetime," he said at the Asia-Pacific Economic Cooperation summit.

A day later, the board fired Altman.

(Anna Tong and Jeffrey Dastin in San Francisco and Krystal Hu in New York; Editing by Kenneth Li and Lisa Shumaker)

Tue, November 21, 2023

From left to right: Ilya Sutskever, Tasha McCauley, Adam D’Angelo and Helen Toner.

After a weekend of boardroom drama at OpenAI, the fate of its cofounder and ousted CEO Sam Altman is still undecided. Although Altman and his cofounder, former OpenAI president Greg Brockman, have accepted new jobs at Microsoft (MSFT), the move isn’t a done deal and Altman is still hoping to get his old job back, The Verge reported yesterday (Nov. 20). Top OpenAI investors are also pushing the company’s board to reinstate Altman as CEO, the Wall Street Journal reported today (Nov. 21).

Due to OpenAI’s unusual structure as a capped-profit arm under a nonprofit organization, the company’s board has total control over matters like CEO appointment, even though most of its members don’t actually work at the company. Microsoft, which has invested $13 billion in OpenAI and owns about half of the company, has no say in corporate governance.

At the center of the ongoing crisis is OpenAI’s chief scientist Ilya Sutskever, a board member who voted to oust Altman last week but now says he regrets the decision. Over the weekend, he cosigned a letter to OpenAI’s four-person board, threatening to leave the company unless Altman is reinstated. The letter has been signed by more than 700 of OpenAI’s approximately 770 employees.

The Verge reported Altman and Brockman are willing to return to OpenAI if the remaining three board members step aside.

Who’s on OpenAI’s all-powerful board?

Altman and Brockman both held seats on OpenAI’s board. After their exits last week, the board has four remaining members:

Ilya Sutskever, 37 or 38, is a computer scientist known for his contribution to the field of deep learning. He is one of the inventors of AlexNet, a convolutional neural network architecture, and a co-author of the AlphaGo paper published in 2016. Sutskever was born in Russia and grew up in Israel, where he attended college before moving with his family to Canada.

Sutskever was a research scientist at Google Brain, an A.I. research unit of Google, from 2013 to 2015. In late 2015, Sutskever left Google to cofound OpenAI and serve as its chief scientist. He has been on the company’s board since 2015.

Adam D’Angelo, 39, is the cofounder and CEO of Quora, a question-and-answer social networking site. Before founding Quora in 2009, D’Angelo served as chief technology officer and head of engineering of Facebook, now Meta (META), from 2006 to 2008. While attending high school in the early 2000s, D’Angelo co-developed a music-recommendation program called Synapse Media Player with his classmate Mark Zuckerberg, according to David Kirkpatrick’s 2010 book The Facebook Effect.

D’Angelo graduated from the California Institute of Technology in 2006 with a bachelor’s degree in Computer Science. He joined OpenAI’s board of directors in 2018.

Tasha McCauley is an adjunct senior management scientist at the think tank Rand Corporation, a job she started earlier this year, according to her LinkedIn profile. She is a cofounder of GeoSim Systems, a geospatial technology startup where she served as CEO until last year. In the early 2010s, McCauley was a teaching fellow in robotics and A.I. at Singularity University, which offers executive educational programs.

McCauley joined OpenAI’s board in 2018. She has been married to actor Joseph Gordon-Levitt since 2014.

Helen Toner has served as director of strategy at Georgetown University’s Center for Security and Emerging Technology since 2018. Before that, Toner spent less than a year at the University of Oxford’s Center for the Governance of AI, according to her LinkedIn profile. Between 2015 and 2018, she was a research analyst at Open Philanthropy, a nonprofit cofounded by Facebook cofounder Dustin Moskovitz. Toner is the newest member of OpenAI’s board, joining in late 2021.

Why did the board insist on firing Altman?

OpenAI’s board fired Altman in a public announcement on Nov. 17 and reportedly gave little notice to the company’s management team, investors or Altman himself. Over the weekend, OpenAI’s leadership team pressed the board to explain what drove their abrupt decision but didn’t receive much of an answer, according to the Journal.

In a message to employees on Nov. 19, OpenAI’s board reaffirmed its decision to oust Altman and said their decision was “not about any singular incident” but because Altman has “lost the trust of the board of directors.”

Sources told the Journal that one of the board’s concerns was Altman’s recent involvement in two outside business endeavors: a consumer hardware device he’s been building with Jony Ive, Apple’s former chief design officer, and an A.I. chip startup for which he’s been raising money.

Wed, November 22, 2023

By Aditya Soni

(Reuters) - Sam Altman's return as OpenAI's chief executive will strengthen his grip on the startup and may leave the ChatGPT creator with fewer checks on his power as the company introduces technology that could upend industries, corporate governance experts and analysts said.

OpenAI is bringing Altman back just days after his ouster as well as installing a revamped board that could bring sharper scrutiny to the startup at the heart of the AI boom, but strong support from investors including Microsoft may give Altman more leeway to commercialize the technology.

"Sam's return may put an end to the turmoil on the surface, but there may continue to be deep governance issues," said Mak Yuen Teen, director of the centre for investor protection at the National University of Singapore Business School.

"Altman seems awfully powerful and it is unclear that any board would be able to oversee him. The danger is the board becomes a rubber stamp," he said.

OpenAI's new board will boast more experience at the top level and strong ties to both the U.S. government and Wall Street.

The board fired Altman last week with little explanation and attempted to move on by naming an interim CEO twice. However, pressure from Microsoft, along with the strong loyalty the 38-year-old commands among OpenAI staff, more than 700 of whom threatened to leave the company, led to Altman's reinstatement as of Wednesday.

"Altman has been invigorated by the last few days," GlobalData analyst Beatriz Valle said. But that could come at a cost, she said, adding that he has "too much power now."

Bret Taylor, former co-CEO of Salesforce who also played a key role in forcing through Elon Musk's $44 billion purchase of Twitter as a director, will be chairing the board.

Other members include former U.S. Treasury Secretary Larry Summers, a Harvard academic and longtime economic aide to Democratic presidents.

"The fact that Summers and Taylor will join OpenAI is quite extraordinary and marks a dramatic reversal of fortunes in the company," Valle said.

Summers, who also sits on the board of Jack Dorsey's fintech firm Block, has in recent months been vocal about the potential job losses and disruption that could be caused by AI.

"ChatGPT is coming for the cognitive class. It's going to replace what doctors do," he said in a post on X in April.

Larry Summers in 2022. REUTERS/Brendan McDermid

OpenAI's previous board consisted of entrepreneur Tasha McCauley, Helen Toner, director of strategy at Georgetown's Center for Security and Emerging Technology, OpenAI chief scientist Ilya Sutskever, as well as Quora CEO Adam D'Angelo, who also sits on the new board.

It was not immediately clear if any of the other directors would remain, including Sutskever, who joined in the effort to fire Altman and then signed onto an employee letter demanding his return, expressing regret for his "participation in the board's actions."

OpenAI on X said it was "collaborating to figure out the details" of the new board.

Microsoft declined to comment. Summers and OpenAI did not immediately respond to requests for comment. Sutskever, Altman and Taylor could not be immediately reached for comment.

Some analysts say the management fiasco will ensure that OpenAI executives proceed cautiously, as the high-flying startup will now be subject to more scrutiny. Several noted that companies such as Facebook parent Meta have flourished with a powerful CEO despite concerns about corporate governance.

"Sam definitely comes out stronger but also dirtied and will have more of a microscope from the AI and broader tech and business community," Gartner analyst Jason Wong said. "He can no longer do no wrong."

(Reporting by Aditya Soni in Bengaluru; Editing by Mark Porter)

Kyle Wiggers

Wed, November 22, 2023

Darrell Etherington with files from Getty under license

The OpenAI power struggle that captivated the tech world after co-founder Sam Altman was fired has finally reached its end -- at least for the time being. But what to make of it?

It feels almost as though some eulogizing is called for -- like OpenAI died and a new, but not necessarily improved, startup stands in its place. Ex-Y Combinator president Altman is back at the helm, but is his return justified? OpenAI's new board of directors is getting off to a less diverse start (i.e. it's entirely white and male), and the company's founding philanthropic aims are in jeopardy of being co-opted by more capitalist interests.

That's not to suggest that the old OpenAI was perfect by any stretch.

As of Friday morning, OpenAI had a six-person board -- Altman, OpenAI chief scientist Ilya Sutskever, OpenAI president Greg Brockman, tech entrepreneur Tasha McCauley, Quora CEO Adam D'Angelo and Helen Toner, director at Georgetown’s Center for Security and Emerging Technology. The board was technically tied to a nonprofit that had a majority stake in OpenAI's for-profit side, with absolute decision-making power over the for-profit OpenAI's activities, investments and overall direction.

OpenAI's unusual structure was established by the company's co-founders, including Altman, with the best of intentions. The nonprofit's exceptionally brief (500-word) charter outlines that the board make decisions ensuring "that artificial general intelligence benefits all humanity," leaving it to the board's members to decide how best to interpret that. Neither "profit" nor "revenue" gets a mention in this North Star document; Toner reportedly once told Altman's executive team that triggering OpenAI's collapse "would actually be consistent with the [nonprofit's] mission."

Maybe the arrangement would have worked in some parallel universe; for years, it appeared to work well enough at OpenAI. But once investors and powerful partners got involved, things became... trickier.

Altman's firing unites Microsoft, OpenAI's employees

After the board abruptly canned Altman on Friday without notifying just about anyone, including the bulk of OpenAI's 770-person workforce, the startup's backers began voicing their discontent in both private and public.

Satya Nadella, the CEO of Microsoft, a major OpenAI collaborator, was allegedly “furious” to learn of Altman’s departure. Vinod Khosla, the founder of Khosla Ventures, another OpenAI backer, said on X (formerly Twitter) that the fund wanted Altman back. Meanwhile, Thrive Capital, the aforementioned Khosla Ventures, Tiger Global Management and Sequoia Capital were said to be contemplating legal action against the board if negotiations over the weekend to reinstate Altman didn't go their way.

Now, from outside appearances, OpenAI employees were firmly aligned with these investors: close to all of them -- including Sutskever, in an apparent change of heart -- signed a letter threatening the board with mass resignation if it opted not to reverse course. But one must consider that these OpenAI employees had a lot to lose should OpenAI crumble -- job offers from Microsoft and Salesforce aside.

OpenAI had been in discussions, led by Thrive, to possibly sell employee shares in a move that would have boosted the company's valuation from $29 billion to somewhere between $80 billion and $90 billion. Altman's sudden exit -- and OpenAI's rotating cast of questionable interim CEOs -- gave Thrive cold feet, putting the sale in jeopardy.

Altman won the five-day battle, but at what cost?

But now after several breathless, hair-pulling days, some form of resolution's been reached. Altman -- along with Brockman, who resigned on Friday in protest over the board's decision -- is back, albeit subject to a background investigation into the concerns that precipitated his removal. OpenAI has a new transitionary board, satisfying one of Altman's demands. And OpenAI will reportedly retain its structure, with investors' profits capped and the board free to make decisions that aren't revenue-driven.

Salesforce CEO Marc Benioff posted on X that "the good guys" won. But that might be premature to say.


Sure, Altman "won," besting a board that accused him of "not [being] consistently candid" with board members and, according to some reporting, putting growth over mission. In one example of this alleged rogueness, Altman was said to have been critical of Toner over a paper she co-authored that cast OpenAI's approach to safety in a critical light -- to the point where he attempted to push her off the board. In another, Altman "infuriated" Sutskever by rushing the launch of AI-powered features at OpenAI's first developer conference.

The board didn't explain themselves even after repeated chances, citing possible legal challenges. And it's safe to say that they dismissed Altman in an unnecessarily histrionic way. But it can't be denied that the directors might have had valid reasons for letting Altman go, at least depending on how they interpreted their humanistic directive.

The new board seems likely to interpret that directive differently.

Currently, OpenAI's board consists of former Salesforce co-CEO Bret Taylor, D'Angelo (the only holdover from the original board) and Larry Summers, the economist and former Harvard president. Taylor is an entrepreneur's entrepreneur, having co-founded numerous companies, including FriendFeed (acquired by Facebook) and Quip (through whose acquisition he came to Salesforce). Meanwhile, Summers has deep business and government connections -- an asset to OpenAI, the thinking around his selection probably went, at a time when regulatory scrutiny of AI is intensifying.

The directors don't seem like an outright "win" to this reporter, though -- not if diverse viewpoints were the intention. While six seats have yet to be filled, the initial three set a rather homogeneous tone; such a board would in fact be illegal in Europe, which mandates companies reserve at least 40% of their board seats for women candidates.

Why some AI experts are worried about OpenAI's new board

I'm not the only one who's disappointed. A number of AI academics turned to X to air their frustrations earlier today.

Noah Giansiracusa, a math professor at Bentley University and the author of a book on social media recommendation algorithms, takes issue both with the board's all-male makeup and the nomination of Summers, who he notes has a history of making unflattering remarks about women.

"Whatever one makes of these incidents, the optics are not good, to say the least -- particularly for a company that has been leading the way on AI development and reshaping the world we live in," Giansiracusa said via text. "What I find particularly troubling is that OpenAI's main aim is developing artificial general intelligence that 'benefits all of humanity.' Since half of humanity are women, the recent events don't give me a ton of confidence about this. Toner most directly represents the safety side of AI, and this has so often been the position women have been placed in, throughout history but especially in tech: protecting society from great harms while the men get the credit for innovating and ruling the world."

Christopher Manning, the director of Stanford's AI Lab, is slightly more charitable than -- but in agreement with -- Giansiracusa in his assessment:

"The newly formed OpenAI board is presumably still incomplete," he told TechCrunch. "Nevertheless, the current board membership, lacking anyone with deep knowledge about responsible use of AI in human society and comprising only white males, is not a promising start for such an important and influential AI company."


Inequity plagues the AI industry, from the annotators who label the data used to train generative AI models to the harmful biases that often emerge in those trained models, including OpenAI's models. Summers, to be fair, has expressed concern over AI's possibly harmful ramifications -- at least as they relate to livelihoods. But the critics I spoke with find it difficult to believe that a board like OpenAI's present one will consistently prioritize these challenges, at least not in the way that a more diverse board would.

It raises the question: Why didn't OpenAI attempt to recruit a well-known AI ethicist like Timnit Gebru or Margaret Mitchell for the initial board? Were they "not available"? Did they decline? Or did OpenAI not make an effort in the first place? Perhaps we'll never know.

OpenAI has a chance to prove itself wiser and worldlier in selecting the five remaining board seats -- or three, should Altman and a Microsoft executive take one each (as has been rumored). If they don't go a more diverse way, what Daniel Colson, the director of the think tank the AI Policy Institute, said on X may well be true: a few people or a single lab can't be trusted with ensuring AI is developed responsibly.

Inside the Coups and Concessions That Brought Altman Back to OpenAI

Shirin Ghaffary, Rachel Metz and Emily Chang

Wed, November 22, 2023

(Bloomberg) -- The braintrust that turned OpenAI into the world’s best-known artificial intelligence startup huddled at Sam Altman’s home in San Francisco on Tuesday for another day of fighting with the company’s board to reinstate him as the chief executive officer in what had already become one of the most dramatic corporate power struggles in Silicon Valley history.

“Still working on it…,” Mira Murati, OpenAI’s chief technology officer and very briefly its interim CEO, wrote in a Slack message on Tuesday to the entire company, which was viewed by Bloomberg News. She included a picture of her and other top executives sitting in a semi-circle at Altman’s home, with the ousted CEO wearing bright green sweatpants and staring intently at his screen. The photo received hundreds of supportive emoji reactions from employees who had spent the previous five days uncertain about their jobs, their equity and the direction of the company.

Late on Tuesday, employees finally got an answer. OpenAI announced that it had reached an agreement for Altman to return as CEO alongside an overhauled board led by Bret Taylor, a former co-CEO of Salesforce Inc. The other directors on the initial board are Larry Summers, the former US Treasury Secretary, and existing member Adam D’Angelo, the co-founder and CEO of Quora Inc. “We are collaborating to figure out the details,” OpenAI said in a post on X, formerly Twitter.

Dozens of people still in OpenAI's San Francisco offices cheered and celebrated, according to a person who was there. On OpenAI's company Slack, employees rejoiced in reaction to a message posted by Murati, which said the company will "get back to work" on Monday. An impromptu party soon followed.

Despite the palpable sense of relief, and the intention to return to business as usual, quite a few details remain unresolved. The final makeup of the board has not been set and there’s still little clarification on what specifically prompted the board to oust Altman in the first place. OpenAI will also have to confront a new reputation as a dysfunctional company that happens to be developing very powerful — and, to some, frightening — technology. But for now, Altman’s return pulls one of the most influential, and highly valued, startups back from the brink.

OpenAI transformed how the public thinks about AI a year ago with the launch of its hugely successful chatbot, ChatGPT, and turned Altman into the face of the artificial intelligence industry. But he was fired by the board after disagreements with members over how quickly to develop and commercialize generative AI, people with knowledge of the matter have said. His firing shocked investors and prompted nearly all employees to threaten to quit and follow Altman to Microsoft Corp., OpenAI’s biggest backer, which had agreed to hire him to head a new in-house AI unit.

OpenAI’s board largely refused to engage with Altman following his firing on Friday, despite the immense pressure to reinstate him. Instead, the board named Twitch co-founder and former chief Emmett Shear its second interim CEO on Sunday night, after Murati advocated in favor of Altman returning to the company. Later that night, Ilya Sutskever, the company’s chief scientist and board member, joined Shear in attempting to corral OpenAI employees for a meeting at its San Francisco headquarters, but hardly anyone showed up, according to a person familiar with the matter who asked not to be named discussing private information.

As of Tuesday – after more than 700 of OpenAI’s 770 employees had signed a letter threatening to quit – Altman was back in discussions with board member D’Angelo, said people with knowledge of the matter. (Sutskever was among the employees who signed the letter, after expressing “regret” for his “participation in the board’s actions.”)

The negotiating parties made key concessions in order to reach an agreement, people familiar with the matter said. Altman agreed not to join the initial board, people said, though some expect he will become a director eventually. The parties also agreed to an independent investigation into Altman and the events surrounding his ouster, people said.

Shear’s decision to join the deliberations was also critical to reaching a deal, one person said. Shear had been vocal about the existential risks of AI, a position that was compelling for the board directors at OpenAI, Bloomberg reported. “Coming into OpenAI, I wasn’t sure what the right path would be,” Shear wrote on X after Altman’s return was announced. “This was the pathway that maximized safety alongside doing right by all stakeholders involved.”As the parties worked to hammer out an agreement, they also had to contend with logistical issues. One board member was on a plane for several hours during negotiations and was out of communication, one person said. There was also a push to resolve the leadership chaos before Thanksgiving, people said, in the hope that employees wouldn’t spend the holiday with uncertainty looming about the state of their jobs.Many workers had more than their jobs on the line. The company was set to orchestrate the sale of employee shares to investors at a valuation of $86 billion, but those plans had been jeopardized by the leadership upheaval. Some people at the company stood to make millions in the deal, which wouldn’t happen if more than 90% of OpenAI’s staff quit. (The tender offer, which was set to be led by Thrive Capital, is now back on track, according to people familiar with the matter.)One competing AI company said that it had fielded multiple nervous inquiries from OpenAI employees asking about potential jobs, according to a person who asked not to be identified discussing private overtures. Several tech executives, such as Salesforce CEO Marc Benioff, made it clear on social media that they’d be happy to have them. And some rival AI companies experienced an uptick in demand from customers.Hours before an agreement was announced on Tuesday, an OpenAI executive encouraged employees to “get back to shipping” products. Employees, who have this week off, were told they could also expense pizza. 
“To call this a challenging last few days would be an understatement,” a company vice president, Peter Deng, wrote in a Slack message viewed by Bloomberg News. He stressed that the company was committed to its mission. “Raise a slice and share a photo in the thread so we can enjoy this moment together.”

After Altman’s return was announced, OpenAI’s General Counsel Che Chang invited employees to the office for a “quick celebration” with Altman, according to a Slack message. By Wednesday morning, however, the celebrations had died down. Employees were exhausted from the days-long saga, one person said, and most were going into “Thanksgiving mode.”

--With assistance from Katie Roof, Edward Ludlow and Gillian Tan.

Bloomberg Businessweek

Sam Altman Wasn’t Ousted From OpenAI Due to ‘Malfeasance,’ COO Says

Published 11/18/23

OpenAI chief operating officer Brad Lightcap said Sam Altman’s departure as chief executive was due to a “breakdown in communication” between Altman and the company’s board of directors.

“We can say definitively that the board’s decision was not made in response to malfeasance or anything related to our financial, business, safety, or security/privacy practices,” Lightcap wrote in a memo sent to employees early Saturday, published by CNBC. “This was a breakdown in communication between Sam and the board. Our position as a company remains extremely strong, and Microsoft remains fully committed to our partnership.”

Lightcap wrote that the announcement of Altman’s departure “took us all by surprise” and that leadership has “had multiple conversations with the board to try to better understand the reasons and process behind their decision.”

OpenAI’s board announced on Friday that Altman, the startup’s co-founder, was departing as CEO.

Following a deliberative review process, the directors concluded that Altman “was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI.”

Mira Murati, the company's chief technology officer, was appointed as interim CEO while the search for a permanent successor is underway. Murati joined OpenAI in 2018 and became chief technology officer last year. Lightcap wrote in Saturday’s memo that while Murati has leadership’s full support as CEO, they still share employees’ concerns about how Altman’s removal was handled.

“I’m sure you all are feeling confusion, sadness, and perhaps some fear,” Lightcap wrote. “We are fully focused on handling this, pushing toward resolution and clarity, and getting back to work.”

Altman co-founded OpenAI as a nonprofit in 2015 after securing $1 billion in funding commitments from the likes of Elon Musk, LinkedIn's Reid Hoffman and other notable figures in tech.

Its AI chatbot, ChatGPT, exploded in popularity this year, reaching 100 million active users within a year of its launch.


Analysis by David Goldman

Updated 7:42 AM EST, Mon November 20, 2023

Former OpenAI CEO Sam Altman

Justin Sullivan/Getty Images

New York (CNN) —

OpenAI’s overseers worried that the company was making the technological equivalent of a nuclear bomb, and its caretaker, Sam Altman, was moving so fast that he risked a global catastrophe.

So the board fired him. That may ultimately have been the logical solution.

But the manner in which Altman was fired – abruptly, opaquely and without warning to some of OpenAI’s largest stakeholders and partners – defied logic. And it risked inflicting more damage than if the board had taken no such action at all.

A company’s board of directors has an obligation, first and foremost, to its shareholders. OpenAI’s most important shareholder is Microsoft, the company that gave Altman & Co. $13 billion to help Bing, Office, Windows and Azure leapfrog Google and stay ahead of Amazon, IBM and other AI wannabes.

Yet Microsoft was not informed of Altman’s firing until “just before” the public announcement, according to CNN contributor Kara Swisher, who spoke to sources knowledgeable about the board’s ousting of its CEO. Microsoft’s stock sank after Altman was let go.

Employees weren’t told the news ahead of time, either. Neither was Greg Brockman, the company’s co-founder and former president, who said in a post on X that he found out about Altman’s firing moments before it happened. Brockman, a key supporter of Altman and his strategic leadership of the company, resigned Friday. Other Altman loyalists also headed for the exits.

Suddenly, OpenAI was in crisis. Reports that Altman and former OpenAI staffers loyal to him were about to start their own venture risked undoing everything that the company had worked so hard to achieve over the past several years.

So a day later, the board reportedly asked for a mulligan and tried to woo Altman back. It was a shocking turn of events and an embarrassing self-own by a company that is widely regarded as the most promising producer of the most exciting new technology.

Strange board structure

The bizarre structure of OpenAI’s board complicated matters.

The company is a nonprofit. But Altman, Brockman and Chief Scientist Ilya Sutskever in 2019 formed OpenAI LP, a for-profit entity that exists within the larger company’s structure. That for-profit company took OpenAI from worthless to a valuation of $90 billion in just a few years – and Altman is largely credited as the mastermind of that plan and the key to the company’s success.

Yet a company with big backers like Microsoft and venture capital firm Thrive Capital has an obligation to grow its business and make money. Investors want to ensure they’re getting bang for their buck, and they’re not known to be a patient bunch.

That probably led Altman to push the for-profit company to innovate faster and go to market with products. In the great “move fast and break things” tradition of Silicon Valley, those products don’t always work so well at first.

That’s fine, perhaps, when it’s a dating app or a social media platform. It’s something entirely different when it’s a technology so good at mimicking human speech and behavior that it can fool people into believing its fake conversations and images are real.

And that’s what reportedly scared the company’s board, which remained majority controlled by the nonprofit wing of the company. Swisher reported that OpenAI’s recent developer conference served as an inflection point: Altman announced that OpenAI would make tools available so anyone could create their own version of ChatGPT.

For Sutskever and the board, that was a step too far.

A warning not without merit

By Altman’s own account, the company was playing with fire.

When Altman set up OpenAI LP four years ago, the new company noted in its charter that it remained “concerned” about AI’s potential to “cause rapid change” for humanity. That could happen unintentionally, with the technology performing malicious tasks because of bad code – or intentionally by people subverting AI systems for evil purposes. So the company pledged to prioritize safety – even if that meant reducing profit for its stakeholders.

Altman also urged regulators to set limits on AI to prevent people like him from inflicting serious damage on society.

Proponents of AI believe the technology has the potential to revolutionize every industry and better humanity in the process. It has the potential to improve education, finance, agriculture and health care.

But it also has the potential to take jobs away from people – 14 million positions could disappear in the next five years, the World Economic Forum warned in April. AI is particularly adept at spreading harmful disinformation. And some, including former OpenAI board member Elon Musk, fear the technology will surpass humanity in intelligence and could wipe out life on the planet.

Not how to handle a crisis

With those threats – real or perceived – it’s no wonder the board was concerned that Altman was moving at too rapid a pace. It may have felt obligated to get rid of him and replace him with someone who, in its view, would be more careful with the potentially dangerous technology.

But OpenAI isn’t operating in a vacuum. It has stakeholders, some of them with billions poured into the company. And the so-called adults in the room were acting, as Swisher put it, like a “clown car that crashed into a gold mine,” borrowing a famous line attributed to Meta CEO Mark Zuckerberg about Twitter.

Involving Microsoft in the decision, informing employees, working with Altman on a dignified exit plan…all of those would have been solutions more typically employed by a board of a company OpenAI’s size – and all with potentially better outcomes.

Microsoft, despite its massive stake, does not hold an OpenAI board seat, because of the company’s strange structure. Now that could change, according to multiple news reports, including the Wall Street Journal and New York Times. Among the company’s demands, along with the return of Altman, is a seat at the table.

With OpenAI’s ChatGPT-like capabilities embedded in Bing and other core products, Microsoft believed it had invested wisely in the promising new tech of the future. So it must have come as a shock to CEO Satya Nadella and his crew when they learned about Altman’s firing along with the rest of the world on Friday evening.

The board angered a powerful ally and could be forever changed because of the way it handled Altman’s ouster. It could end up with Altman back at the helm, a for-profit company on its nonprofit board – and a massive culture shift at OpenAI.

Alternatively, it could become a competitor to Altman, who may ultimately decide to start a new company and drain talent from OpenAI.

Either way, OpenAI is probably in a worse position now than it was on Friday before it fired Altman. And it was a problem it could have avoided, ironically, by slowing down.

Sam Altman was fundraising in the Middle East for a new chip venture to rival Nvidia before OpenAI’s board ousted him

BY EDWARD LUDLOW, ASHLEE VANCE AND BLOOMBERG

November 19, 2023

Sam Altman was fired as OpenAI CEO on Friday by the company's board.

JUSTIN SULLIVAN/GETTY IMAGES

In the weeks leading up to his shocking ouster from OpenAI, Sam Altman was actively working to raise billions from some of the world’s largest investors for a new chip venture, according to people familiar with the matter.

Altman had been traveling to the Middle East to fundraise for the project, which was code-named Tigris, the people said. The OpenAI chief executive officer planned to spin up an AI-focused chip company that could produce semiconductors that compete against those from Nvidia Corp., which currently dominates the market for artificial intelligence tasks. Altman’s chip venture is not yet formed and the talks with investors are in the early stages, said the people, who asked not to be named as the discussions were private.

Altman has also been looking to raise money for an AI-focused hardware device that he’s been developing in tandem with former Apple Inc. design chief Jony Ive. Altman has had talks about these ventures with SoftBank Group Corp., Saudi Arabia’s Public Investment Fund, Mubadala Investment Company and others, as he sought tens of billions of dollars for these new companies, the people said.

Many details of the scale and focus of Altman’s chip ambitions as well as the project’s codename have not been previously reported.

Altman’s fundraising efforts came at an important moment for the AI startup. OpenAI has been working to finalize a tender offer, led by Thrive Capital, that would let employees sell their shares at an $86 billion valuation. SoftBank and others had hoped to be part of this deal, one person said, but were put on a waitlist for a similar deal at a later date. In the interim, Altman urged investors to consider his new ventures, two people said.

A representative for Saudi Arabia’s PIF did not immediately respond to a request for comment. OpenAI, SoftBank and Mubadala declined to comment.

OpenAI said Friday that Altman was ousted from his role after an internal review found “he was not consistently candid in his communications with the board.” The board and Altman had differences of opinion on AI safety, the speed of development of the technology and the commercialization of the company, according to a person familiar with the matter. Altman’s ambitions and side ventures added complexity to an already strained relationship with the board.

In a memo to staff, Brad Lightcap, OpenAI’s chief operating officer, said: “We can say definitively that the board’s decision was not made in response to malfeasance or anything related to our financial, business, safety, or security/privacy practices. This was a breakdown in communication between Sam and the board.”

OpenAI’s board is currently under pressure from investors to reinstate Altman, with one possibility being that the board resigns. Even if Altman returns, however, he may still need to navigate his side ventures with the assent of OpenAI’s board.

Altman’s pitch was for a startup that would aim to build Tensor Processing Units, or TPUs: semiconductors designed to handle high-volume, specialized AI workloads. The goal is to provide lower-cost competition to market incumbent Nvidia and, according to people familiar, aid OpenAI by lowering the ongoing costs of running its own services like ChatGPT and Dall-E.

Custom-designed chips like TPUs are seen as having the potential to one day outperform the AI accelerators made by Nvidia, which are coveted by artificial intelligence companies, but the development timeline is long and complex.

A number of prominent venture firms, including some existing investors in OpenAI, are ready to back any new venture Altman forms, people familiar said. Microsoft Corp., OpenAI’s biggest investor, is also interested in backing Altman’s chips venture, according to people familiar. Microsoft declined to comment.

In a statement on X, formerly Twitter, venture capitalist Vinod Khosla said that his firm wanted Altman “back at OpenAI but will back him in whatever he does next.”

— With assistance from Dina Bass and Rachel Metz