Google is ‘all hands on deck’ to develop AI products to take on ChatGPT

Story by MobileSyrup • Thursday

OpenAI’s ChatGPT is a machine-learning-based AI chatbot that generates human-like responses based on the input it receives. The chatbot has taken the world by storm, having crossed one million users earlier this month.

Google has noticed the ChatGPT storm and is reportedly taking an ‘all hands on deck’ approach to respond.

As reported by The New York Times, Google has declared a “code red,” and has tasked several departments to “respond to the threat that ChatGPT poses.”

“From now until a major conference expected to be hosted by Google in May, teams within Google’s research, Trust and Safety, and other departments have been reassigned to help develop and release new A.I. prototypes and products.”

Google will most likely describe its AI advances at its annual I/O conference, where it shows off progress made on LaMDA, Google’s own AI chatbot.

Alphabet CEO Sundar Pichai hinted the company has “a lot” planned in the space in 2023 but added that “This is an area where we need to be bold and responsible, so we have to balance that,” according to a recent CNBC report.

Earlier this year, Google suspended one of its engineers, Blake Lemoine, after he claimed the company’s ‘LaMDA’ chatbot system had achieved sentience.

Image credit: Google

Aaron Mok

Wed, December 21, 2022

Google CEO Sundar Pichai told some teams to switch gears and work on developing artificial-intelligence products, The New York Times reported.

Brandon Wade/Reuters

Google has issued a "code red" over the rise of the AI bot ChatGPT, The New York Times reported.

CEO Sundar Pichai redirected some teams to focus on building out AI products, the report said.

The move comes as talks abound over whether ChatGPT could one day replace Google's search engine.

Google's management has issued a "code red" amid the launch of ChatGPT — a buzzy conversational-artificial-intelligence chatbot created by OpenAI — as it's sparked concerns over the future of Google's search engine, The New York Times reported Wednesday.

Sundar Pichai, the CEO of Google and its parent company, Alphabet, has participated in several meetings around Google's AI strategy and directed numerous groups in the company to refocus their efforts on addressing the threat that ChatGPT poses to its search-engine business, according to an internal memo and audio recording reviewed by The Times.

In particular, teams in Google's research and Trust and Safety divisions, among other departments, have been directed to switch gears to assist in the development and launch of AI prototypes and products, The Times reported. Some employees have been tasked with building AI products that generate art and graphics, similar to OpenAI's DALL-E, which is used by millions of people, according to The Times.

A Google spokesperson did not immediately respond to a request for comment.

Google's move to build out its AI-product portfolio comes as Google employees and experts alike debate whether ChatGPT (whose maker, OpenAI, is run by Sam Altman, a former Y Combinator president) has the potential to replace the search engine and, in turn, hurt Google's ad-revenue business model.

Sridhar Ramaswamy, who oversaw Google's ad team between 2013 and 2018, said ChatGPT could prevent users from clicking on Google links with ads, which generated $208 billion — 81% of Alphabet's overall revenue — in 2021, Insider reported.

ChatGPT, which amassed over 1 million users five days after its public launch in November, can generate singular answers to queries in a conversational, humanlike way by collecting information from millions of websites. Users have asked the chatbot to write a college essay, provide coding advice, and even serve as a therapist.

But some have been quick to say the bot is often riddled with errors. ChatGPT is unable to fact-check what it says and can't distinguish between a verified fact and misinformation, AI experts told Insider. It can also make up answers, a phenomenon that AI researchers call "hallucinations."

The bot is also capable of generating racist and sexist responses, Bloomberg reported.

The technology's high margin of error and vulnerability to toxicity are some of the reasons Google is hesitant to release its AI chatbot LaMDA, short for Language Model for Dialogue Applications, to the public, The Times reported. A recent CNBC report said Google execs were reluctant to release it widely in its current state because of concerns over "reputational risk."

Chatbots are "not something that people can use reliably on a daily basis," Zoubin Ghahramani, who leads Google's AI lab, Google Brain, told The Times before ChatGPT was released.

Instead, Google may focus on improving its search engine over time rather than taking it down, experts told The Times.

As Google reportedly works full steam ahead on new AI products, we might get an early look at them at Google's annual developer conference, I/O, which is expected to take place in May.

AI can now write like a human. Some teachers are worried.

Mike Bebernes

·Senior Editor

Wed, December 21, 2022

“The 360” shows you diverse perspectives on the day’s top stories and debates

What’s happening

Artificial intelligence has advanced at an extraordinary pace over the past few years. Today, these incredibly complex algorithms are capable of creating award-winning art, penning scripts that can be turned into real films and, in the latest step that has dazzled people in the tech and media industries, mimicking writing at a level so convincing that it’s impossible to tell whether the words were put together by a human or a machine.

A few weeks ago, the research company OpenAI released ChatGPT, a language model that can construct remarkably well-structured arguments based on simple prompts provided by a user. The system — which uses a massive repository of online text to predict what words should come next — is able to create new stories in the style of famous writers, write news articles about itself and produce essays that could easily receive a passing grade in most English classes.

That last use has raised concern among academics, who worry about the implications of an easily accessible platform that, in a matter of seconds, can put together prose on par with — if not better than — the writing of a typical student.

Cheating in school is not new, but ChatGPT and other language models are categorically different from the hacks students have used to cut corners in the past. The writing these language models produce is completely original, meaning that it can’t be detected by even the most sophisticated plagiarism software. The AI also goes beyond just providing students with information they should be finding themselves. It organizes that information into a complete narrative.

Why there’s debate

Some educators see ChatGPT as a sign that AI will soon lead to the demise of the academic essay, a crucial tool used in schools at every level. They argue that it will simply be impossible to root out cheating, since there will be no tools to determine whether writing is authentic or machine-made. But beyond potential academic integrity issues, some teachers worry that the true value of learning to write — like analysis, critical thinking, creativity and the ability to structure an argument — will be lost when AI can do all those complex things in a matter of seconds.

Others say these concerns are overblown. They make the case that, as impressive as AI writing is, its prose is too rigid and formulaic to pass as original work from most students — especially those in lower grades. ChatGPT also has no ability to tell truth from fiction and often fabricates information to fill in blanks in its writing, which could make it easy to spot during grading.

Some even celebrate advances in AI, viewing them as an opportunity to improve the way we teach children to write and make language more accessible. They believe AI text generators could be a major tool to help students who struggle with writing, either due to disabilities or because English isn’t their first language, to be judged on the same terms as their peers. Others say AI will force schools to think more creatively about how they teach writing and may inspire them to abandon a curriculum that emphasizes structure over process and creativity.

What’s next

When asked whether AI will kill the academic essay, ChatGPT expressed no concern. It wrote: “While AI technology has made great strides in natural language processing and can assist with tasks such as proofreading and grammar checking, it is not currently capable of fully replicating the critical thinking and analysis that is a key part of academic writing.”

With the technology just emerging, it may be several years before it becomes clear whether that contention will prove correct.

Perspectives

AI could kill the academic essay for good

“The majority of students do not see writing as a worthwhile skill to cultivate. … They have no interest in exploring nuance in tone and rhythm. … Which is why I wonder if this may be the end of using writing as a benchmark for aptitude and intelligence.” — Daniel Herman, Atlantic

AI can’t replace the most important parts of writing education

“Contrary to popular belief, we writing teachers believe more in the process of writing than the product. If we have done our jobs well and students have learned, reading that final draft during this time of year is often a formality. The process tells us the product will be amazing.” — Matthew Boedy, Atlanta Journal-Constitution

AI will create a cheating crisis

“An unexpected insidious academic threat is on the scene: a revolution in artificial intelligence has created powerful new automatic writing tools. These are machines optimised for cheating on school and university papers, a potential siren song for students that is difficult, if not outright impossible, to catch.” — Rob Reich, Guardian

Any competent teacher can easily spot AI-generated writing

“Many students would be hard-pressed to read with comprehension AI-generated essays, let alone pass them off as their own work.” — Robert Pondiscio, American Enterprise Institute

AI can make writing more accessible to everyone

“I think there's a lot of potential for helping people express themselves in ways that they hadn't necessarily thought about. This could be particularly useful for students who speak English as a second language, or for students who aren't used to the academic writing style.” — Leah Henrickson, digital media researcher, to Business Insider

Something incredibly important is lost when people don’t learn to write the hard way

“We lose the journey of learning. We might know more things but we never learned how we got there. We’ve said forever that the process is the best part and we know that. The satisfaction is the best part. That might be the thing that’s nixed from all of this. … I don’t know what a person is like if they’ve never had to struggle through learning. I don’t know the behavioral implications of that.” — Peter Laffin, writing instructor, to Vice

AI can enhance creativity by helping students sort through the routine parts of writing

“Keep in mind, language models are just math and massive processing power, without any real cognition or meaning behind their text generation. Human creativity is far more powerful, and who knows what can be unlocked if such creativity is augmented with AI?” — Marc Watkins, Inside Higher Ed

Educators may not be able to rely on essays to evaluate students much longer

“AI is here to stay whether we like it or not. Provide unscrupulous students the ability to use these shortcuts without much capacity for the educator to detect them, combined with other crutches like outright plagiarism, and companies that sell papers, homework, and test answers, and it’s a recipe for—well, not disaster, but the further degradation of a type of assignment that has been around for centuries.” — Aki Peritz, Slate

AI won’t kill anything we’ll miss

“By privileging surface-level correctness and allowing that to stand in for writing proficiency, we've denied a generation (or two) of students the chance to develop their writing and critical thinking skills. … Now we have GPT3, which, in seconds, can generate surface-level correct prose on just about any prompt. That this seems like it could substitute for what students produce in school is mainly a comment on what we value when we assign and assess writing in school contexts.” — John Warner, author of Why They Can’t Write

Educators shouldn’t overreact, but they need to have a plan

“Whenever there’s a new technology, there’s a panic around it. It’s the responsibility of academics to have a healthy amount of distrust — but I don’t feel like this is an insurmountable challenge.” — Sandra Wachter, technology researcher, to Nature

Quora launches Poe, a way to talk to AI chatbots like ChatGPT

Kyle Wiggers

Wed, December 21, 2022

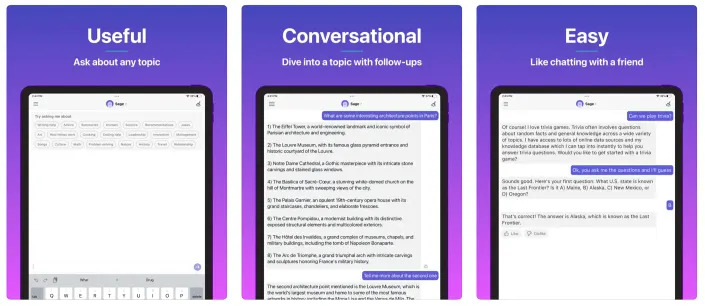

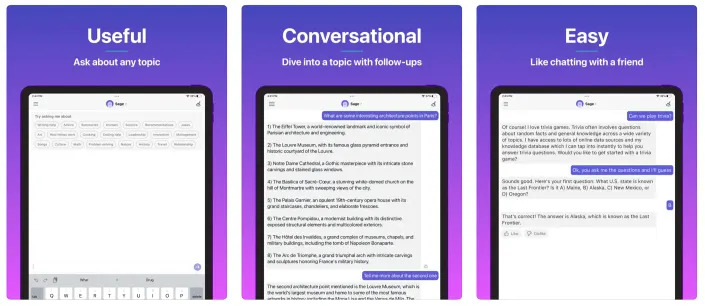

Signaling its interest in text-generating AI systems like ChatGPT, Quora this week launched a platform called Poe that lets people ask questions, get instant answers and have a back-and-forth dialogue with AI chatbots.

Short for "Platform for Open Exploration," Poe -- which is invite-only and currently only available on iOS -- is "designed to be a place where people can easily interact with a number of different AI agents," a Quora spokesperson told TechCrunch via text message.

"We have learned a lot about building consumer internet products over the last 12 years building and operating Quora. And we are specifically experienced in serving people who are looking for knowledge," the spokesperson said. "We believe much of what we’ve learned can be applied to this new domain where people are interfacing with large language models."

Poe, then, isn't an attempt to build a ChatGPT-like AI model from scratch. ChatGPT -- which has an aptitude for answering questions on topics ranging from poetry to coding -- has been the subject of controversy for its ability to sometimes give answers that sound convincing but aren't factually true. Earlier this month, Q&A coding site Stack Overflow temporarily banned users from sharing content generated by ChatGPT, saying the AI made it too easy for users to generate responses and flood the site with dubious answers.

Quora might've found itself in hot water if, for instance, it trained a chatbot on its platform's vast collection of crowdsourced questions and answers. Users might've taken issue with their content being used that way -- particularly given that some AI systems have been shown to regurgitate parts of the data on which they were trained (e.g. code). Some parties have protested against generative art systems like Stable Diffusion and DALL-E 2 and code-generating systems such as GitHub's Copilot, which they see as stealing and profiting from their work.

To wit, Microsoft, GitHub and OpenAI are being sued in a class action lawsuit that accuses them of violating copyright law by allowing Copilot to regurgitate sections of licensed code without providing credit. And on the art community portal ArtStation, which earlier this year began allowing AI-generated art on its platform, members began widely protesting by placing "No AI Art" images in their portfolios.

Quora Poe, a way to talk to chatbots like ChatGPT

Image Credits: Quora

At launch, Poe provides access to several text-generating AI models, including ChatGPT. (OpenAI doesn't presently offer a public API for ChatGPT; the Quora spokesperson refused to say whether Quora has a partnership with OpenAI for Poe or another form of early access.) Poe's like a text messaging app, but for AI models -- users can chat with the models separately. Within the chat interface, Poe provides a range of different suggestions for conversation topics and use cases, like "writing help," "cooking," "problem solving" and "nature."

Poe ships with only a handful of models at launch, but Quora plans to provide a way for model providers -- e.g. companies -- to submit their models for inclusion in the near future.

"We think this will be a fun way for people to interact with and explore different language models. Poe is designed to be the best way for someone to get an instant answer to any question they have, using natural conversation," the spokesperson said. "There is an incredible amount of research and development going into advancing the capabilities of these models, but in order to bring all that value to people around the world, there is a need for good interfaces that are easy to use. We hope we can provide that interface so that as all of this development happens over the years ahead, everyone around the world can share as much as possible in the benefits."

It's pretty well-established that AI chatbots, including ChatGPT, can generate biased, racist and otherwise toxic content -- not to mention malicious code. Quora's not taking steps itself to combat this, instead relying on the providers of the models in Poe to moderate and filter the content themselves.

"The model providers have put in a lot of effort to prevent the bots from generating unsafe responses," the spokesperson said.

The spokesperson was quite clear that Poe isn't a part of Quora for now, nor will it necessarily be in the future. Quora sees it as a separate, independent project, much like Google's AI Test Kitchen, that it plans to iterate on and refine over time.

When asked about the business motivations behind Poe, the spokesperson demurred, saying that it's early days. But it isn't tough to conceive how Quora, which makes most of its money through paywalls and advertising, might build premium features into Poe if it grows.

For now, though, Quora says it's focused on working out scalability, getting feedback from beta testers and addressing issues that come up.

"The whole field is moving very rapidly now and we’re more interested in figuring out what problems we can solve for people with Poe," the spokesperson said.


Image Credits: Quora

At launch, Poe provides access to several text-generating AI models, including ChatGPT. (OpenAI doesn't presently offer a public API for ChatGPT; the Quora spokesperson refused to say whether Quora has a partnership with OpenAI for Poe or another form of early access.) Poe's like a text messaging app, but for AI models -- users can chat with the models separately. Within the chat interface, Poe provides a range of different suggestions for conversation topics and use cases, like "writing help," "cooking," "problem solving" and "nature."

Poe ships with only a handful of models at launch, but Quora plans to provide a way for model providers -- e.g. companies -- to submit their models for inclusion in the near future.

"We think this will be a fun way for people to interact with and explore different language models. Poe is designed to be the best way for someone to get an instant answer to any question they have, using natural conversation," the spokesperson said. "There is an incredible amount of research and development going into advancing the capabilities of these models, but in order to bring all that value to people around the world, there is a need for good interfaces that are easy to use. We hope we can provide that interface so that as all of this development happens over the years ahead, everyone around the world can share as much as possible in the benefits."

It's pretty well-established that AI chatbots, including ChatGPT, can generate biased, racist and otherwise toxic content -- not to mention malicious code. Quora's not taking steps itself to combat this, instead relying on the providers of the models in Poe to moderate and filter the content themselves.

"The model providers have put in a lot of effort to prevent the bots from generating unsafe responses," the spokesperson said.

The spokesperson was quite clear that Poe isn't a part of Quora for now -- nor will it necessarily be in the future. Quora sees it as a separate, independent project, much like Google's AI Test Kitchen, that it plans to iterate on and refine over time.

When asked about the business motivations behind Poe, the spokesperson demurred, saying that it's early days. But it isn't hard to imagine how Quora, which makes most of its money through paywalls and advertising, might build premium features into Poe if it grows.

For now, though, Quora says it's focused on working out scalability, getting feedback from beta testers and addressing issues that come up.

"The whole field is moving very rapidly now and we’re more interested in figuring out what problems we can solve for people with Poe," the spokesperson said.
