The Internet has been on fire since the August 4 discovery (disclosed publicly by Matthew Green) that Apple will be monitoring photos uploaded to iCloud for child sexual abuse material (CSAM). Some see this as a great move by Apple that will protect children. Others view it as a potentially dangerous slide away from privacy that may not actually protect children and could, in fact, cause some children to come to harm.

How does this work?

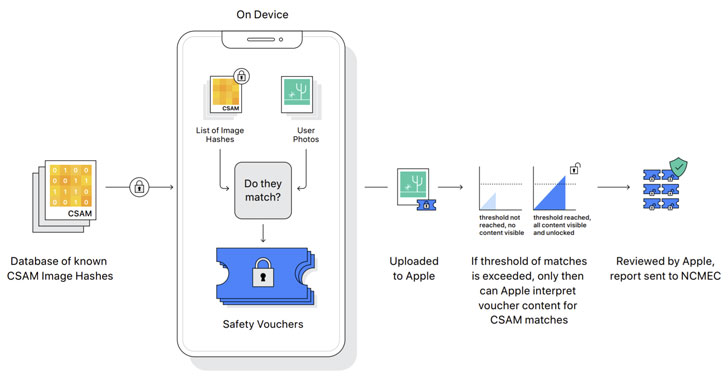

It’s important to understand that, contrary to what it sounds like, Apple will not be rifling through all your photos on iCloud. All scanning for CSAM will be done on the device itself, by an artificial intelligence algorithm. That system, called neuralMatch, will perform two functions.

The first is to create a hash of each photo on the device before it is uploaded to iCloud. (A “hash” is a computed value that should be a unique representation of a file, but that cannot be reversed to obtain a copy of the file itself.) This hash will be compared, on the device, to a database of hashes of known CSAM. The result is recorded cryptographically and stored on iCloud alongside the photo. If the user passes some minimum threshold of photos that match known CSAM hashes, Apple will be able to access those photos and the iCloud account will be shut down.
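The matching step described above can be sketched in a few lines of Python. This is only an illustration of the idea, not Apple's implementation: it uses SHA-256 from the standard library as a stand-in hash, the function names and the threshold value are hypothetical, and it assumes that "passing" the threshold means meeting or exceeding it.

```python
import hashlib


def file_hash(data: bytes) -> str:
    """Return a hex digest for the photo bytes (SHA-256 is a stand-in hash)."""
    return hashlib.sha256(data).hexdigest()


def count_matches(photos: list[bytes], known_hashes: set[str]) -> int:
    """Count how many photos hash to a value in the known-hash database."""
    return sum(1 for photo in photos if file_hash(photo) in known_hashes)


def account_flagged(photos: list[bytes], known_hashes: set[str], threshold: int) -> bool:
    """Flag the account once the number of matches reaches the threshold.

    Assumption: "passing the threshold" means matches >= threshold.
    """
    return count_matches(photos, known_hashes) >= threshold


# Hypothetical data: two "known" items and one unrelated photo.
known = {file_hash(b"known-item-1"), file_hash(b"known-item-2")}
library = [b"holiday-photo", b"known-item-1", b"known-item-2"]

print(count_matches(library, known))
print(account_flagged(library, known, threshold=3))
```

Note that a single match does nothing on its own in this sketch. Only the count across the whole library matters, which is the point of having a threshold.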

The second function is to protect children under 13 against sending or receiving CSAM images. Apple’s AI will attempt to detect whether images sent or received via iMessage have such content. In such cases, the child will be warned, and if they choose to view or send the content anyway, their parents will be notified. For children between 13 and 18, the same warning will be shown, but parents will apparently not be notified. This all relies on the child’s Apple ID being managed under a family account.
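The rules in the paragraph above amount to a small decision table, sketched here in Python. The names `Outcome` and `message_outcome` are hypothetical, and the age boundaries (13 inclusive up to, but not including, 18) are an assumption, since the description does not spell them out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    warn_child: bool
    notify_parents: bool


def message_outcome(age: int, image_flagged: bool,
                    child_proceeds: bool, family_managed: bool) -> Outcome:
    """Decide what happens when an image is sent or received via iMessage."""
    # Nothing happens unless the Apple ID is managed under a family account,
    # the image was flagged, and the user is a minor (assumed: under 18).
    if not family_managed or not image_flagged or age >= 18:
        return Outcome(warn_child=False, notify_parents=False)
    # Under 13: warn, and notify parents only if the child proceeds anyway.
    if age < 13:
        return Outcome(warn_child=True, notify_parents=child_proceeds)
    # 13 to 17: same warning, but no parental notification.
    return Outcome(warn_child=True, notify_parents=False)


print(message_outcome(10, image_flagged=True, child_proceeds=True, family_managed=True))
print(message_outcome(15, image_flagged=True, child_proceeds=True, family_managed=True))
```

Written this way, it is easy to see that everything hinges on `image_flagged`. If the detector is wrong, the warning and any notification are wrong too.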

Why should I worry about monitoring a child’s texts?

There are a lot of potential problems with this. This can be a serious violation of a child’s privacy, and the behavior of this feature is predicated on an assumption that may not be true: that a child’s relationship with their parents is a healthy one. This is not always the case. Parents or guardians could be complicit in the creation of CSAM. Further, an abusive parent could see a warning about a legitimate photo that was falsely identified as CSAM, and could harm the child based on false information. (Yes, the parent would have the option to view the photo, but it’s possible a parent may choose not to. I certainly wouldn’t want such an image of my child in my head.)

Also, consider the fact that this applies to being sent an image, not just sending an image. Imagine the trouble a bully or scammer could cause by sending CSAM, or the damage that could be done if a child of an abusive parent were sent a CSAM image and viewed it without fully understanding why it was being blocked or what the consequences would be!

And finally, as the EFF’s Eva Galperin pointed out on Twitter, there is also the danger that this well-intentioned functionality “is going to out a lot of queer kids to their homophobic parents.”

What’s the problem with monitoring photos uploaded to iCloud?

Although comparing a photo’s hash against a database of known hashes has a low chance of producing a false positive, it can definitely happen. Apple claims that there should be a one in one trillion chance of false positives, but it remains to be seen if that is true in practice.
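To get a feel for what a one in one trillion rate would mean at scale, here is a rough back-of-the-envelope calculation in Python. It assumes the claimed rate applies independently per account, and the one billion account figure is purely hypothetical, chosen only to make the arithmetic concrete.

```python
import math


def expected_false_flags(rate: float, accounts: int) -> float:
    """Expected number of wrongly flagged accounts: rate times accounts."""
    return rate * accounts


def prob_at_least_one(rate: float, accounts: int) -> float:
    """Probability that at least one account is wrongly flagged.

    Computes 1 - (1 - rate) ** accounts using log1p/expm1, which stays
    accurate when the rate is extremely small.
    """
    return -math.expm1(accounts * math.log1p(-rate))


claimed_rate = 1e-12                    # Apple's claimed false positive chance
hypothetical_accounts = 1_000_000_000   # assumption, not a real figure

print(expected_false_flags(claimed_rate, hypothetical_accounts))
print(prob_at_least_one(claimed_rate, hypothetical_accounts))
```

If the claimed rate holds, wrongly flagged accounts would be very rare even across a huge user base. The calculation says nothing about whether the real-world rate matches the claim, which is the open question.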

Apple is providing a process to appeal in cases where an account is wrongly closed because of false positives. However, anyone who has been involved in reviews and appeals with Apple knows they don’t always go your way, nor are they always speedy. Sometimes they are, sometimes not. Time will tell how big a problem this is.

What about the privacy issues?

For a company that has constantly talked about protecting users’ privacy, this seems like a reversal. However, Apple has clearly put a lot of thought into this, and is emphasizing the fact that none of this happens on their servers. Apple states that all the processing happens on the device, and that it does not see the images (unless it’s determined that abuse is happening).

Further, CSAM is a big problem. I don’t think there’s anyone—other than pedophiles—who wouldn’t want to see all production of and trafficking in CSAM brought to an end. So many will praise Apple for taking this action.

This doesn’t mean there aren’t issues, though. Many view this as a first step onto a slippery slope. Blocking CSAM is a good thing, but there’s nothing to prevent the tools that Apple has built from being used for other things. For example, suppose the US government puts pressure on Apple to start detecting terrorism-related content. What exactly would that look like, if Apple decided to—or was forced to—comply? What would happen if a law-abiding person’s iCloud account was flagged as being involved in terrorist activity due to false positives on their photos? And what about tracking more prosaic crimes, such as drug use?

I could go on, as there are lots of things that governments of the world—including the US government—might want Apple to track. Although I tend to be willing to extend trust to Apple, this may not be something that is entirely within Apple’s control. They are a US company, and it’s possible for future US law to force Apple to do things their leadership wouldn’t have wanted to do.

We’ve also seen Apple bend to the desires of governments before. For example, Apple has conceded to demands from the government of China that are counter to Apple’s philosophy. Although the cynical point to this as evidence that Apple is more interested in profits from China’s large market (and they’re not entirely wrong), there’s more to it than that. Most of Apple’s manufacturing is done in China, and they’d be in a huge pile of trouble if China decided to shut down Apple’s ability to do business there. This means China has leverage it can use to make Apple bend to its wishes, at least within China.

Why is Apple doing this?

I’m sure there will be a lot of debate and speculation on this topic. Part of it is undoubtedly a desire to protect children and prevent distribution of CSAM. Part of it may be marketing.

To me, though, this all boils down to a political move. Apple has been a fantastic advocate for encryption and privacy, even going to the extreme of refusing the FBI’s demands relating to gaining access to a suspected terrorist’s iPhone.

Law enforcement commonly asks tech companies to give them “backdoors.” Essentially, this boils down to some kind of private access to users’ data, in theory accessible only to law enforcement agents. The problem with such backdoors is that they don’t tend to remain secret. Hackers can find them and gain access, or rogue government agents can abuse or even sell their access. There is no such thing as a secure backdoor.

Apple’s refusal to create backdoors for government access has angered many who believe that Apple is preventing law enforcement from doing their jobs. A common refrain for people trying to push for backdoors is the old standby, “but think of the children!” CSAM is frequently brought up as a reason why access to messaging, file storage, etc., is needed. This is actually a somewhat clever argument, since it makes it seem (falsely) as though arguing against backdoors is also arguing in support of pedophiles.

By taking specific action against CSAM, Apple has effectively neutered this argument. Politicians will no longer be able to (in essence) accuse Apple of protecting pedophiles as a means of pushing for legislation to require backdoors.

Conclusion

In the end, this is something that is going to cause a lot of controversy and differences of opinion. Some are in support of Apple’s actions, while others are adamantly in opposition. Apple seems to be trying to do the right thing here, and appears to have put a lot of effort into ensuring that the way this is done is most respectful of privacy, but there are some legitimate reasons to question whether this new feature is a good idea.

Those reasons should not be conflated with support for or opposition to CSAM, which we can all agree is a very bad thing. There’s a lot of discussion that should be had on this topic, but CSAM is a very emotional subject, and we should all try to prevent that from coloring our evaluation of the potential problems with Apple’s approach.