Some academics are calling the controversial practice a "scientific veneer for racism."

By Courtney Linder, July 20, 2020


Mathematicians at universities across the country are halting collaborations with U.S. police departments.

A June 15 letter was sent to the trade journal Notices of the American Mathematical Society, announcing the boycott.

Typically, mathematicians work with police departments to build algorithms, conduct modeling work, and analyze data.

Several prominent academic mathematicians want to sever ties with police departments across the U.S., according to a letter submitted to Notices of the American Mathematical Society on June 15. The letter arrived weeks after widespread protests against police brutality, and has inspired over 1,500 other researchers to join the boycott.

These mathematicians are urging fellow researchers to stop all work related to predictive policing software, which broadly includes any data analytics tools that use historical data to help forecast future crime, potential offenders, and victims. The technology is supposed to use probability to help police departments tailor their neighborhood coverage so it puts officers in the right place at the right time.

"Given the structural racism and brutality in U.S. policing, we do not believe that mathematicians should be collaborating with police departments in this manner," the authors write in the letter. "It is simply too easy to create a 'scientific' veneer for racism. Please join us in committing to not collaborating with police. It is, at this moment, the very least we can do as a community."

Signatories include Cathy O'Neil, author of the popular book Weapons of Math Destruction, which outlines the very algorithmic bias the letter rallies against. Another is Federico Ardila, a Colombian mathematician currently teaching at San Francisco State University who is known for his work to diversify the field of mathematics.


"This is a moment where many of us have become aware of realities that have existed for a very long time," Jayadev Athreya, an associate professor in the University of Washington's Department of Mathematics who signed the letter, told Popular Mechanics. "And many of us felt that it was very important to make a clear statement about where we, as mathematicians, stand on these issues."

What Is Predictive Policing?


The Electronic Frontier Foundation, a nonprofit digital rights group, defines predictive policing as "the use of mathematical analytics by law enforcement to identify and deter potential criminal activity."

That can include statistical or machine learning algorithms that rely on police records detailing the time, location, and nature of past crimes in a bid to predict if, when, where, and who may commit future infractions. In theory, this should help authorities use resources more wisely and spend more time policing certain neighborhoods that they think will yield higher crime rates.
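To make the idea of forecasting from "time, location, and nature of past crimes" concrete, here is a minimal sketch of the simplest possible approach: count past incidents per map cell at a given hour and send patrols to the highest counts. The cell names, hours, and the `rank_hotspots` helper are all hypothetical, and real products use far more elaborate models; this only illustrates the basic logic of ranking places by historical records.

```python
from collections import Counter

# Hypothetical historical records: (grid_cell, hour_of_day) for each past incident.
# These values are invented for illustration only.
past_incidents = [
    ("cell_A", 2), ("cell_A", 2), ("cell_A", 23),
    ("cell_B", 14), ("cell_B", 2),
    ("cell_C", 2), ("cell_A", 2),
]

def rank_hotspots(incidents, hour, top_k=2):
    """Rank grid cells by how many past incidents occurred there at the given hour."""
    counts = Counter(cell for cell, h in incidents if h == hour)
    # most_common orders cells by count; ties keep first-seen order.
    return [cell for cell, _ in counts.most_common(top_k)]

# Which cells would this toy model flag for a 2 a.m. patrol?
print(rank_hotspots(past_incidents, hour=2))
```

A forecast like this is only as good as the records fed into it: if some incidents are never reported or recorded, the ranking cannot see them.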

Predictive policing is not the same thing as facial recognition technology, which is more often used after a crime is committed to attempt to identify a perpetrator. Police may use these technologies together, but they are fundamentally different.

For example, if predictive policing software shows that a bar sees heightened crime at 2 a.m. on Saturday nights, a police department might deploy more officers there. If and when a crime does occur there, the department might use facial recognition technology to sift through surveillance footage feeds to find and identify the individual.

RAND

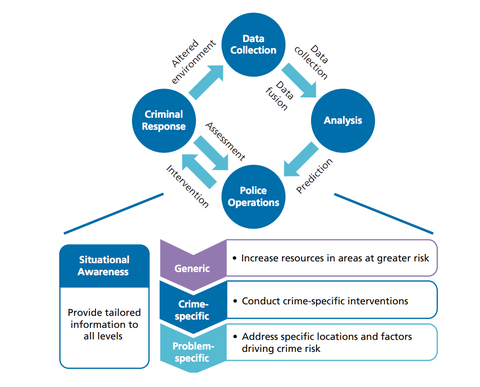

According to a 2013 research briefing from the RAND Corporation, a nonprofit think tank in Santa Monica, California, predictive policing is made up of a four-part cycle (shown above). In the first two steps, researchers collect and analyze data on crimes, incidents, and offenders to come up with predictions. From there, police intervene based on the predictions, usually taking the form of an increase in resources at certain sites at certain times. The fourth step is, ideally, reducing crime.

"Law enforcement agencies should assess the immediate effects of the intervention to ensure that there are no immediately visible problems," the authors note. "Agencies should also track longer-term changes by examining collected data, performing additional analysis, and modifying operations as needed."

In many cases, predictive policing software was meant to augment police departments facing budget crises, with fewer officers to cover a region. If cops can target certain geographical areas at certain times, then they can get ahead of the 911 calls and maybe even reduce the rate of crime.

But in practice, the accuracy of the technology has been contested—and it's even been called racist.

A Cause for Concern


Part of the impetus behind the mathematicians' move to distance themselves from predictive policing dates back to an August 2016 workshop that advocated for mathematicians' involvement with police departments.

The Institute for Computational and Experimental Research in Mathematics (ICERM) at Brown University in Providence, Rhode Island—which is funded by the National Science Foundation—put on the workshop for 20 to 25 researchers. PredPol, a Santa Cruz, California-based technology firm that develops and sells predictive policing tools to departments across the U.S., was one of the partners.

According to a notice for the event, the one-week program included work alongside the Providence Police Department. Small teams focused on real problems with real crime and policing data to brainstorm mathematical methods and models that could help the officers, even "creating code to implement ideas as necessary."


The event organizers said at the time that they "fully anticipate that lasting collaborations will be formed, and that work on the projects will continue after the workshop ends."

Christopher Hoffman, a professor at the University of Washington's Department of Mathematics who also signed the letter, tells Popular Mechanics that the institutional buy-in concerned him and his colleagues. "When a large institute does that, it's like saying 'this is something that we, as a community, value,'" he says.

Athreya says that he attended an ICERM workshop prior to the one on predictive policing and voiced his concerns at the time. Mathematicians should not be building this software, or investing in it, he says, noting that the researchers have, at times, both an intellectual and financial stake in the software.

"We have been a part of these really problematic institutions, and this is a moment for us to reflect and decide that we're not going to do this as a community, we're not going to collaborate with organizations that are killing people."

Accounting for Bias

The Atlanta Police Department displays a city map through PredPol, a predictive crime algorithm used to map hotspots for potential crime, at the Operation Shield Video Integration Center on January 15, 2015 in Atlanta, Georgia.

The researchers take particular issue with PredPol, the high-profile company that helped put on the ICERM workshop, claiming in the letter that its technology creates racist feedback loops. In other words, they believe that the software doesn't help to predict future crime, but instead reinforces the biases of the officers.
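The "feedback loop" the letter describes can be illustrated with a deliberately simplified toy model. It is not a model of PredPol or any real product, and every number in it is hypothetical. Two areas have identical true crime, but area A starts with one extra historical record. Each day officers go to the area with the most recorded crime, and crime is recorded only where officers are present.

```python
def simulate_feedback(days, true_daily_crime, initial_records):
    """Toy model: patrol the area with the most recorded crime; only observed crime is recorded."""
    records = dict(initial_records)  # copy so the caller's data is not modified
    for _ in range(days):
        # Allocation step: send officers to the area with the highest recorded count.
        patrolled = max(records, key=records.get)
        # Observation step: only crime in the patrolled area gets recorded,
        # even though every area has the same true crime rate.
        records[patrolled] += true_daily_crime[patrolled]
    return records

true_rate = {"A": 1, "B": 1}  # identical underlying crime in both areas (assumed)
history = {"A": 1, "B": 0}    # one extra historical record in area A (assumed)
print(simulate_feedback(10, true_rate, history))
```

After ten days area A has 11 recorded incidents and area B still has none, so the data appears to confirm that A is the hotspot. The toy model's key assumption is that recording depends on where officers are; whether that holds for victim-reported data is exactly what PredPol's CEO disputes.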

But CEO Brian MacDonald tells Popular Mechanics that PredPol never uses arrest data, "because that has the possibility for officer bias." Instead, he says, the company only uses data that victims themselves have reported to police. So if your car has been broken into, you might call the police to give them information about the type of crime, the location, and the timing. Police officers might take this information over the phone, or have you fill out an online form, he says.

Tarik Aougab, an assistant professor of mathematics at Haverford College and letter signatory, tells Popular Mechanics that keeping arrest data out of the PredPol model is not enough to eliminate bias.

"The problem with predictive policing is that it's not merely individual officer bias," Aougab says. "There's a huge structural bias at play, which amongst other things might count minor shoplifting, or the use of a counterfeit bill, which is what eventually precipitated the murder of George Floyd, as a crime to which police should respond in the first place."


"In general, there are lots of people, many of whom I know personally, who wouldn't call the cops," he says, "because they're justifiably terrified about what might happen when the cops do arrive."

That concern about unreported crime resurfaces in how the software actually works. As a February 2019 Vice story reports, PredPol uses a statistical modeling method originally developed to predict earthquake aftershocks.

MacDonald says that the same approach works in predicting crime because "both of these problems have a location and time element for which to be solved." However, the Vice story echoes Aougab's concern that some crimes go underreported or unreported, meaning that outside influences could skew the time and location data.
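Aftershock models of this kind belong to a family called self-exciting point processes (often Hawkes processes): each event temporarily raises the expected rate of further events nearby, and that boost fades over time. The sketch below shows the time-only version with an exponential decay. It is not PredPol's implementation; the parameter names `mu` (background rate), `k` (expected follow-on events per event) and `omega` (decay rate), and the event times, are illustrative assumptions. A spatial version would add a second kernel for distance.

```python
import math

def hawkes_intensity(t, past_events, mu, k, omega):
    """Expected event rate at time t for a self-exciting (Hawkes) process.

    mu:    constant background rate
    k:     expected number of follow-on events triggered by each event
    omega: how quickly each event's influence decays
    """
    # Each earlier event adds k * omega * exp(-omega * elapsed); this kernel
    # integrates to k over all future time.
    boost = sum(k * omega * math.exp(-omega * (t - ti)) for ti in past_events if ti < t)
    return mu + boost

events = [0.0, 1.0]  # hypothetical incident times, in days
print(hawkes_intensity(1.5, events, mu=0.5, k=0.5, omega=1.0))   # shortly after two events
print(hawkes_intensity(10.0, events, mu=0.5, k=0.5, omega=1.0))  # influence has mostly faded
```

The model only "sees" events that appear in `past_events`, which is why underreported or unreported crime can skew where and when it predicts elevated risk.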

How Prevalent Is This Tech?

Los Angeles, CA - October 24: Sgt. Charles Coleman of the LAPD Foothill Division explains during an interview the possible sources of crime on a map for patrols using predictive policing zone maps from the Los Angeles Police Department on Monday, October 24, 2016 in the Pacoima neighborhood of Los Angeles.

MacDonald says that PredPol has about 50 customers at the moment. For context, there are about 18,000 police departments in the U.S. But Athreya says a better metric comes from PredPol's own website: one in 33 Americans are protected by the software. He says that the figures seem so divergent because some of the largest police departments in the country are using the technology.

Of course, PredPol doesn't exist in a bubble. In 2011, the LAPD began using predictive policing software called the Los Angeles Strategic Extraction and Restoration (LASER), which it eventually stopped using in April 2019.

"The [Los Angeles Police Department] looked into this, and found almost no conclusion could be made about the effectiveness of the software," Hoffman says. "We don't even really know if it makes a difference in where police are patrolling."

Meanwhile, the New York City Police Department uses three different predictive policing tools: Azavea, Keystats, and PredPol, as well as its own in-house predictive policing algorithms that date back to 2013, Athreya says.


In Chicago, officers used an in-house database called the "Strategic Subject List" until last November, when the department decommissioned it. "The RAND Corporation found that this list included every single person arrested or fingerprinted in Chicago since 2013," according to Athreya.

A January statement from Chicago's Office of the Inspector General noted that some of the major issues with the technology included: "the unreliability of risk scores and tiers; improperly trained sworn personnel; a lack of controls for internal and external access; interventions influenced by PTV risk models which may have attached negative consequences to arrests that did not result in convictions; and a lack of a long-term plan to sustain the PTV models."

Just a few weeks ago, the Santa Cruz Police Department banned the use of predictive policing tools. Back in 2011, the department began a predictive policing pilot project that was meant to ease the strain on officers who were swamped with service calls at a time when the city was slashing police budgets.

While the Santa Cruz Police Department's outright ban on the technology might've been influenced by recent Black Lives Matter protests, the department had already placed a moratorium on the technology back in 2017.

Police Chief Andy Mills told the Los Angeles Times that predictive policing could have been effective if it had been used to work together with the community to solve problems, rather than "to do purely enforcement."

"You try different things and learn later as you look back retrospectively," Mills told the LA Times. "You say, 'Jeez, that was a blind spot I didn’t see.' I think one of the ways we can prevent that in the future is sitting down with community members and saying, 'Here's what we are interested in using. Give us your take on it. What are your concerns?'"

Still, Hoffman says there's no way to know just how prevalent the technology is in the U.S. "It's very difficult for people to get information about who is using this software and what they are using it for," he says.

A Step Toward Better Policing

Athreya wants to make it clear that their boycott is not just a "theoretical concern." But if the technology continues to exist, there should at least be some guidelines for its implementation, the mathematicians say. They have a few demands, but they mostly boil down to the concepts of transparency and community buy-in.

Among them:

Any algorithms with "potential high impact" should face a public audit.

Experts should participate in that audit process as a proactive way to use mathematics to "prevent abuses of power."

Mathematicians should work with community groups, oversight boards, and other organizations like Black in AI and Data 4 Black Lives to develop alternatives to "oppressive and racist" practices.

Academic departments with data science courses should implement learning outcomes that address the "ethical, legal, and social implications" of such tools.

Since the letter went live, at least 1,500 other researchers have signed on through a Google Form, Athreya says. And he welcomes that response.

"I don't think predictive policing should ever exist," he says, "especially when it’s costing people their lives."
