Study: Large language models can’t effectively recognize users’ motivation, but can support behavior change for those ready to act

Large language model-based chatbots can’t effectively recognize users’ motivation when they are hesitant about making healthy behavior changes, but they can support those committed to taking action, say University of Illinois Urbana-Champaign researchers.

IMAGE: Large language model-based chatbots can’t effectively recognize users’ motivation when they are hesitant about making healthy behavior changes, but they can support those who are committed to taking action, say information sciences doctoral student Michelle Bak and information sciences professor Jessie Chin.

Credit: Courtesy Michelle Bak

CHAMPAIGN, Ill. — Large language model-based chatbots have the potential to promote healthy changes in behavior. But researchers from the ACTION Lab at the University of Illinois Urbana-Champaign have found that the artificial intelligence tools don’t effectively recognize certain motivational states of users and therefore don’t provide them with appropriate information.

Michelle Bak, a doctoral student in information sciences, and information sciences professor Jessie Chin reported their research in the Journal of the American Medical Informatics Association.

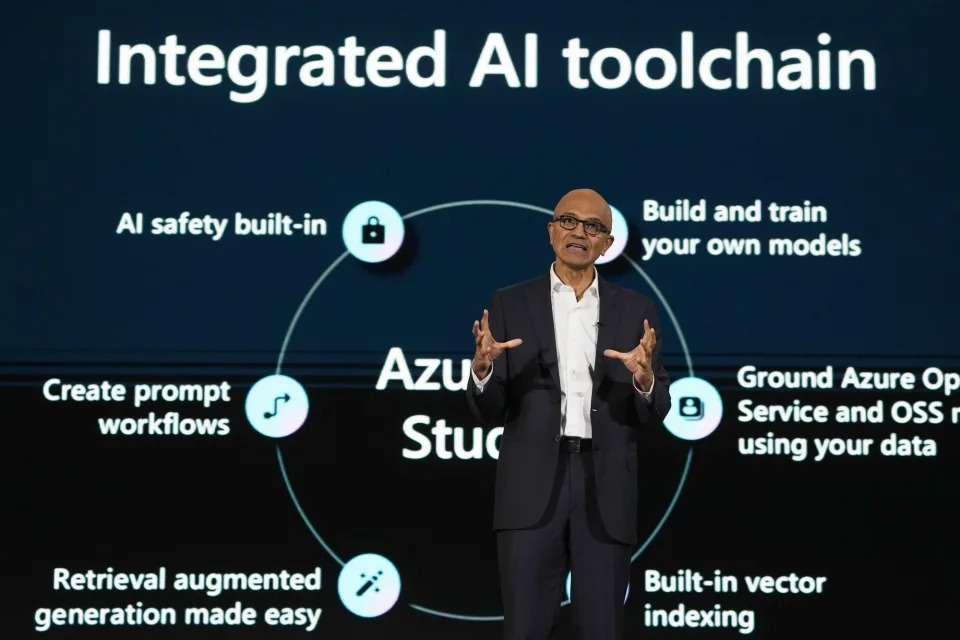

Large language model-based chatbots — also known as generative conversational agents — have been used increasingly in healthcare for patient education, assessment and management. Bak and Chin wanted to know if they also could be useful for promoting behavior change.

Chin said previous studies showed that existing algorithms did not accurately identify various stages of users’ motivation. She and Bak designed a study to test how well large language models, which power chatbots, identify motivational states and provide appropriate information to support behavior change.

They evaluated ChatGPT, Google Bard and Llama 2 on a series of 25 scenarios they designed. The scenarios targeted health needs including low physical activity, diet and nutrition concerns, mental health challenges, cancer screening and diagnosis, and others such as sexually transmitted disease and substance dependency.

In the scenarios, the researchers used each of the five motivational stages of behavior change: resistance to change and lacking awareness of problem behavior; increased awareness of problem behavior but ambivalent about making changes; intention to take action with small steps toward change; initiation of behavior change with a commitment to maintain it; and successfully sustaining the behavior change for six months with a commitment to maintain it.
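The study design described above can be sketched as a small evaluation grid. This is an illustration only, not the researchers’ code. The stage names, the `Stage` enum and the `build_scenarios` and `build_trials` helpers are hypothetical. The sketch also assumes that the 25 scenarios come from crossing five health topics with the five stages, which the article does not state.

```python
from enum import Enum
from itertools import product


class Stage(Enum):
    # Descriptions paraphrase the article; the member names are illustrative.
    RESISTANT = "resistant to change, unaware of the problem behavior"
    AMBIVALENT = "aware of the problem behavior but ambivalent about change"
    INTENDING = "intends to act and is taking small steps"
    INITIATED = "has started the change and is committed to maintaining it"
    SUSTAINED = "has sustained the change for six months"


# Health needs named in the article; grouping them into five topics is an assumption.
HEALTH_TOPICS = [
    "low physical activity",
    "diet and nutrition",
    "mental health",
    "cancer screening and diagnosis",
    "other (e.g., substance dependency)",
]

# Chatbots named in the article.
MODELS = ["ChatGPT", "Google Bard", "Llama 2"]


def build_scenarios():
    """Pair every health topic with every motivational stage (assumed design)."""
    return list(product(HEALTH_TOPICS, Stage))


def build_trials():
    """Pair every model with every scenario."""
    return [(model, topic, stage)
            for model in MODELS
            for topic, stage in build_scenarios()]


if __name__ == "__main__":
    print(len(build_scenarios()), "scenarios,", len(build_trials()), "model-scenario pairs")
```

Under these assumptions, each chatbot would see every scenario once, and its reply could then be judged on whether it matched the user’s stage.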

The study found that large language models can identify motivational states and provide relevant information when a user has established goals and a commitment to take action. However, in the initial stages, when users are hesitant or ambivalent about behavior change, the chatbots are unable to recognize those motivational states or provide appropriate information to guide users to the next stage of change.

Chin said that language models don’t detect motivation well because they are trained to represent the relevance of a user’s language, but they don’t understand the difference between a user who is thinking about a change but is still hesitant and a user who has the intention to take action. Additionally, she said, the way users generate queries is not semantically different for the different stages of motivation, so it’s not obvious from the language what their motivational states are.

“Once a person knows they want to start changing their behavior, large language models can provide the right information. But if they say, ‘I’m thinking about a change. I have intentions but I’m not ready to start action,’ that is the state where large language models can’t understand the difference,” Chin said.

The study found that when people were resistant to habit change, the large language models failed to provide information to help them evaluate their problem behavior and its causes and consequences, or to assess how their environment influenced the behavior. For example, if someone is resistant to increasing their level of physical activity, information that helps them evaluate the negative consequences of a sedentary lifestyle is more likely to motivate them through emotional engagement than information about joining a gym. Without information that engaged with users’ motivations, the language models failed to generate a sense of readiness and the emotional impetus to progress with behavior change, Bak and Chin reported.

Once a user decided to take action, the large language models provided adequate information to help them move toward their goals. Those who had already taken steps to change their behaviors received information about replacing problem behaviors with desired health behaviors and seeking support from others, the study found.

However, for users who were already working to change their behaviors, the large language models didn’t provide information about using a reward system to maintain motivation or about reducing the stimuli in their environment that might increase the risk of a relapse into the problem behavior, the researchers found.

“The large language model-based chatbots provide resources on getting external help, such as social support. They’re lacking information on how to control the environment to eliminate a stimulus that reinforces problem behavior,” Bak said.

Large language models “are not ready to recognize the motivation states from natural language conversations, but have the potential to provide support on behavior change when people have strong motivations and readiness to take actions,” the researchers wrote.

Chin said future studies will consider how to fine-tune large language models to use linguistic cues, information search patterns and social determinants of health to better understand a user’s motivational state, and how to provide the models with more specific knowledge for helping people change their behaviors.

Editor’s notes: To contact Michelle Bak, email chaewon7@illinois.edu. To contact Jessie Chin, email chin5@illinois.edu.

The paper “The potential and limitations of large language models in identification of the states of motivations for facilitating health behavior change” is available online. DOI: doi.org/10.1093

JOURNAL

Journal of the American Medical Informatics Association

METHOD OF RESEARCH

Experimental study

SUBJECT OF RESEARCH

Not applicable

ARTICLE TITLE

The potential and limitations of large language models in identification of the states of motivations for facilitating health behavior change