Surveys identify relationship between waves, coastal cliff erosion

Study shows waves, rainfall important parts of erosion process, providing new opportunity to improve forecasts

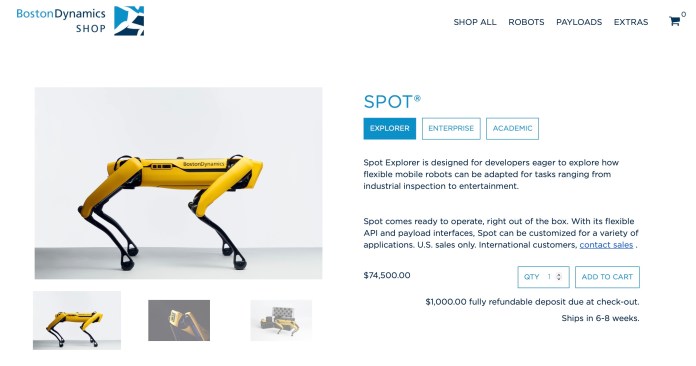

IMAGE: SCRIPPS OCEANOGRAPHY GEOMORPHOLOGIST ADAM YOUNG BURIES WAVE ENERGY-MEASURING SENSORS.

CREDIT: ERIK JEPSEN/UC SAN DIEGO

Scripps Institution of Oceanography at UC San Diego researchers have uncovered how rain and waves act on different parts of coastal cliffs.

Following three years of cliff surveys in and near the coastal city of Del Mar, Calif., they determined that wave impacts directly affect the base, and rain mostly impacts the upper region of the cliffs.

The study appears in the journal Geomorphology and was funded by California State Parks. California's State Parks Oceanography program supports climate adaptation and resilience efforts through coastal and cliff erosion observations and modeling, measuring and predicting storm surge and wave variability, and establishing wave condition baselines for use in the design and operation of coastal projects.

"It's something that I've been trying to quantify for a long time, which is exciting," said coastal geomorphologist Adam Young, who is the lead author on the paper. "We've always known that waves were an important part of the cliff erosion process, but we haven't been able to separate the influence of waves and rain before."

After decades of debate over the differing roles that waves and rain play in cliff erosion, the findings provide a new opportunity to improve erosion forecasts, a pressing need both in Del Mar and across the California coast. For example, neighborhoods and a railroad line the cliff edge in Del Mar. Past cliff failures have resulted in several train derailments and landslides, triggering temporary rail closures and emergency repairs. The consequences can be costly.

Prior to Young's study, the exact relationship between waves, rain, and cliff failures was unclear, mostly because it is difficult to measure the impacts of waves on the cliff base.

"Anytime a study involves sensors in the coastal zone, it's a challenge," said Young. For example, his team at Scripps Oceanography's Center for Climate Change Impacts and Adaptation buries sensors in the sand that measure wave energy. Big surf and erosion can shift the sensors and prevent scientists from collecting reliable measurements.

The key to their success, according to Young, was to visit and measure the cliffs every week for three years -- an effort that was among the most detailed ever undertaken for studying coastal cliffs. These records along the 1.5-mile-long stretch in Del Mar allowed Young's team to untangle the effects of rainfall and groundwater runoff from wave impacts.

"We can now better predict how much erosion will occur during a particular storm using the wave and rainfall erosion relationship that we've identified here," said Young.

Young's group combined measurements from the sensors buried in the sand with computer models of wave energy, as well as with three-dimensional maps of the beach and cliffs collected using a LiDAR device, a laser mapping tool, that was mounted onto trucks driven along the beach. The team also analyzed rainfall data from a local Del Mar weather station.

Because rainfall and associated elevated groundwater levels trigger larger landslides, cliff erosion generally appears to be more correlated with rain. Teasing out the wave-driven cliff erosion is a more subtle and difficult process, but important because the wave-driven erosion weakens the cliff base and sets the stage for those rain-driven landslides.

Understanding the way that cliffs and waves behave together will help improve short-term models that forecast cliff retreat, but the researchers will need more information to predict how future rainfall and waves will drive cliff erosion in the long term.

Young and his group plan to continue to collect data in Del Mar, and are developing a website to make the information about the conditions leading to coastal landslides readily available.

###
