No, Mike Pompeo, killing the military leader won’t give Iranians more freedom.

By Alex Ward (@AlexWardVox, alex.ward@vox.com), Jan 3, 2020


President Donald Trump speaks as Secretary of State Mike Pompeo and National Security Adviser Robert O’Brien listen during a meeting in the Oval Office of the White House on December 17, 2019, in Washington, DC. Alex Wong/Getty Images

Days before the US invaded Iraq in 2003, then-Vice President Dick Cheney told NBC News why the Bush administration believed the military mission would be successful. “I think things have gotten so bad inside Iraq, from the standpoint of the Iraqi people, my belief is we will, in fact, be greeted as liberators,” he said.

That didn’t happen, and the US instead got bogged down in a brutal years-long war, leading to thousands dead and injured and trillions of dollars spent. What’s more, US forces were quickly seen to be little more than imperialist occupiers across the Middle East.

But the ideology that American force can give people of the Middle East space for a democratic uprising persists. That became clear when Secretary of State Mike Pompeo offered an optimistic assessment of how citizens of Iraq and Iran will react to the Thursday night killing of Qassem Soleimani, who led Iranian covert operations and intelligence across the Middle East and was one of Tehran’s most senior military leaders.

“We have every expectation that people not only in Iraq, but in Iran, will view the American action last night as giving them freedom, freedom to have the opportunity for success and prosperity for their nations,” the top US diplomat told CNN on Friday morning. “While the political leadership may not want that, the people in these nations will demand it.”

Like all wishful thinking, Pompeo’s statement has a sprinkling of truth. Videos on Twitter showed Iraqis celebrating Soleimani’s demise. And some Iran experts, like the Council on Foreign Relations’ Ray Takeyh, told me that the repressive regime’s power now is somewhat lessened with Soleimani gone. “In a roundabout way, Pompeo’s statement does seem sound to me,” he said.

But let’s be clear about what Pompeo is really saying. His claim is that dropping bombs on Soleimani and other military leaders will prompt citizens of Iraq and Iran to rebel against their governments, thank the US, and push for something akin to American democracy. That, most experts say, is folly.

“I doubt any significant number of people in Iran and Iraq will see this as a gift of freedom,” says Trita Parsi, an Iran expert at the Quincy Institute for Responsible Statecraft.

Yet the long-held myth of America liberating Iran with military force appears to have taken hold in the Trump administration — and it could potentially cause more problems with Tehran down the line.

Why “neoconservatism” could make Trump’s Iran policy worse

Back in 2016, Max Fisher wrote for Vox about “neoconservatism,” and how that ideology proved the real culprit for why the Bush administration went to war in Iraq.

Neoconservatism, which had been around for decades, mixed humanitarian impulses with an almost messianic faith in the transformati[onal] virtue of American military force, as well as a deep fear of an outside world seen as threatening and morally compromised.

This ideology stated that authoritarian states were inherently destabilizing and dangerous; that it was both a moral good and a strategic necessity for America to replace those dictatorships with democracy — and to dominate the world as the unquestioned moral and military leader.

That same ideology — now focused on Iran — is championed by Pompeo and former National Security Adviser John Bolton. Killing Soleimani, they effectively argue, will help draw a straight line to eventual regime change in Iran.

Congratulations to all involved in eliminating Qassem Soleimani. Long in the making, this was a decisive blow against Iran's malign Quds Force activities worldwide. Hope this is the first step to regime change in Tehran.— John Bolton (@AmbJohnBolton) January 3, 2020

But Eric Brewer, a long-time intelligence official who recently left Trump’s National Security Council after working on Iran, doesn’t find that narrative compelling. “Soleimani’s death is not going to end Iranian influence in Iraq,” he told me, “nor is it likely to lead to some sort of regime change uprising in Iran.”

There are a few reasons for that.

First, Iranian influence is already well entrenched inside Iraq’s military and political structures; removing Soleimani from the equation doesn’t change that. Second, Iraqis and Iranians have shown they are willing to push for better governance without US military intervention spurring them to action. In fact, Iraqi protests recently led some of the leadership there to resign, partly fueled by the perception that Iran was really running Iraqi affairs of state. And today there are already large-scale anti-US demonstrations sweeping Iran after the Soleimani killing.

Third, US-Iran history over the last several decades makes everyday Iranians skeptical of American intentions in the country, especially Washington’s involvement in the 1953 coup against Iranian Prime Minister Mohammad Mossadegh. (There was an anti-government movement to remove President Mahmoud Ahmadinejad from power when Barack Obama was president, and he chose not to get involved so it didn’t seem like the US was meddling.)

Finally, there’s the hypocrisy problem: The US has no qualms about supporting other authoritarian regimes around the world, including Iran’s chief rival Saudi Arabia.

In other words, Iraqis and Iranians wanting a more democratic future don’t necessarily need the US to get it, and may not even trust Washington to begin with. “The idea that there will be a critical mass in Iran believing that the US has Iran’s best interests in mind is nonsensical,” the Quincy Institute’s Parsi told me.

But the belief in this idea is a persistently bad and uniquely American one. It smacks of thinking frozen in the Cold War, that all it will take is the toppling of a dictator to allow democracy to flourish. Yet time after time, from Libya to Egypt to elsewhere, that just hasn’t proven true.

More likely than not, Soleimani’s death will lead to an escalation of violence between the US and Iran. Tehran will retaliate — maybe not immediately, but eventually — putting Americans at risk. That will, in turn, likely lead to an escalation that puts thousands more in danger. Instead of “freedom,” then, everyone gets war.

The question now is whether Trump administration leaders will continue to form Iran policy based on the misguided notion that American military might will bring about democracy in Tehran or the region. That hasn’t worked before, and it’s unlikely to now.



It was in 2008 that the big bisphenol A (BPA) scare got serious. Since the 1950s, this synthetic chemical had been used in the production of clear, durable plastics, and it was to be


A woman doesn’t need to be in precarious employment to have her rights violated. Women on irregular or precarious employment contracts have been found to be

Text adapted from Caroline Criado Perez’s Invisible Women, published by Abrams Press.


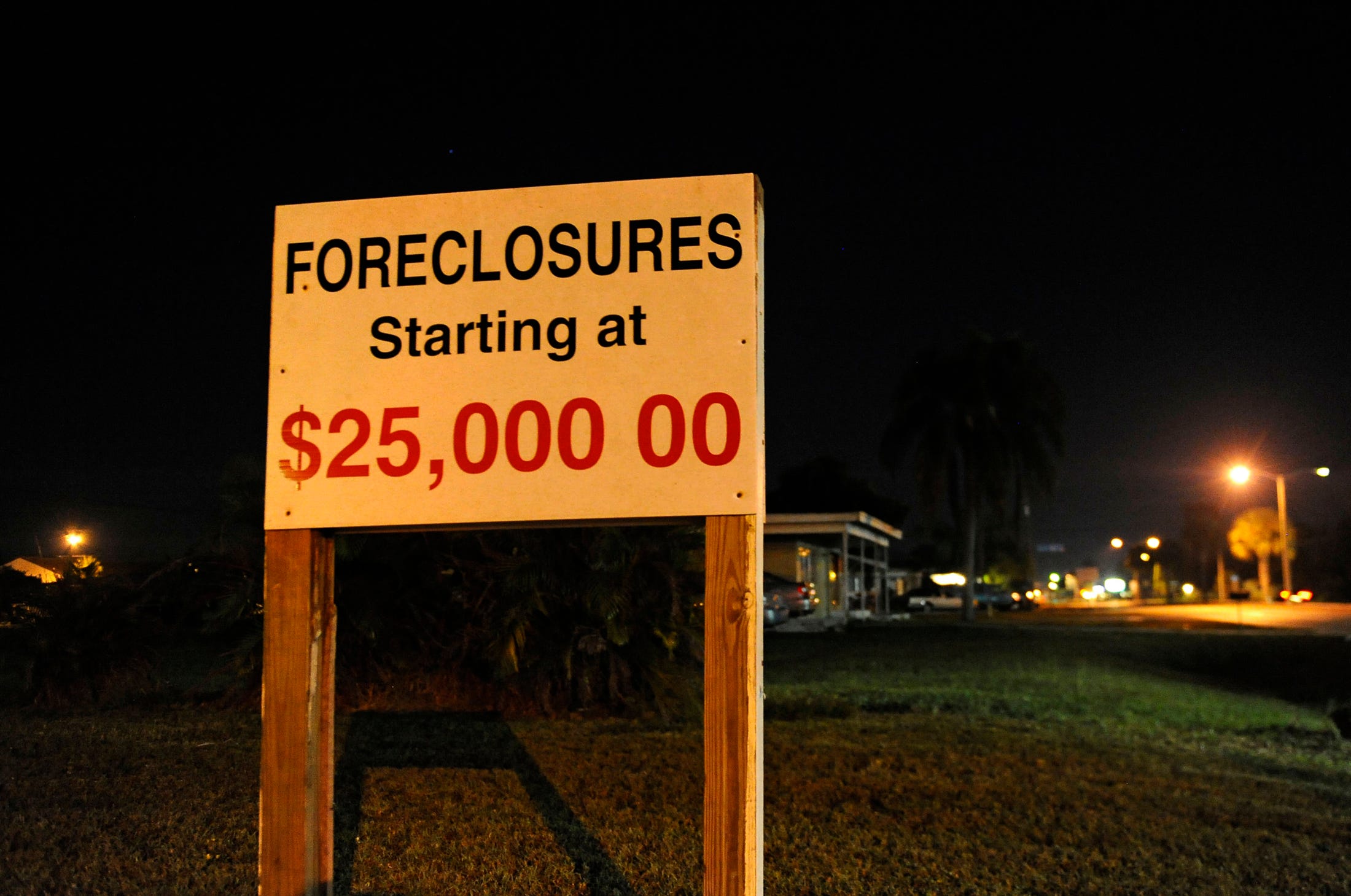

These days, finance is so fundamental to our everyday lives that it is difficult to imagine a world without it. But until the 1970s, the financial sector accounted for a mere 15 percent of all corporate profits in the US economy. Back then, most of what the financial sector did was simple credit intermediation and risk management: banks took deposits from households and corporations and loaned those funds to homebuyers and businesses. They issued and collected checks to facilitate payment. For important or paying customers, they provided space in their vaults to safeguard valuable items. Insurance companies received premiums from their customers and paid out when a costly incident occurred.
