Improved fuel cell performance using semiconductor manufacturing technology

A research team in Korea has used semiconductor manufacturing technology to synthesize metal nanoparticles that can drastically improve the performance of hydrogen fuel cell catalysts. The Korea Institute of Science and Technology (KIST) announced that the team, led by Dr. Sung Jong Yoo of the Hydrogen Fuel Cell Research Center, has succeeded in synthesizing nanoparticles by a physical method rather than by the existing chemical reactions. The method relies on sputtering, a thin metal film deposition technology used in semiconductor manufacturing.

Metal nanoparticles have been studied in various fields over the past few decades. Recently, they have been attracting attention as critical catalysts for hydrogen fuel cells and for water electrolysis systems that produce hydrogen. Metal nanoparticles are mainly prepared through complex chemical reactions that use organic substances harmful to the environment and humans. As a result, additional costs are inevitably incurred for treating these substances, and the synthesis conditions are challenging. A new nanoparticle synthesis method that can overcome the shortcomings of the existing chemical synthesis is therefore required to establish the hydrogen energy regime.

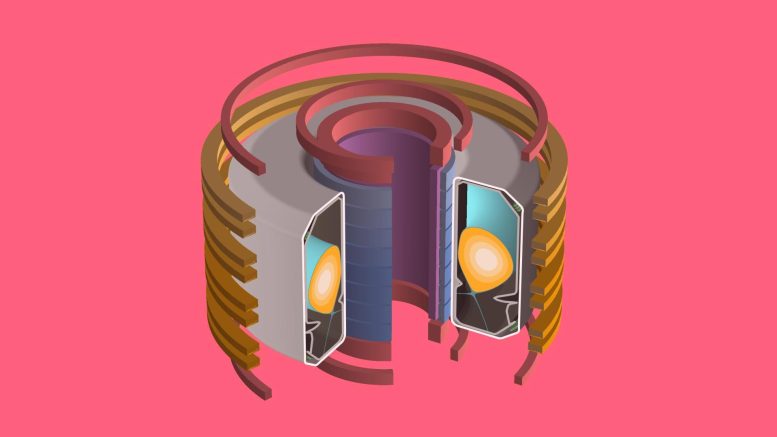

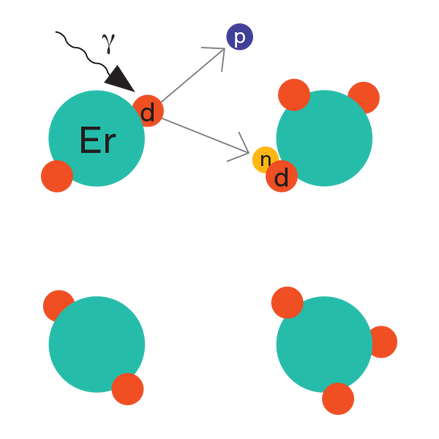

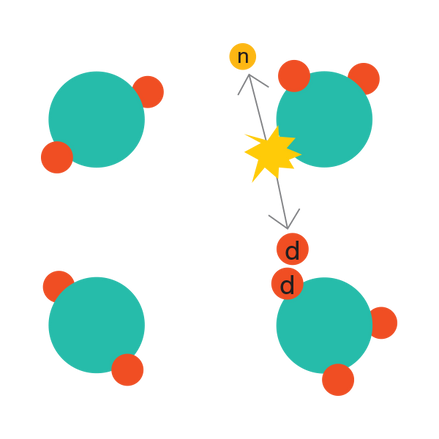

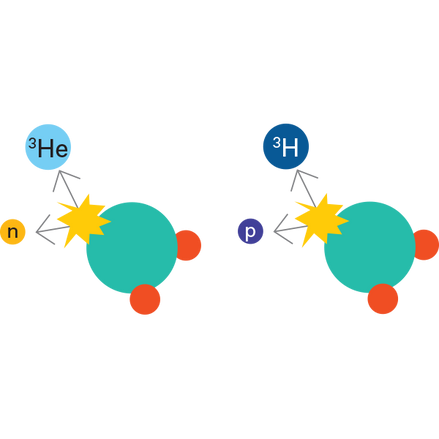

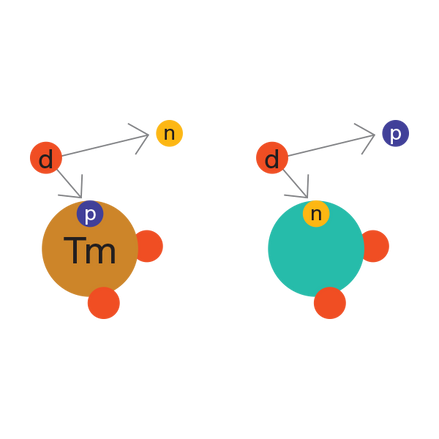

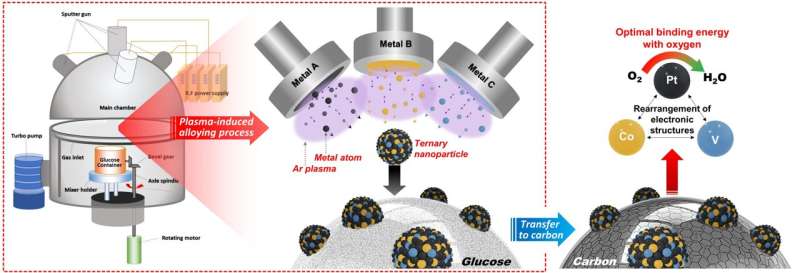

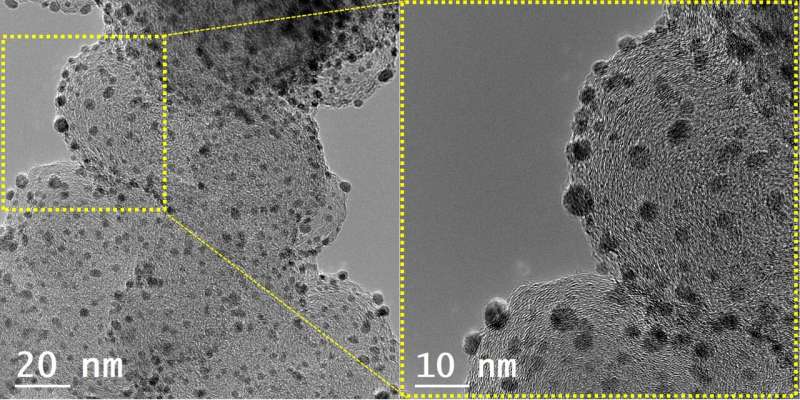

The sputtering process applied by the KIST research team is a technology used to coat a thin metal film during semiconductor manufacturing. In this process, plasma breaks bulk metal into nanoparticles, which are then deposited on a substrate to form a thin film. The research team instead used "glucose" as a special substrate, which prevented the metal nanoparticles produced by the plasma from transforming into a thin film. Because the synthesis relies on plasma-based physical vapor deposition rather than chemical reactions, metal nanoparticles could be synthesized with this simple method, overcoming the limitations of the existing chemical synthesis methods.

The development of new catalysts has been hindered because the existing chemical synthesis methods limit the types of metals that can be made into nanoparticles and require the synthesis conditions to be changed for each type of metal. The newly developed method makes it possible to synthesize nanoparticles of more diverse metals. In addition, if the technology is applied to two or more metals simultaneously, alloy nanoparticles of various compositions can be synthesized. This would lead to the development of high-performance nanoparticle catalysts based on alloys of various compositions.

The KIST research team used this technology to synthesize a platinum-cobalt-vanadium alloy nanoparticle catalyst and applied it to the oxygen reduction reaction in hydrogen fuel cell electrodes. The catalyst's activity was seven times higher than that of the platinum catalysts and three times higher than that of the platinum-cobalt alloy catalysts that are commercially used in hydrogen fuel cells. The researchers also investigated the effect of the newly added vanadium on the other metals in the nanoparticles. Through computer simulation, they found that vanadium improved the catalyst performance by optimizing the platinum–oxygen bonding energy.

Dr. Sung Jong Yoo of KIST commented, "Through this research, we have developed a synthesis method based on a novel concept, which can be applied to research focused on metal nanoparticles toward the development of water electrolysis systems, solar cells, petrochemicals." He added, "We will strive to establish a complete hydrogen economy and develop carbon-neutral technology by applying alloy nanoparticles with new structures, which has been difficult to implement, to [develop] eco-friendly energy technologies including hydrogen fuel cells."

More information: Injoon Jang et al, Plasma-induced alloying as a green technology for synthesizing ternary nanoparticles with an early transition metal, Nano Today (2021). DOI: 10.1016/j.nantod.2021.101316

Journal information: Nano Today

Provided by National Research Council of Science & Technology