An estimated 85% of the universe’s mass is thought to be made up of dark matter, a hypothetical form of matter.

Scientists still do not know what dark matter is. However, MSU scientists helped uncover new physics while searching for it.

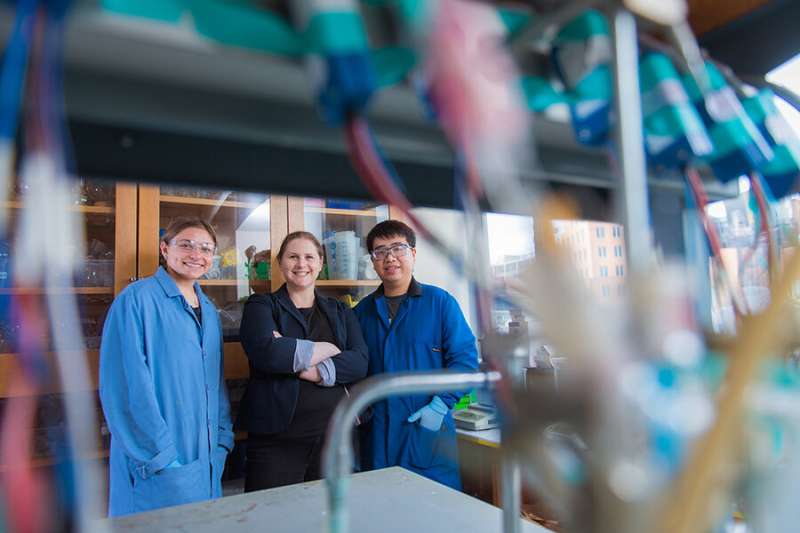

Wolfgang “Wolfi” Mittig and Yassid Ayyad began their search for dark matter—also referred to as the missing mass of the universe—in the heart of an atom around three years ago.

Even though their exploration did not uncover dark matter, the scientists nonetheless discovered something never seen before, something that defied explanation. Well, at least an explanation on which everyone could agree.

“It’s been something like a detective story,” said Mittig, a Hannah Distinguished Professor in Michigan State University’s Department of Physics and Astronomy and a faculty member at the Facility for Rare Isotope Beams, or FRIB.

“We started out looking for dark matter and we didn’t find it,” he said. “Instead, we found other things that have been challenging for theory to explain.”

To make sense of their finding, the team went back to work, running further tests and gathering more data. Mittig, Ayyad, and their colleagues strengthened their case at Michigan State University’s National Superconducting Cyclotron Laboratory, or NSCL.

Working at NSCL, the researchers found a new path to their unexpected destination, which they reported in the journal Physical Review Letters. They also revealed intriguing physics at work in the ultra-small quantum realm of subatomic particles.

The scientists showed, in particular, that even when an atom’s center, or nucleus, is overcrowded with neutrons, it can find a route to a more stable configuration by spitting out a proton instead.

Shot in the dark

Dark matter is one of the most well-known yet least understood things in the universe. Scientists have known for decades that the universe contains more mass than we can perceive based on the motions of stars and galaxies.

For gravity to hold celestial objects on their courses, there must be about six times as much unseen mass as regular matter that we can see, measure, and classify. Although researchers are confident that dark matter exists, they have yet to work out where to find it or how to detect it directly.
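The "six times" figure can be checked against the roughly 85% share quoted at the top of this article. The lines below are only a rough sketch of that arithmetic: the variable names are made up for illustration, and the 85% value is an estimate, not a measured constant.

```python
# Assumption: dark matter makes up about 85% of the universe's mass,
# as quoted at the top of the article. This is an estimate.
dark_fraction = 0.85
visible_fraction = 1.0 - dark_fraction

# Ratio of unseen mass to regular, visible matter.
dark_to_visible = dark_fraction / visible_fraction

print(f"Unseen mass is about {dark_to_visible:.1f} times the visible mass")
```

A ratio between five and six is consistent with the round "six times" used in the text.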

“Finding dark matter is one of the major goals of physics,” said Ayyad, a nuclear physics researcher at the Galician Institute of High Energy Physics, or IGFAE, of the University of Santiago de Compostela in Spain.

Speaking in round numbers, scientists have launched about 100 experiments to try to illuminate what exactly dark matter is, Mittig said.

“None of them has succeeded after 20, 30, 40 years of research,” he said.

“But there was a theory, a very hypothetical idea, that you could observe dark matter with a very particular type of nucleus,” said Ayyad, who was previously a detector systems physicist at NSCL.

This theory centered on what it calls a dark decay. It posited that certain unstable nuclei, nuclei that naturally fall apart, could jettison dark matter as they crumbled.

So Ayyad, Mittig, and their team designed an experiment that could look for a dark decay, knowing the odds were against them. But the gamble wasn’t as big as it sounds because probing exotic decays also lets researchers better understand the rules and structures of the nuclear and quantum worlds.

The researchers had a good chance of discovering something new. The question was what that would be.

Help from a halo

When people imagine a nucleus, many may think of a lumpy ball made up of protons and neutrons, Ayyad said. But nuclei can take on strange shapes, including what are known as halo nuclei.

Beryllium-11 is an example of a halo nucleus. It’s a form, or isotope, of the element beryllium that has four protons and seven neutrons in its nucleus. It keeps 10 of those 11 nuclear particles in a tight central cluster. But one neutron floats far away from that core, loosely bound to the rest of the nucleus, kind of like the moon circling the Earth, Ayyad said.

Beryllium-11 is also unstable. With a half-life of about 13.8 seconds, it falls apart by what’s known as beta decay. One of its neutrons ejects an electron and becomes a proton. This transforms the nucleus into a stable form of the element boron with five protons and six neutrons, boron-11.
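To get a feel for that timescale, here is a minimal sketch of exponential decay. It treats the 13.8-second figure as a half-life, which matches the measured half-life of beryllium-11. The function and constant names are hypothetical, chosen only for this illustration.

```python
# Assumption: 13.8 s is the half-life of beryllium-11 (approximate value).
HALF_LIFE_S = 13.8

def surviving_fraction(elapsed_s: float, half_life_s: float = HALF_LIFE_S) -> float:
    """Fraction of an initial sample that has not yet decayed after elapsed_s seconds."""
    return 0.5 ** (elapsed_s / half_life_s)

# After one half-life half the sample is left, after two a quarter, and so on.
for t in (0.0, 13.8, 27.6, 60.0):
    print(f"{t:5.1f} s: {surviving_fraction(t):.3f} of the sample remains")
```

After a minute, only a few percent of the original beryllium-11 is left, which is why experiments need a freshly produced beam.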

But according to that very hypothetical theory, if the neutron that decays is the one in the halo, beryllium-11 could go an entirely different route: It could undergo a dark decay.

In 2019, the researchers launched an experiment at Canada’s national particle accelerator facility, TRIUMF, looking for that very hypothetical decay. And they did find a decay with unexpectedly high probability, but it wasn’t a dark decay.

It looked like the beryllium-11’s loosely bound neutron was ejecting an electron, as in normal beta decay, yet the beryllium wasn’t following the known decay path to boron.

The team hypothesized that the high probability of the decay could be explained if a state in boron-11 existed as a doorway to another decay, to beryllium-10 and a proton. For anyone keeping score, that meant the nucleus had once again become beryllium. Only now it had six neutrons instead of seven.
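For readers who do want to keep score, the proton and neutron counts along this proposed route can be tallied in a few lines. This is only a bookkeeping sketch: the `Nucleus` class and helper functions are hypothetical names for this illustration, and it tracks nucleon counts only, not energies or the excited state itself.

```python
from typing import NamedTuple

class Nucleus(NamedTuple):
    protons: int
    neutrons: int

    @property
    def mass_number(self) -> int:
        return self.protons + self.neutrons

# Only the two elements that appear in this decay route.
SYMBOLS = {4: "Be", 5: "B"}

def label(n: Nucleus) -> str:
    return f"{SYMBOLS[n.protons]}-{n.mass_number}"

def beta_minus_decay(n: Nucleus) -> Nucleus:
    # A neutron turns into a proton (an electron is ejected).
    return Nucleus(n.protons + 1, n.neutrons - 1)

def emit_proton(n: Nucleus) -> Nucleus:
    # The nucleus spits out one proton.
    return Nucleus(n.protons - 1, n.neutrons)

be11 = Nucleus(protons=4, neutrons=7)
b11 = beta_minus_decay(be11)   # the proposed "doorway" nucleus
be10 = emit_proton(b11)

chain = [label(n) for n in (be11, b11, be10)]
print(" -> ".join(chain))  # prints "Be-11 -> B-11 -> Be-10"
```

The net effect is that the nucleus ends with the same four protons it started with and one fewer neutron.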

“This happens just because of the halo nucleus,” Ayyad said. “It’s a very exotic type of radioactivity. It was actually the first direct evidence of proton radioactivity from a neutron-rich nucleus.”

But science welcomes scrutiny and skepticism, and the team’s 2019 report was met with a healthy dose of both. That “doorway” state in boron-11 did not seem compatible with most theoretical models. Without a solid theory that made sense of what the team saw, different experts interpreted the team’s data differently and offered up other potential conclusions.

“We had a lot of long discussions,” Mittig said. “It was a good thing.”

As beneficial as the discussions were — and continue to be — Mittig and Ayyad knew they’d have to generate more evidence to support their results and hypothesis. They’d have to design new experiments.

The NSCL experiments

In the team’s 2019 experiment, TRIUMF generated a beam of beryllium-11 nuclei that the team directed into a detection chamber where researchers observed different possible decay routes. That included the beta decay to proton emission process that created beryllium-10.

For the new experiments, which took place in August 2021, the team’s idea was to essentially run the time-reversed reaction. That is, the researchers would start with beryllium-10 nuclei and add a proton.

Collaborators in Switzerland created a source of beryllium-10, which has a half-life of 1.4 million years, that NSCL could then use to produce radioactive beams with new reaccelerator technology. The technology evaporated and injected the beryllium into an accelerator and made it possible for researchers to make a highly sensitive measurement.

When beryllium-10 absorbed a proton of the right energy, the nucleus entered the same excited state the researchers believed they had discovered three years earlier. It would even spit the proton back out, which can be detected as a signature of the process.

“The results of the two experiments are very compatible,” Ayyad said.

That wasn’t the only good news. Unbeknownst to the team, an independent group of scientists at Florida State University had devised another way to probe the 2019 result. Ayyad happened to attend a virtual conference where the Florida State team presented its preliminary results, and he was encouraged by what he saw.

“I took a screenshot of the Zoom meeting and immediately sent it to Wolfi,” he said. “Then we reached out to the Florida State team and worked out a way to support each other.”

The two teams were in touch as they developed their reports, and both scientific publications now appear in the same issue of Physical Review Letters. And the new results are already generating a buzz in the community.

“The work is getting a lot of attention. Wolfi will visit Spain in a few weeks to talk about this,” Ayyad said.

An open case on open quantum systems

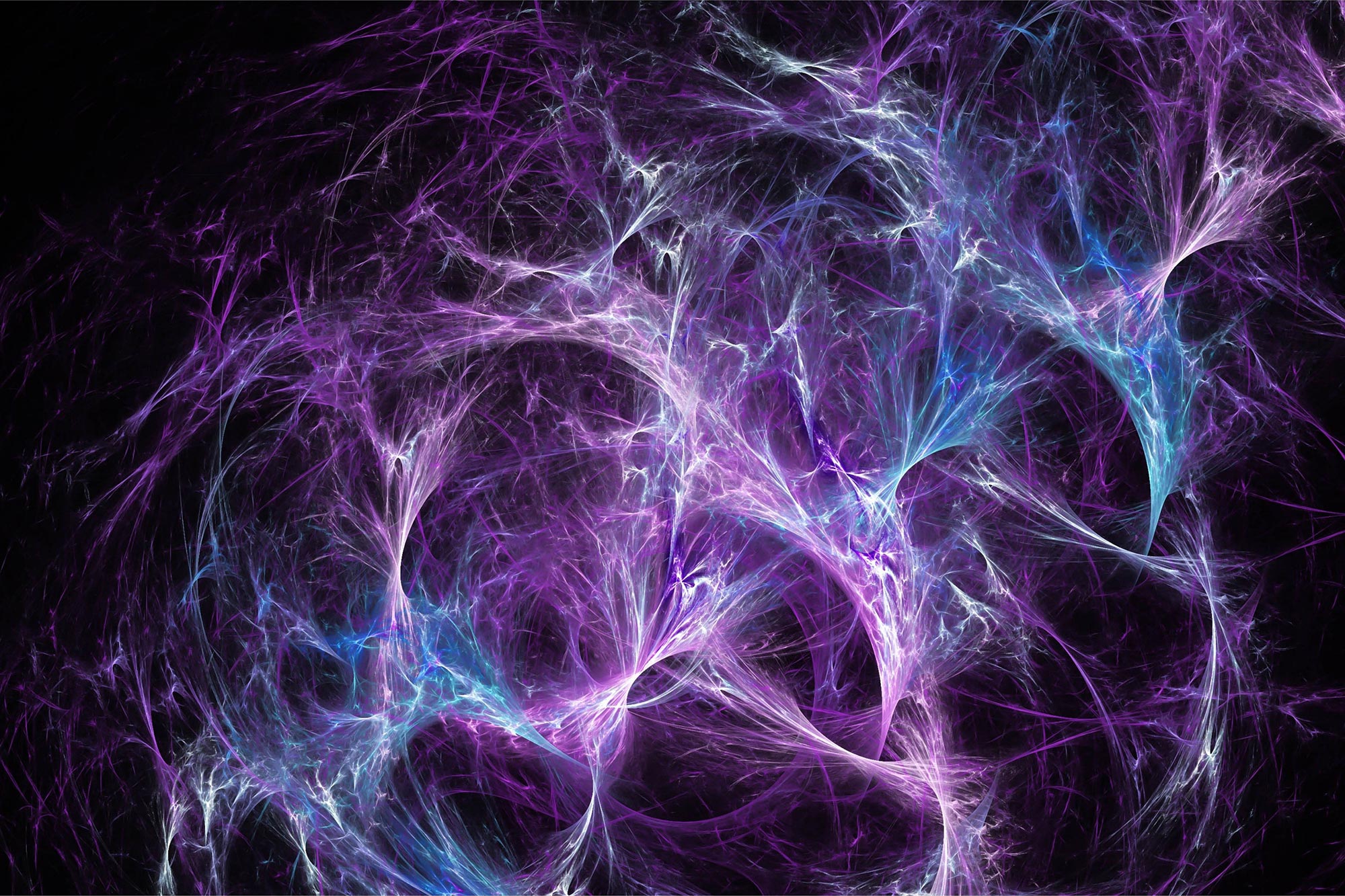

Part of the excitement is because the team’s work could provide a new case study for what is known as open quantum systems. It’s an intimidating name, but the concept can be thought of like the old adage, “nothing exists in a vacuum.”

Quantum physics has provided a framework to understand the incredibly tiny components of nature: atoms, molecules, and much, much more. This understanding has advanced virtually every realm of physical science, including energy, chemistry, and materials science.

Much of that framework, however, was developed considering simplified scenarios. The super small system of interest would be isolated in some way from the ocean of input provided by the world around it. In studying open quantum systems, physicists are venturing away from idealized scenarios and into the complexity of reality.

Open quantum systems are literally everywhere, but finding one that’s tractable enough to learn something from is challenging, especially in matters of the nucleus. Mittig and Ayyad saw potential in their loosely bound nuclei, and they knew that NSCL, and now FRIB, could help develop it.

NSCL, a National Science Foundation user facility that served the scientific community for decades, hosted the work of Mittig and Ayyad, which is the first published demonstration of the stand-alone reaccelerator technology. FRIB, a U.S. Department of Energy Office of Science user facility that officially launched on May 2, 2022, is where the work can continue in the future.

“Open quantum systems are a general phenomenon, but they’re a new idea in nuclear physics,” Ayyad said. “And most of the theorists who are doing the work are at FRIB.”

But this detective story is still in its early chapters. To close the case, researchers need more data and more evidence to make full sense of what they’re seeing. That means Ayyad and Mittig are still doing what they do best: investigating.

“We’re going ahead and making new experiments,” said Mittig. “The theme through all of this is that it’s important to have good experiments with strong analysis.”

Reference: “Evidence of a Near-Threshold Resonance in 11B Relevant to the β-Delayed Proton Emission of 11Be” Y. Ayyad, W. Mittig, T. Tang, B. Olaizola, G. Potel, N. Rijal, N. Watwood, H. Alvarez-Pol, D. Bazin, M. Caamaño, J. Chen, M. Cortesi, B. Fernández-Domínguez, S. Giraud, P. Gueye, S. Heinitz, R. Jain, B. P. Kay, E. A. Maugeri, B. Monteagudo, F. Ndayisabye, S. N. Paneru, J. Pereira, E. Rubino, C. Santamaria, D. Schumann, J. Surbrook, L. Wagner, J. C. Zamora and V. Zelevinsky, 1 June 2022, Physical Review Letters.

DOI: 10.1103/PhysRevLett.129.012501

NSCL was a national user facility funded by the National Science Foundation, supporting the mission of the Nuclear Physics program in the NSF Physics Division.