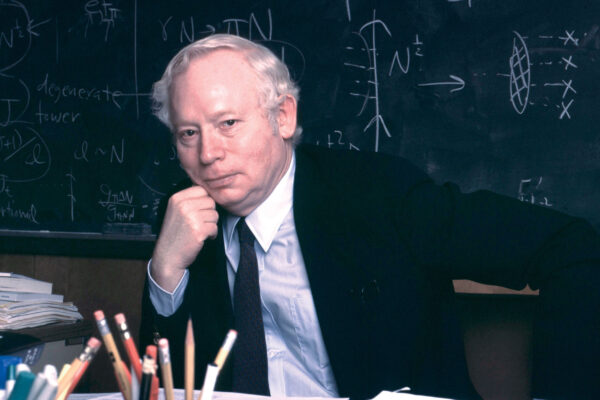

Physicist Steven Weinberg, January 28, 2008. Credit: Larry Murphy, The University of Texas at Austin

AUSTIN, Texas — Nobel laureate Steven Weinberg, a professor of physics and astronomy at The University of Texas at Austin, has died. He was 88.

One of the most celebrated scientists of his generation, Weinberg was best known for helping to develop a critical part of the Standard Model of particle physics, which significantly advanced humanity’s understanding of how everything in the universe — its various particles and the forces that govern them — relates. A faculty member for nearly four decades at UT Austin, he was a beloved teacher and researcher, revered not only by the scientists who marveled at his concise and elegant theories but also by science enthusiasts everywhere who read his books and sought him out at public appearances and lectures.

“The passing of Steven Weinberg is a loss for The University of Texas and for society. Professor Weinberg unlocked the mysteries of the universe for millions of people, enriching humanity’s concept of nature and our relationship to the world,” said Jay Hartzell, president of The University of Texas at Austin. “From his students to science enthusiasts, from astrophysicists to public decision makers, he made an enormous difference in our understanding. In short, he changed the world.”

“As a world-renowned researcher and faculty member, Steven Weinberg has captivated and inspired our UT Austin community for nearly four decades,” said Sharon L. Wood, provost of the university. “His extraordinary discoveries and contributions in cosmology and elementary particles have not only strengthened UT’s position as a global leader in physics, they have changed the world.”

Weinberg held the Jack S. Josey – Welch Foundation Chair in Science at UT Austin and was the winner of multiple scientific awards including the 1979 Nobel Prize in physics, which he shared with Abdus Salam and Sheldon Lee Glashow; a National Medal of Science in 1991; the Lewis Thomas Prize for the Scientist as Poet in 1999; and, just last year, the Breakthrough Prize in Fundamental Physics. He was a member of the National Academy of Sciences, Britain’s Royal Society, the American Academy of Arts and Sciences and the American Philosophical Society, which presented him with the Benjamin Franklin Medal in 2004.

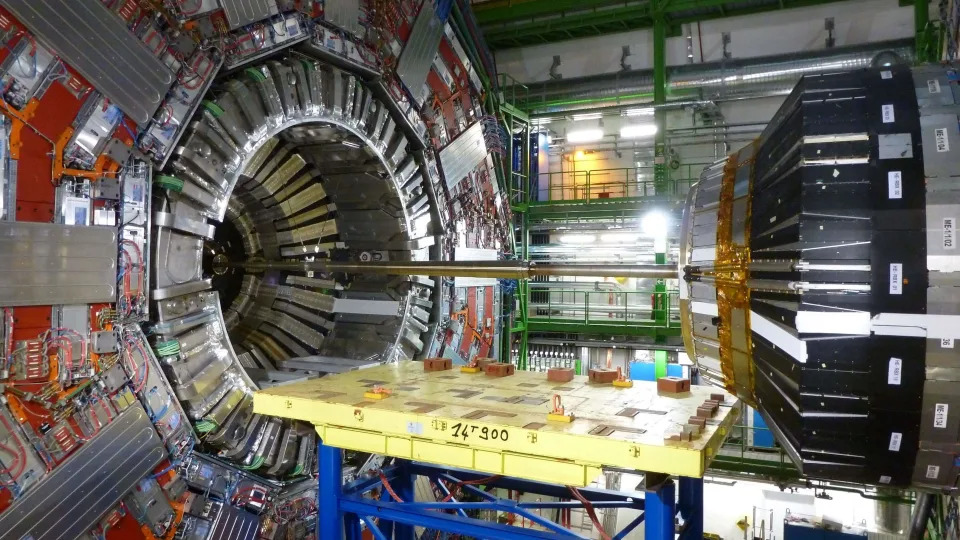

In 1967, Weinberg published a seminal paper laying out how two of the universe’s four fundamental forces — electromagnetism and the weak nuclear force — relate as part of a unified electroweak force. “A Model of Leptons,” at barely three pages, predicted properties of elementary particles that had not yet been observed (the W, Z and Higgs bosons) and theorized that “neutral weak currents” dictated how elementary particles interact with one another. Later experiments, including the 2012 discovery of the Higgs boson at the Large Hadron Collider (LHC) in Switzerland, would bear out each of his predictions.

Weinberg leveraged his renown and his science for causes he cared deeply about. He had a lifelong interest in curbing nuclear proliferation and served briefly as a consultant for the U.S. Arms Control and Disarmament Agency. He advocated for a planned superconducting supercollider with the capabilities of the LHC in the United States — a project that ultimately failed to receive funding in the 1990s after having been planned for a site near Waxahachie, Texas. He continued to be an ambassador for science throughout his life, for example, teaching UT Austin students and participating in events such as the 2021 Nobel Prize Inspiration Initiative in April and in the Texas Science Festival in February.

“When we talk about science as part of the culture of our times, we’d better make it part of that culture by explaining what we’re doing,” Weinberg explained in a 2015 interview published by Third Way. “I think it’s very important not to write down to the public. You have to keep in mind that you’re writing for people who are not mathematically trained but are just as smart as you are.”

By showing the unifying links behind weak forces and electromagnetism, which were previously believed to be completely different, Weinberg delivered the first pillar of the Standard Model, the half-century-old theory that explains particles and three of the four fundamental forces in the universe (the fourth being gravity). As critical as the model is in helping physical scientists understand the order driving everything from the first minutes after the Big Bang to the world around us, Weinberg continued to pursue, alongside other scientists, dreams of a “final theory” that would concisely and effectively explain current unknowns about the forces and particles in the universe, including gravity.

Weinberg wrote hundreds of scientific articles about general relativity, quantum field theory, cosmology and quantum mechanics, as well as numerous popular articles, reviews and books. His books include “To Explain the World,” “Dreams of a Final Theory,” “Facing Up,” and “The First Three Minutes.” Weinberg often was asked in media interviews to reflect on his atheism and how it related to the scientific insights he described in his books.

“If there is no point in the universe that we discover by the methods of science, there is a point that we can give the universe by the way we live, by loving each other, by discovering things about nature, by creating works of art,” he once told PBS. “Although we are not the stars in a cosmic drama, if the only drama we’re starring in is one that we are making up as we go along, it is not entirely ignoble that faced with this unloving, impersonal universe we make a little island of warmth and love and science and art for ourselves.”

Weinberg was a native of New York, and his childhood love of science began with a gift of a chemistry set and continued through teaching himself calculus while a student at Bronx High School of Science. The first in his family to attend college, he received a bachelor’s degree from Cornell University and a doctoral degree from Princeton University. He researched at Columbia University and the University of California, Berkeley, before serving on the faculty of Harvard University, the Massachusetts Institute of Technology and, since 1982, UT Austin.

He is survived by his wife, UT Austin law professor Louise Weinberg, and their daughter, Elizabeth.

With Steven Weinberg’s death, physics loses a titan

He advanced the theory of particles and forces, and wrote insightfully for a wider public

By Tom Siegfried

Contributing Correspondent

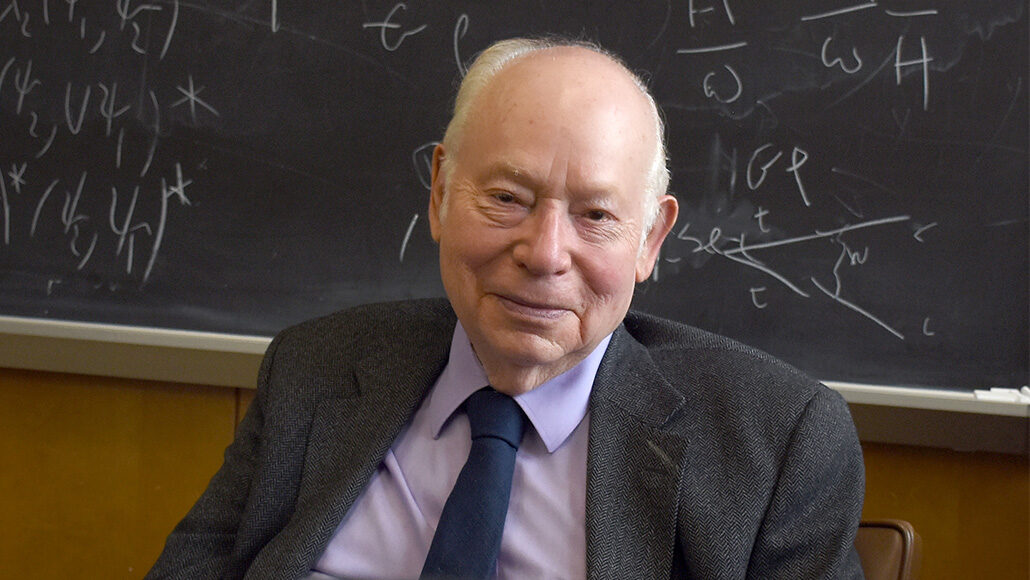

Steven Weinberg in his office at the University of Texas at Austin in 2018.

Mythology has its titans. So do the movies. And so does physics. Just one fewer now.

Steven Weinberg died July 23, at the age of 88. He was one of the key intellectual leaders in physics during the second half of the 20th century, and he remained a leading voice and active contributor and teacher through the first two decades of the 21st.

On lists of the greats of his era he was always mentioned along with Richard Feynman, Murray Gell-Mann and … well, just Feynman and Gell-Mann.

Among his peers, Weinberg was one of the most respected figures in all of physics or perhaps all of science. He exuded intelligence and dignity. As news of his death spread through Twitter, other physicists expressed their sorrow at the loss: “One of the most accomplished scientists of our age,” one commented, “a particularly eloquent spokesman for the scientific worldview.” And another: “One of the best physicists we had, one of the best thinkers of any variety.”

Weinberg’s Nobel Prize, awarded in 1979, was for his role in developing a theory unifying electromagnetism and the weak nuclear force. That was an essential contribution to what became known as the standard model of physics, a masterpiece of explanation for phenomena rooted in the math describing subatomic particles and forces. It’s so successful at explaining experimental results that physicists have long pursued every opportunity to find the slightest deviation, in hopes of identifying “new” physics that further deepens human understanding of nature.

Weinberg did important technical work in other realms of physics as well, and wrote several authoritative textbooks on such topics as general relativity and cosmology and quantum field theory. He was an early advocate of superstring theory as a promising path in the continuing quest to complete the standard model by unifying it with general relativity, Einstein’s theory of gravity.

Early on Weinberg also realized a desire to communicate more broadly. His popular book The First Three Minutes, published in 1977, introduced a generation of physicists and physics fans to the Big Bang–birth of the universe and the fundamental science underlying that metaphor. Later he wrote deeply insightful examinations of the nature of science and its intersection with society. And he was a longtime contributor of thoughtful essays in such venues as the New York Review of Books.

In his 1992 book Dreams of a Final Theory, Weinberg expressed his belief that physics was on the verge of finding the true fundamental explanation of reality, the “final theory” that would unify all of physics. Progress toward that goal seemed to be impeded by the apparent incompatibility of general relativity with quantum mechanics, the math underlying the standard model. But in a 1997 interview, Weinberg averred that the difficulty of combining relativity and quantum physics in a mathematically consistent way was an important clue. “When you put the two together, you find that there really isn’t that much free play in the laws of nature,” he said. “That’s been an enormous help to us because it’s a guide to what kind of theories might possibly work.”

Attempting to bridge the relativity-quantum gap, he believed, “pushed us a tremendous step forward toward being able to develop realistic theories of nature on the basis of just mathematical calculations and pure thought.”

Experiment had to come into play, of course, to verify the validity of the mathematical insights. But the standard model worked so well that finding deviations implied by new physics required more powerful experimental technology than physicists possessed. “We have to get to a whole new level of experimental competence before we can do experiments that reveal the truth beneath the standard model, and this is taking a long, long time,” he said. “I really think that physics in the style in which it’s being done … is going to eventually reach a final theory, but probably not while I’m around and very likely not while you’re around.”

He was right that he would not be around to see the final theory. And perhaps, as he sometimes acknowledged, nobody ever will. Perhaps it’s not experimental power that is lacking, but rather intellectual power. “Humans may not be smart enough to understand the really fundamental laws of physics,” he wrote in his 2015 book To Explain the World, a history of science up to the time of Newton.

Weinberg studied the history of science thoroughly, wrote books and taught courses on it. To Explain the World was explicitly aimed at assessing ancient and medieval science in light of modern knowledge. For that he incurred the criticism of historians and others who claimed he did not understand the purpose of history, which is to understand the human endeavors of an era on its own terms, not with anachronistic hindsight.

But Weinberg understood the viewpoint of the historians perfectly well. He just didn’t like it. For Weinberg, the story of science that was meaningful to people today was how the early stumblings toward understanding nature evolved into a surefire system for finding correct explanations. And that took many centuries. Without the perspective of where we are now, he believed, and an appreciation of the lessons we have learned, the story of how we got here “has no point.”

Future science historians will perhaps insist on assessing Weinberg’s own work in light of the standards of his times. But even if viewed in light of future knowledge, there’s no doubt that Weinberg’s achievements will remain in the realm of the Herculean. Or the titanic.

Tom Siegfried is a contributing correspondent.
