People looking at their phones. - Copyright Unsplash

By Rosie Frost. Last updated: 23/09/2020 - 13:

“The climate is changing, but there are solutions. See what the experts are saying and learn how you can help.”

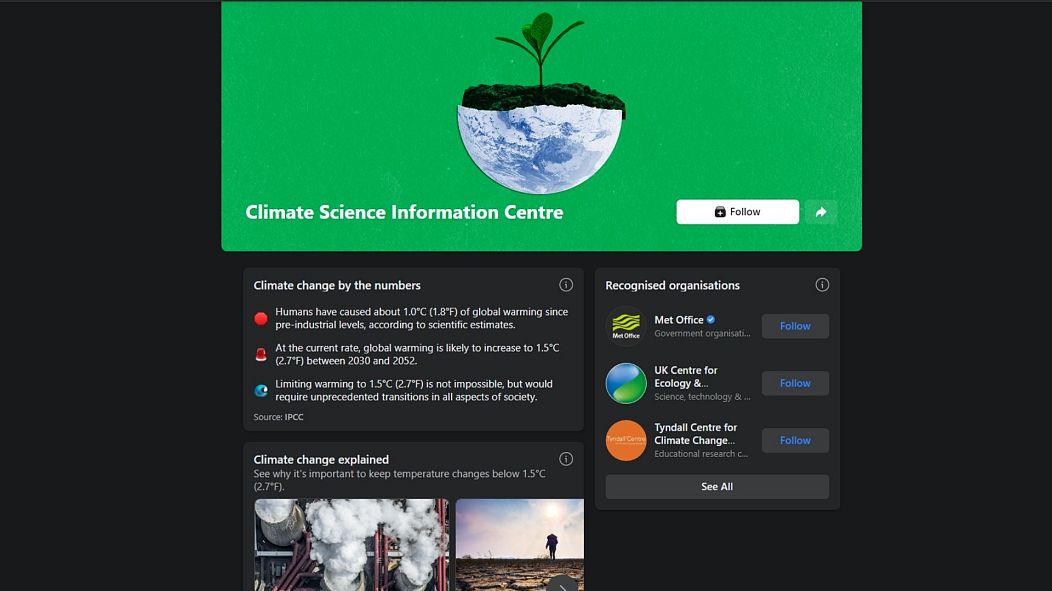

If you’ve logged in to Facebook in the last week, you might have seen this statement pop up on your news feed. It is part of the social media platform’s newly launched Climate Science Information Centre. Clicking the post takes you to a series of facts, articles and “everyday actions” related to the climate crisis.

Intended to “connect people to factual and up-to-date climate information”, Facebook’s new hub follows widespread concern from scientists and politicians about the rampant spread of misinformation on the platform.

A link to this new page will appear when people search for climate-related topics and when they view some posts. It is backed by information from leading organisations such as the IPCC and the UN Environment Programme. The company also says that it is working with independent fact-checking organisations to identify posts that contain fake news and then label them appropriately.

Once a post is determined to be false, Facebook says its distribution is reduced and warning labels will show when it is seen or shared. Accounts that repeatedly share misinformation will have their posts shown to fewer people.

Facebook's Climate Science Information Centre - Facebook

But for a coalition of environmental groups, the changes are too little, too late. “Facebook admits climate misinformation on its platforms is a rampant problem, but it is only taking half measures to stop it,” says a joint statement from groups including Greenpeace, Friends of the Earth and the Sierra Club.

“This new policy is a small step forward but does not address the larger climate disinformation crisis hiding in plain sight.”

Critics pointed out that the new measures do nothing to address a “climate loophole” on the platform, which labels climate denial content as “opinion” and makes it exempt from fact-checking.

The company says that it is trying to strike a balance between allowing free speech and tackling misinformation. It doesn't want to punish people for saying something that may not be factually correct, especially if it is unintentional.

But just days after the announcement, hundreds of accounts linked to Indigenous, environmental and social justice organisations were suspended. It happened after they co-hosted an online event last May protesting a natural gas pipeline that will cut through sovereign Wet’suwet’en land in British Columbia.

Even if Facebook can eventually get the balance right for environmental misinformation, the spread of disinformation, or the deliberate sharing of false information, is likely still on the rise.

HOW DOES MISINFORMATION SPREAD ON SOCIAL MEDIA?

The release of the Netflix docudrama The Social Dilemma last week has left a lot of people spinning. Our addiction to social media has long been recognised as bad for our mental health.

The revelation that the algorithms which deliver us endless streams of content are likely manipulating us to change the way we think and behave, however, was eye-opening for many.

It is these same algorithms that are being exploited to give a relatively small group of people a massive influence. Recent research commissioned by a coalition of environmental organisations found that a definable group of people was responsible for spreading the majority of false or partially true stories.

The research tracked climate denial conversations through hashtags on Twitter. The researchers discovered that this group of climate deniers was posting on average four times as much as climate scientists, experts and campaigners.

This high volume of posts helps the stories to get picked up by people with large followings and widespread influence, such as politicians and bloggers. Very vocal groups can cause a post to go viral and receive a lot of attention without always having to go through the fact-checking necessary to appear in more conventional media.

If stories get shared enough, they can even begin appearing in mainstream news. Virality is essential to the way that social media companies make money and some experts have argued that this means fighting misinformation is not in their financial interest.

WHAT IS AN ECHO CHAMBER?

The spread could be partly due to the ‘echo chambers’ that can form on social media.

"Echo chambers on social media are groups of like-minded users that friend/follow each other and share news and opinions that tend to be similar," explains Hywel Williams, Professor in Data Science at the University of Exeter.

Although he doesn't know of any studies that show conclusively that they help misinformation to spread, he believes it is likely that they do.

They form, Williams says, because people are more likely to follow or friend others that are similar to them. "When this happens in online networks, where there is a high level of choice in who to follow and what content to engage with, the aggregate effect is clusters of like-minded people," or echo chambers.

We can't be as selective in our real-world social interactions, because we don't get to choose our neighbours, our families or our colleagues. This means we are usually exposed to a more diverse set of perspectives.

"But online there is endless choice and we can be highly specific in who/what we engage with, so can screen out anything we don't like or disagree with," Williams says.

A lack of diverse views means that "agreeable" information might not be given the same scrutiny or critical examination. "A group where everyone thinks similarly is less likely to call out a view that is a small distortion or amplification of their existing viewpoint, or may be more willing to accept false information that fits into their world view."

"Because many users are unaware that they sit in an echo chamber, it appears to them that 'everyone' shares a similar view to their own," adds Williams. "Their views get reinforced and potentially more extreme; at the same time, alternative views are less visible and seem unsupported."

It is important to note, however, that echo chambers may also help to limit the spread of some misinformation, because their members tend to reject claims that come from the "wrong side".

Echo chambers affect all kinds of topics, but climate change is particularly susceptible because the conversation splits so readily into two sides: activists and sceptics.

"In my view, the bigger danger of echo chambers is that they promote polarisation and lead to more extreme views," says Williams. "That can prevent consensus and exclude moderate views. A fractured public debate on any issue is likely to promote misinformation amongst a variety of other problems."

With more people than ever using social media as their main source of news, the way that this shapes our opinions could be having a significant impact on political processes and public debates.

WHEN DISINFORMATION BECOMES DANGEROUS

Raging wildfires across Australia and the US in the last year have shown how misinformation can become dangerous. Rumours spread that, rather than the fires being a result of climate change or other environmental factors, a band of roaming far-left arsonists was setting them. In Australia, the arsonists were said to be climate change activists looking to create panic to further their own cause.

In the US, the supposed arsonists were linked to Antifa, playing into a larger conspiracy theory about the group that was spreading on social media in the country. As posts claiming that fires were being set by the far-left political group began to spread, local fire and police agencies had to issue statements because calls from people reporting suspicious activity were overwhelming their services.

Facebook eventually deleted one post propagating the unsubstantiated rumour, which had been shared more than 71,000 times. The replies to FBI Portland’s post refuting the claims make it clear that, even with this clarification from official sources, the unfounded claims spread far enough that people are still repeating them.

Potential violence caused by misinformation is one problem but confusion is another.

Although 97 per cent of climate scientists agree that human-generated greenhouse gases are causing global warming, taking action is often still politically contentious.

Confusion, fuelled by a barrage of conflicting information, leads to doubt. And this doubt perhaps benefits those who stand to lose the most from government action on climate change.