Could the Universe be a giant quantum computer?

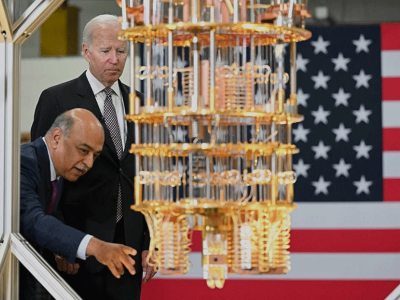

Physics might all be explained as the manipulation of bits of information. Credit: Getty

The death of US computer scientist and physicist Edward Fredkin this June went largely unnoticed, except for a belated obituary in the New York Times. Yet despite never quite becoming the household name that some of his contemporaries did, Fredkin had an outsized influence on both of the disciplines that he straddled.

Many still baulk at his central contention: that the laws of physics, and indeed those of the Universe itself, are essentially the result of a computer algorithm. But the ‘digital physics’ that Fredkin championed has gone from being beyond the pale to almost mainstream. “At the time it was considered a completely crazy idea that computation science could teach you anything about physics,” says Norman Margolus, a Canadian computer scientist who was a long-time collaborator of Fredkin’s and his sole physics PhD student. “The world has evolved from then, it’s all very respectable now.”

A dropout from the California Institute of Technology (Caltech) in Pasadena after his freshman year, Fredkin joined the US Air Force in 1953, becoming a fighter pilot and eventually the instructor for the elite corps of tight-formation jet pilots. The Air Force set him on to computer science, sending him to the Massachusetts Institute of Technology (MIT) Lincoln Laboratory in Lexington in 1956, to work on using computers to process radar information to guide pilots. Leaving the Air Force in 1958, Fredkin joined the pioneering computing company Bolt Beranek & Newman, based in Cambridge, Massachusetts — now part of Raytheon — where, among other projects, he wrote an early assembler language and participated in artificial-intelligence (AI) research. After founding his own company, Information International, specializing in imaging hardware and software, he came back to MIT in 1968 as a full professor, despite not even having an undergraduate degree.

Fredkin ended up directing Project MAC, a research institute that evolved into MIT’s Laboratory for Computer Science. The position was just one of a wide portfolio. “He did a lot of things in the real world,” says Margolus, now an independent researcher affiliated with MIT. These included running his company, designing a reverse-osmosis system for a desalination company and managing New England Television, the ABC affiliate in Boston, Massachusetts. Contractually limited to one day a week of outside activities, Fredkin was sometimes not seen for weeks at a time, says Margolus.

Forward thinking

In the late 1960s, AI was still a mostly theoretical concept, yet Fredkin was early to grasp the policy challenges that machines capable of learning and autonomous decision-making pose, including for national security. He championed international collaboration on AI research, recognizing that early consensus on how the technology should be used would prevent problems down the line. However, attempts to convene an international meeting of top thinkers in the field never quite materialized — a failure that resonates to this day.

In 1974, Fredkin left MIT and spent a year as a distinguished scholar at Caltech, where he befriended the physicists Richard Feynman and Stephen Hawking. He then accepted a tenured faculty position at Carnegie Mellon University in Pittsburgh, Pennsylvania, and later a second position at Boston University. It was from then that he started work on reversible computing.

Edward Fredkin saw few limits to what computing might explain. Credit: School of Computer Science/Carnegie Mellon University

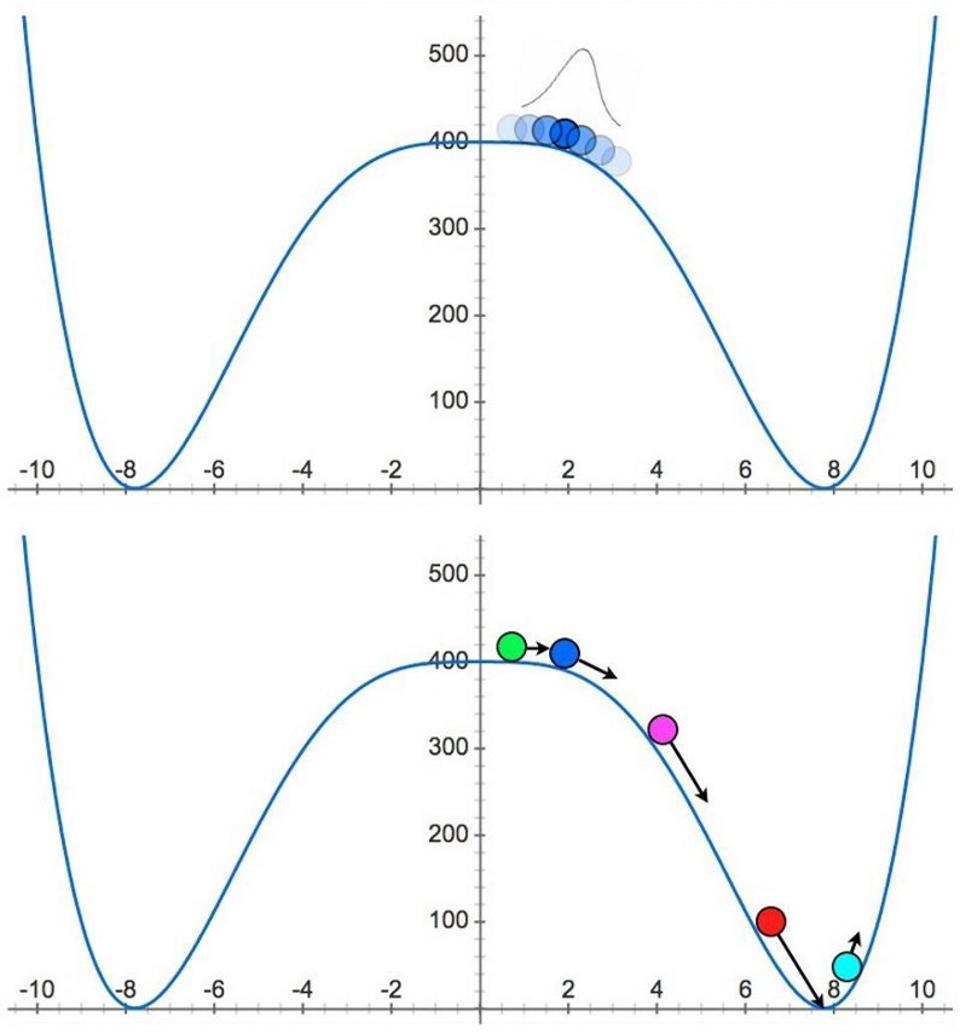

At the time, reversible computing was widely considered impossible. A conventional digital computer is assembled from an array of logic gates (ANDs, ORs, XORs and so on) in which, generally, two inputs become one output. The input information is erased, producing heat, and the process cannot be reversed. With Margolus and a young Italian electrical engineer, Tommaso Toffoli, Fredkin showed that certain gates with three inputs and three outputs, which became known as Fredkin and Toffoli gates, could be arranged such that all the intermediate steps of any possible computation could be preserved, allowing the process to be reversed on completion. As they set out in a seminal 1982 paper, a computer built with those gates might, theoretically at least, produce no waste heat and thus consume no energy [1].
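The difference between an ordinary gate and a reversible one can be made concrete with a few lines of Python. The sketch below uses the standard textbook definitions of the Toffoli gate (a doubly controlled NOT) and the Fredkin gate (a controlled swap); the function names and the brute-force reversibility check are illustrative choices, not taken from the 1982 paper.

```python
from itertools import product


def toffoli(a, b, c):
    """Doubly controlled NOT: flip c only when a and b are both 1."""
    return a, b, c ^ (a & b)


def fredkin(c, x, y):
    """Controlled swap: exchange x and y only when the control c is 1."""
    return (c, y, x) if c else (c, x, y)


def plain_and(a, b):
    """Ordinary AND: two inputs collapse into a single output bit."""
    return (a & b,)


def is_reversible(gate, n_inputs):
    """A gate is reversible if distinct inputs always give distinct outputs."""
    inputs = list(product((0, 1), repeat=n_inputs))
    outputs = {gate(*bits) for bits in inputs}
    return len(outputs) == len(inputs)


def and_via_toffoli(a, b):
    """AND without erasure: feed 0 on the third wire and read it back."""
    return toffoli(a, b, 0)[2]


def and_via_fredkin(a, b):
    """AND without erasure: with y = 0, the third output equals a AND b."""
    return fredkin(a, b, 0)[2]


if __name__ == "__main__":
    print("AND reversible?    ", is_reversible(plain_and, 2))
    print("Toffoli reversible?", is_reversible(toffoli, 3))
    print("Fredkin reversible?", is_reversible(fredkin, 3))
    for a, b in product((0, 1), repeat=2):
        print(a, b, and_via_toffoli(a, b), and_via_fredkin(a, b))
```

Both three-bit gates are their own inverse, so applying either one twice restores the original inputs. The AND results sit on an extra wire while the inputs survive on the others, which is the sense in which no information is erased.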

This seemed initially no more than a curiosity. Fredkin felt that the concept might help in the development of more efficient computers with less wasted heat, but there was no practical way to realize the idea fully using classical computers. In 1981, however, history took a new turn, when Fredkin and Toffoli organized the Physics of Computation Symposium at MIT. Feynman was among the luminaries present. In a now famous contribution, he suggested that, rather than trying to simulate quantum phenomena with conventional digital computers, some physical systems that exhibit quantum behaviour might be better tools.

This talk is widely seen as ushering in the age of quantum computers, which harness the full power of quantum mechanics to solve certain problems — such as the quantum-simulation problem that Feynman was addressing — much faster than any classical computer can. Four decades on, small quantum computers are now in development. The electronics, lasers and cooling systems needed to make them work consume a lot of power, but the quantum logical operations themselves are pretty much lossless.

Digital physics

Reversible computation “was an essential precondition really, for being able to conceive of quantum computers”, says Seth Lloyd, a mechanical engineer at MIT who in 1993 developed what is considered the first realizable concept for a quantum computer [2]. Although the IBM physicist Charles Bennett had also produced models of reversible computation, Lloyd adds, it was the zero-dissipation versions described by Fredkin, Toffoli and Margolus that ended up becoming the models on which quantum computation was built.

In their 1982 paper, Fredkin and Toffoli had begun developing their work on reversible computation in a rather different direction. It started with a seemingly frivolous analogy: a billiard table. They showed how mathematical computations could be represented by fully reversible billiard-ball interactions, assuming a frictionless table and balls that collide without losing energy.

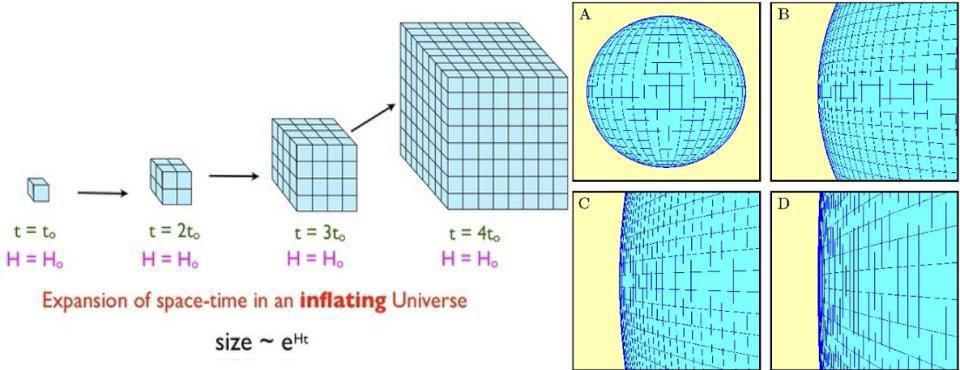

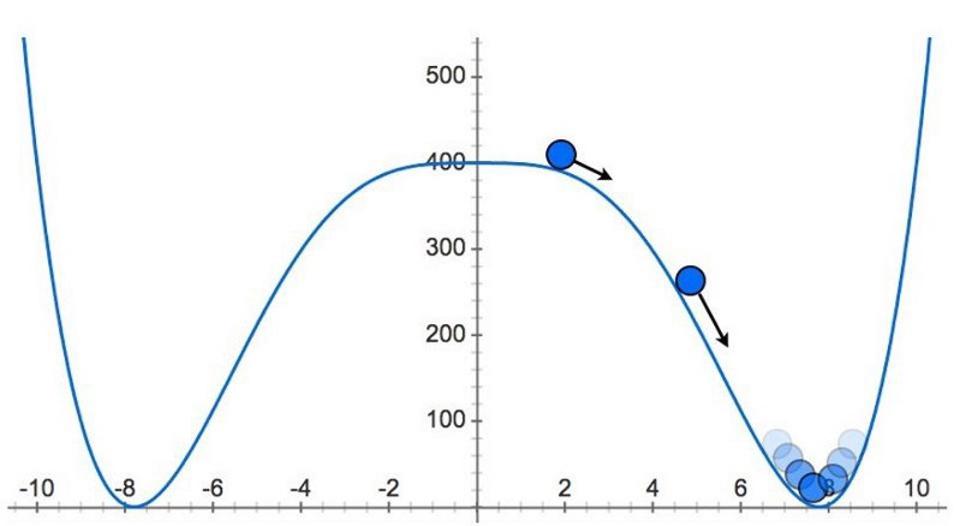

This physical manifestation of the reversible concept grew from Toffoli’s idea that computational concepts could be a better way to encapsulate physics than the differential equations conventionally used to describe motion and change. Fredkin took things even further, concluding that the whole Universe could actually be seen as a kind of computer. In his view, it was a ‘cellular automaton’: a collection of computational bits, or cells, that can flip states according to a defined set of rules determined by the states of the cells around them. Over time, these simple rules can give rise to all the complexities of the cosmos — even life.
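To give a feel for what a cellular automaton is, here is a minimal sketch of the simplest kind: a one-dimensional row of two-state cells, each updated from its own state and those of its two neighbours. The rule numbering follows the common convention for elementary cellular automata; the wrap-around edges, the grid width and the default choice of Rule 110 are assumptions made for illustration and are not Fredkin's own model of the Universe.

```python
def step(cells, rule=110):
    """Advance a one-dimensional, two-state cellular automaton by one tick.

    Each new cell depends only on its left neighbour, itself and its right
    neighbour. The 8 bits of `rule` give the new state for each of the 8
    possible neighbourhoods. Edges wrap around (an illustrative assumption).
    """
    n = len(cells)
    new_cells = []
    for i in range(n):
        left, centre, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (centre << 1) | right
        new_cells.append((rule >> index) & 1)
    return new_cells


def run(cells, steps, rule=110):
    """Return the list of successive generations, starting with `cells`."""
    history = [list(cells)]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    width = 31
    start = [0] * width
    start[-1] = 1  # a single live cell at the right-hand edge
    for row in run(start, 15):
        print("".join("#" if cell else "." for cell in row))
```

Running the script prints one row per generation. Even this tiny rule table produces intricate, non-repeating structure from a single live cell, and Rule 110 in particular has been shown to be capable of universal computation.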

He wasn’t the first to play with such ideas. Konrad Zuse, a German civil engineer who, before the Second World War, had developed one of the first programmable computers, suggested in his 1969 book Calculating Space that the Universe could be viewed as a classical digital cellular automaton. Fredkin and his associates developed the concept with intense focus, spending years searching for examples of how simple computational rules could generate all the phenomena associated with subatomic particles and forces [3].

Not everyone was impressed. Margolus recounts that the renowned physicist Philip Morrison, then also on the faculty at MIT, told Fredkin’s students that Fredkin was a computer scientist, so he thought that the world was a big computer, but if he had been a cheese merchant, he would think the world was a big cheese. When the British computer scientist Stephen Wolfram proposed similar ideas in his 2002 book A New Kind of Science, Fredkin reacted by saying “Wolfram is the first significant person to believe in this stuff. I’ve been very lonely.”

In truth, however, Wolfram was not alone in exploring the ideas. Whereas Fredkin himself initially used the phrase ‘digital physics’, and later ‘digital philosophy’, modern variations on the theme have used terms such as ‘pancomputationalism’ and ‘digitalism’. They have been espoused by researchers including Dutch physics Nobel laureate Gerard ’t Hooft and US physicist John Wheeler, whose famous “it from bit” saying is a pithy expression of the hypothesis.

Into the quantum realm

Some, including Margolus, have continued to develop the classical version of the theory. Others have concluded that a classical computational model could not be responsible for the complexities of the Universe that we observe. According to Lloyd, Fredkin’s original digital-universe theory has “very serious impediments towards a classical digital universe being able to comprehend quantum mechanical phenomena”. But swap the classical computational rules of Fredkin’s digital physics for quantum rules, and a lot of those problems melt away. You can capture intrinsic features of a quantum Universe such as entanglement between two quantum states separated in space in a way that a theory built on classical ideas can’t.

Lloyd espoused this idea in a series of papers starting in the 1990s, as well as in his 2006 book Programming the Universe. It culminated in a comprehensive account of how rules of quantum computation might explain the known laws of physics, including elementary particle theory, the standard model of particle physics and perhaps even the holy grail of fundamental physics: a quantum theory of gravity [4].

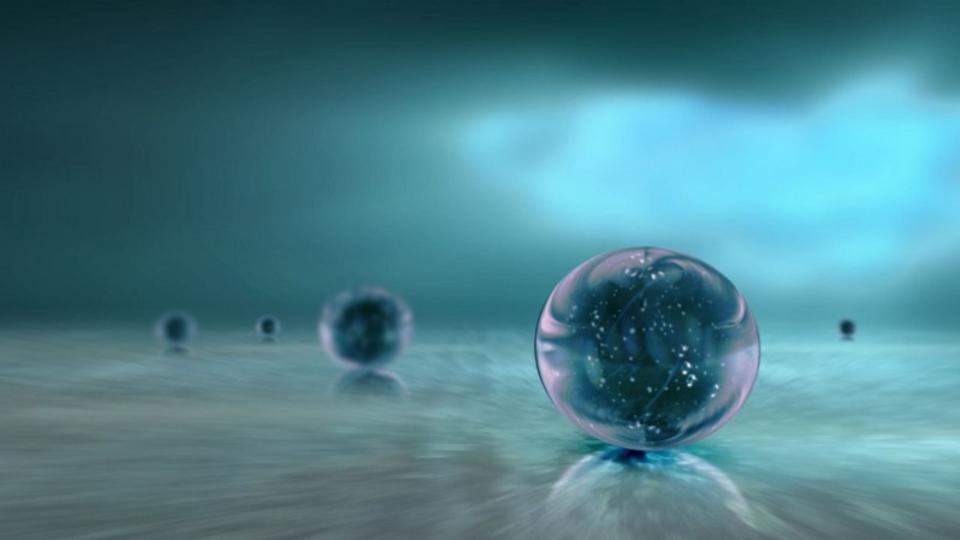

Such proposals are quite distinct from the more recent idea that we live in a computer simulation, advanced by the Swedish philosopher Nick Bostrom at the University of Oxford, UK, among others [5]. Whereas the digital Universe posits that the basic initial conditions and rules of the computational universe arose naturally, much as particles and forces of traditional physics arose naturally in the Big Bang and its aftermath, the simulation hypothesis posits that the Universe was all deliberately constructed by some highly advanced intelligent alien programmers, perhaps as some kind of grand experiment, or even as a kind of game. That would be an implausibly involved effort, in Lloyd’s view.

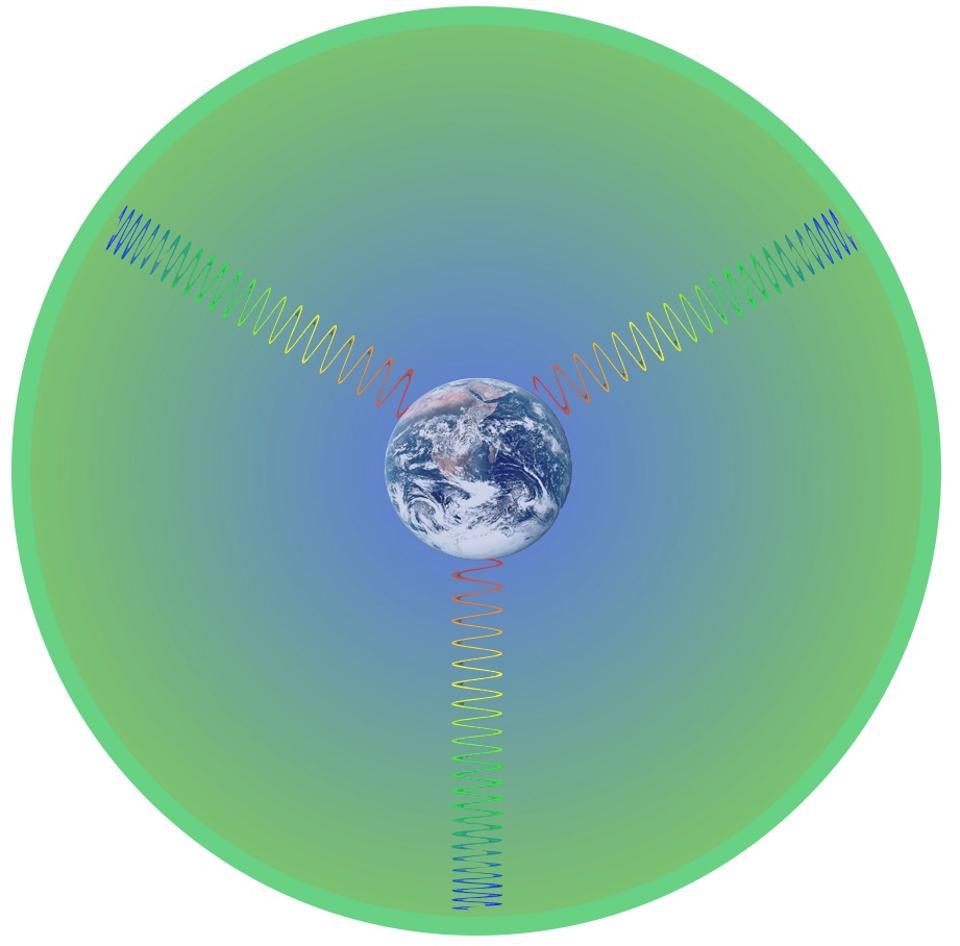

The basic idea of a digital Universe might just be testable. For the cosmos to have been produced by a system of data bits at the tiny Planck scale (a scale at which present theories of physics are expected to break down), space and time must be made up of discrete, quantized entities. The effect of such a granular space-time might show up in tiny differences, for example, in how long it takes light of various frequencies to propagate across billions of light years. Really pinning down the idea, however, would probably require a quantum theory of gravity that establishes the relationship between the effects of Einstein’s general theory of relativity at the macro scale and quantum effects on the micro scale. This has so far eluded theorists. Here, the digital universe might just help itself out. Favoured routes towards quantum theories of gravitation are gradually starting to look more computational in nature, says Lloyd. One example is the holographic principle introduced by ’t Hooft, which holds that our world is a projection of a lower-dimensional reality. “It seems hopeful that these quantum digital universe ideas might be able to shed some light on some of these mysteries,” says Lloyd.

That would be just the latest twist in an unconventional story. Fredkin himself thought that his lack of a typical education in physics was, in part, what enabled him to arrive at his distinctive views on the subject. Lloyd tends to agree. “I think if he had had a more conventional education, if he’d come up through the ranks and had taken the standard physics courses and so on, maybe he would have done less interesting work.”

Nature 620, 943–945 (2023)

doi: https://doi.org/10.1038/d41586-023-02646-x

References

1. Fredkin, E. & Toffoli, T. Int. J. Theor. Phys. 21, 219–253 (1982).

2. Lloyd, S. Science 261, 1569–1571 (1993).

3. Fredkin, E. Physica D 45, 254–270 (1990).

4. Lloyd, S. Preprint at https://arxiv.org/abs/1312.4455 (2013).

5. Bostrom, N. Philos. Q. 53, 243–255 (2003).