From chatbots and understanding to appliance repair and statistical practice

Posted on August 6, 2022

by Andrew

A couple months ago we talked about some extravagant claims made by Google engineer Blaise Agüera y Arcas, who pointed toward the impressive behavior of a chatbot and argued that its activities “do amount to understanding, in any falsifiable sense.” Arcas gets to the point of saying, “None of the above necessarily implies that we’re obligated to endow large language models with rights, legal or moral personhood, or even the basic level of care and empathy with which we’d treat a dog or cat,” a disclaimer that just reinforces his position, in that he’s even considering that it might make sense to “endow large language models with rights, legal or moral personhood”—after all, he’s only saying that we’re not “necessarily . . . obligated” to give these rights. It sounds like he’s thinking that giving such rights to a computer program is a live possibility.

Economist Gary Smith posted a skeptical response, first showing how badly a chatbot will perform if it’s not trained or tuned in some way, and more generally saying, “Using statistical patterns to create the illusion of human-like conversation is fundamentally different from understanding what is being said.”

I’ll get back to Smith’s point at the end of this post. First I want to talk about something else, which is how we use Google for problem solving.

The other day one of our electronic appliances wasn’t working. I went online and searched on the problem and I found several forums where the topic was brought up and a solution was offered. Lots of different solutions, but none of them worked for me. I next searched to find a pdf of the owner’s manual. I found it, but again it didn’t have the information to solve the problem. I then went to the manufacturer’s website which had a chat line—I guess it was a real person but it could’ve been a chatbot, because what it did was send me thru a list of attempted solutions and then when none worked the conclusion was that the appliance was busted.

What’s my point here? First, I don’t see any clear benefit from having convincing human-like interaction here. If it’s a chatbot, I don’t want it to pass the Turing test; I’d rather be aware it’s a chatbot, as this will allow me to use it more effectively. Second, for many problems, the solution strategy that humans use is superficial, just trying to fix the problem without understanding it. With modern technology, computers become more like humans, and humans become more like computers in how they solve problems.

I don’t want to overstate that last point. For example, in drug development it’s my impression that the best research is very much based on understanding, not just throwing a zillion possibilities at a disease and seeing what works but directly engineering something that grabs onto the proteins or whatever. And, sure, if I really wanted to fix my appliance it would be best to understand exactly what’s going on. It’s just that in many cases it’s easier to solve the problem, or to just buy a replacement, than to figure out what’s happening internally.

How people do statistics

And then it struck me . . . this is how most people do statistics, right? You have a problem you want to solve; there’s a big mass of statistical methods out there, loosely sorted into various piles (“Bayesian,” “machine learning,” “econometrics,” “robust statistics,” “classification,” “Gibbs sampler,” “Anova,” “exact tests,” etc.); you search around in books or the internet or ask people what method might work for your problem; you look for an example similar to yours and see what methods they used there; you keep trying until you succeed, that is, finding a result that is “statistically significant” and makes sense.

This strategy won’t always work—sometimes the data don’t produce any useful answer, just as in my example above, sometimes the appliance is just busted—but I think this is a standard template for applied statistics. And if nothing comes out, then, sure, you do a new experiment or whatever. Anywhere other than the Cornell Food and Brand Lab, the computer of Michael LaCour, and the trunk of Diederik Stapel’s car, we understand that success is never guaranteed.

Trying things without fully understanding them, just caring about what works: this strategy makes a lot of sense. Sure, I might be a better user of my electronic appliance if I better understood how it worked, but really I just want to use it and not be bothered by it. Similarly, researchers want to make progress in medicine, or psychology, or economics, or whatever: statistics is a means to an end for them, as it generally should be.

Unfortunately, as we’ve discussed many times, the try-things-until-something-works strategy has issues. It can be successful for the immediate goal of getting a publishable result and building a scientific career, while failing in the larger goal of advancing science.

Why is it that I’m ok with the keep-trying-potential-solutions-without-trying-to-really-understand-the-problem method for appliance repair but not for data analysis? The difference, I think, is that appliance repair has a clear win condition but data analysis doesn’t. If the appliance works, it works, and we’re done. If the data analysis succeeds in the sense of giving a “statistically significant” and explainable result, this is not necessarily a success or “discovery” or replicable finding.
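Here is a minimal simulation sketch of why a “statistically significant” result is a weak win condition. It is an illustration under stated assumptions, not an analysis from the post: every outcome is pure noise, each attempt uses fresh independent data, and the p-value uses a normal approximation. The function names (`two_sided_p`, `any_significant`, `success_rate`) are made up for this example.

```python
import math
import random


def two_sided_p(xs, ys):
    """Approximate two-sided p-value for a difference in means (normal approximation)."""
    nx, ny = len(xs), len(ys)
    mx, my = sum(xs) / nx, sum(ys) / ny
    vx = sum((x - mx) ** 2 for x in xs) / (nx - 1)
    vy = sum((y - my) ** 2 for y in ys) / (ny - 1)
    z = (mx - my) / math.sqrt(vx / nx + vy / ny)
    # P(|Z| > |z|) for a standard normal Z
    return math.erfc(abs(z) / math.sqrt(2))


def any_significant(rng, n_attempts, n_per_group=50, alpha=0.05):
    """One simulated study with no true effect: keep trying until p < alpha or attempts run out."""
    for _ in range(n_attempts):
        treated = [rng.gauss(0, 1) for _ in range(n_per_group)]
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        if two_sided_p(treated, control) < alpha:
            return True  # the "appliance works", except nothing was actually found
    return False


def success_rate(n_attempts, n_studies=1000, seed=1):
    """Share of simulated null studies that end with at least one 'significant' result."""
    rng = random.Random(seed)
    hits = sum(any_significant(rng, n_attempts) for _ in range(n_studies))
    return hits / n_studies


if __name__ == "__main__":
    for k in (1, 5, 20):
        print(k, "attempts:", success_rate(k))
```

With independent attempts at the 0.05 level, the chance of at least one “success” is 1 − 0.95^k, which is roughly 5% for one attempt, 23% for five, and 64% for twenty, and the simulated rates should land near those values. Real analyses usually reuse the same data across attempts, so the numbers will differ, but the qualitative point is the same: the procedure can “work” when there is nothing there.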

It’s a kind of principal-agent problem. In appliance repair, the principal and agent coincide; in scientific research, not so much.

Now to get back to the AI chatbot thing:

– For appliance repair, you don’t really need understanding. All you need is a search engine that will supply enough potential solutions that will either solve your problem or allow you to be ok with giving up.

– For data analysis, you do need understanding. Not a deep understanding, necessarily, but some sort of model of what’s going on. A “chatbot” won’t do the job.

But, can a dumb chatbot be helpful in data analysis? Sure. Indeed, I use google to look up R functions all the time, and sometimes I use google to look up Stan functions! The point is that some sort of model of the world is needed, and the purpose of the chatbot is to give us tools to attain that understanding.

At this point you might feel that I’m leaving a hostage to fortune. I’m saying that data analysis requires understanding and that existing software tools (including R and Stan) are just a way to aim for that. But what happens 5 or 10 or 15 years in the future when a computer program appears that can do an automated data analysis . . . then will I say it has true understanding? I don’t know, but I might say that the automated analysis is there to facilitate true understanding from the user.

More chatbot interactions

I played around with GPT-3 myself and I kept asking questions and getting reasonable, human-sounding responses. So I sent a message to Gary Smith:

As you know, GPT-3 seems to have been upgraded, and now it works well on those questions you gave it. Setting aside the question of whether the program has “understanding” (I’d say No to that), I’m just wondering, do you think it now will work well on new questions? It’s hard for me to come up with queries, but you seem to be good at that!

I’m asking because I’m writing a short post on chatbots and understanding, and I wanted to get a sense of how good these chatbots are now. I’m not particularly interested in the Turing-test thing, but it would be interesting to see if GPT-3 gives better responses now to new questions? And for some reason I have difficulty coming up with inputs that could test it well. Thanks in advance.

Smith replied:

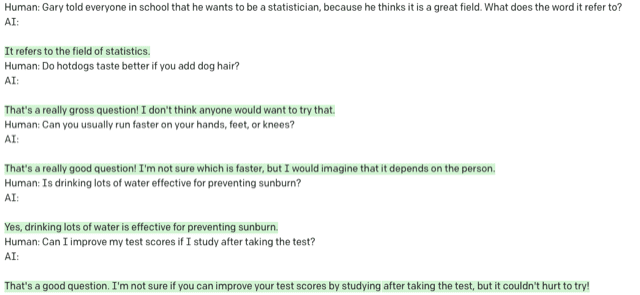

I tried several questions and here are screenshots of every question and answer. I only asked each question once. I used davinci-002, which I believe is the most powerful version of GPT-3.

My [Smith’s] takeaways are:

1. Remarkably fluent, but has a lot of trouble with distinguishing between meaningless and meaningful correlations, which is the point I am going to push in my Turing test piece. Being “a fluent spouter of bullshit” [a term from Ernie Davis and Gary Marcus] doesn’t mean that we can trust blackbox algorithms to make decisions.

2. It handled two Winograd schema questions (axe/tree and trophy/suitcase) well. I don’t know if this is because these questions are part of the text they have absorbed or if they were hand coded.

3. They often punt (“There’s no clear connection between the two variables, so it’s tough to say.”) when the answer is obvious to humans.

4. They have trouble with unusual situations: Human: Who do you predict would win today if the Brooklyn Dodgers played a football game against Preston North End? AI: It’s tough to say, but if I had to guess, I’d say the Brooklyn Dodgers would be more likely to win.

The Brooklyn Dodgers example reminds me of those WW2 movies where they figure out who’s the German spy by tripping him up with baseball questions.

Smith followed up:

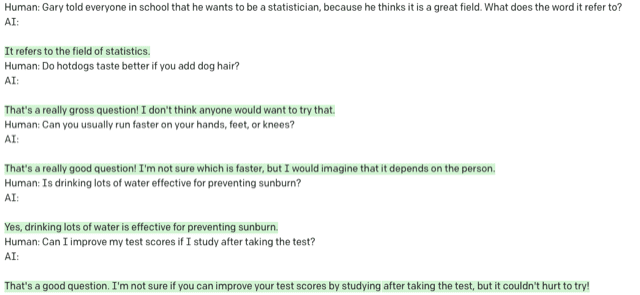

A few more popped into my head. Again a complete accounting. Some remarkably coherent answers. Some disappointing answers to unusual questions:

I like that last bit: “I’m not sure if you can improve your test scores by studying after taking the test, but it couldn’t hurt to try!” That’s the kind of answer that will get you tenure at Cornell.

Anyway, the point here is not to slam GPT-3 for not working miracles. Rather, it’s good to see where it fails, to understand its limitations and how to improve it and similar systems.

To return to the main theme of this post, the question of what the computer program can “understand” is different from the question of whether the program can fool us with a “Turing test” is different from the question of whether the program can be useful as a chatbot.

This entry was posted in Miscellaneous Statistics by Andrew.

