Why the 2010s were the Facebook Decade

Facebook grew 600% in 10 years, worming its way into basically everything. How?

KATE COX - 12/26/2019 ARSTECHNICA.COM

Facebook CEO Mark Zuckerberg testifying before Congress in April 2018. It wasn't his only appearance in DC this decade.

By the end of 2009, Facebook—under the control of its then 25-year-old CEO, Mark Zuckerberg—was clearly on the rise. It more than doubled its subscriber base during the year, from 150 million monthly active users in January to 350 million in December. That year it also debuted several key features, including the "like" button and the ability to @-tag your friends in posts and comments. The once-novel platform, not just for kids anymore, was rapidly becoming mainstream.

Here at the end of 2019, Facebook—under the control of its now 35-year-old CEO, Mark Zuckerberg—boasts 2.45 billion monthly active users. That's a smidge under one-third of the entire human population of Earth, and the number is still going up every quarter (albeit more slowly than it used to). This year it has also debuted several key features, mostly around ideas like "preventing bad nation-state actors from hijacking democratic elections in other countries."

Love it or hate it, then, Facebook is arguably a major facet of life not just in the United States but worldwide as we head into the next roaring '20s. But how on Earth did one social network, out of the many that launched and faded in the 2000s, end up taking over the world?

The Social Network debuted in 2010.

Setting the stage: From the dorms to "The Facebook Election"

Zuckerberg infamously started Facebook at Harvard in February 2004. Adoption was swift among Harvard undergrads, and the nascent Facebook team in the following months began allowing students at other Ivy League and Boston-area colleges to register, followed by a rapid roll-out to other US universities.

By summer of 2004, Facebook was incorporated as a business and had a California headquarters near Stanford University. It continued growing through 2005, allowing certain high schools and businesses to join, as well as US and international universities. The company wrapped up 2005 with about 6 million members, but it truly went big in September 2006, when the platform opened up to everyone.

But the advent of the smartphone era, which took off in 2007 when the first iPhone launched, really kicked Facebook up several gears. In 2008, the company marked two major milestones.

First, Facebook reached the 100 million user mark—a critical mass where enough of "everyone" was using the platform that it became fairly easy to talk anyone who wasn't using it yet into giving it a try. Second: Facebook found its niche in political advertising, when Barack Obama won what pundits started to call "The Facebook Election."

"Obama enjoyed a groundswell of support among, for lack of a better term, the Facebook generation," US News and World Report wrote at the time, describing Obama's popularity with voters under 25. "He will be the first occupant of the White House to have won a presidential election on the Web."

"Like a lot of Web innovators, the Obama campaign did not invent anything completely new," late media columnist David Carr wrote for The New York Times. "Instead, by bolting together social networking applications under the banner of a movement, they created an unforeseen force to raise money, organize locally, fight smear campaigns and get out the vote that helped them topple the Clinton machine and then John McCain and the Republicans."

"Obama's win means future elections must be fought online," The Guardian declaimed, adding presciently:

Facebook was not unaware of its suddenly powerful role in American electoral politics. During the presidential campaign, the site launched its own forum to encourage online debates about voter issues. Facebook also teamed up with the major television network, ABC, for election coverage and political forums. Another old media outlet, CNN, teamed up with YouTube to hold presidential debates.

If the beginning of the Obama years in 2008 was one kind of landmark for Facebook, their end, in 2016, was entirely another. But first, the company faced several other massive milestones.

2012: The IPO

Facebook took the Internet world by storm in 2004, but it didn't take on the financial world until 2012, when it launched its initial public offering of stock.

After months of speculative headlines, the Facebook IPO took place Friday, May 18, 2012 to much fanfare. The company priced shares at $38 each, raising about $16 billion. The shares hit $42.05 when they actually started trading. A good start, to be sure, but then everything went pear-shaped.

Right off the bat, the NASDAQ itself suffered technical errors that prevented Facebook shares from being sold for half an hour. Shares finally started to move at 11:30am ET, but then the overall market promptly fell, leaving FB ending the day just about where it began at $38.23. Everything about it was considered a flop.

The failures at NASDAQ and among banks prompted a series of investigations, lawsuits, settlements, and mea culpas that lingered for years. But post-mortems on the IPO eventually made clear that one of the many problems plaguing the NASDAQ in the moment was simply one of scale: Facebook was too big for the system to handle. The company sold 421.2 million shares in its IPO—the largest technology IPO ever at that time and still one of the ten highest-value IPOs in US history.

In August 2012, Facebook stock dropped to about $18. "Social media giant struggles to show that it can make money from mobile ads," an Ars story said at the time. "Facebook has struggled in recent months to show that it can effectively make money on mobile devices—even though some analysts, in the wake of the company’s adequate quarterly earnings report, said that they expected Facebook to turn things around."

The company did, indeed, turn things around, and the flop was soon forgotten.

Obvious example of an Instagram influencer.

2012: Instagram

In April 2012, Facebook said it would pay a whopping $1 billion to acquire an up-and-coming photo service: Instagram.

The fledgling photo service had about 27 million loyal iOS users at the time, and the company had recently launched an Android version of the app. "Zuckerberg didn't say how Facebook will make money on Instagram, which doesn't yet have advertising," Ars reported at the time.

The headline TechCrunch ran with, however, has proven to be the most relevant and prescient: "Facebook buys Instagram for $1 billion, turns budding rival into its standalone photo app," the tech business site wrote.

Instagram passed the 1 billion user mark in 2018, and advertising on the platform is now an enormous business, chock-full of "influencers" who flog legacy brands and new upstarts alike. An entire business model based on the Instagram influencer aesthetic flourished, launching new companies selling all things aspirational: fashion, luggage, lingerie, and more.

2013: Atlas

Among the company's dozens of acquisitions, one in particular stands out for allowing Facebook to solidify its grip on the world and its advertising markets.

In 2013, Facebook announced it would acquire a product called the Atlas Advertiser Suite from Microsoft; media reports pegged the purchase price around $100 million.

In Facebook's words, the acquisition provided an "opportunity" for "marketers and agencies" to get "a holistic view of campaign performance" across "different channels."

Translated back out of marketese and into plain English, Facebook acquired Atlas to chase the holy grail of online advertising: tracking its effectiveness in the physical world. Facebook absorbed what it needed from Atlas and then relaunched the platform in 2014 promising "people-based marketing" across devices and platforms. As yours truly put it at the time, "That’s a fancy way of saying that because your Facebook profile is still your Facebook profile no matter what computer or iPad or phone you’re using, Facebook can track your behavior across all devices and let advertisers reach you on all of them."

Facebook phased out the Atlas brand in 2017, but its products, the measurement tools that made it so valuable to begin with, lived on. They were folded into the Facebook brand and made available as part of the Facebook advertiser management tools, such as the Facebook Pixel.

2013-2014: Onavo and WhatsApp

Late in 2013, Facebook spent about $200 million to acquire an Israel-based startup called Onavo. The dry press release at the time described Onavo as a provider of mobile utilities and "the first mobile market intelligence service based on real engagement data."

One of the mobile utilities Onavo provided was a VPN called Onavo Protect. In 2017, however, the Wall Street Journal reported that Onavo wasn't keeping all that Web traffic to itself, the way a VPN is supposed to. Instead, when users opened an app or website inside the VPN, Onavo was redirecting the traffic through Facebook servers that logged the data.

Facebook used that data to spot nascent competition before it could bloom. In the same report, the WSJ noted that the data captured from Onavo directly informed Facebook's largest-ever acquisition: its 2014 purchase of messaging platform WhatsApp for $19 billion.

"Onavo showed [WhatsApp] was installed on 99 percent of all Android phones in Spain—showing WhatsApp was changing how an entire country communicated," sources told the WSJ. In 2018, Facebook confirmed the WSJ's reporting to Congress, admitting that it used the aggregated data gathered and logged by Onavo to analyze consumers' use of other apps.

Apple pulled Onavo from its app store in 2018, with Google following suit a few months later. Facebook finally killed the service in May of this year.

The WSJ this year found that competitor Snapchat kept a dossier, dubbed "Project Voldemort," recording the ways Facebook used information from Onavo, together with other data, to try quashing its business.

WhatsApp, meanwhile, reached the 1.5 billion user mark in 2017, and it remains the most broadly used messaging service in the world.

2016: The Russia Election

The 2016 US presidential campaign season was... let's be diplomatic and call it a hot mess. There were many, many contributing factors to Donald Trump's eventual win and inauguration as the 45th president of the United States. At the time, the idea that extensive Russian interference might be one of those factors felt to millions of Americans like a farfetched tinfoil hat conspiracy theory, floated only to deflect from other concerns.

FURTHER READING Massive scale of Russian election trolling revealed in draft Senate report

By the weeks after the election, however, the involvement of Russian actors was well known. US intelligence agencies said they were "confident" Russia organized hacks that influenced the election. CNN reported in December 2016 that in addition to the hacks, "There is also evidence that entities connected to the Russian government were bankrolling 'troll farms' that spread fake news about Clinton."

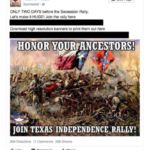

And boy, were they ever. Above you can see a selection of Russian-bought Facebook and Instagram ads—released to the public in 2018 by the House of Representatives' Permanent Select Committee on Intelligence—designed to sow doubt among the American population in the run-up to the election. And earlier this year, the Senate Intelligence Committee dropped its final report (PDF) detailing how Russian intelligence used Facebook, Instagram, and Twitter to spread misinformation with the specific goal of boosting the Republican Party and Donald Trump in the 2016 election.

The Senate report is damning. "The Committee found that Russia's targeting of the 2016 US presidential election was part of a broader, sophisticated, and ongoing information warfare campaign designed to sow discord in American politics and society," the report said:

Russia's history of using social media as a lever for online influence operations predates the 2016 US presidential election and involves more than the IRA [Internet Research Agency]. The IRA's operational planning for the 2016 election goes back at least to 2014, when two IRA operatives were sent to the United States to gather intelligence in furtherance of the IRA's objectives.

Special Counsel Robert Mueller's probe into the matter has resulted in charges against eight Americans, 13 Russian nationals, 12 Russian intelligence officers, and three Russian companies. As we head into 2020, some of those cases have resulted in plea deals or guilty findings, and others are still in progress.

Facebook itself faced deep and probing questions related to how it handled user data during the campaign, particularly pertaining to the Cambridge Analytica scandal. Ultimately, Facebook reached a $5 billion settlement with the Federal Trade Commission over violations of user privacy agreements.

The revelations had fallout inside Facebook, too. The company's chief information security officer at the time, Alex Stamos, reportedly clashed with the rest of the C-suite on how to handle Russian misinformation campaigns and election security. Stamos ultimately left the company in August 2018.

2019: The Feds take notice

Everything Facebook allegedly did do in the past decade but shouldn't have, or didn't do but should have, basically hit the regulatory fan all at once in 2019. Facebook spent so much time in the spotlight this year that it's easiest to relate in a timeline:

March 8: Sen. Elizabeth Warren (D-Mass.) announces a proposal to break up Amazon, Facebook, and Google as one of the policy planks of her 2020 presidential run.

May 9: Facebook co-founder Chris Hughes pens a lengthy op-ed in The New York Times making the case for breaking up Facebook sooner rather than later.

June 3: The House Antitrust Subcommittee announces a bipartisan investigation into competition and "abusive conduct" in the tech sector.

June 3: Reuters, The Wall Street Journal, and other media outlets report that the FTC and DOJ have settled on a divide-and-conquer approach to antitrust probes, with the DOJ set to take on Apple and Google, and the FTC investigating Amazon and Facebook.

July 24: The Department of Justice publicly confirms it has launched an antitrust probe into "market-leading online platforms." The DOJ does not name names, but the list of potential targets is widely understood to include Apple, Amazon, Facebook, and Google.

July 24: The FTC announces its $5 billion settlement over user privacy violations.

July 25: Facebook confirms it is under investigation by the FTC.

September 6: A coalition of attorneys general for nine states and territories announce a joint antitrust probe into Facebook.

September 13: The House Antitrust Subcommittee sends an absolutely massive request for information to Apple, Amazon, Facebook, and Google, requesting 10 years' worth of detailed records relating to competition, acquisitions, and other matters relevant to the investigation.

September 25: Media reports indicate the DOJ is also probing Facebook.

October 22: An additional 38 attorneys general sign on to the states' probe of Facebook, bringing the total to 47.

October 22: Zuckerberg testifies before the House Financial Services Committee about Facebook. The hearing goes extremely poorly for him.

2020: Too big to succeed?

We wrap up this decade and head into the next with Facebook in the crosshairs not only of virtually every US regulator, but also of regulators in Europe, Australia, and other jurisdictions worldwide. Meanwhile, there's another presidential election bearing down on us, with the Iowa Democratic Caucus kicking off primary season a little more than a month from now.

Facebook is clearly trying to correct some of its mistakes. The company has promised to fight election disinformation, as well as disinformation around the 2020 census. But it's a distinctly uphill battle.

The company's sheer scale means targeting any problematic content, including disinformation, is extremely challenging. And yet, even though the company has three different platforms that each boasts more than 1 billion daily users, inside Facebook, the appetite for growth apparently remains insatiable.

"[F]or Facebook, the very word 'impact' is often defined by internal growth rather than external consequences," current and former Facebook employees recently told BuzzFeed News.

Performance evaluation and compensation changes (i.e., raises) are still tied to growth metrics, sources told BuzzFeed—so it's no wonder that the company still seems more focused on a narrow set of numbers rather than on the massive impact its services can have worldwide. The company recently "tweaked" its bonus system to include "social progress" as a metric—but it's anyone's guess whether internal forces or external regulators will be the first to make changes at Facebook stick.

---30---