Low-cost gel film can pluck drinking water from desert air

More than a third of the world's population lives in drylands, areas that experience significant water shortages. Scientists and engineers at The University of Texas at Austin have developed a solution that could help people in these areas access clean drinking water.

The team developed a low-cost gel film made of abundant materials that can pull water from the air in even the driest climates. The materials that facilitate this reaction cost a mere $2 per kilogram, and a single kilogram can produce more than 6 liters of water per day in areas with less than 15% relative humidity and 13 liters in areas with up to 30% relative humidity.
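To put those figures in household terms, here is a rough back-of-the-envelope sketch in Python. It uses only the cost and yield numbers quoted above. The 12-liter daily demand is a hypothetical value chosen for illustration, and the calculation assumes that output scales linearly with the mass of gel, which the article does not state. It covers raw material cost only, not the device, freeze-drying, or the heat used to release the water.

```python
# Figures quoted in the article, per kilogram of gel material.
COST_PER_KG_USD = 2.0
YIELD_L_PER_KG_DAY = {
    "below 15% relative humidity": 6.0,   # "more than 6 liters", used as a lower bound
    "up to 30% relative humidity": 13.0,
}


def material_needed_kg(daily_demand_l: float, yield_l_per_kg_day: float) -> float:
    """Mass of gel needed to meet a daily demand, assuming linear scaling."""
    return daily_demand_l / yield_l_per_kg_day


def material_cost_usd(mass_kg: float) -> float:
    """Raw material cost only; excludes device and processing costs."""
    return mass_kg * COST_PER_KG_USD


if __name__ == "__main__":
    # Hypothetical demand (an assumption, not from the article).
    daily_demand_l = 12.0
    for condition, yield_per_kg in YIELD_L_PER_KG_DAY.items():
        mass = material_needed_kg(daily_demand_l, yield_per_kg)
        cost = material_cost_usd(mass)
        print(f"{condition}: {mass:.2f} kg of gel, about ${cost:.2f} in materials")
```

Under these assumptions, the drier scenario needs roughly twice as much material as the more humid one for the same daily output.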

The research builds on previous breakthroughs from the team, including the ability to pull water out of the atmosphere and the application of that technology to create self-watering soil. However, these technologies were designed for relatively high-humidity environments.

"This new work is about practical solutions that people can use to get water in the hottest, driest places on Earth," said Guihua Yu, professor of materials science and mechanical engineering in the Cockrell School of Engineering's Walker Department of Mechanical Engineering. "This could allow millions of people without consistent access to drinking water to have simple, water generating devices at home that they can easily operate."

The new paper appears in Nature Communications.

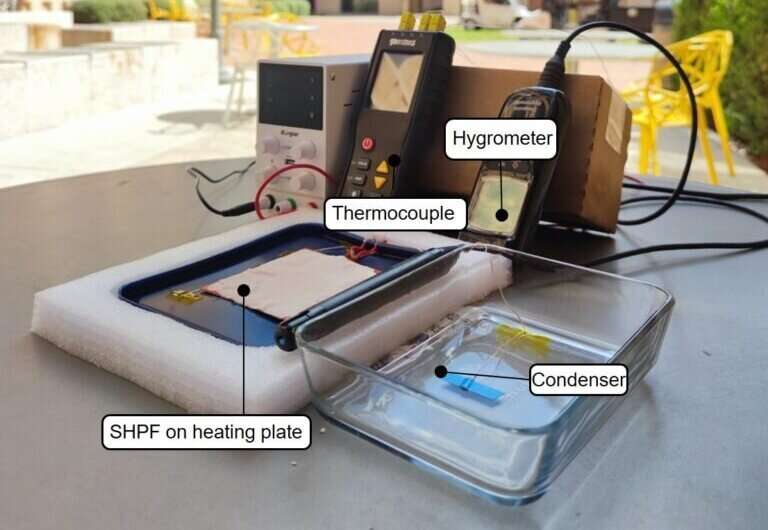

The researchers used renewable cellulose and a common kitchen ingredient, konjac gum, as the main hydrophilic (attracted to water) skeleton. The open-pore structure of the gum speeds the moisture-capturing process. Another designed component, thermo-responsive cellulose that becomes hydrophobic (resistant to water) when heated, helps release the collected water immediately, so that the overall energy input needed to produce water is minimized.

Other attempts at pulling water from desert air are typically energy-intensive and do not produce much water. And although 6 liters does not sound like much, the researchers say that creating thicker films, absorbent beds, or optimized arrays could drastically increase the amount of water they yield.

The reaction itself is a simple one, the researchers said, which reduces the challenges of scaling it up and achieving mass usage.

"This is not something you need an advanced degree to use," said Youhong "Nancy" Guo, the lead author on the paper and a former doctoral student in Yu's lab, now a postdoctoral researcher at the Massachusetts Institute of Technology. "It's straightforward enough that anyone can make it at home if they have the materials."

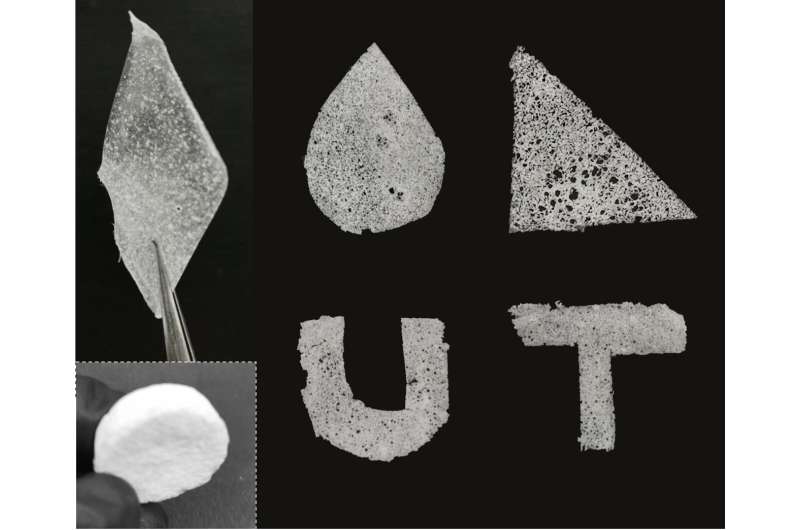

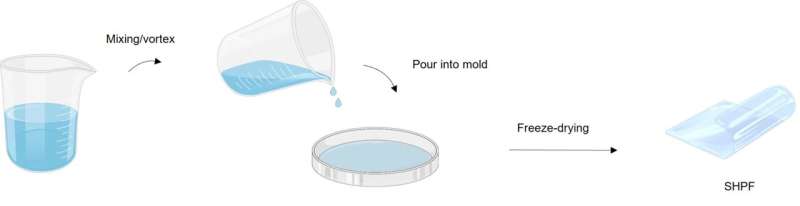

The film is flexible and can be molded into a variety of shapes and sizes, depending on the needs of the user. Making the film requires only the gel precursor, which contains all the relevant ingredients and is poured into a mold.

"The gel takes 2 minutes to set simply. Then, it just needs to be freeze-dried, and it can be peeled off the mold and used immediately after that," said Weixin Guan, a doctoral student on Yu's team and a lead researcher of the work. The researchers envision this as something that people could someday buy at a hardware store and use in their homes because of the simplicity.

More information: Youhong Guo et al, Scalable super hygroscopic polymer films for sustainable moisture harvesting in arid environments, Nature Communications (2022). DOI: 10.1038/s41467-022-30505-2

Journal information: Nature Communications

Provided by University of Texas at Austin