“AI toys are not safe for kids,” said a spokesperson for the children’s advocacy group Fairplay. “They disrupt children’s relationships, invade family privacy, displace key learning activities, and more.”

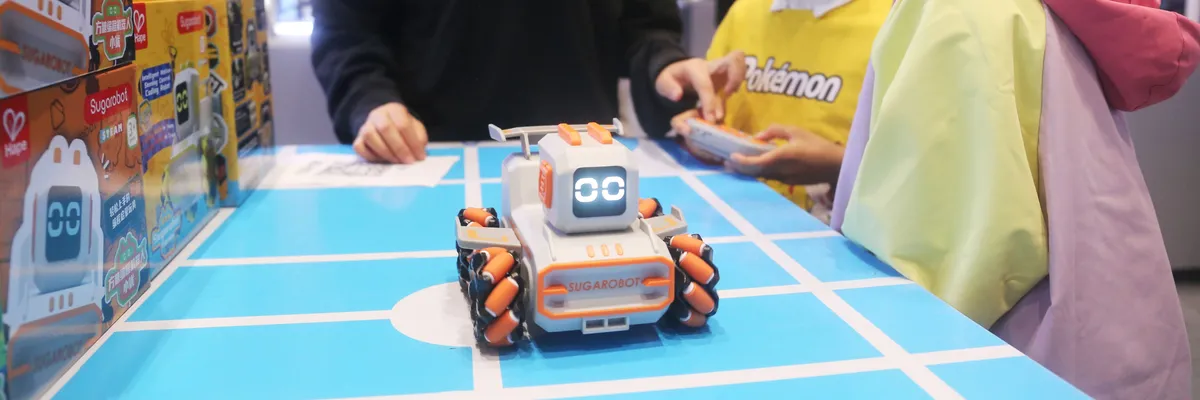

A robot toy attracts children at the Global AI Player Carnival & West Bund International Tech Consumer Carnival in Shanghai, China, on October 27, 2025.

(Photo by CFOTO/Future Publishing via Getty Images)

Stephen Prager

Dec 16, 2025

COMMON DREAMS

As scrutiny of the dangers of artificial intelligence technology increases, Mattel is delaying the release of a toy collaboration it had planned with OpenAI for the holiday season, and children’s advocates hope the company will scrap the project for good.

The $6 billion company behind Barbie and Hot Wheels announced a partnership with OpenAI in June, promising, with little detail, to collaborate on “AI-powered products and experiences” that would hit US shelves later in the year. The announcement was met with fear about potential dangers to developing minds.

At the time, Robert Weissman, the president of the consumer advocacy group Public Citizen, warned: “Endowing toys with human-seeming voices that are able to engage in human-like conversations risks inflicting real damage on children. It may undermine social development, interfere with children’s ability to form peer relationships, pull children away from playtime with peers, and possibly inflict long-term harm.”

In November, dozens of child development experts and organizations signed an advisory from the group Fairplay warning parents not to buy the plushies, dolls, action figures, and robots that were coming embedded with “the very same AI systems that have produced unsafe, confusing, or harmful experiences for older kids and teens, including urging them to self harm or take their own lives.”

In addition to fears about stunted emotional development, they said the toys also posed security risks: “Using audio, video, and even facial or gesture recognition, AI toys record and analyze sensitive family information even when they appear to be off... Companies can then use or sell this data to make the toys more addictive, push paid upgrades, or fuel targeted advertising directed at children.”

The warnings have proved prescient in the months after Mattel’s partnership was announced. As Victor Tangermann wrote for Futurism:

> Toy makers have unleashed a flood of AI toys that have already been caught telling tykes how to find knives, light fires with matches, and giving crash courses in sexual fetishes.
>
> Most recently, tests found that an AI toy from China is regaling children with Chinese Communist Party talking points, telling them that “Taiwan is an inalienable part of China” and defending the honor of the country’s president Xi Jinping.

As these horror stories rolled in, Mattel went silent for months on the future of its collaboration with Sam Altman’s AI juggernaut. That is, until Monday, when it told Axios that the still-ill-defined product’s rollout had been delayed.

A spokesperson for OpenAI confirmed, “We don’t have anything planned for the holiday season,” and added that when a product finally comes out, it will be aimed at older teenagers rather than young children.

Rachel Franz, director of Fairplay’s Young Children Thrive Offline program, praised Mattel’s decision to delay the release: “Given the threat that AI poses to children’s development, not to mention their safety and privacy, such caution is more than warranted,” she said.

But she added that merely putting the rollout of AI toys on pause was not enough.

“We urge Mattel to make this delay permanent. AI toys are not safe for kids. They disrupt children’s relationships, invade family privacy, displace key learning activities, and more,” Franz said. “Mattel has an opportunity to be a real leader here—not in the race to the bottom to hook kids on AI—but in putting children’s needs first and scrapping its plans for AI toys altogether.”
