Research suggests benefits of conservation efforts may not yet be fully visible

The time it takes for species to respond to conservation measures, known as an 'ecological time lag', could be partly masking any real progress that is being made, experts have warned.

Global conservation targets to reverse declines in biodiversity and halt species extinctions are not being met, despite decades of conservation action.

Last year, a UN report on global biodiversity warned one million species are at risk of extinction within decades, putting the world's natural life-support systems in jeopardy.

The report also revealed we were on track to miss almost all the 2020 nature targets that had been agreed a decade earlier by the global Convention on Biological Diversity.

But work published today in the journal Nature Ecology & Evolution offers new hope that, in some cases, conservation measures may not necessarily be failing; it is just too early to see the progress that is being made.

Led by Forest Research together with the University of Stirling, Natural England, and Newcastle University, the study highlights the need for 'smarter' biodiversity targets that account for ecological time lags. Such targets would help distinguish between cases where conservation interventions are on track to succeed but need more time for the benefits to be realised, and cases where current conservation actions are simply insufficient or inappropriate.

Lead researcher Dr. Kevin Watts of Forest Research said:

"We don't have time to wait and see which conservation measures are working and which ones will fail. But the picture is complicated and we fear that some conservation actions that will ultimately be successful may be negatively reviewed, reduced or even abandoned simply due to the unappreciated delay between actions and species' response.

"We hope the inclusion of time lags within biodiversity targets, including the use of well-informed interim indicators or milestones, will greatly improve the way that we evaluate progress towards conservation success.

"Previous conservation efforts have greatly reduced the rate of decline for many species and protected many from extinction and we must learn from past successes and remain optimistic: conservation can and does work, but at the same time, we mustn't be complacent. This work also emphasises the need to acknowledge and account for the fact that biodiversity may still be responding negatively to previous habitat loss and degradation."

'Rebalancing the system'

Ecological time lags relate to the rebalancing of a system following a change, such as the loss of habitat or the creation of new habitat.

Dr. Watts added: "The system is analogous to a financial economy: we are paying back the extinction 'debt' from past destruction of habitats and now waiting for the 'credit' to accrue from conservation actions. What we're trying to avoid now is going bankrupt by intervening too late and allowing the ecosystem to fail."
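The 'debt' and 'credit' idea can be illustrated with a toy calculation. The sketch below is not the model used in the study: it assumes, purely for illustration, that the number of species in a landscape moves a fixed fraction of the way towards a new equilibrium each year. The function name, the species counts, and the 10-year lag are all hypothetical.

```python
def simulate_richness(initial, equilibria, lag_years):
    """Toy time-lag model (an assumption, not the study's method).

    Each year, species richness closes 1/lag_years of the gap between
    its current value and that year's equilibrium value.
    """
    richness = [float(initial)]
    for target in equilibria:
        current = richness[-1]
        richness.append(current + (target - current) / lag_years)
    return richness


# Hypothetical scenario: habitat loss lowers the equilibrium from 100 to 60
# species for 20 years, then restoration raises it to 90 for 20 years.
equilibria = [60] * 20 + [90] * 20
trajectory = simulate_richness(100, equilibria, lag_years=10)

# Richness when restoration begins: still above 60, so some extinction
# 'debt' from the earlier habitat loss had not yet been paid.
at_restoration = trajectory[20]

# Richness 20 years after restoration: still below 90, so some of the
# 'credit' from conservation has yet to accrue.
after_restoration = trajectory[-1]
```

In this toy scenario, a survey taken shortly after restoration would show only a small gain even though the intervention is on course, which is the kind of delayed response the researchers describe.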

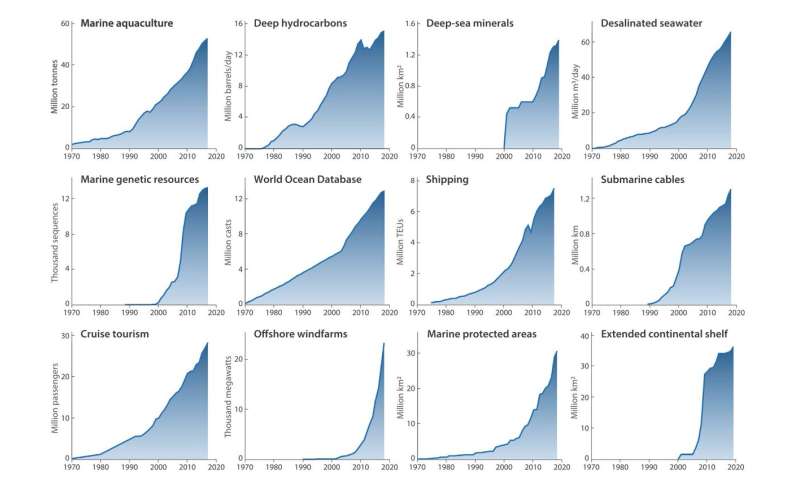

Using theoretical modelling, along with data from a 'woodland bird' biodiversity indicator, the research team explored how species with different characteristics (e.g. habitat generalists vs specialists) might respond over time to landscape change caused by conservation actions.

The authors suggest the use of milestones that mark the path towards conservation targets. For instance, the ultimate success of habitat restoration policies could be assessed firstly against the amount of habitat created, followed by the arrival of generalist species. Then, later colonisation by specialists would indicate increased habitat quality. If a milestone is missed at any point, the cause should be investigated and additional conservation interventions considered.
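The milestone sequence described above can be sketched as a simple ordered check. This is only an illustration under stated assumptions, not a tool from the study: the `Milestone` class, the `first_missed` function, and the deadlines and observations are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Milestone:
    name: str
    due_year: int                # hypothetical deadline, in years after the intervention
    reached_year: Optional[int]  # year the milestone was observed, or None if not yet seen


def first_missed(milestones: List[Milestone], current_year: int) -> Optional[Milestone]:
    """Return the earliest milestone that is overdue and unobserved, else None."""
    for milestone in milestones:
        if milestone.reached_year is None and current_year > milestone.due_year:
            return milestone
    return None


# Hypothetical habitat restoration plan, ordered as in the article:
# habitat first, then generalists, then specialists.
plan = [
    Milestone("habitat created", due_year=5, reached_year=4),
    Milestone("generalist species arrive", due_year=15, reached_year=None),
    Milestone("specialist species colonise", due_year=40, reached_year=None),
]

on_track = first_missed(plan, current_year=10)    # nothing is overdue yet
needs_review = first_missed(plan, current_year=20)  # generalists are overdue
```

A missed milestone here would be a prompt to investigate the cause and consider further intervention, rather than proof that the policy has failed.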

Philip McGowan, Professor of Conservation Science and Policy at Newcastle University and Chair of the IUCN Species Survival Commission Post-2020 Biodiversity Targets Task Force, said we need to "hold our nerve."

"Ultimately, ten years is too short a time for most species to recover.

"There are many cases where there is strong evidence to suggest the conservation actions that have been put in place are appropriate and robust—we just need to give nature more time.

"Of course, time isn't something we have. We are moving faster and faster towards a point where the critical support systems in nature are going to fail."

Almost 200 of the world's governments and the EU signed the Convention on Biological Diversity, a 10-year plan, launched in 2010, to protect some of the world's most threatened species.

"A new plan is being negotiated during 2020 and it is critical that negotiators understand the time that it takes to reverse species declines and the steps necessary to achieve recovery of species on the scale that we need," says Professor McGowan.

"But there is hope."

Simon Duffield, Natural England, adds:

"We know that natural systems take time to respond to change, whether it be positive, such as habitat creation, or negative, such as habitat loss, degradation or, increasingly, climate change. How these time lags are incorporated into conservation targets has always been a challenge. We hope that this framework takes us some way towards being able to do so."

The research was conducted as part of the Woodland Creation and Ecological Networks (WrEN) project.

Professor Kirsty Park, co-lead for the WrEN project, said:

"This research is timely as there is an opportunity to incorporate time lags into the construction of the Convention on Biological Diversity Post-2020 Global Biodiversity Framework. We need to consider realistic timescales to observe changes in the status of species, and also take into account the sequence of policies and actions that will be necessary to deliver those changes."

More information: Ecological time lags and the journey towards conservation success, Nature Ecology & Evolution (2020). DOI: 10.1038/s41559-019-1087-8, https://nature.com/articles/s41559-019-1087-8

Journal information: Nature Ecology & Evolution