by Simon Weaving, The Conversation

Credit: Shutterstock

Images of the latest coronavirus have become instantly recognizable, often vibrantly colored and floating against an opaque background. In most representations, the shape of the virus is the same: a spherical particle with spikes, resembling an alien invader.

But there's little consensus about the color: images of the virus come in red, orange, blue, yellow, steely or soft green, white with red spikes, red with blue spikes and many colors in between.

In their depictions of the virus, designers, illustrators and communicators are making some highly creative and evocative decisions.

Color, light and fear

For some, the lack of consensus about the appearance of viruses confirms fears and increases anxiety. On March 8, 2020, the director-general of the World Health Organization (WHO) warned of the "infodemic" of misinformation about the coronavirus, urging communicators to use "facts not fear" to battle the flood of rumors and myths.

The confusion about the color of coronavirus starts with the failure to understand the nature of color in the sub-microscopic world.

Our perception of color is dependent on the presence of light. White light from the sun is a combination of all the wavelengths of visible light—from violet at one end of the spectrum to red at the other.

When white light hits an object, we see its color thanks to the light that is reflected by that object towards our eyes. Raspberries and rubies appear red because they absorb most light but reflect red wavelengths.

Provided by The Conversation This article is republished from The Conversation under a Creative Commons license. Read the original article.

An artist’s impression of the pandemic virus. Credit: Fusion Medical Animation/Unsplash, CC BY

But as objects become smaller, light is no longer an effective tool for seeing. Viruses are so small that, until the 1930s, one of their scientifically recognized properties was their invisibility. Looking for them with a microscope using light is like trying to find an ant in a football stadium at night using a large searchlight: the scale difference between object and tool is too great.

It wasn't until the development of the electron microscope in the 1930s that researchers could "see" a virus. By using electrons, whose wavelengths are far shorter than those of visible light, it became possible to identify the shapes, structures and textures of viruses. But as no light is involved in this form of seeing, there is no color. Images of viruses reveal a monochrome world of grey. Like electrons, atoms and quarks, viruses exist in a realm where color has no meaning.
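The scale mismatch can be put in rough numbers. The sketch below is an illustration only, not taken from the article: it assumes a virus particle about 100 nanometers across, green light at 550 nanometers, and an electron microscope running at 100 kilovolts, and it uses the standard relativistic de Broglie formula for the electron's wavelength.

```python
import math

# Physical constants (SI units)
H = 6.62607015e-34       # Planck constant, J*s
M_E = 9.1093837015e-31   # electron rest mass, kg
Q_E = 1.602176634e-19    # elementary charge, C
C = 299_792_458.0        # speed of light, m/s


def electron_wavelength_m(accel_volts: float) -> float:
    """Relativistic de Broglie wavelength (meters) of an electron
    accelerated from rest through accel_volts."""
    energy = Q_E * accel_volts  # kinetic energy in joules
    momentum = math.sqrt(2 * M_E * energy * (1 + energy / (2 * M_E * C**2)))
    return H / momentum


# Illustrative values, assumed for this sketch
VIRUS_DIAMETER_M = 100e-9   # assumed particle size, about 100 nm
GREEN_LIGHT_M = 550e-9      # middle of the visible spectrum
electron_m = electron_wavelength_m(100_000)  # assumed 100 kV instrument

print(f"green light / virus diameter: {GREEN_LIGHT_M / VIRUS_DIAMETER_M:.1f}")
print(f"virus diameter / electron wavelength: {VIRUS_DIAMETER_M / electron_m:,.0f}")
```

Under these assumptions the light wave is several times larger than the particle it is meant to reveal, while the electron's wavelength is thousands of times smaller than the particle, which is why one probe resolves the virus and the other does not.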

Vivid imagery

Grey images of unfamiliar blobs don't make for persuasive or emotive media content.

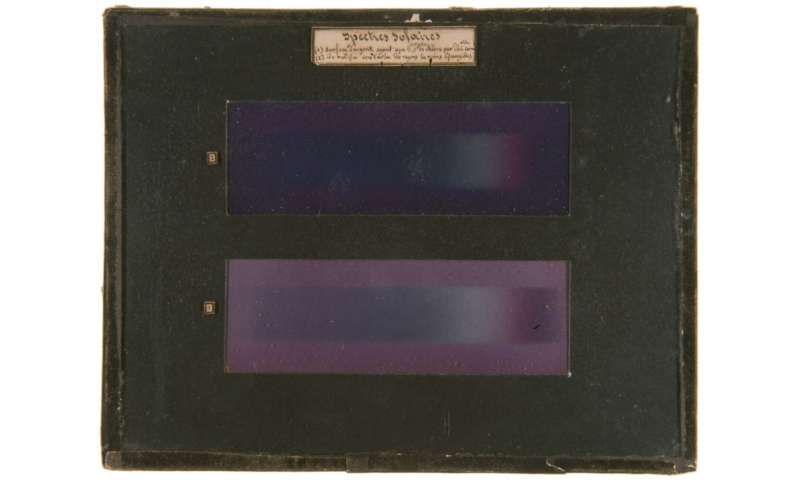

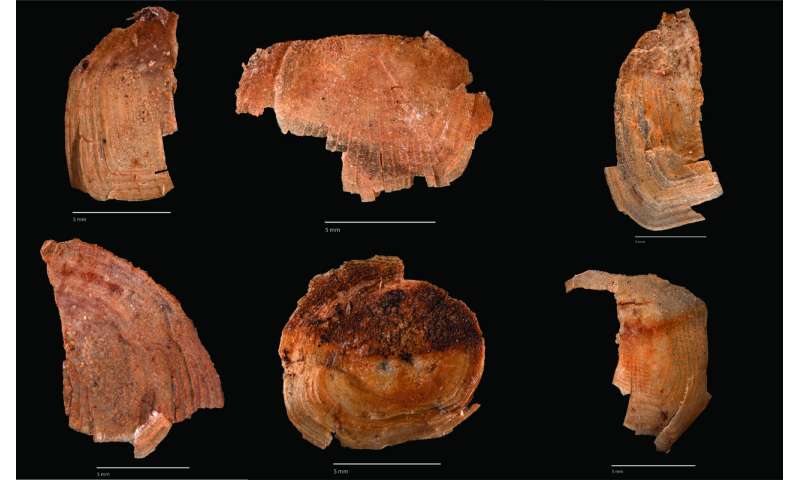

A colorized scanning electron micrograph image of a VERO E6 cell (blue) heavily infected with SARS-CoV-2 virus particles (orange), isolated from a patient sample. Credit: NIAID/Flickr, CC BY

Research into the representation of the Ebola virus outbreak in 1995 revealed the image of choice was not the worm-like virus but teams of Western medical experts working in African villages in hermetically sealed suits. The early visual representation of the AIDS virus focused on the emaciated bodies of those with the resulting disease, often younger men.

With symptoms similar to the common cold and initial death rates highest amongst the elderly, the coronavirus pandemic provides no such dramatic visual material. To fill this void, the vivid range of colorful images of the coronavirus has strong appeal.

Many images come from stock photo suppliers, typically photorealistic artists' impressions rather than images from electron microscopes.

The Public Health Image Library of the US government's Centers for Disease Control and Prevention (CDC) provides one such illustration, created to reveal the morphology of the coronavirus. It's an off-white sphere with yellow protein particles attached and red spikes emerging from the surface, creating the distinctive "corona" or crown. All of these color choices are creative decisions.

The CDC illustration reveals ‘ultrastructural morphology’ exhibited by coronaviruses. Credit: CDC/Alissa Eckert, MS; Dan Higgins/MAMS

Biologist David Goodsell takes artistic interpretation a step further, using watercolor painting to depict viruses at the cellular level.

One of the complicating challenges for virus visualisation is the emergence of so-called "color" images from electron microscopes. Using a methodology that was originally described as "painting," scientists are able to add color to structures in the grey-scale world of imaging to help distinguish the details of cellular micro-architecture. Yet even here, the choice of color is arbitrary, as shown in a number of colored images of the coronavirus made available on Flickr by the National Institute of Allergy and Infectious Diseases (NIAID). In these, the virus has been variously colored yellow, orange, magenta and blue.
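To make the arbitrariness concrete, here is a minimal sketch of false coloring, written for illustration rather than drawn from any scientist's actual workflow. It assumes a tiny greyscale "micrograph" stored as rows of brightness values from 0 to 255, and a hypothetical `colorize` helper that scales a chosen tint by each pixel's brightness. Real colorization typically assigns different colors to different structures; a single uniform tint is a simplified stand-in.

```python
def colorize(gray_image, tint):
    """Map each grey level (0-255) to an RGB triple by scaling the tint.

    gray_image: list of rows, each a list of integer grey levels.
    tint: (r, g, b) color that full brightness (255) should become.
    """
    return [
        [tuple(round(level / 255 * channel) for channel in tint) for level in row]
        for row in gray_image
    ]


# A made-up 2x3 greyscale image; the values are illustrative only.
gray = [
    [0, 128, 255],
    [64, 192, 32],
]

ORANGE = (255, 165, 0)  # arbitrary choice
BLUE = (0, 90, 255)     # equally arbitrary choice

orange_version = colorize(gray, ORANGE)
blue_version = colorize(gray, BLUE)

for name, image in (("orange", orange_version), ("blue", blue_version)):
    print(name, image)
```

Both outputs are derived from exactly the same measurements. Only the tint, picked by whoever prepares the image, differs.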

A composite of images created by NIAID. The colors were assigned by scientists, but the choices are arbitrary. Credit: NIAID/Flickr, CC BY

Embracing grey

Whilst these images look aesthetically striking, the arbitrary nature of their coloring does little to address the WHO's concerns about the insecurity that comes with unclear facts about viruses and disease.

Some artists’ impressions include blood platelet images. Credit: Shutterstock

One solution would be to embrace the colorless sub-microscopic world that viruses inhabit and accept their greyness.

This has some distinct advantages: firstly, it fits the science that color can't be attributed where light doesn't reach. Secondly, it renders images of the virus less threatening: without their red spikes or green bodies they seem less like hostile invaders from a science fiction fantasy. And the idea of greyness also fits the scientific notion that viruses are suspended somewhere between the dead and the living.

Stripping the coronavirus of the distracting vibrancy of vivid color, and seeing it consistently as an inert grey particle, could help reduce community fear and better allow us to continue the enormous collective task of managing its biological and social impact.
