Robotic Monitoring of the Deep-Sea Carbon Cycle and Climate Change

Thought Leaders: Paul McGill, Alana Sherman, and Crissy Huffard

In this interview, we speak to researchers from the Monterey Bay Aquarium Research Institute about the Benthic Rover II and how it helps to monitor the deep-sea carbon cycle and climate change.

Please introduce yourselves and tell us about your background in ocean monitoring.

We are Crissy Huffard, Senior Research Specialist (marine biologist); Alana Sherman, Electrical Engineering Group Lead (electrical engineer); and Paul McGill, Electrical Engineer.

The three of us work as part of a team to study deep-ocean carbon cycling at Station M, which sits 4,000 meters deep off the central California coast. Alana and Paul, along with Rich Henthorn and John Ferreira, are on the technological innovation side of the team, while Crissy works with Ken Smith to interpret data the instruments bring back.

What role does the deep seafloor play in carbon cycling and sequestration?

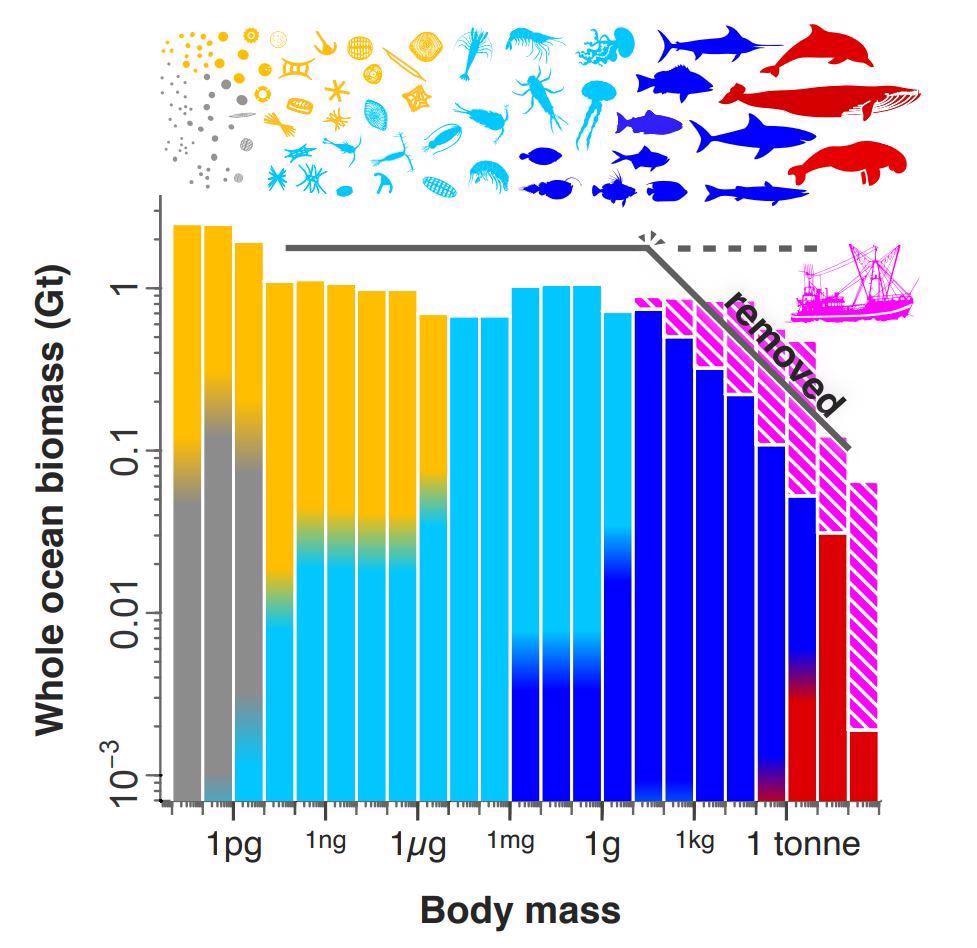

Globally, the deep ocean is a very important carbon sink. Deep-ocean carbon storage capacity ultimately influences how much carbon dioxide the ocean can take out of the atmosphere.

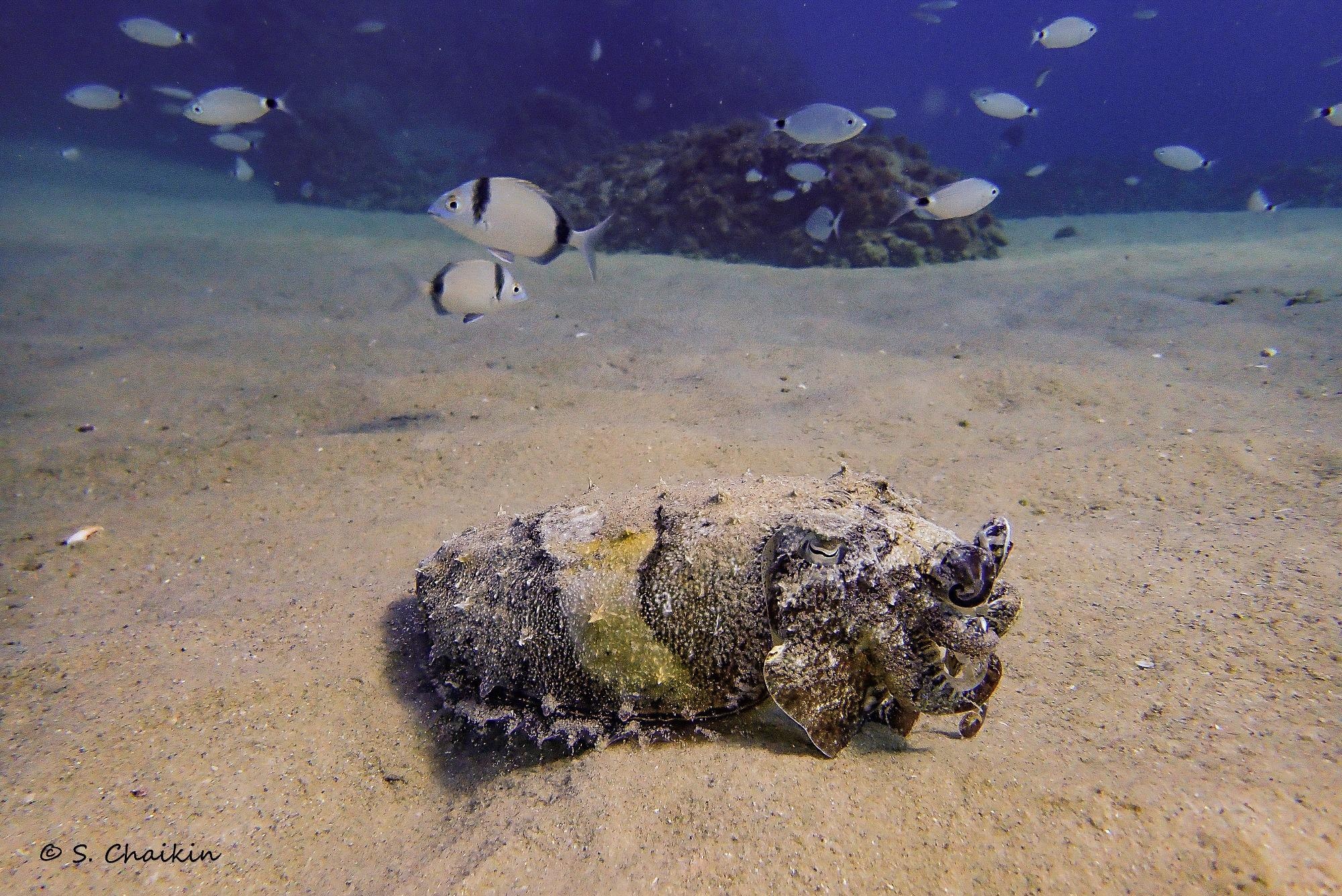

At Station M, seafloor communities (animals and microbes) consume much of the carbon that settles there as an “ingredient” of marine snow, the particles that drift down to the abyssal seafloor from the waters above. This leaves very little, if any, to be stored long term in the sediments.

Why is understanding the activities surrounding the deep seafloor so important?

This information ultimately helps us understand where and how much carbon gets stored in the ocean.

How was the Benthic Rover II created, and what were the challenges faced when developing it?

The Benthic Rover II followed a first version (Benthic Rover I), which Ken Smith developed at Scripps Institution of Oceanography in the 1990s and which was lost at sea.

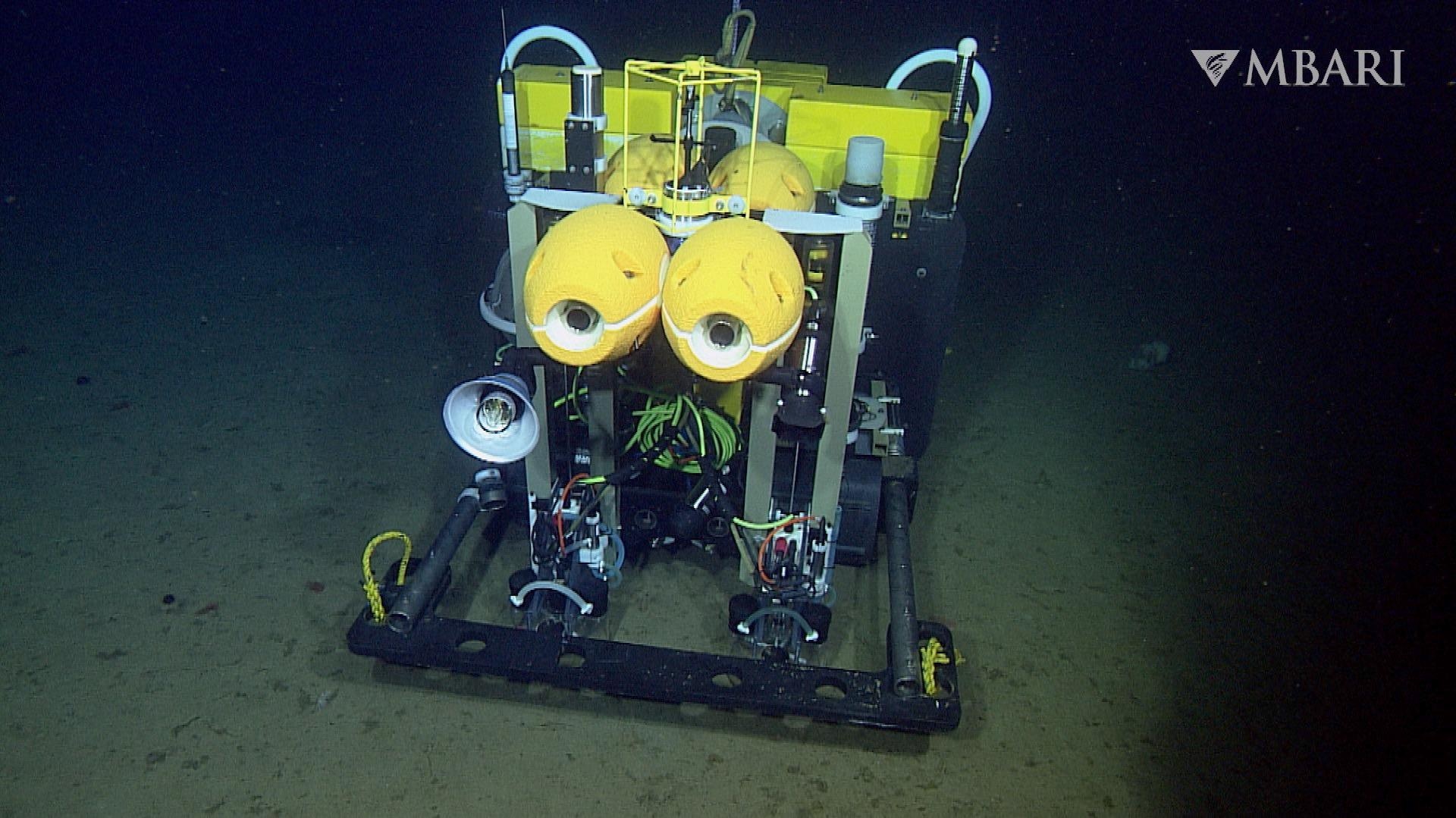

The Benthic Rover II was designed to meet its science objective of studying carbon cycling while surviving extreme pressure, near-freezing temperatures, corrosive seawater, and ship operations in high seas.

What steps had to be taken to release the rover into the ocean originally?

To handle the corrosive, high-pressure environment, the Benthic Rover II is made almost entirely of titanium and plastic, and its flotation is provided by rugged “syntactic foam” blocks (tiny, hollow glass spheres embedded in epoxy resin) arranged to maintain an upright orientation.

The bumper protects it from exactly what the name suggests: bumps that can occur during deployment and recovery in high seas.

What can the Benthic Rover II measure about the seafloor, and how?

The Benthic Rover II’s main science objective is to help us understand carbon consumption. It lowers two acrylic chambers (with stir bars and an oxygen sensor inside) down into the sediment and measures oxygen depletion for 48 hours.

With these data, we estimate the carbon consumption by animals and microbes living there. It also takes pictures of the seafloor and records information about ambient water conditions such as currents, oxygen concentration, and temperature.
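As a rough illustration of how a chamber's oxygen record can become a carbon-consumption estimate, the sketch below fits a straight line to oxygen concentration over the incubation, converts the slope into an oxygen flux per square meter of seafloor, and converts that flux to carbon using a respiratory quotient. This is a generic sealed-chamber calculation, not necessarily the processing MBARI uses. The function names, chamber volume, chamber footprint, respiratory quotient (0.85 here), and sample readings are all hypothetical placeholders rather than Benthic Rover II specifications.

```python
"""Minimal sketch: estimate carbon consumption from a sealed-chamber
oxygen incubation.

Assumptions (hypothetical, not Benthic Rover II specifications):
chamber volume, chamber footprint, respiratory quotient, and sample data.
"""

CARBON_MOLAR_MASS_G = 12.011  # grams of carbon per mole


def linear_slope(times_h, values):
    """Ordinary least-squares slope of values against time (hours)."""
    n = len(times_h)
    if n < 2:
        raise ValueError("need at least two readings")
    mean_t = sum(times_h) / n
    mean_v = sum(values) / n
    num = sum((t - mean_t) * (v - mean_v) for t, v in zip(times_h, values))
    den = sum((t - mean_t) ** 2 for t in times_h)
    return num / den


def carbon_consumption_mg_per_m2_day(times_h, o2_umol_per_l,
                                     chamber_volume_l, footprint_m2,
                                     respiratory_quotient=0.85):
    """Convert chamber oxygen drawdown into mg C per m^2 per day.

    respiratory_quotient (mol CO2 released per mol O2 consumed) is an
    assumed value, not one reported in the interview.
    """
    # Slope is negative while animals and microbes are using up oxygen.
    slope_umol_per_l_h = linear_slope(times_h, o2_umol_per_l)
    # Oxygen removed per hour from the whole chamber, spread over its footprint.
    o2_flux_umol_per_m2_h = -slope_umol_per_l_h * chamber_volume_l / footprint_m2
    # umol per hour -> mmol per day.
    o2_flux_mmol_per_m2_day = o2_flux_umol_per_m2_h * 24 / 1000
    carbon_mmol_per_m2_day = o2_flux_mmol_per_m2_day * respiratory_quotient
    # mmol multiplied by g/mol gives mg.
    return carbon_mmol_per_m2_day * CARBON_MOLAR_MASS_G


if __name__ == "__main__":
    # Hypothetical 48-hour incubation with one reading every 12 hours.
    hours = [0, 12, 24, 36, 48]
    oxygen = [150.0, 144.0, 138.0, 132.0, 126.0]  # µmol/L
    estimate = carbon_consumption_mg_per_m2_day(
        hours, oxygen, chamber_volume_l=10.0, footprint_m2=0.1)
    print(f"{estimate:.2f} mg C m^-2 d^-1")  # prints 12.25 with these made-up inputs
```

A straight-line fit is the simplest choice. Real records may curve, for example during an initial settling period after the chamber is lowered, so in practice only part of the 48-hour record might be fitted.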

How is the Benthic Rover II recovered every year, and how are its findings assessed?

The Benthic Rover II is called to the surface with an acoustic signal that tells it to drop its 250-pound ballast weight. Once on the surface, we find it either by sight or using its radio and satellite beacons. The crew of MBARI’s R/V Western Flyer then recover it onto the ship.

Data are downloaded and backed up on the ship, then taken to shore for analysis of engineering performance and scientific results.

What have the data collected from the Benthic Rover II over the last seven years shown?

The deep sea is dynamic! In the past ten years, we’ve seen a large increase in the amount of carbon making its way to the deep sea. Data from the Benthic Rover II are helping us understand how much, if any, of this carbon might be stored in the sediments.

When coupled with results from other autonomous instruments at Station M, we’re even able to study what types of marine snow might be especially efficient at storing carbon in sediments.

What do your results show you about the future of the ocean and its role in climate change?

Humans’ impact on the surface ocean is translating into changes in the deep sea. Problems like ocean acidification and deoxygenation aren’t limited to the surface ocean. We need to worry about them affecting the ocean’s full depth.

MBARI’s Benthic Rover II during a deployment at Station M, an MBARI research site off the coast of central California. Image Credit: © 2016 MBARI

What is the next stage for the Benthic Rover II?

We’re testing pH sensors to add to the system to help us better refine our estimates of carbon consumption.

Using the knowledge that the rover has given us, what do you think needs to be done to maintain the health of our oceans and planet?

Limit our total carbon emissions and educate the public on the important issue of ocean acidification.

Where can readers find more information?

- Benthic Rover II: https://www.mbari.org/benthic-rover-2/

- Deep-sea rover provides long-term data on carbon cycle and climate change: https://youtu.be/Nqe6tKIn628

- Original paper: Smith, K.L., A.D. Sherman, P.R. McGill, R.G. Henthorn, T.P. Connolly, and C.L. Huffard (2021). Abyssal Benthic Rover, an autonomous vehicle for long-term monitoring of deep-ocean processes. Science Robotics, 6: eabl4925. https://doi.org/10.1126/scirobotics.abl4925

- Station M: https://www.mbari.org/station-m-summary/

- Robots in the abyss: 30 years of research on the abyssal plain provides clues to climate change: https://youtu.be/lM8j0rQlAuo

About the Researchers

Crissy, Paul, and Alana work at MBARI in Moss Landing, California. MBARI (Monterey Bay Aquarium Research Institute) is a private non-profit oceanographic research center, founded by David Packard in 1987. The mission of MBARI is to advance marine science and technology to understand a changing ocean. Learn more at www.mbari.org.
