Technology helps self-driving cars learn from their own memories

An autonomous vehicle is able to navigate city streets and other less-busy environments by recognizing pedestrians, other vehicles and potential obstacles through artificial intelligence. This is achieved with the help of artificial neural networks, which are trained to "see" the car's surroundings, mimicking the human visual perception system.

But unlike humans, cars using artificial neural networks have no memory of the past and are in a constant state of seeing the world for the first time—no matter how many times they've driven down a particular road before. This is particularly problematic in adverse weather conditions, when the car cannot safely rely on its sensors.

Researchers at the Cornell Ann S. Bowers College of Computing and Information Science and the College of Engineering have produced three concurrent research papers with the goal of overcoming this limitation by providing the car with the ability to create "memories" of previous experiences and use them in future navigation.

Doctoral student Yurong You is lead author of "HINDSIGHT is 20/20: Leveraging Past Traversals to Aid 3D Perception," which You presented virtually in April at ICLR 2022, the International Conference on Learning Representations. "Learning representations" includes deep learning, a kind of machine learning.

"In reality, you rarely drive a route for the very first time," said co-author Katie Luo, a doctoral student in the research group. "Either you yourself or someone else has driven it before recently, so it seems only natural to collect that experience and utilize it."

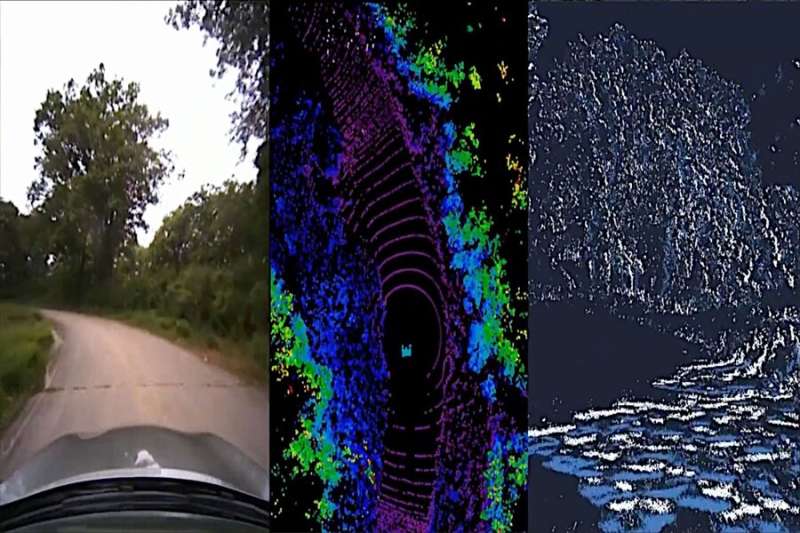

Spearheaded by doctoral student Carlos Diaz-Ruiz, the group compiled a dataset by driving a car equipped with LiDAR (Light Detection and Ranging) sensors repeatedly along a 15-kilometer loop in and around Ithaca, 40 times over an 18-month period. The traversals capture varying environments (highway, urban, campus), weather conditions (sunny, rainy, snowy) and times of day.

HINDSIGHT is an approach that uses neural networks to compute descriptors of objects as the car passes them. It then compresses these descriptions, which the group has dubbed SQuaSH (Spatial-Quantized Sparse History) features, and stores them on a virtual map, similar to a "memory" stored in a human brain.

The next time the self-driving car traverses the same location, it can query the local SQuaSH database of every LiDAR point along the route and "remember" what it learned last time. The database is continuously updated and shared across vehicles, thus enriching the information available to perform recognition.
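The store-and-query idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' implementation: in HINDSIGHT the descriptors are learned by a neural network, whereas here they are plain lists of numbers, "compression" is reduced to averaging all descriptors that fall into the same voxel, and the names `SparseHistoryMap`, `quantize` and the 0.5-meter voxel size are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def quantize(point: Point, voxel_size: float) -> Voxel:
    """Map a 3D point to the integer index of the voxel that contains it."""
    x, y, z = point
    return (
        math.floor(x / voxel_size),
        math.floor(y / voxel_size),
        math.floor(z / voxel_size),
    )


class SparseHistoryMap:
    """Hypothetical stand-in for a SQuaSH-style store.

    Keeps one averaged descriptor per occupied voxel; empty space costs nothing.
    """

    def __init__(self, feature_dim: int, voxel_size: float = 0.5) -> None:
        self.feature_dim = feature_dim
        self.voxel_size = voxel_size  # assumed value, not taken from the paper
        self._sums: Dict[Voxel, List[float]] = {}
        self._counts: Dict[Voxel, int] = {}

    def store(self, points: Sequence[Point],
              descriptors: Sequence[Sequence[float]]) -> None:
        """Accumulate descriptors from one traversal into the map."""
        for point, descriptor in zip(points, descriptors):
            if len(descriptor) != self.feature_dim:
                raise ValueError("descriptor has the wrong dimension")
            key = quantize(point, self.voxel_size)
            sums = self._sums.setdefault(key, [0.0] * self.feature_dim)
            for i, value in enumerate(descriptor):
                sums[i] += value
            self._counts[key] = self._counts.get(key, 0) + 1

    def query(self, points: Sequence[Point]) -> List[List[float]]:
        """Return the remembered descriptor for each point (zeros if unseen)."""
        features: List[List[float]] = []
        for point in points:
            key = quantize(point, self.voxel_size)
            if key in self._sums:
                n = self._counts[key]
                features.append([s / n for s in self._sums[key]])
            else:
                features.append([0.0] * self.feature_dim)
        return features
```

On a later drive, the vectors returned by `query` would be attached to the current LiDAR points as extra input features for the detector.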

"This information can be added as features to any LiDAR-based 3D object detector," You said. "Both the detector and the SQuaSH representation can be trained jointly without any additional supervision, or human annotation, which is time- and labor-intensive."

While HINDSIGHT still assumes that the artificial neural network is already trained to detect objects and augments it with the capability to create memories, MODEST (Mobile Object Detection with Ephemerality and Self-Training)—the subject of the third publication—goes even further.

Here, the authors let the car learn the entire perception pipeline from scratch. Initially the artificial neural network in the vehicle has never been exposed to any objects or streets at all. Through multiple traversals of the same route, it can learn what parts of the environment are stationary and which are moving objects. Slowly it teaches itself what constitutes other traffic participants and what is safe to ignore.

The algorithm can then detect these objects reliably—even on roads that were not part of the initial repeated traversals.
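A rough intuition for how repeated traversals separate stationary structure from moving objects: a spot occupied on every drive is probably a building or a tree, while a spot occupied only today is probably a car or a pedestrian. The Python sketch below illustrates that intuition only. It is a simplification, not the MODEST algorithm itself; the voxel-based persistence score, the function names and the threshold are assumptions made for this example.

```python
import math
from typing import List, Sequence, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def occupied_voxels(points: Sequence[Point], voxel_size: float) -> Set[Voxel]:
    """Return the set of voxel indices that contain at least one point."""
    return {
        (math.floor(x / voxel_size),
         math.floor(y / voxel_size),
         math.floor(z / voxel_size))
        for x, y, z in points
    }


def persistence_scores(current: Sequence[Point],
                       past_traversals: Sequence[Sequence[Point]],
                       voxel_size: float = 0.5) -> List[float]:
    """For each current point, the fraction of past drives that saw its voxel occupied."""
    if not past_traversals:
        raise ValueError("at least one past traversal is required")
    past_sets = [occupied_voxels(t, voxel_size) for t in past_traversals]
    scores: List[float] = []
    for point in current:
        (key,) = occupied_voxels([point], voxel_size)
        hits = sum(1 for voxels in past_sets if key in voxels)
        scores.append(hits / len(past_sets))
    return scores


def likely_mobile(current: Sequence[Point],
                  past_traversals: Sequence[Sequence[Point]],
                  threshold: float = 0.3,  # assumed cut-off for this sketch
                  voxel_size: float = 0.5) -> List[Point]:
    """Keep the points that were rarely present before: candidate moving objects."""
    scores = persistence_scores(current, past_traversals, voxel_size)
    return [p for p, s in zip(current, scores) if s < threshold]
```

Points flagged this way could serve as noisy starting labels, which a detector then refines by training on its own predictions.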

The researchers hope that both approaches could drastically reduce the development cost of autonomous vehicles (which currently still relies heavily on costly human-annotated data) and make such vehicles more efficient by learning to navigate the locations in which they are used the most.

Both Ithaca365, the paper describing the dataset, and MODEST will be presented at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2022), to be held June 19-24 in New Orleans.

Other contributors include Mark Campbell, the John A. Mellowes '60 Professor in Mechanical Engineering in the Sibley School of Mechanical and Aerospace Engineering; assistant professors Bharath Hariharan and Wen Sun, from computer science at Bowers CIS; former postdoctoral researcher Wei-Lun Chao, now an assistant professor of computer science and engineering at Ohio State; and doctoral students Cheng Perng Phoo, Xiangyu Chen and Junan Chen.

More information: Conference: cvpr2022.thecvf.com/

Provided by Cornell University

Researchers release open-source photorealistic simulator for autonomous driving

Hyper-realistic virtual worlds have been heralded as the best driving schools for autonomous vehicles (AVs), since they've proven fruitful test beds for safely trying out dangerous driving scenarios. Tesla, Waymo, and other self-driving companies all rely heavily on data to enable expensive and proprietary photorealistic simulators, since nuanced I-almost-crashed scenarios usually aren't easy or desirable to recreate for testing and data gathering.

To that end, scientists from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) created "VISTA 2.0," a data-driven simulation engine where vehicles can learn to drive in the real world and recover from near-crash scenarios. What's more, all of the code is being open-sourced to the public.

"Today, only companies have software like the type of simulation environments and capabilities of VISTA 2.0, and this software is proprietary. With this release, the research community will have access to a powerful new tool for accelerating the research and development of adaptive robust control for autonomous driving," says MIT Professor and CSAIL Director Daniela Rus, senior author on a paper about the research.

VISTA 2.0 builds on the team's previous model, VISTA, and is fundamentally different from existing AV simulators because it's data-driven: it was built and photorealistically rendered from real-world data, which enables direct transfer to reality. While the initial iteration supported only single-car lane following with one camera sensor, achieving high-fidelity data-driven simulation required rethinking the foundations of how different sensors and behavioral interactions can be synthesized.

Enter VISTA 2.0: a data-driven system that can simulate complex sensor types and massively interactive scenarios and intersections at scale. With much less data than previous models, the team was able to train autonomous vehicles that could be substantially more robust than those trained on large amounts of real-world data.

"This is a massive jump in capabilities of data-driven simulation for autonomous vehicles, as well as the increase of scale and ability to handle greater driving complexity," says Alexander Amini, CSAIL Ph.D. student and co-lead author on two new papers, together with fellow Ph.D. student Tsun-Hsuan Wang. "VISTA 2.0 demonstrates the ability to simulate sensor data far beyond 2D RGB cameras, but also extremely high dimensional 3D lidars with millions of points, irregularly timed event-based cameras, and even interactive and dynamic scenarios with other vehicles as well."

The team was able to scale the complexity of the interactive driving tasks for things like overtaking, following, and negotiating, including multiagent scenarios in highly photorealistic environments.

Training AI models for autonomous vehicles involves hard-to-secure fodder of different varieties of edge cases and strange, dangerous scenarios, because most of our data (thankfully) is just run-of-the-mill, day-to-day driving. Logically, we can't crash into other cars just to teach a neural network how not to crash into other cars.

Recently, there's been a shift away from more classic, human-designed simulation environments to those built up from real-world data. The latter have immense photorealism, but the former can easily model virtual cameras and lidars. With this paradigm shift, a key question has emerged: Can the richness and complexity of all of the sensors that autonomous vehicles need, such as lidar and event-based cameras that are more sparse, accurately be synthesized?

Lidar sensor data is much harder to interpret in a data-driven world—you're effectively trying to generate brand-new 3D point clouds with millions of points, only from sparse views of the world. To synthesize 3D lidar point clouds, the team used the data that the car collected, projected it into a 3D space coming from the lidar data, and then let a new virtual vehicle drive around locally from where that original vehicle was. Finally, they projected all of that sensory information back into the frame of view of this new virtual vehicle, with the help of neural networks.
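The geometric core of that re-projection, moving recorded points into the frame of a virtual vehicle that sits slightly away from where the real one drove, can be sketched as a rigid transform. The Python below is a minimal illustration under assumptions: the virtual pose is given as a planar offset (`dx`, `dy`) and heading change `dyaw` relative to the recording vehicle, the function name and the `max_range` cut-off are hypothetical, and the neural-network step the team used to fill in the resulting sparse view is not modeled.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def reproject_to_virtual_pose(points: Sequence[Point],
                              dx: float, dy: float, dyaw: float,
                              max_range: float = 100.0) -> List[Point]:
    """Express recorded lidar points in the frame of a nearby virtual vehicle.

    (dx, dy) is the virtual vehicle's position and dyaw its heading, both
    measured in the recording vehicle's frame (x forward, y left, radians).
    Points farther than max_range from the virtual sensor are dropped.
    """
    cos_y, sin_y = math.cos(dyaw), math.sin(dyaw)
    reprojected: List[Point] = []
    for x, y, z in points:
        # Translate so the virtual vehicle is at the origin...
        tx, ty = x - dx, y - dy
        # ...then rotate by -dyaw so its heading becomes the new x axis.
        vx = cos_y * tx + sin_y * ty
        vy = -sin_y * tx + cos_y * ty
        if math.sqrt(vx * vx + vy * vy + z * z) <= max_range:
            reprojected.append((vx, vy, z))
    return reprojected
```

For example, if the virtual vehicle is placed one meter ahead of the recording position, a point that was five meters ahead ends up four meters ahead in the new view.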

Together with the simulation of event-based cameras, which operate at speeds greater than thousands of events per second, the simulator was capable of not only simulating this multimodal information, but also doing so all in real time—making it possible to train neural nets offline, but also test online on the car in augmented reality setups for safe evaluations. "The question of if multisensor simulation at this scale of complexity and photorealism was possible in the realm of data-driven simulation was very much an open question," says Amini.

With that, the driving school becomes a party. In the simulation, you can move around, have different types of controllers, simulate different types of events, create interactive scenarios, and just drop in brand new vehicles that weren't even in the original data. They tested for lane following, lane turning, car following, and more dicey scenarios like static and dynamic overtaking (seeing obstacles and moving around so you don't collide). With multi-agent support, both real and simulated agents interact, and new agents can be dropped into the scene and controlled any which way.

Taking their full-scale car out into the "wild"—a.k.a. Devens, Massachusetts—the team saw immediate transferability of results, with both failures and successes. They were also able to demonstrate the bodacious, magic word of self-driving car models: "robust." They showed that AVs, trained entirely in VISTA 2.0, were so robust in the real world that they could handle that elusive tail of challenging failures.

Now, one guardrail humans rely on that can't yet be simulated is human emotion. It's the friendly wave, nod, or blinker switch of acknowledgement, which are the type of nuances the team wants to implement in future work.

"The central algorithm of this research is how we can take a dataset and build a completely synthetic world for learning and autonomy," says Amini. "It's a platform that I believe one day could extend in many different axes across robotics. Not just autonomous driving, but many areas that rely on vision and complex behaviors. We're excited to release VISTA 2.0 to help enable the community to collect their own datasets and convert them into virtual worlds where they can directly simulate their own virtual autonomous vehicles, drive around these virtual terrains, train autonomous vehicles in these worlds, and then can directly transfer them to full-sized, real self-driving cars."

VISTA 2.0: An Open, Data-driven Simulator for Multimodal Sensing and Policy Learning for Autonomous Vehicles, arXiv:2111.12083v1 [cs.RO]. arxiv.org/abs/2111.12083
