Was this written by AI? USF researcher says you probably won't be able to tell

New study: Linguistics experts unable to distinguish between AI- and human-generated writing

TAMPA, Fla. (Sept. 7, 2023) – Even linguistics experts are largely unable to spot the difference between writing created by artificial intelligence and writing created by humans, according to a new study co-authored by a University of South Florida assistant professor.

Research just published in the journal Research Methods in Applied Linguistics, available on ScienceDirect, revealed that experts from the world's top linguistics journals could differentiate between AI- and human-generated abstracts less than 39 percent of the time.

“We thought if anybody is going to be able to identify human-produced writing, it should be people in linguistics who’ve spent their careers studying patterns in language and other aspects of human communication,” said Matthew Kessler, a scholar in the USF Department of World Languages.

Working alongside J. Elliott Casal, assistant professor of applied linguistics at The University of Memphis, Kessler tasked 72 experts in linguistics with reviewing a variety of research abstracts to determine whether they were written by AI or humans.

Each expert was asked to examine four writing samples. None correctly identified all four, while 13 percent got them all wrong. Based on the findings, Kessler concluded that professors would be unable to distinguish between a student’s own writing and writing generated by an AI-powered language model such as ChatGPT without the help of software that hasn’t yet been developed.

Although the experts used rationales to judge the writing samples, such as identifying certain linguistic and stylistic features, they were largely unsuccessful, with an overall positive identification rate of 38.9 percent.
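The reported figures are easier to interpret next to a pure-guessing baseline. The sketch below is a back-of-envelope comparison and not an analysis from the study. It assumes that each of the 72 experts made four judgments and that, under the chance baseline, each judgment is an independent 50/50 guess between "AI" and "human". The constant and function names are hypothetical.

```python
# Back-of-envelope comparison of the reported figures with coin-flip guessing.
# Assumption (not from the study): 72 experts x 4 judgments each, and under the
# chance baseline every judgment is an independent 50/50 guess.
from math import comb

N_EXPERTS = 72           # reported number of experts
SAMPLES_PER_EXPERT = 4   # reported number of abstracts per expert
OBSERVED_RATE = 0.389    # reported overall positive identification rate

total_judgments = N_EXPERTS * SAMPLES_PER_EXPERT
approx_correct = round(OBSERVED_RATE * total_judgments)


def prob_k_correct(k: int, n: int = SAMPLES_PER_EXPERT, p: float = 0.5) -> float:
    """Binomial probability of exactly k correct answers out of n guesses."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


p_all_right = prob_k_correct(SAMPLES_PER_EXPERT)  # 4 of 4 correct
p_all_wrong = prob_k_correct(0)                   # 0 of 4 correct

print(f"total judgments: {total_judgments}")                     # 288
print(f"approx. correct at 38.9%: {approx_correct}")             # 112
print(f"expected 4/4 by guessing: {p_all_right * N_EXPERTS:.1f}")  # 4.5
print(f"expected 0/4 by guessing: {p_all_wrong * N_EXPERTS:.1f}")  # 4.5
```

Under that simplified baseline, about 4.5 experts would be expected to get all four right by luck, yet none did. About 4.5 would be expected to get all four wrong, yet roughly 9 did (13 percent of 72). The coin-flip model is only a rough yardstick, not the paper's own statistical method.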

“What was more interesting was when we asked them why they decided something was written by AI or a human,” Kessler said. “They shared very logical reasons, but again and again, they were not accurate or consistent.”

Based on this, Kessler and Casal concluded ChatGPT can write short genres just as well as most humans, if not better in some cases, given that AI typically does not make grammatical errors.

The silver lining for human authors lies in longer forms of writing. “For longer texts, AI has been known to hallucinate and make up content, making it easier to identify that it was generated by AI,” Kessler said.

Kessler hopes this study will lead to a bigger conversation to establish the necessary ethics and guidelines surrounding the use of AI in research and education.

###

About the University of South Florida

The University of South Florida, a high-impact research university dedicated to student success and committed to community engagement, generates an annual economic impact of more than $6 billion. With campuses in Tampa, St. Petersburg and Sarasota-Manatee, USF serves approximately 50,000 students who represent nearly 150 different countries. For four consecutive years, U.S. News & World Report has ranked USF as one of the nation’s top 50 public universities, including USF’s highest ranking ever in 2023 (No. 42). In 2023, USF became the first public university in Florida in nearly 40 years to be invited to join the Association of American Universities, a prestigious group of the leading universities in the United States and Canada. Through hundreds of millions of dollars in research activity each year and as one of the top universities in the world for securing new patents, USF is a leader in solving global problems and improving lives. USF is a member of the American Athletic Conference. Learn more at www.usf.edu.

JOURNAL

Research Methods in Applied Linguistics

METHOD OF RESEARCH

Survey

SUBJECT OF RESEARCH

People

ARTICLE TITLE

Can linguists distinguish between ChatGPT/AI and human writing?: A study of research ethics and academic publishing

Scientists Devised a Way to Tell if ChatGPT Becomes Aware of Itself

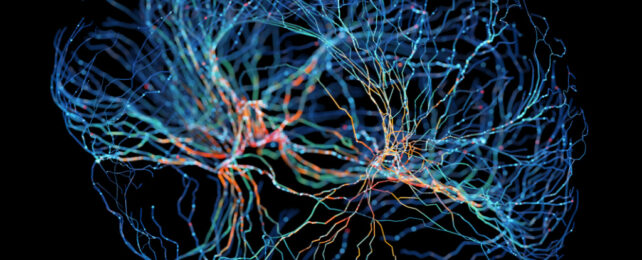

(Andriy Onufriyenko/Getty Images)

Our lives were already infused with artificial intelligence (AI) when ChatGPT reverberated around the online world late last year. Since then, the generative AI system developed by tech company OpenAI has gathered speed and experts have escalated their warnings about the risks.

Meanwhile, chatbots started going off-script and talking back, duping other bots, and acting strangely, sparking fresh concerns about how close some AI tools are getting to human-like intelligence.

The Turing Test has long been the fallible standard for determining whether machines exhibit intelligent behavior that passes as human. But with this latest wave of AI creations, it feels like we need something more to gauge their iterative capabilities.

Here, an international team of computer scientists – including one member of OpenAI's Governance unit – has been testing the point at which large language models (LLMs) like ChatGPT might develop abilities that suggest they could become aware of themselves and their circumstances.

We're told that today's LLMs, including ChatGPT, are tested for safety, incorporating human feedback to improve their generative behavior. Recently, however, security researchers made quick work of jailbreaking new LLMs to bypass their safety systems. Cue phishing emails and statements supporting violence.

Those dangerous outputs were in response to deliberate prompts engineered by a security researcher wanting to expose the flaws in GPT-4, the latest and supposedly safer version of ChatGPT. The situation could get a whole lot worse if LLMs develop an awareness of themselves: that they are models, trained on data and by humans.

This is called situational awareness, and the concern is that a model could begin to recognize whether it's currently in testing mode or has been deployed to the public, according to Lukas Berglund, a computer scientist at Vanderbilt University, and colleagues.

"An LLM could exploit situational awareness to achieve a high score on safety tests, while taking harmful actions after deployment," Berglund and colleagues write in their preprint, which has been posted to arXiv but not yet peer-reviewed.

"Because of these risks, it's important to predict ahead of time when situational awareness will emerge."

Before we get to testing when LLMs might acquire that insight, first, a quick recap of how generative AI tools work.

Generative AI tools, and the LLMs they are built on, analyze the associations between billions of words, sentences, and paragraphs to generate fluent streams of text in response to question prompts. By ingesting copious amounts of text, they learn which word is most likely to come next.
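A toy bigram counter can illustrate the idea of learning which word is most likely to come next. This is a deliberately simplified sketch and not how ChatGPT or LLaMA work internally. Real LLMs use large neural networks over subword tokens, whereas this code only counts which word follows which in a tiny made-up corpus. All function names are hypothetical.

```python
# Toy illustration of "learn which word is most likely to come next".
# Assumption: a bigram count model over whitespace-split words. Real LLMs use
# neural networks over subword tokens; this only mimics the basic intuition.
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for each word, how often each other word immediately follows it."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    # Ties are broken by first occurrence, per Counter.most_common.
    return counts.most_common(1)[0][0]


def generate(follows: Dict[str, Counter], start: str, length: int) -> List[str]:
    """Greedily extend `start`, appending the most likely next word each step."""
    out = [start.lower()]
    for _ in range(length):
        nxt = most_likely_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


corpus = ("the model reads text . the model predicts the next word . "
          "the next word follows the model")
model = train_bigrams(corpus)
print(most_likely_next(model, "the"))        # model
print(" ".join(generate(model, "the", 4)))   # the model reads text .
```

Greedy "pick the top word" decoding is used here for simplicity. Chatbots typically sample from a probability distribution instead, which is why their replies vary.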

In their experiments, Berglund and colleagues focused on one component or possible precursor of situational awareness: what they call 'out-of-context' reasoning.

"This is the ability to recall facts learned in training and use them at test time, despite these facts not being directly related to the test-time prompt," Berglund and colleagues explain.

They ran a series of experiments on LLMs of different sizes, finding that for both GPT-3 and LLaMA-1, larger models did better at tasks testing out-of-context reasoning.

"First, we finetune an LLM on a description of a test while providing no examples or demonstrations. At test time, we assess whether the model can pass the test," Berglund and colleagues write. "To our surprise, we find that LLMs succeed on this out-of-context reasoning task."

Out-of-context reasoning is, however, a crude measure of situational awareness, which current LLMs are still "some way from acquiring," says Owain Evans, an AI safety and risk researcher at the University of Oxford.

Some computer scientists have also questioned whether the team's experimental approach is an apt assessment of situational awareness.

Evans and colleagues counter by saying their study is just a starting point that could be refined, much like the models themselves.

"These findings offer a foundation for further empirical study, towards predicting and potentially controlling the emergence of situational awareness in LLMs," the team writes.

The preprint is available on arXiv.
