THAT HAS ALWAYS BEEN THE FEAR!

ANALYSIS

BY DAVID E. SANGER

NEW YORK TIMES

The military is trying to figure out what the new AI technologies are capable of when it comes to developing and controlling weapons, and it has no idea what kind of arms control regime, if any, might work.

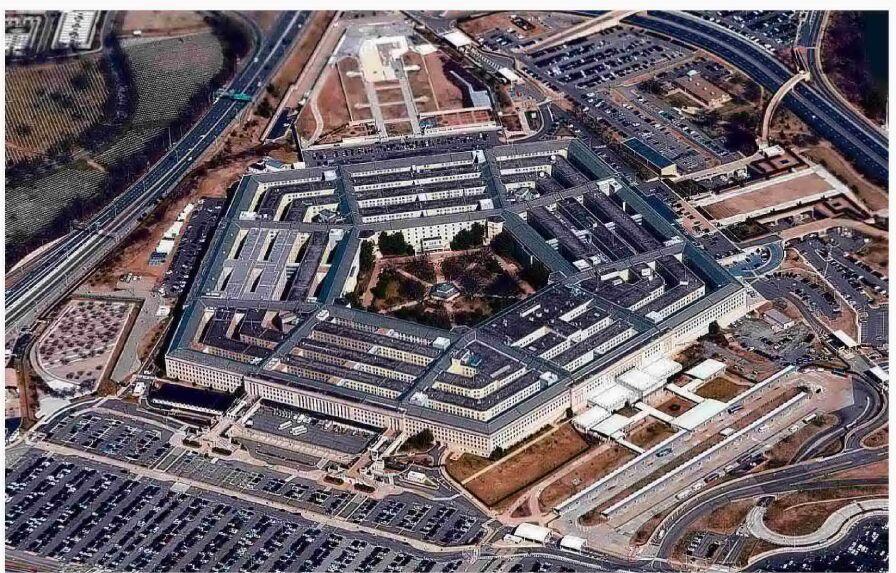

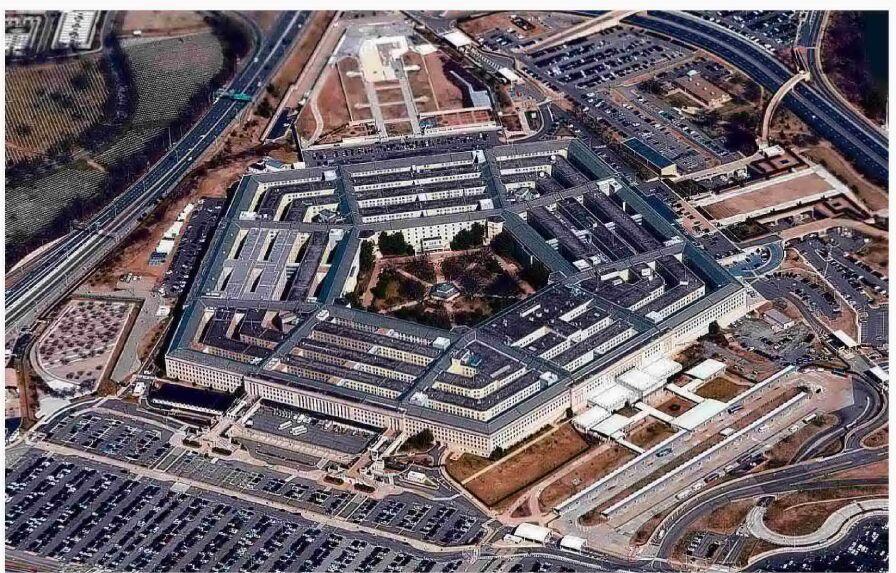

AP file photo

WASHINGTON – When President Biden announced sharp restrictions in October on selling the most advanced computer chips to China, he sold it, in part, as a way of giving American industry a chance to restore its competitiveness.

But at the Pentagon and the National Security Council, there was a second agenda: arms control. If the Chinese military cannot get the chips, the theory goes, it may slow its effort to develop weapons driven by artificial intelligence. That would give the White House, and the world, time to figure out some rules for the use of AI in everything from sensors to missiles and cyberweapons, and ultimately to guard against some of the nightmares conjured by Hollywood – autonomous killer robots and computers that lock out their human creators.

Now, the fog of fear surrounding the popular ChatGPT chatbot and other generative AI software has made limiting chip sales to China look like just a temporary fix. When Biden dropped by a White House meeting on Thursday with technology executives who are struggling to limit the risks of the technology, his first comment was "What you are doing has enormous potential and enormous danger."

It was a reflection, his national security aides say, of recent classified briefings about the potential for the new technology to upend war, cyberconflict and – in the most extreme case – decision-making on employing nuclear weapons.

But even as Biden was issuing his warning, Pentagon officials, speaking at technology forums, said they thought a proposed six-month pause in developing the next generations of ChatGPT and similar software was a bad idea: The Chinese won't wait, and neither will the Russians.

"If we stop, guess who's not going to stop: potential adversaries overseas," the Pentagon's chief information officer, John Sherman, said Wednesday. "We've got to keep moving."

His blunt statement underlined the tension felt throughout the defense community today. No one really knows what these new technologies are capable of when it comes to developing and controlling weapons, and no one has any idea what kind of arms control regime, if any, might work.

The foreboding is vague but deeply worrisome. Could ChatGPT empower bad actors who previously wouldn't have had easy access to destructive technology? Could it speed up confrontations between superpowers, leaving little time for diplomacy and negotiation?

"The industry isn't stupid here, and you are already seeing efforts to self-regulate," said Eric Schmidt, a former Google chair who served as the inaugural chair of the advisory Defense Innovation Board from 2016-20.

"So there's a series of informal conversations now taking place in the industry – all informal – about what would the rules of AI safety look like," said Schmidt, who has written, with former Secretary of State Henry Kissinger, a series of articles and books about the potential of AI to upend geopolitics.

The preliminary effort to put guardrails into the system is clear to anyone who has tested ChatGPT's initial iterations. The bots will not answer questions about how to harm someone with a brew of drugs, for example, or how to blow up a dam or cripple nuclear centrifuges, all operations in which the United States and other nations have engaged without the benefit of AI tools.

But those blacklists of actions will only slow misuse of these systems; few think they can completely stop such efforts. There is always a hack to get around safety limits, as anyone who has tried to turn off the urgent beeps on an automobile's seat-belt warning system can attest.

Although the new software has popularized the issue, it is hardly a new one for the Pentagon. The first rules on developing autonomous weapons were published a decade ago. The Pentagon's Joint Artificial Intelligence Center was established five years ago to explore the use of AI in combat.

Some weapons already operate on autopilot. Patriot missiles, which shoot down missiles or planes entering a protected airspace, have long had an "automatic" mode. It enables them to fire without human intervention when they are overwhelmed by incoming targets arriving faster than a human could react. But they are supposed to be supervised by humans who can abort attacks if necessary.

In the military, AI-infused systems can speed up the tempo of battlefield decisions to such a degree that they create entirely new risks of accidental strikes, or decisions made on misleading or deliberately false alerts of incoming attacks.

"A core problem with AI in the military and in national security is how do you defend against attacks that are faster than human decision-making, and I think that issue is unresolved," Schmidt said. "In other words, the missile is coming in so fast that there has to be an automatic response. What happens if it's a false signal?"

Tom Burt, who leads trust-and-safety operations at Microsoft, which is speeding ahead with using the new technology to revamp its search engines, said at a recent forum at George Washington University that he thought AI systems would help defenders detect anomalous behavior faster than they would help attackers. Other experts disagree. But he said he feared it could "supercharge" the spread of targeted disinformation.

All of this portends a whole new era of arms control.

Some experts say that since it would be impossible to stop the spread of ChatGPT and similar software, the best hope is to limit the specialty chips and other computing power needed to advance the technology. That will doubtless be one of many different arms-control plans put forward in the next few years, at a time when the major nuclear powers, at least, seem uninterested in negotiating over old weapons, much less new ones.
